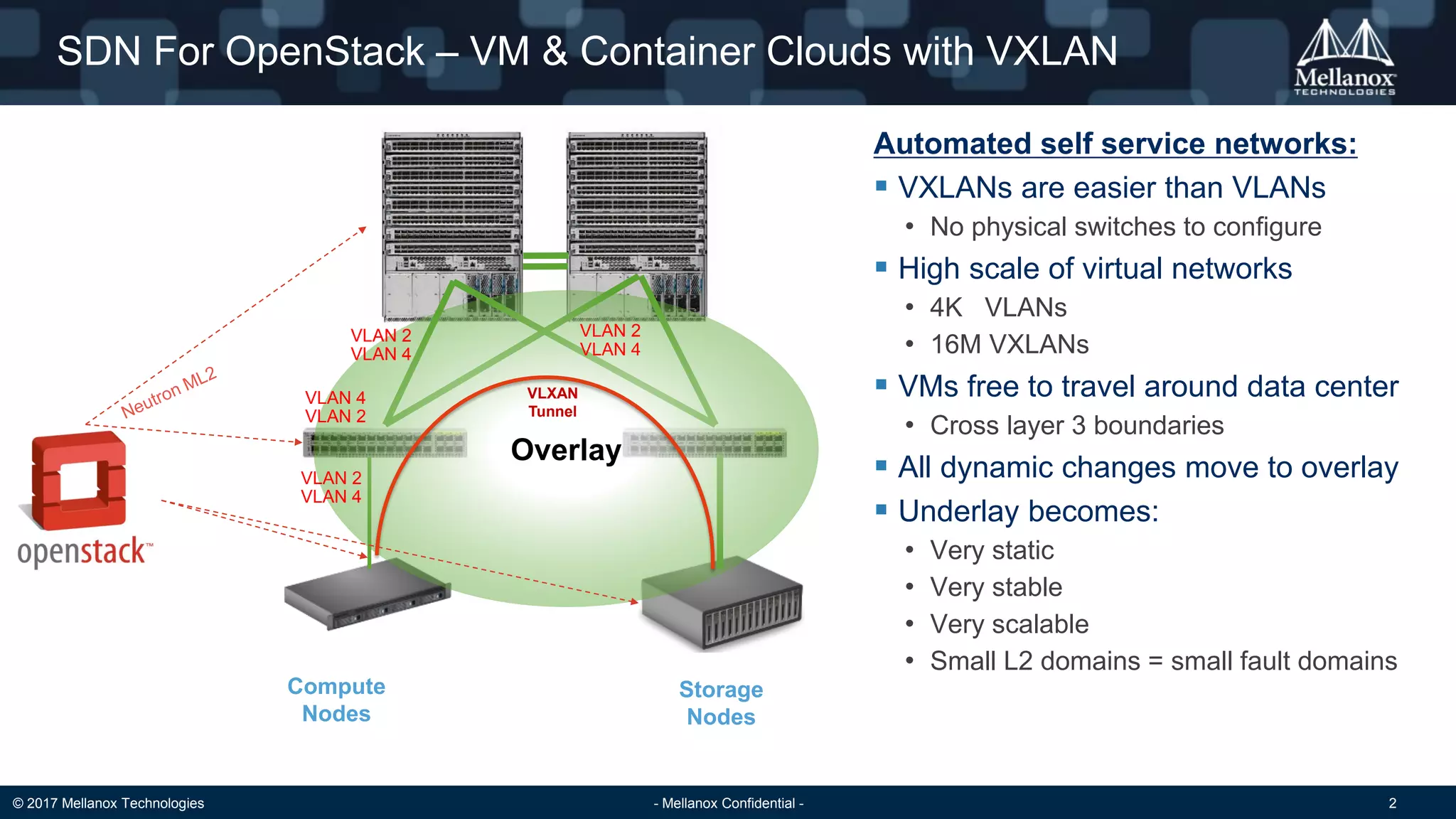

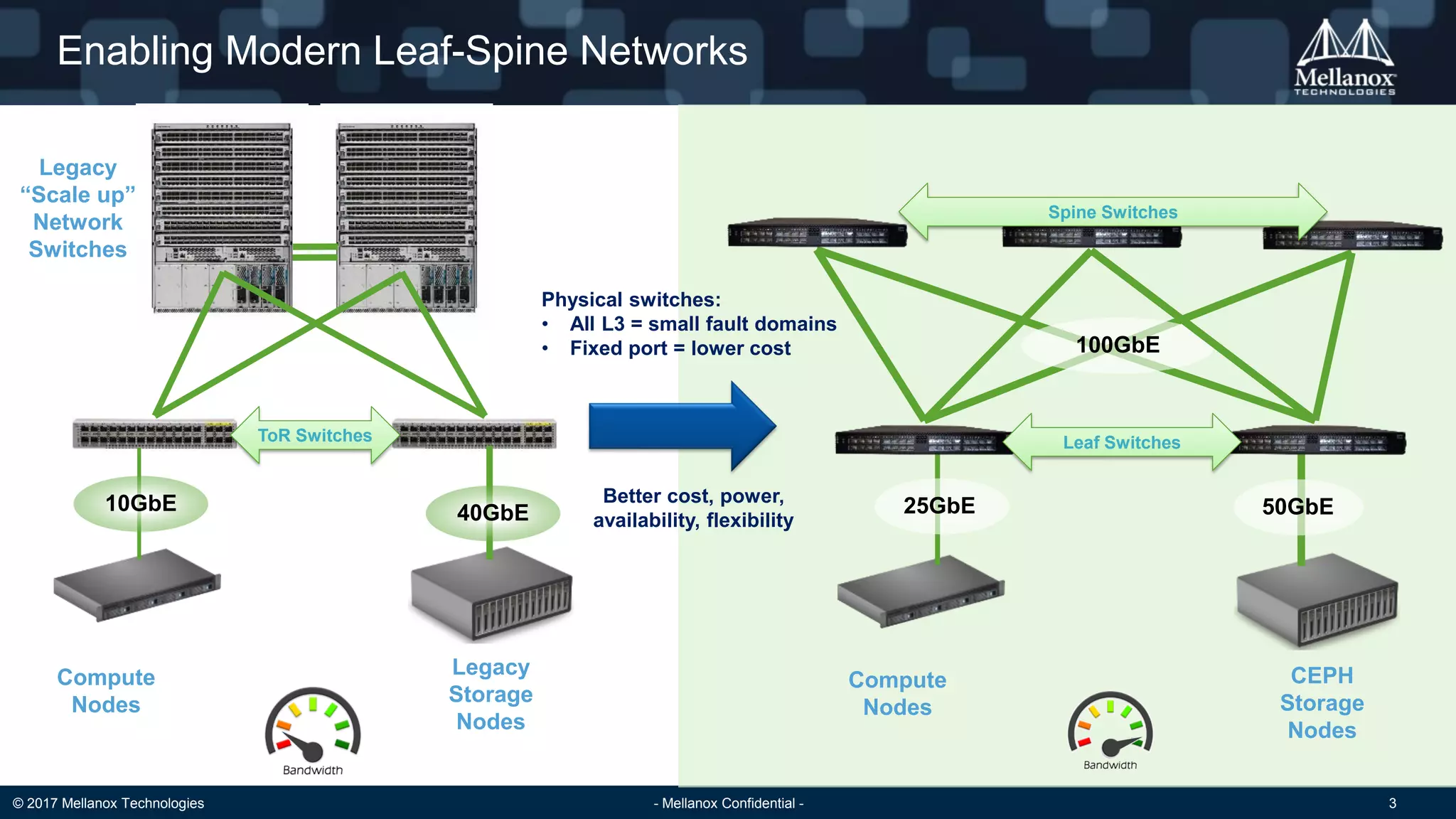

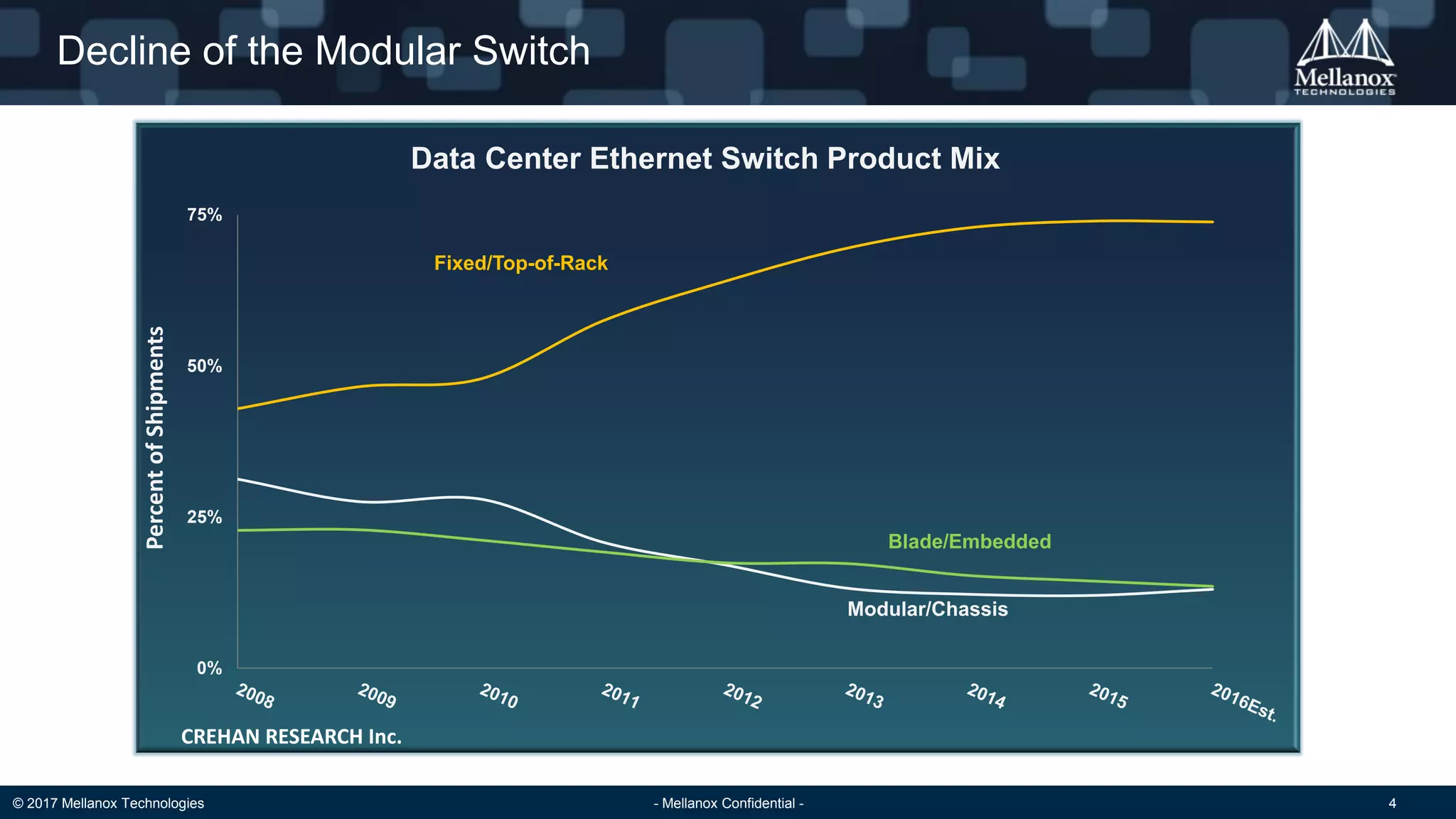

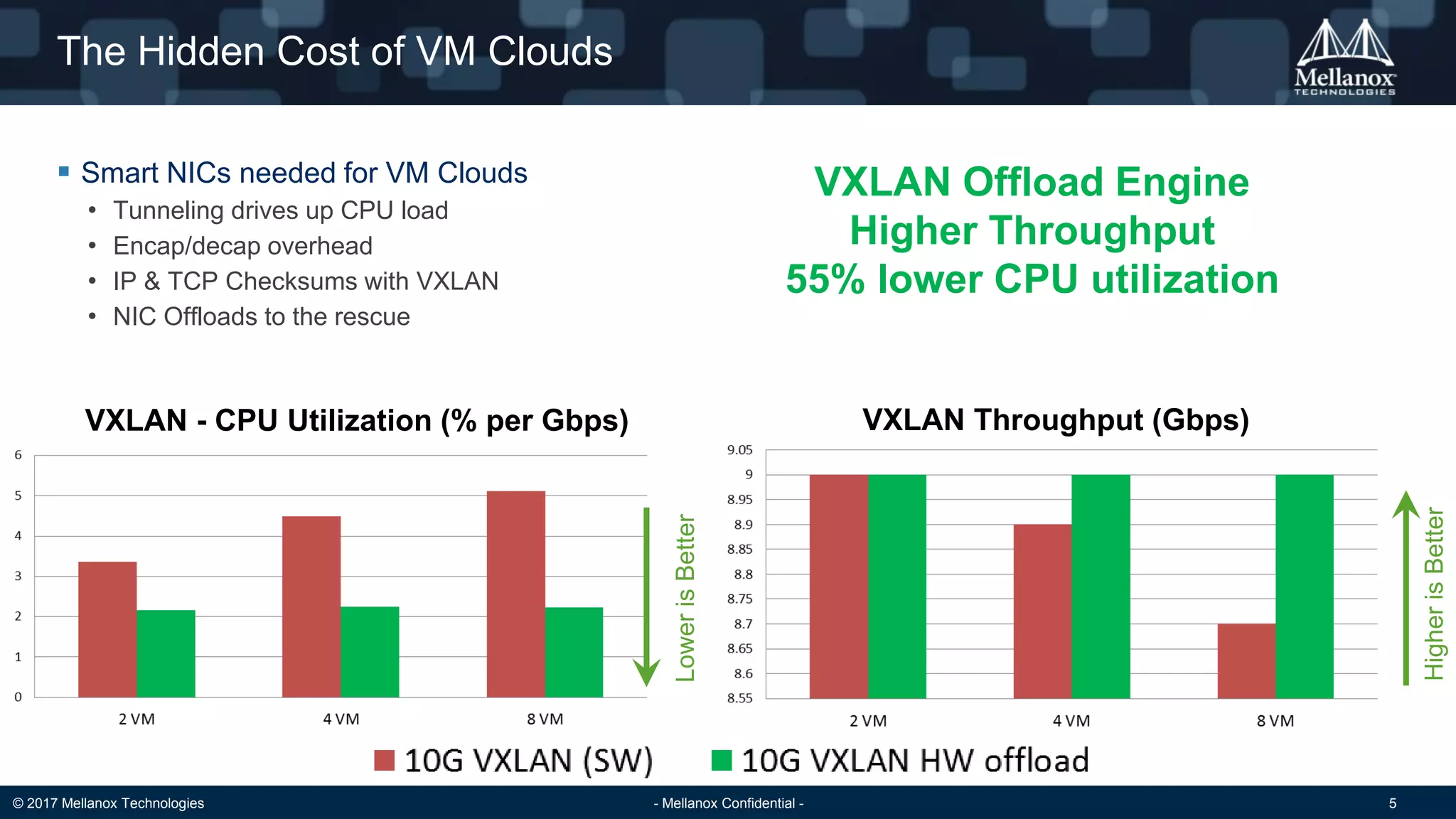

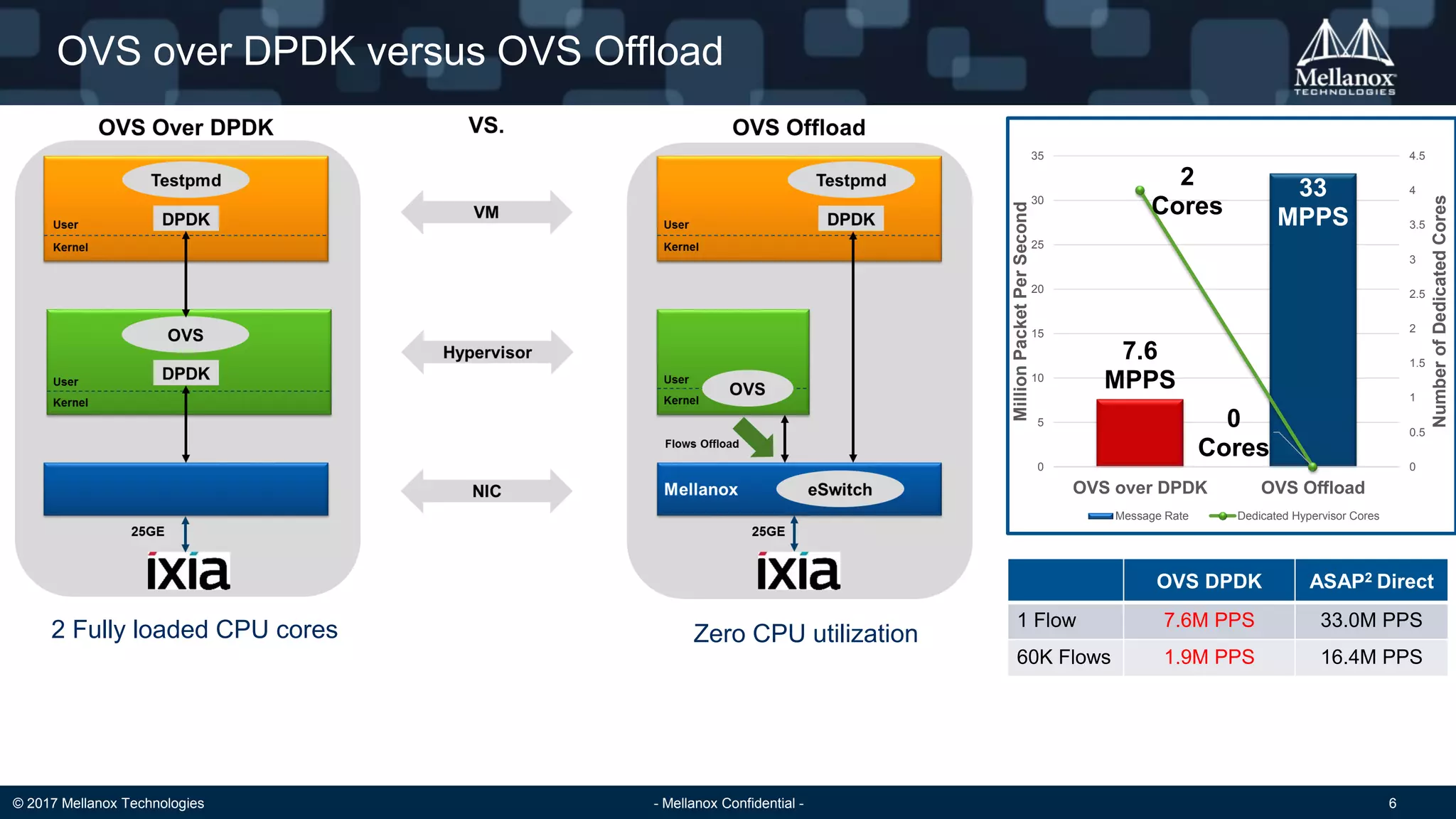

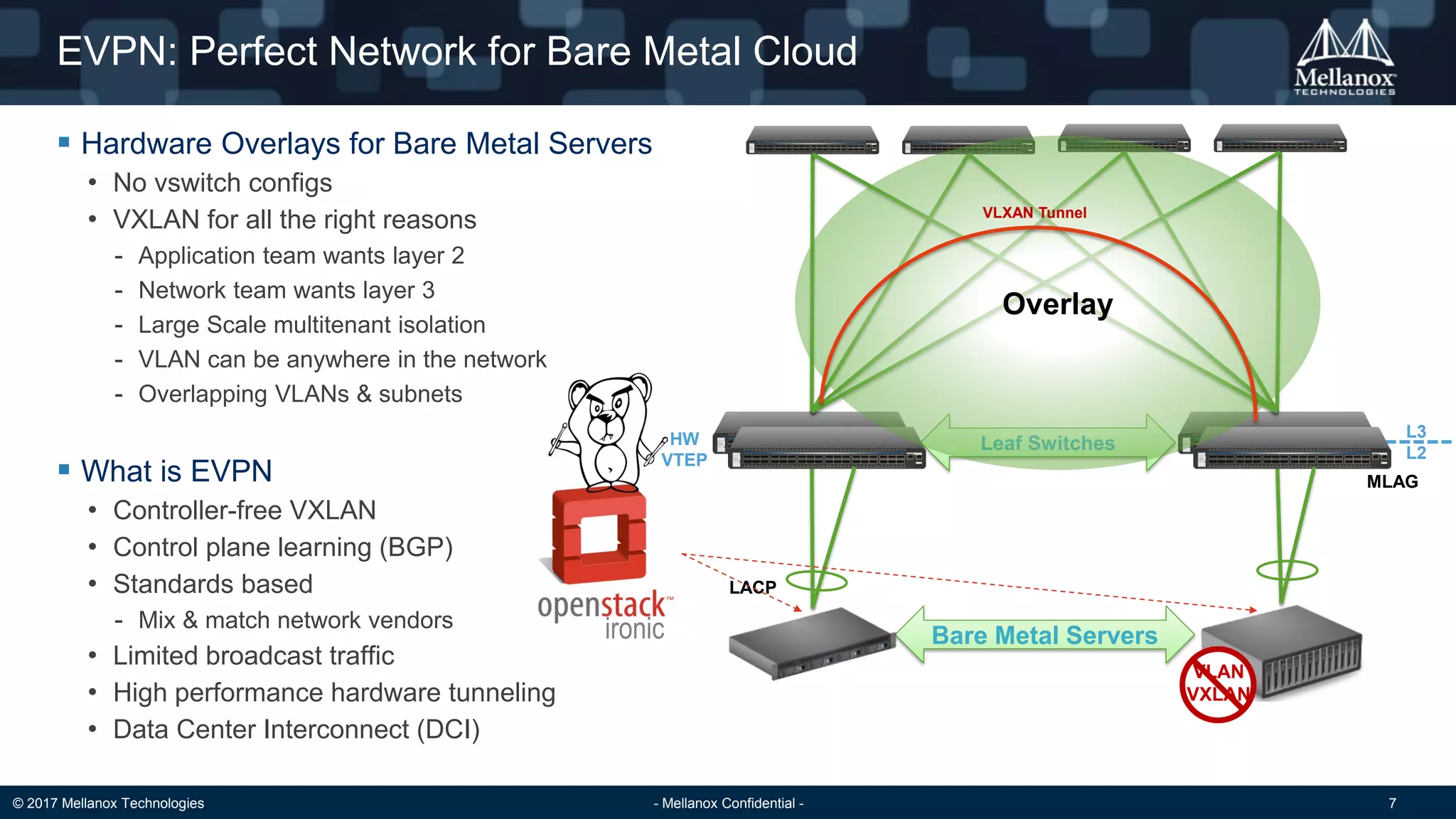

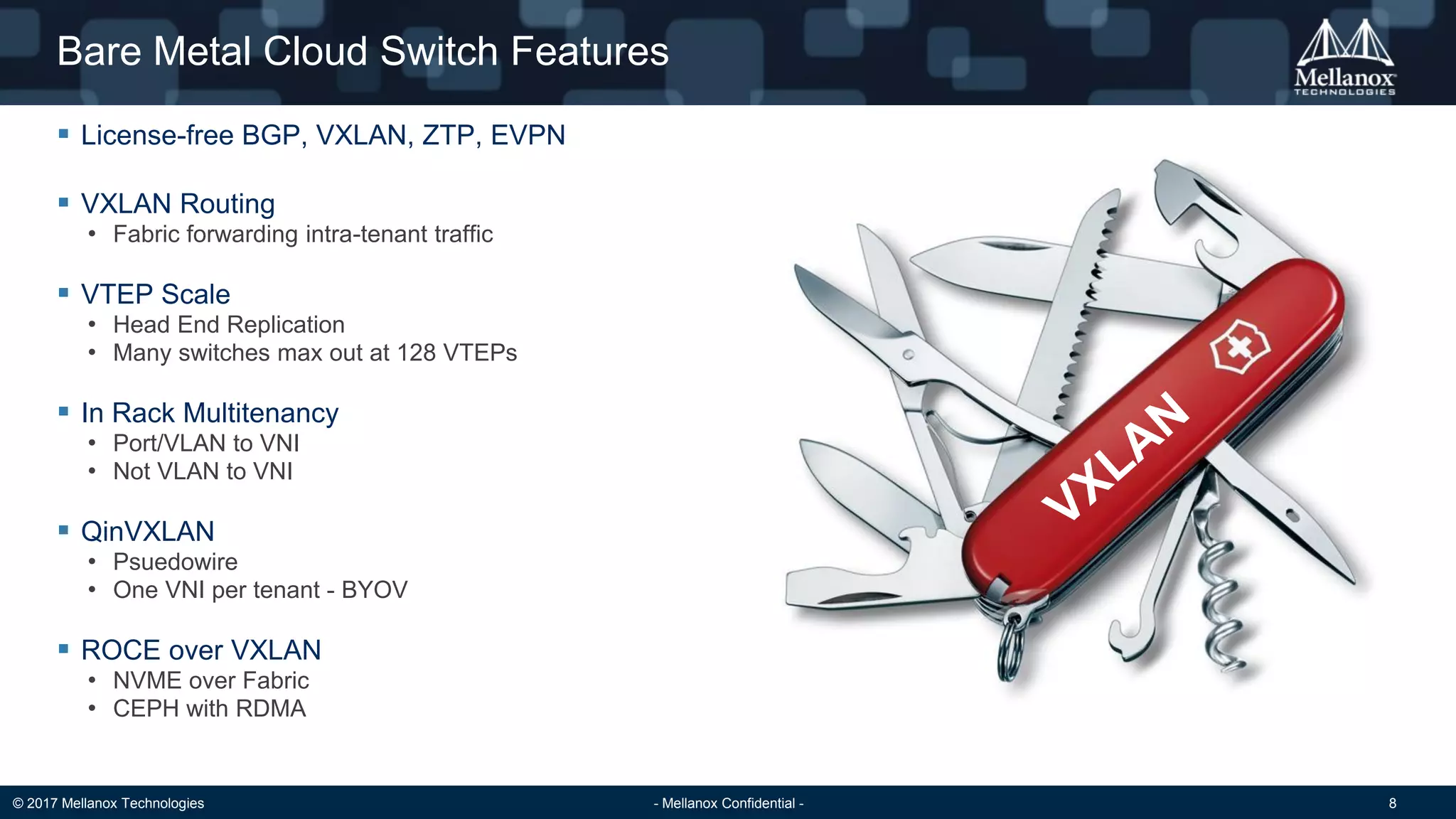

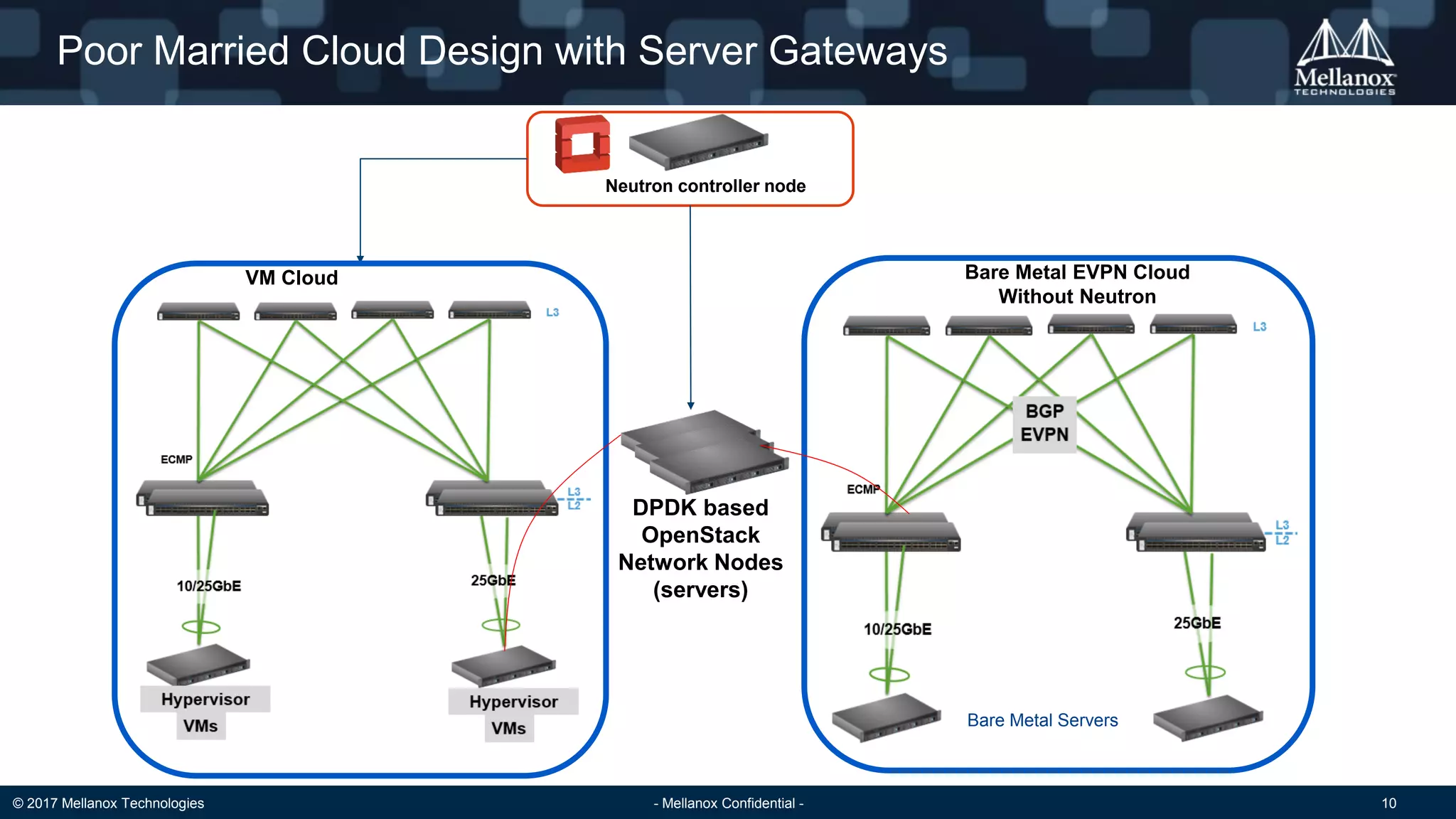

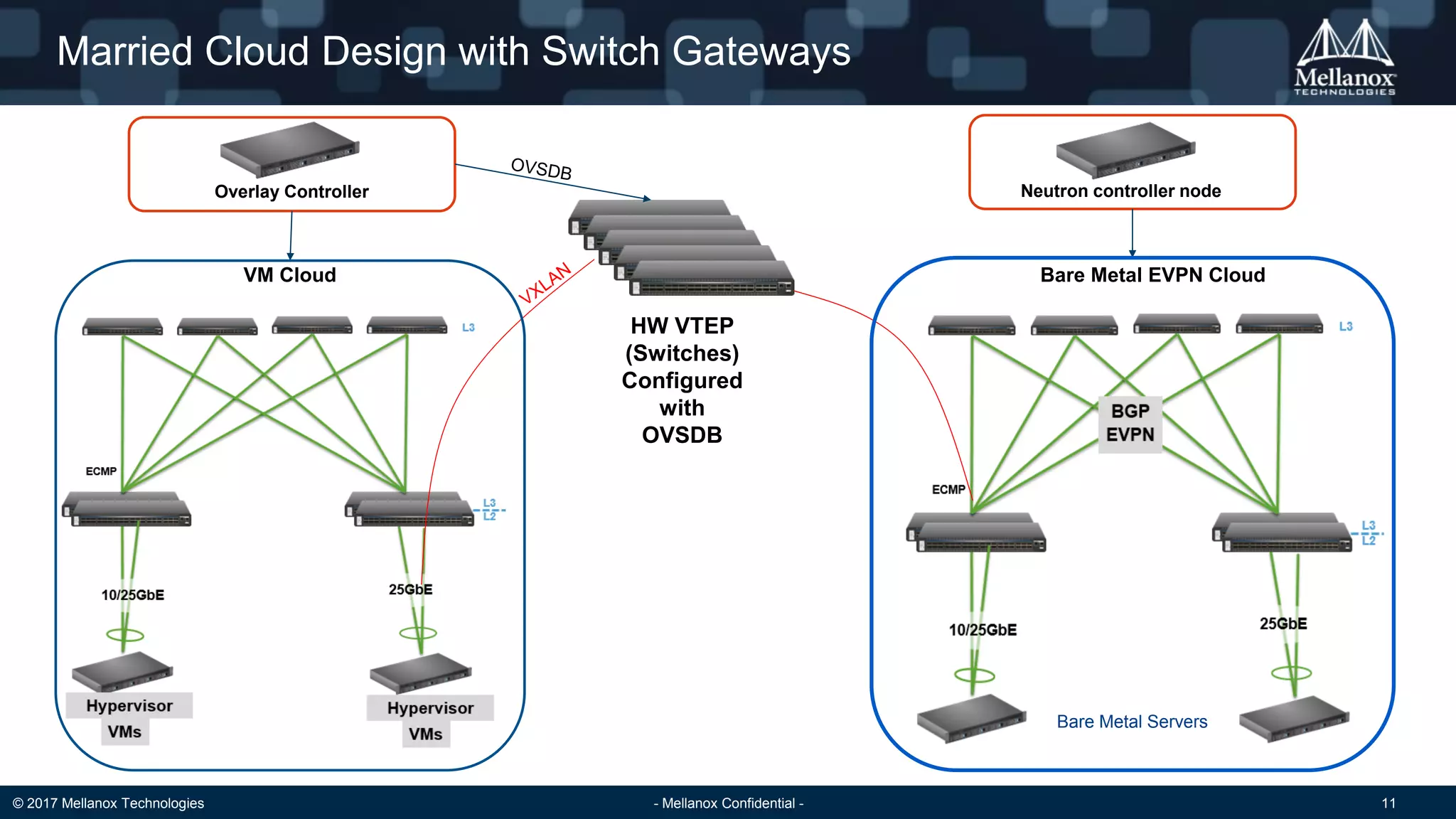

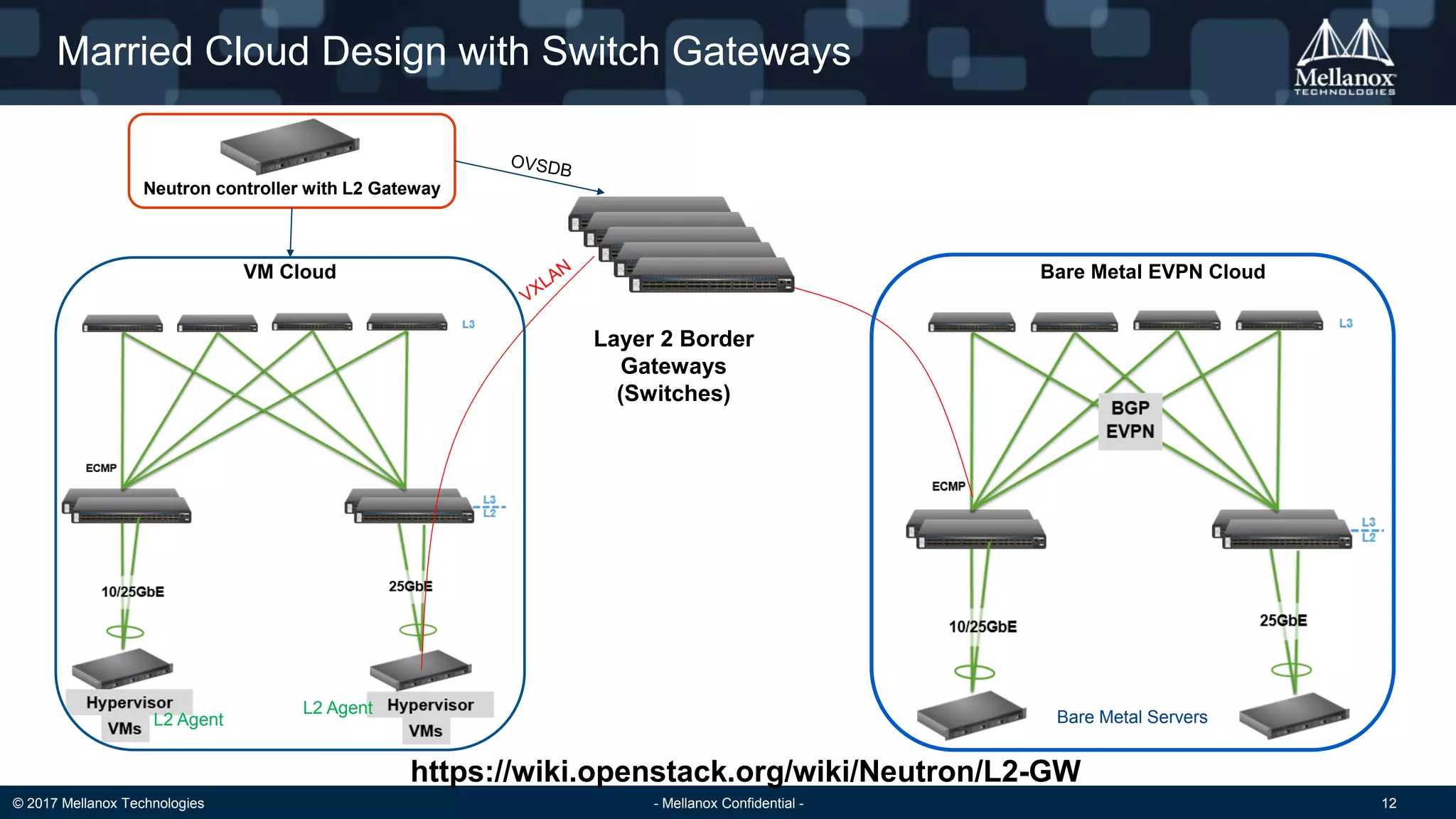

The document discusses integrating OpenStack with bare metal networks using EVPN, highlighting the advantages of VXLAN for building automated, scalable, and dynamic networks. It emphasizes that specialized hardware and software configurations are needed to take advantage of modern networking features, and it addresses the complexity of managing cloud environments that mix VMs and bare metal servers. It also outlines the capabilities and configurations required to build a robust networking infrastructure, including gateways and technologies such as OVS and DPDK.