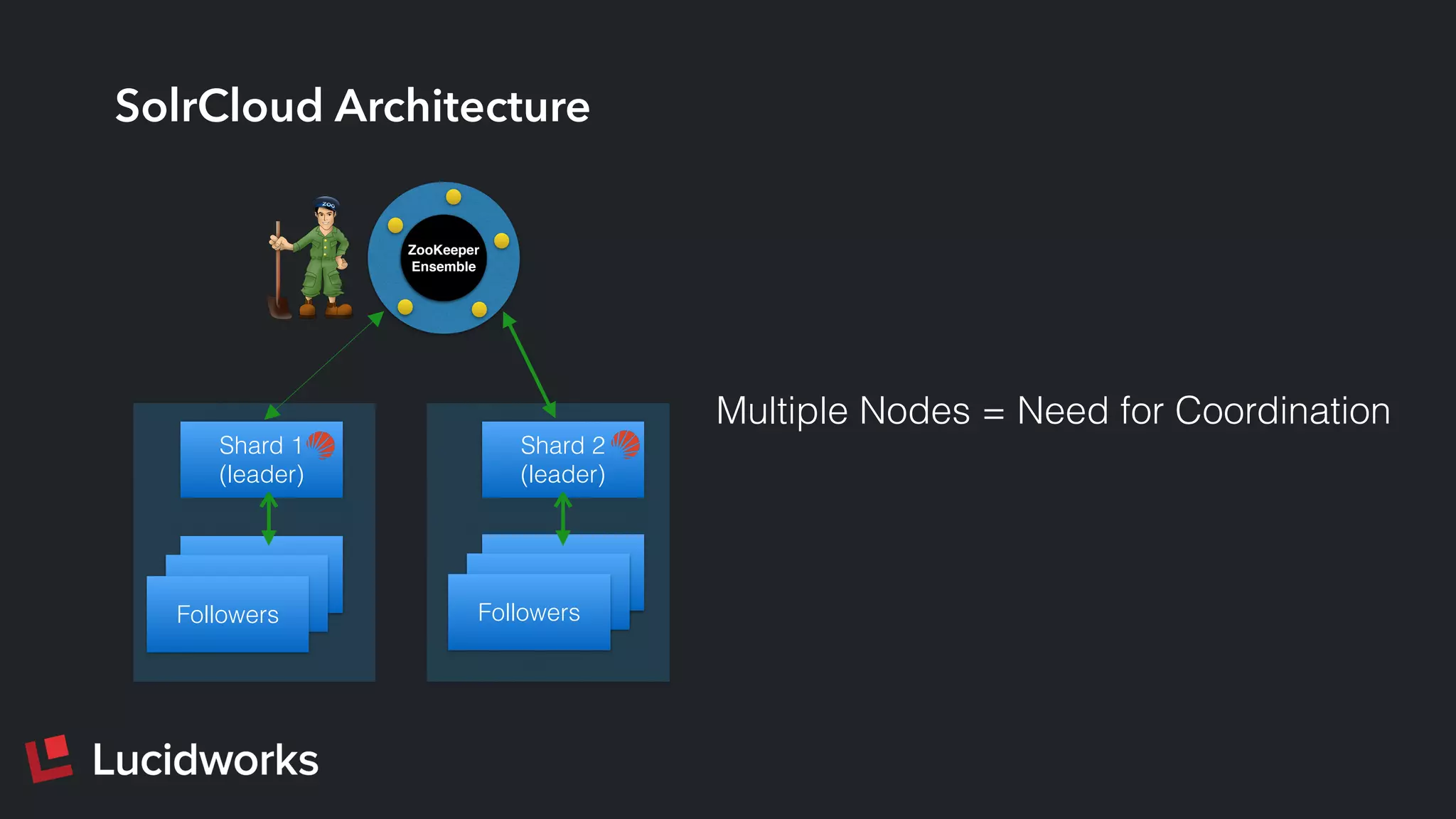

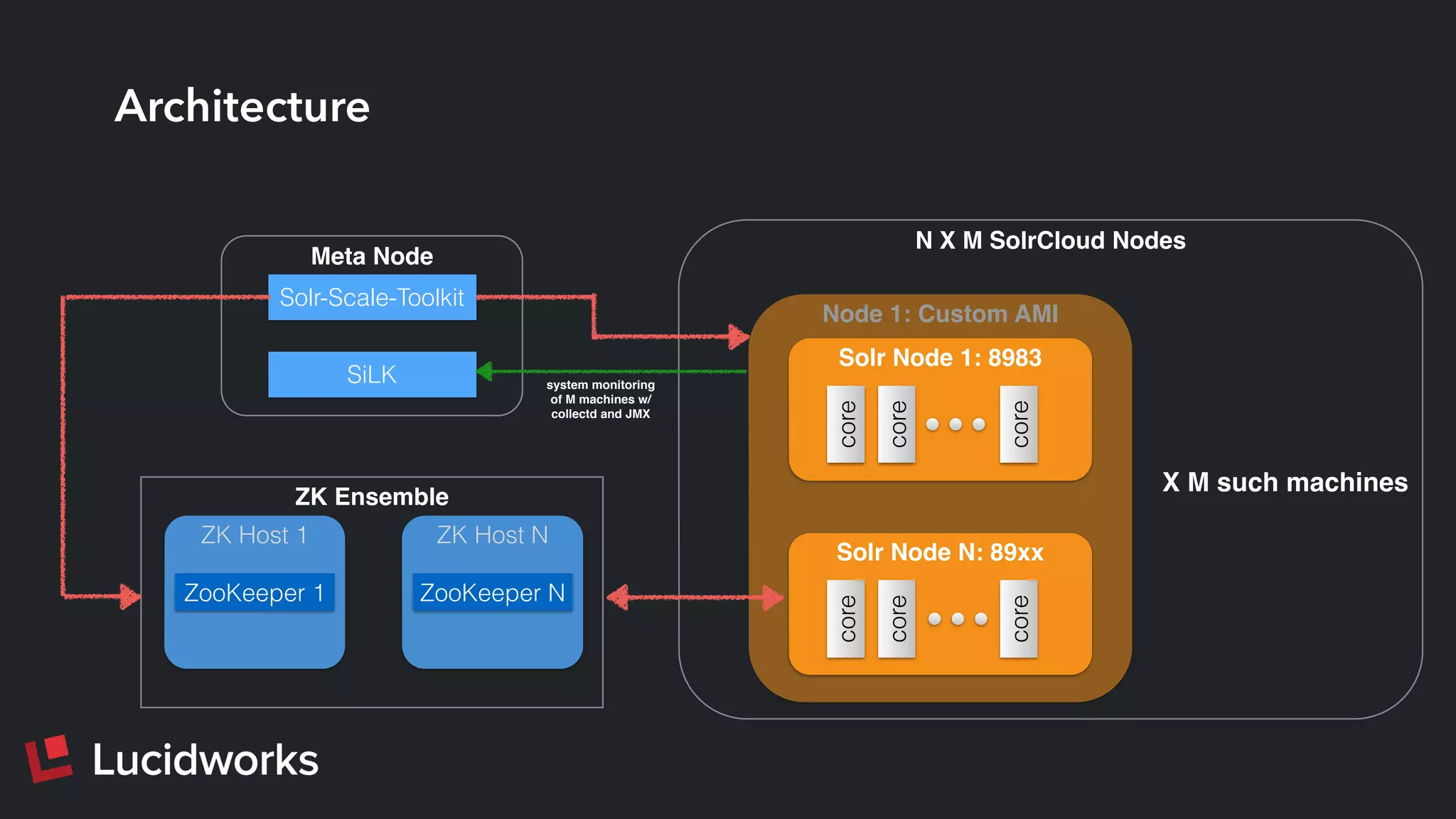

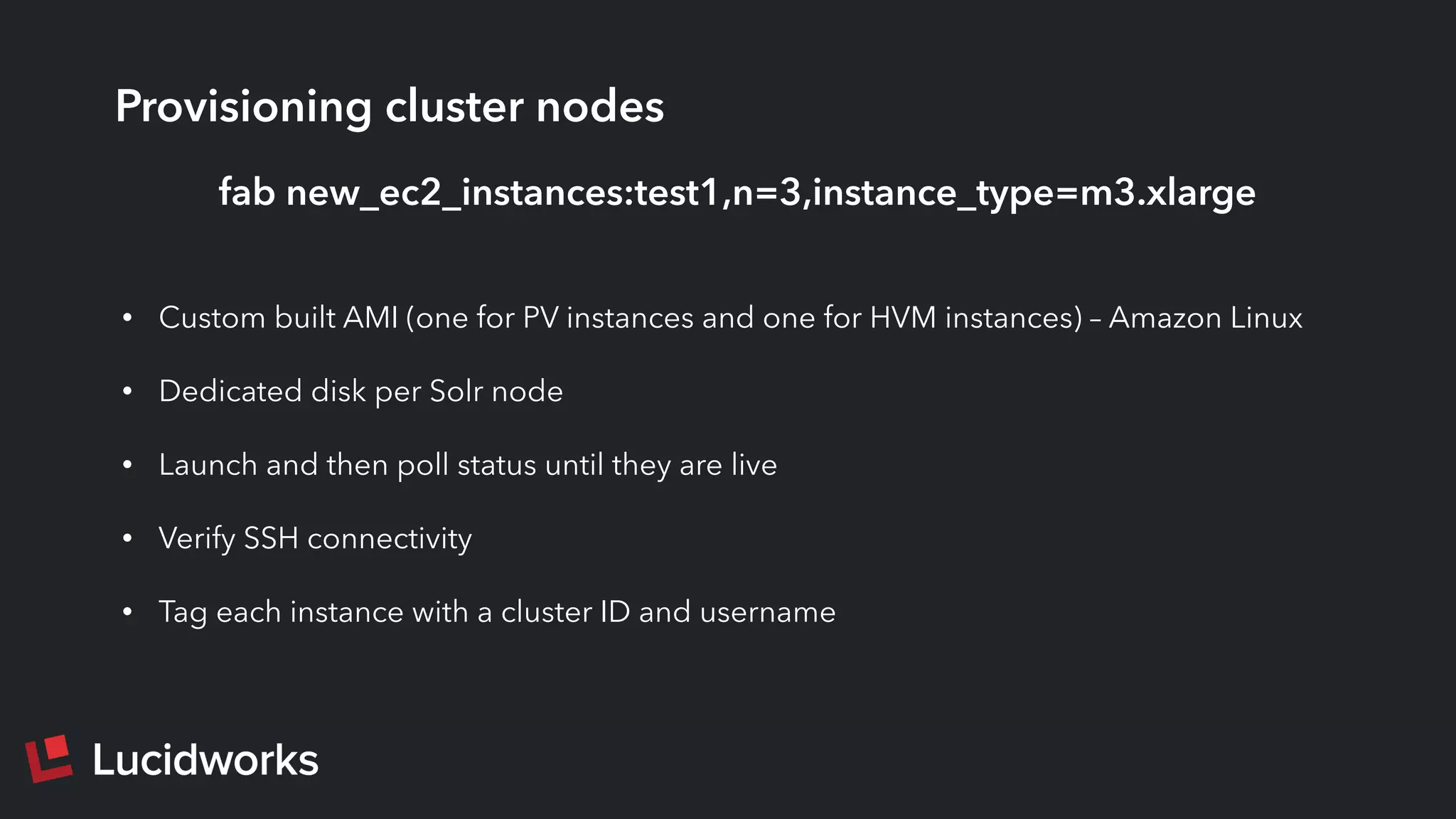

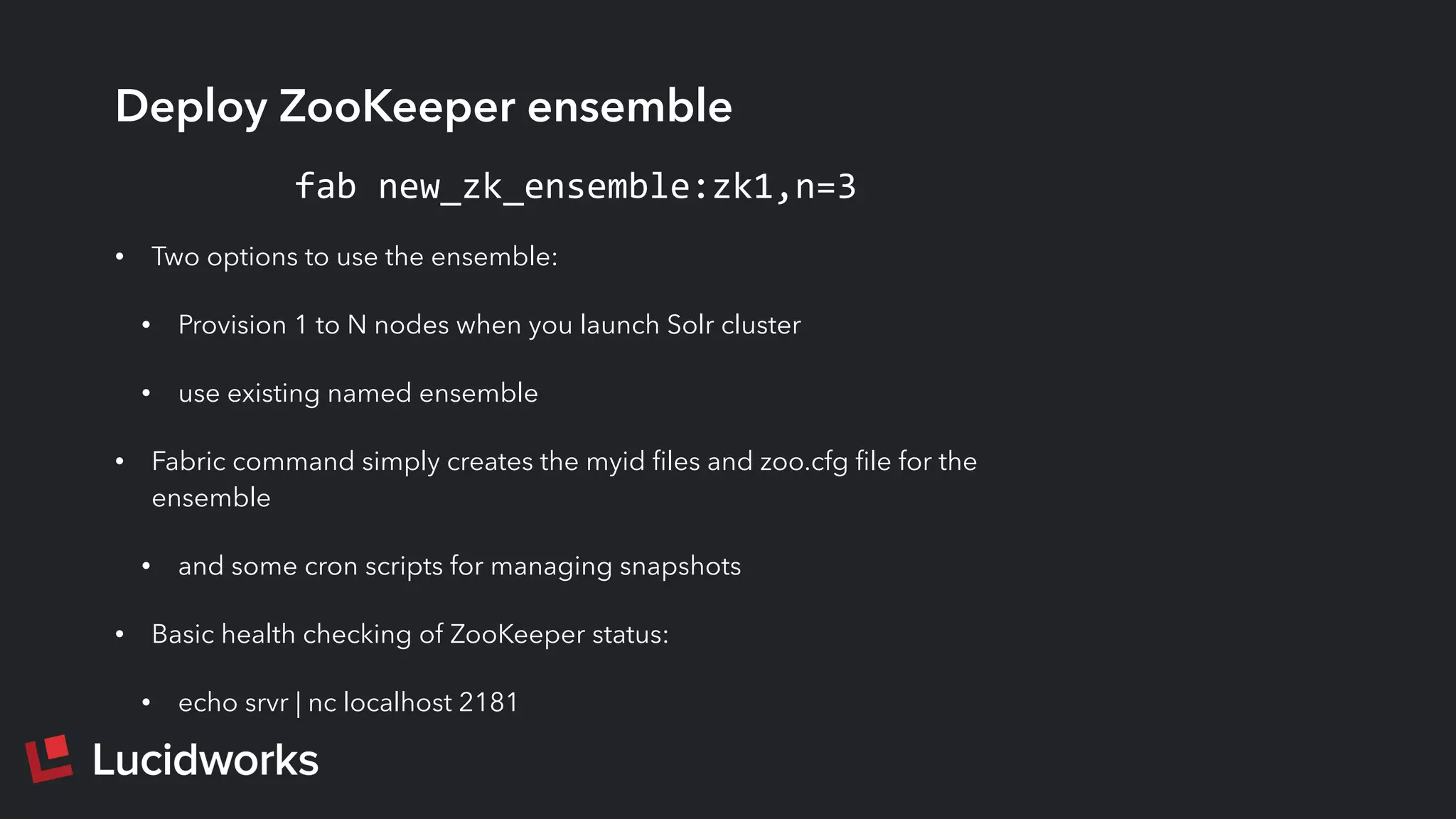

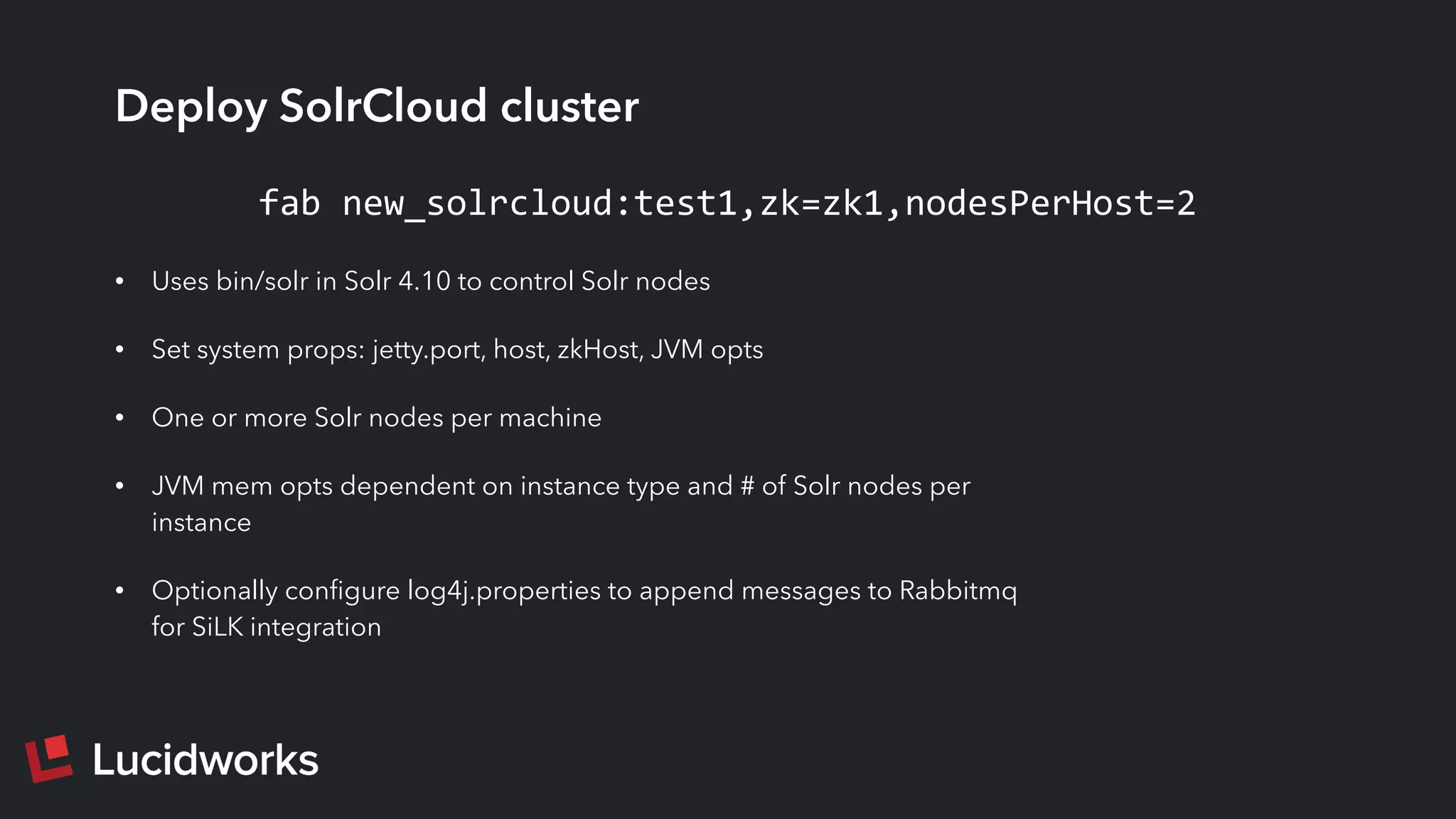

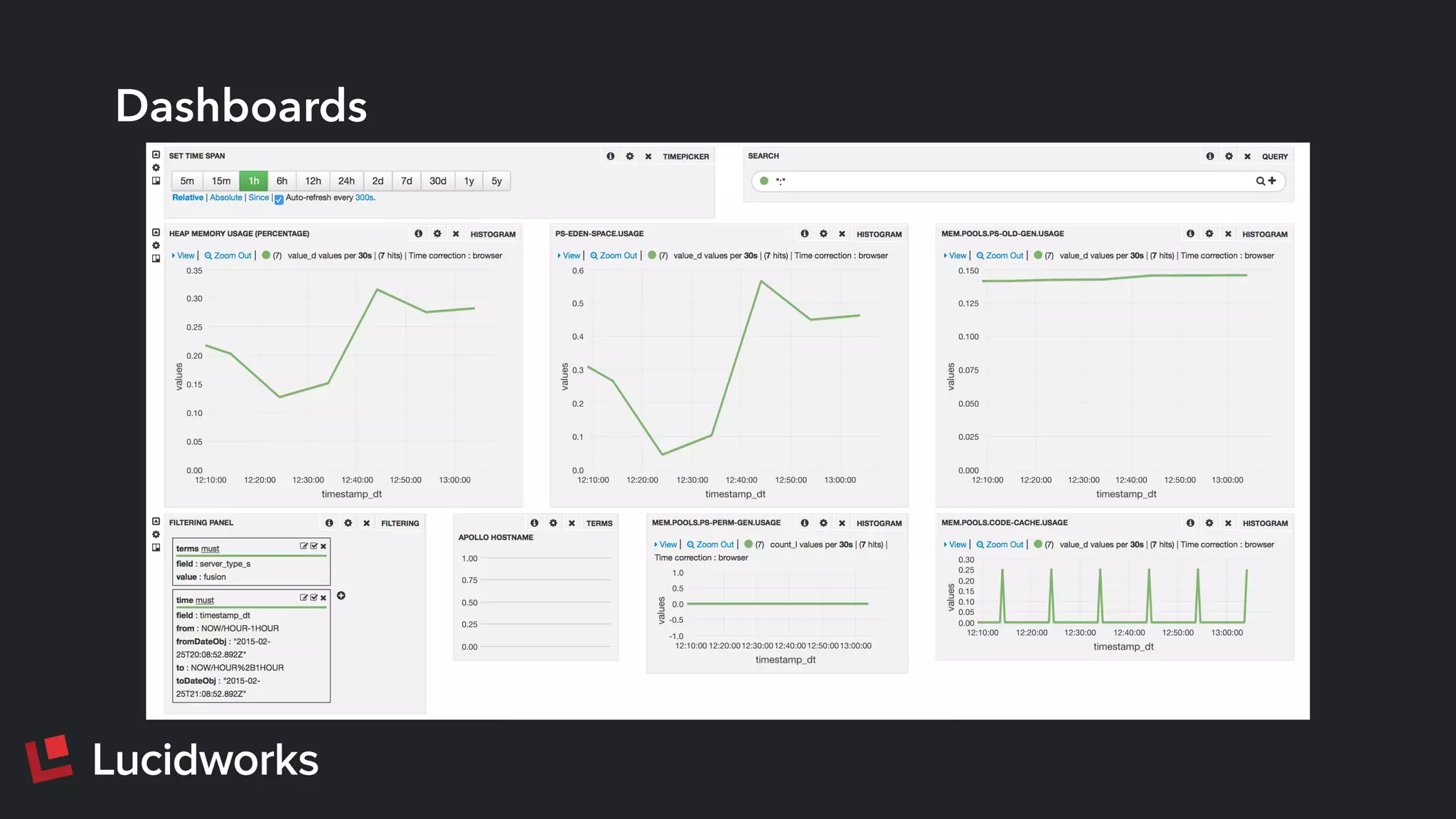

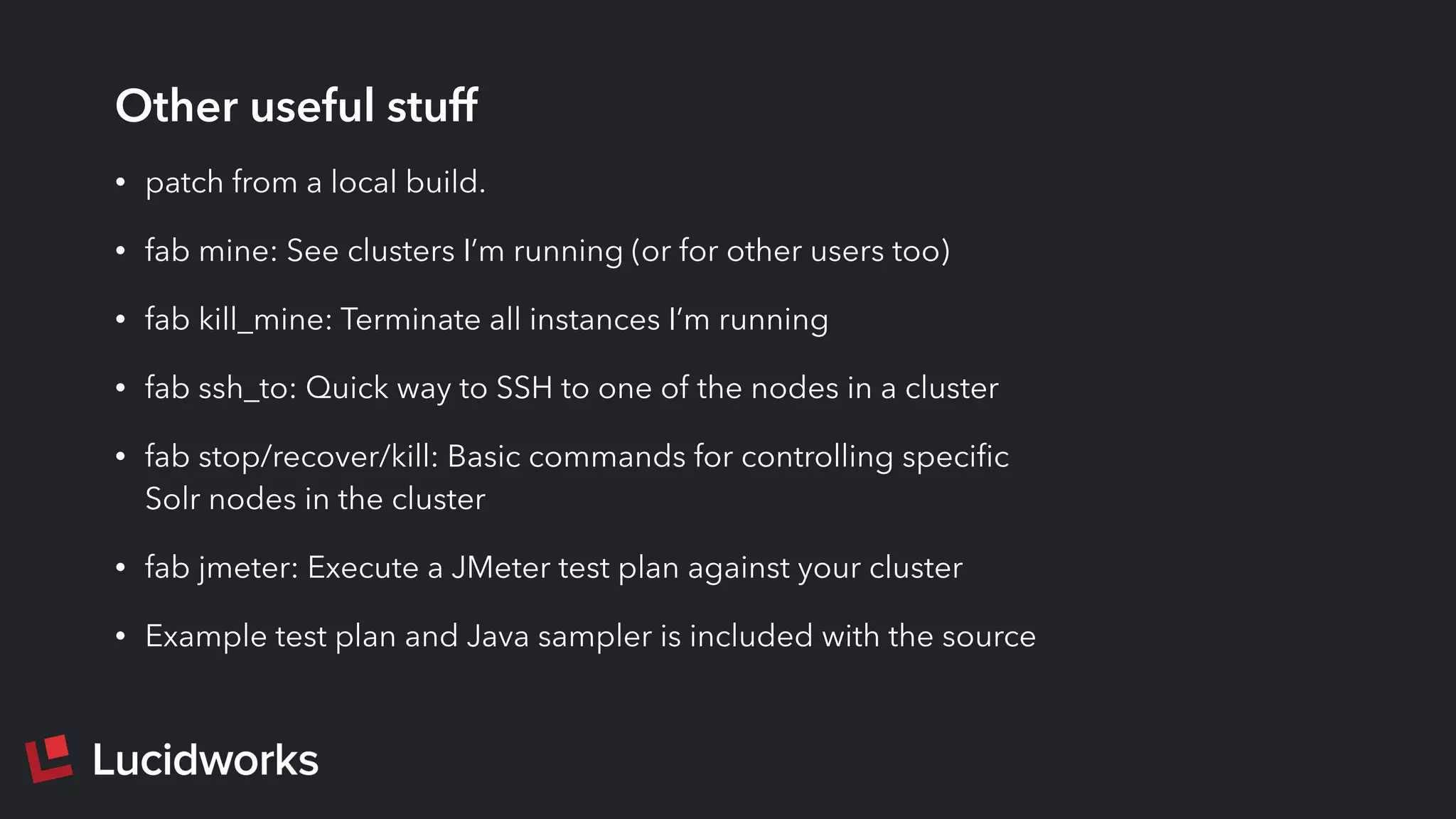

This document discusses deploying and managing Apache Solr at scale. It introduces the Solr Scale Toolkit, an open-source tool for deploying and managing SolrCloud clusters in cloud environments such as AWS. The toolkit uses Python tools such as Fabric to provision machines, deploy ZooKeeper ensembles, and configure and start SolrCloud clusters. It also supports benchmark testing and system monitoring. The document demonstrates the toolkit and discusses lessons learned about indexing and query performance at scale.
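To make the "deploy ZooKeeper, then start SolrCloud" step more concrete, here is a minimal sketch of how the pieces connect. It is not the toolkit's own code: the helper names and hostnames are hypothetical, and it assumes a Solr release that ships the `bin/solr` control script and ZooKeeper listening on its default client port, 2181. It only builds the command strings that a tool such as Fabric could then run on each node.

```python
from typing import Dict, List

ZK_CLIENT_PORT = 2181  # ZooKeeper's default client port (assumed unchanged)


def zk_connect_string(zk_hosts: List[str], chroot: str = "") -> str:
    """Join ZooKeeper hosts into the comma-separated string SolrCloud expects.

    An optional chroot such as "/solr" is appended once, after the last host.
    """
    hosts = ",".join(f"{host}:{ZK_CLIENT_PORT}" for host in zk_hosts)
    return hosts + chroot


def solr_start_commands(
    solr_hosts: List[str],
    zk_hosts: List[str],
    port: int = 8983,
    chroot: str = "",
) -> Dict[str, str]:
    """Map each Solr host to the command that starts it in cloud mode.

    Hypothetical helper: it returns strings only and executes nothing.
    """
    zk = zk_connect_string(zk_hosts, chroot)
    return {host: f"bin/solr start -c -z {zk} -p {port}" for host in solr_hosts}


if __name__ == "__main__":
    # Hypothetical hostnames, for illustration only.
    commands = solr_start_commands(
        ["solr1", "solr2"], ["zk1", "zk2", "zk3"], chroot="/solr"
    )
    for host, command in commands.items():
        print(f"{host}: {command}")
```

Every Solr node receives the same ZooKeeper connection string, which is what lets the nodes discover each other and form a single SolrCloud cluster.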