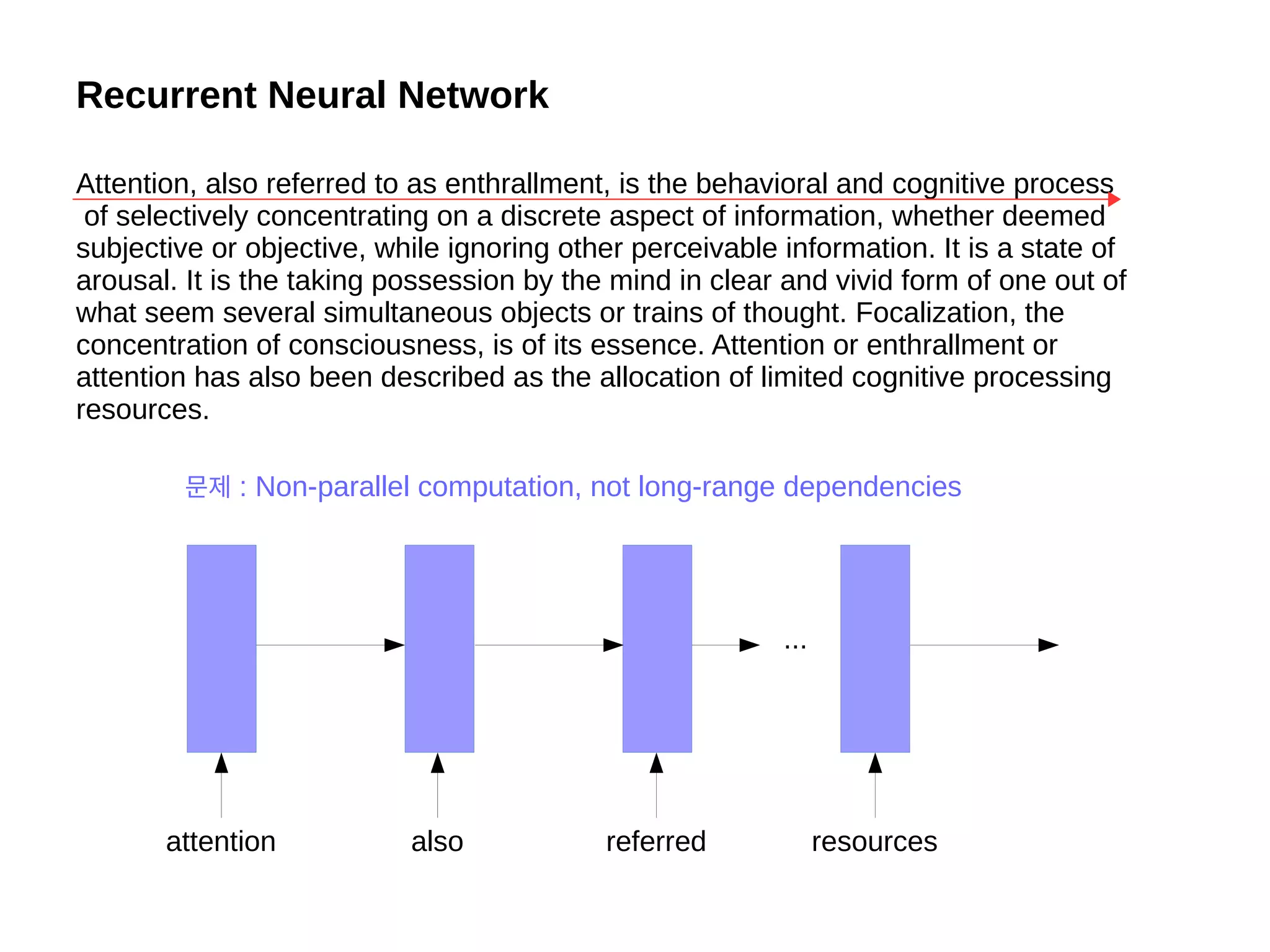

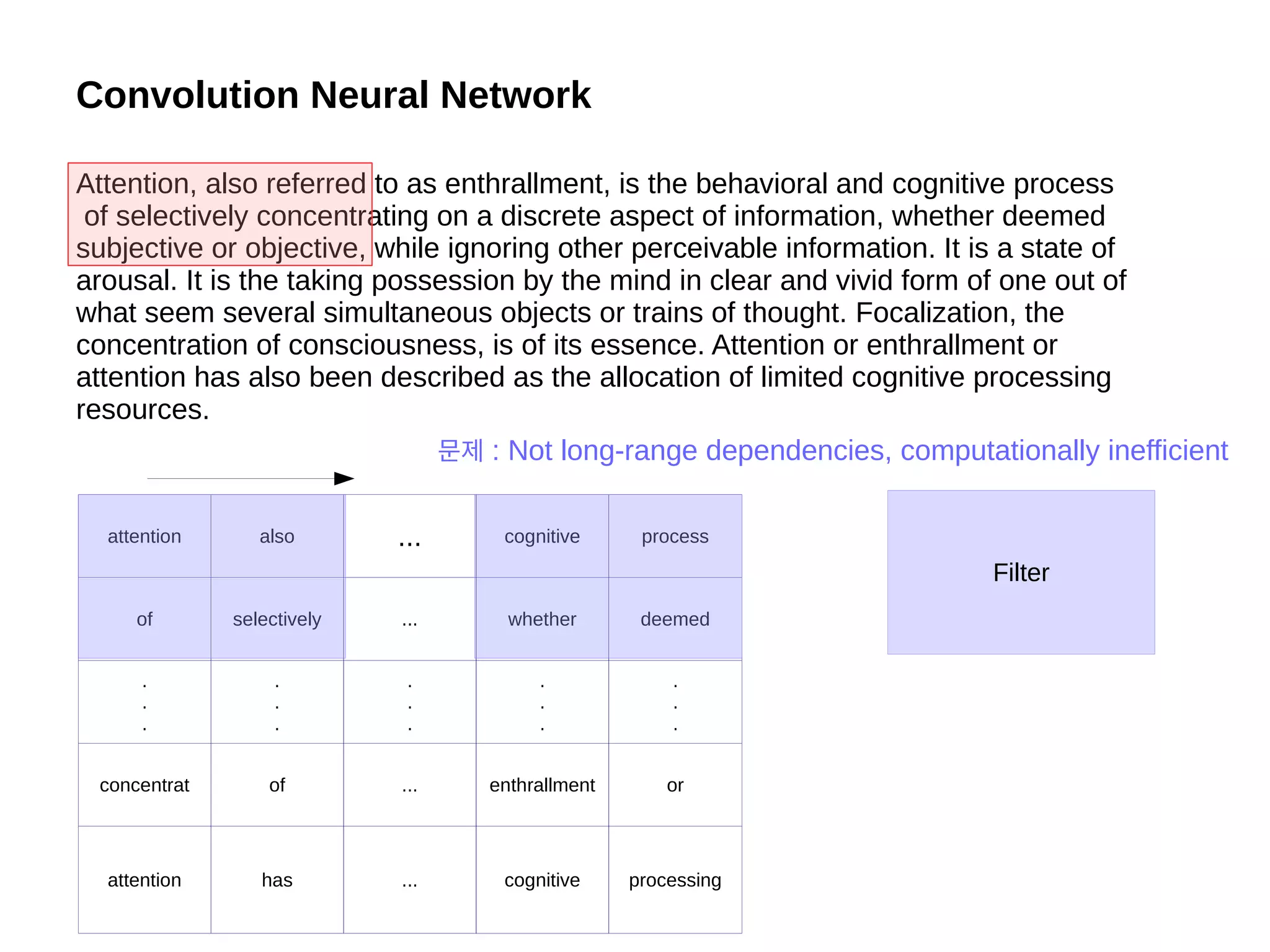

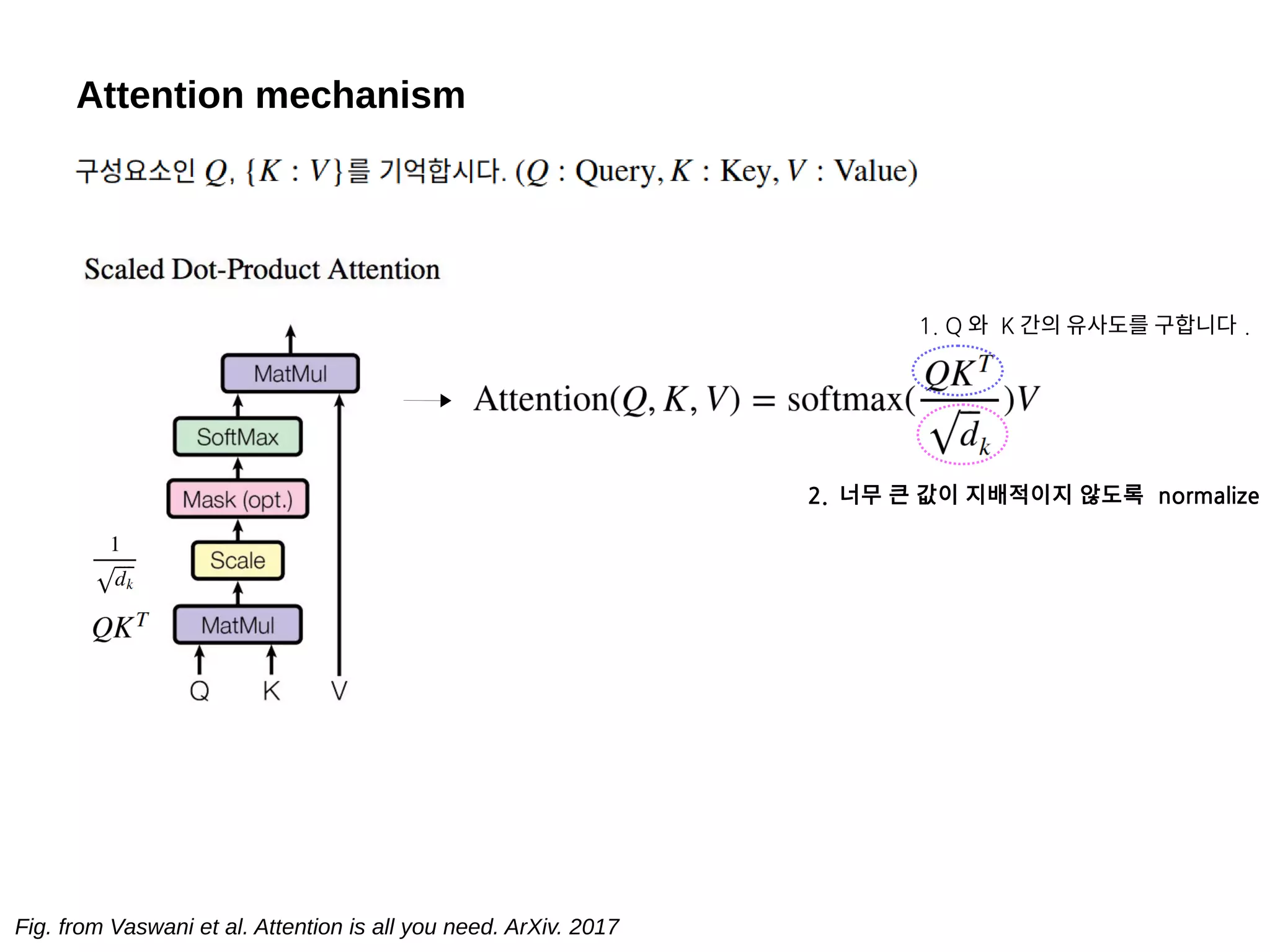

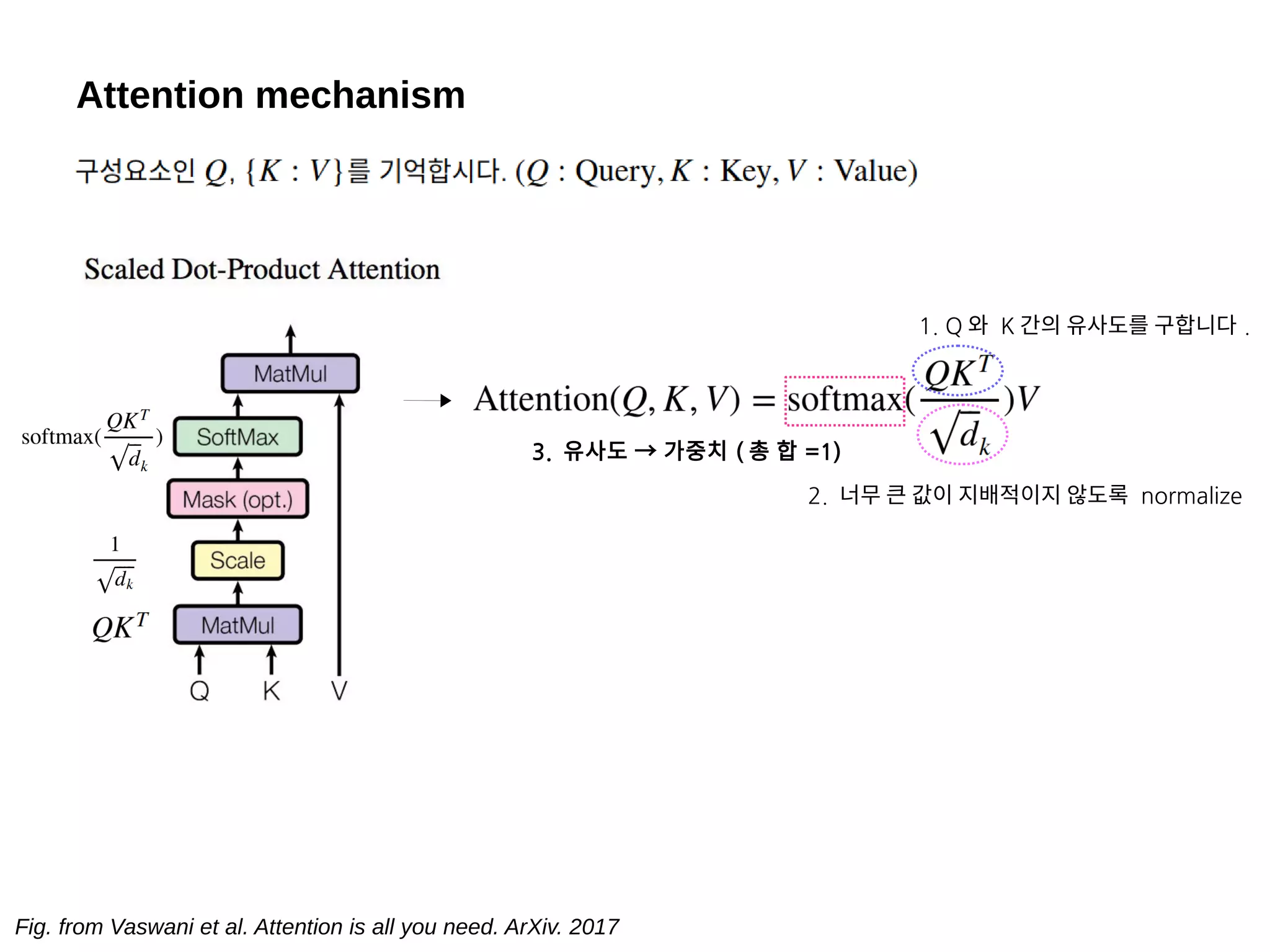

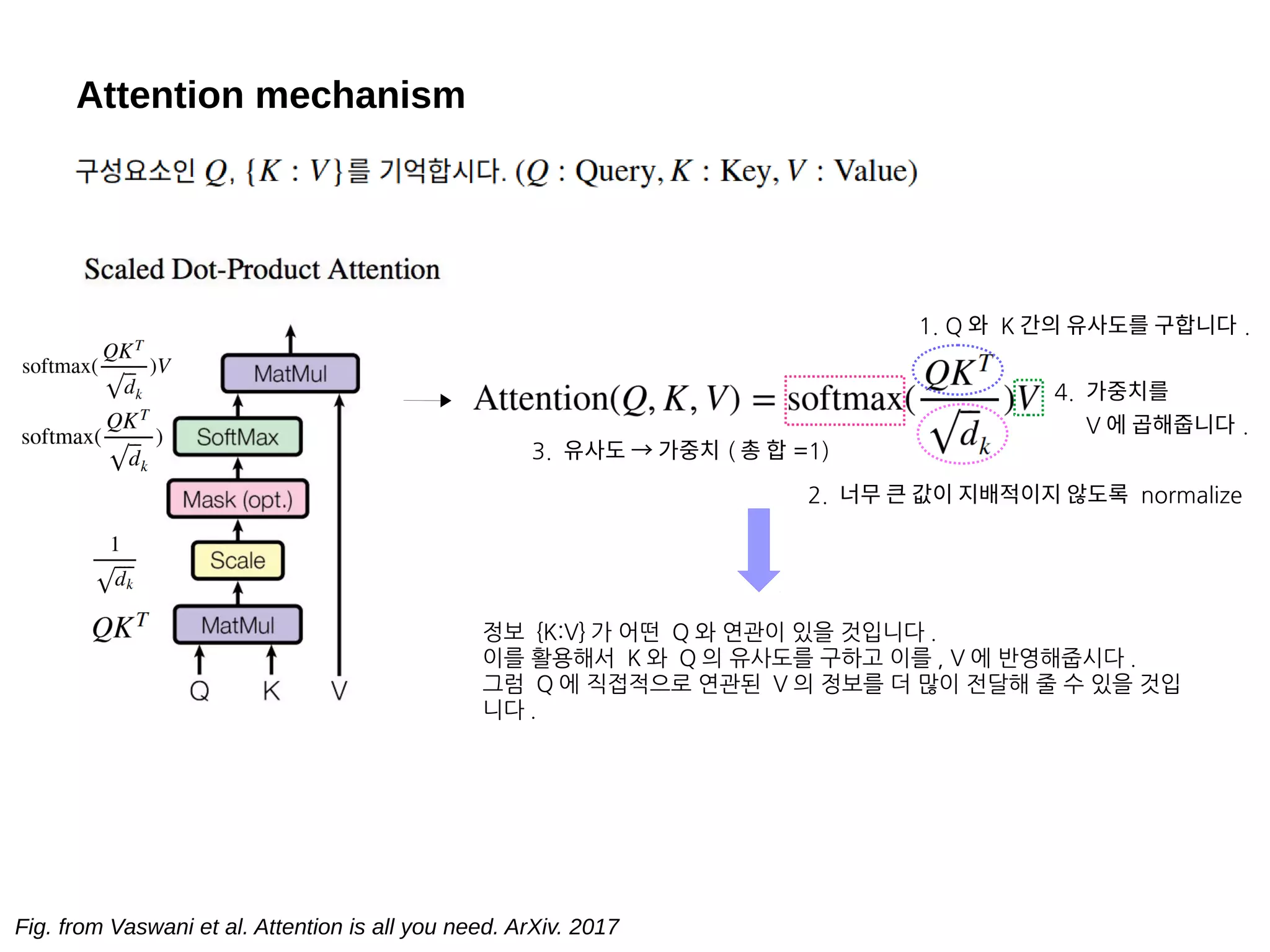

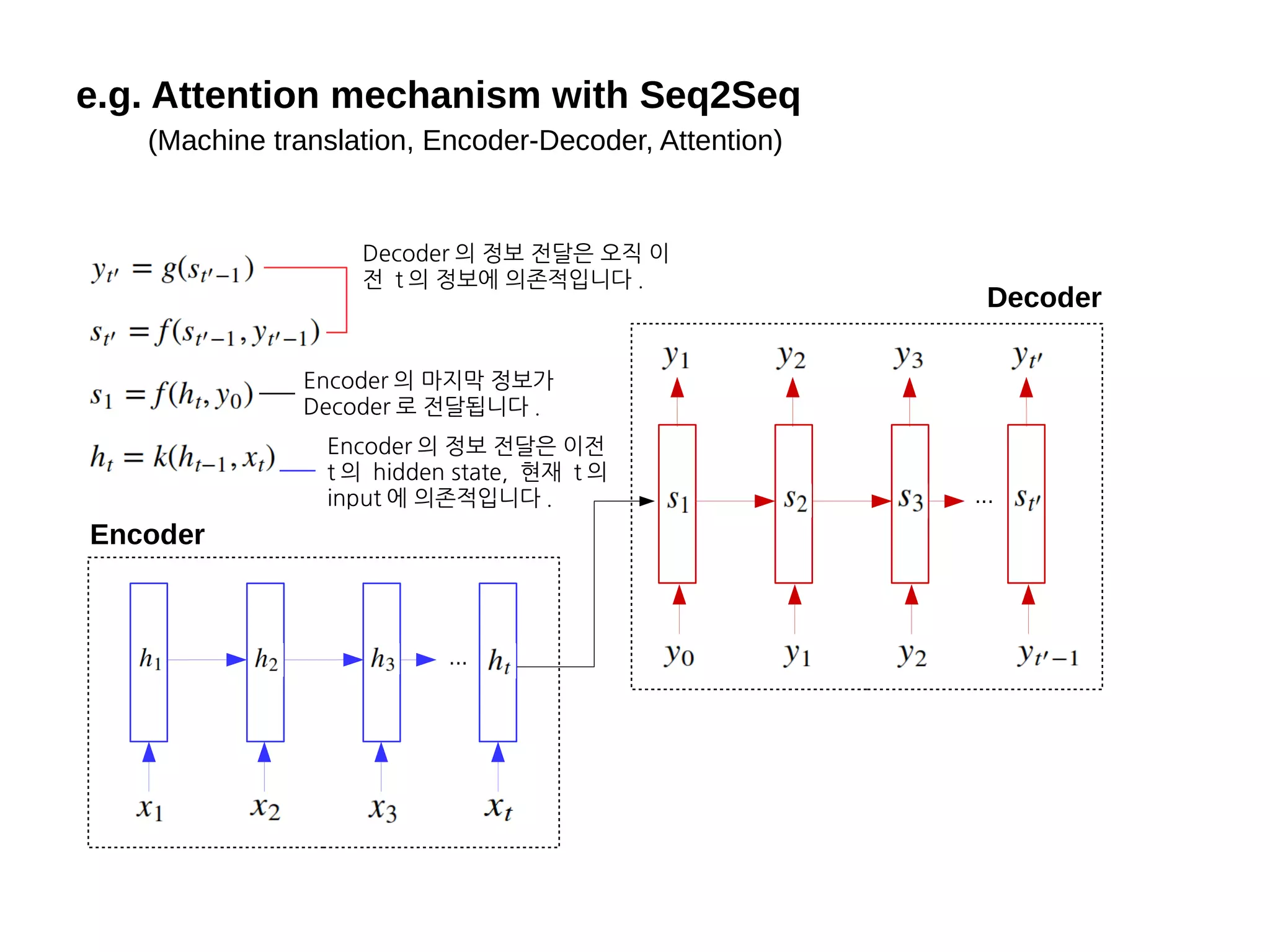

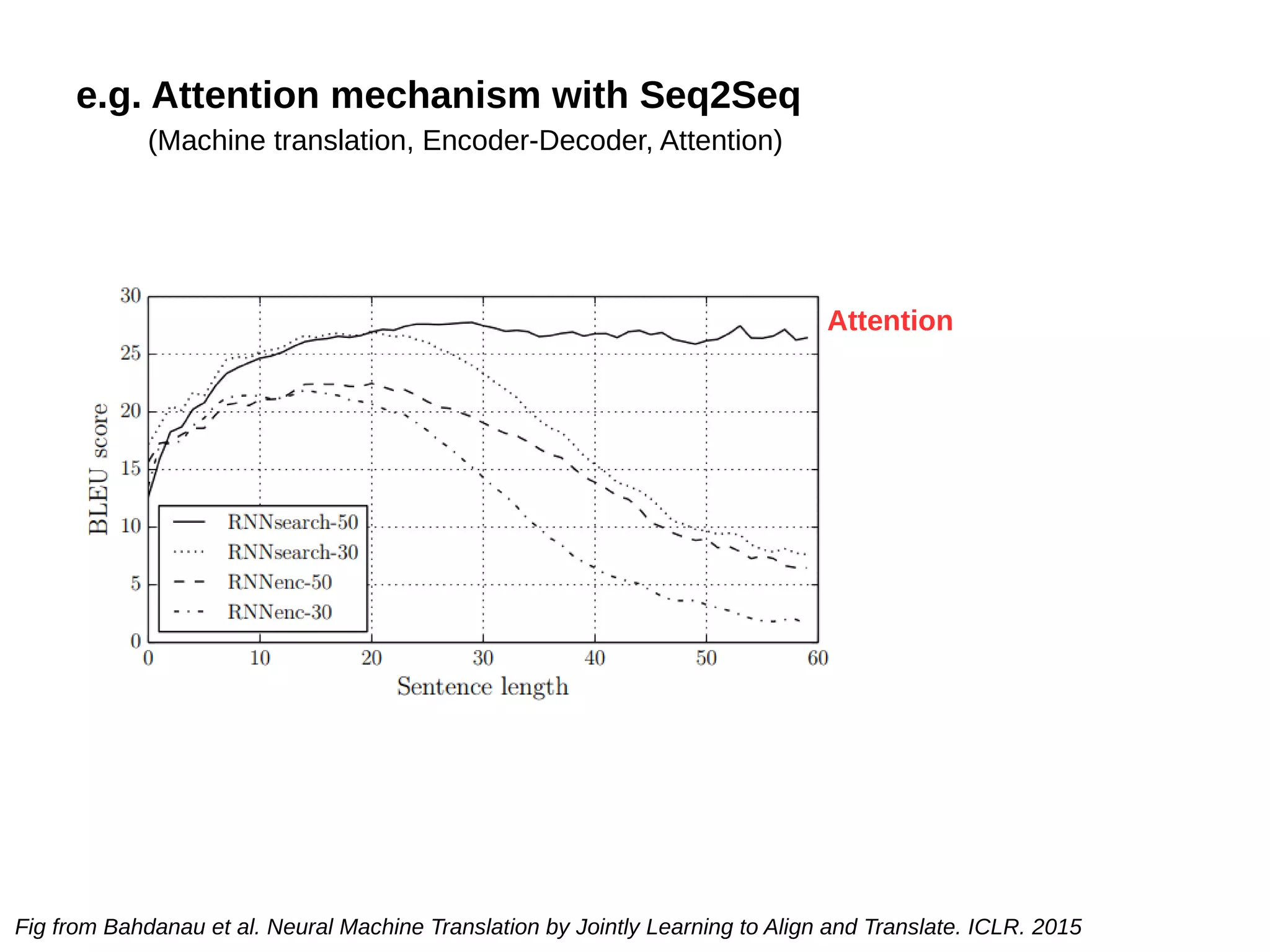

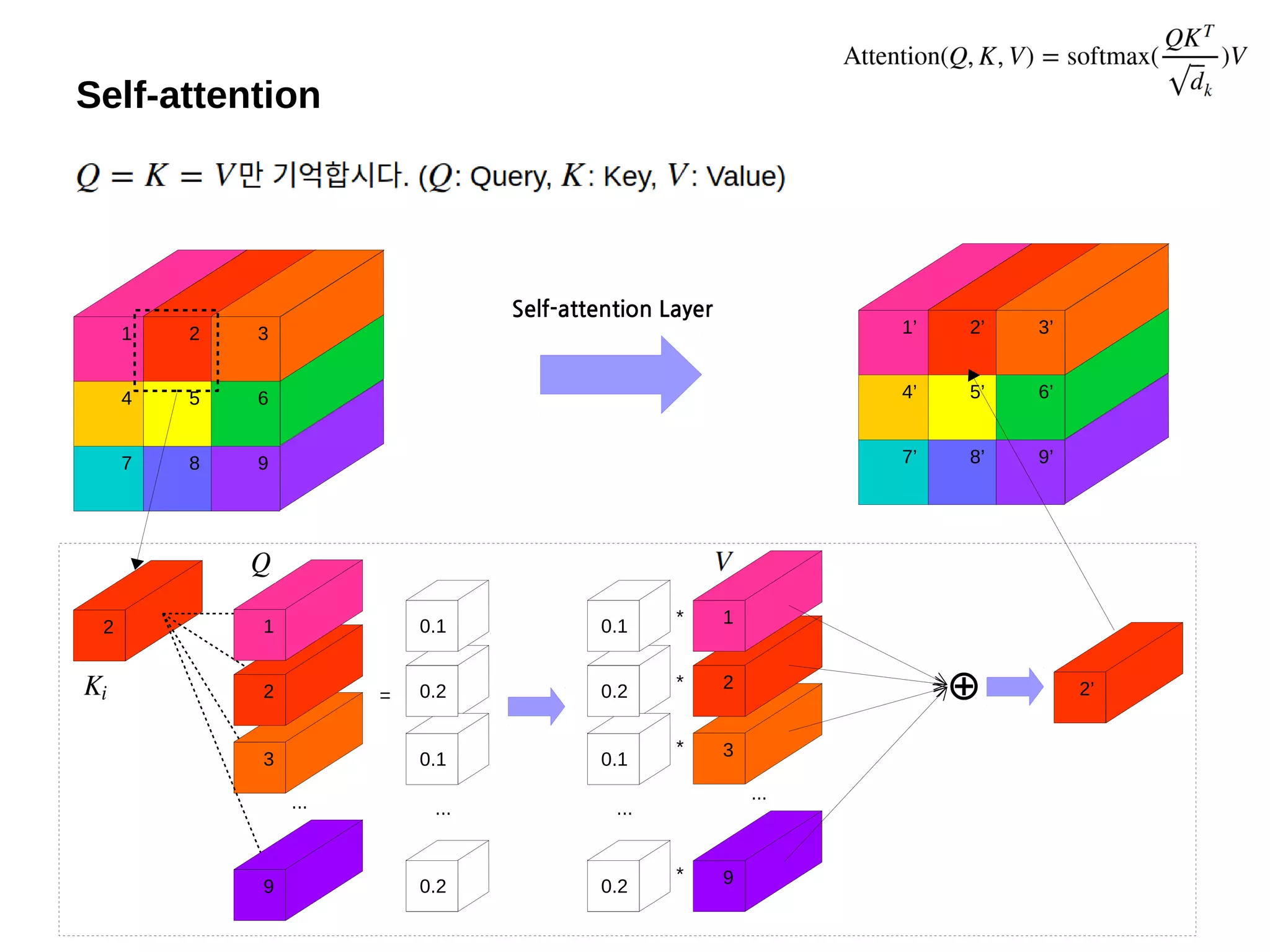

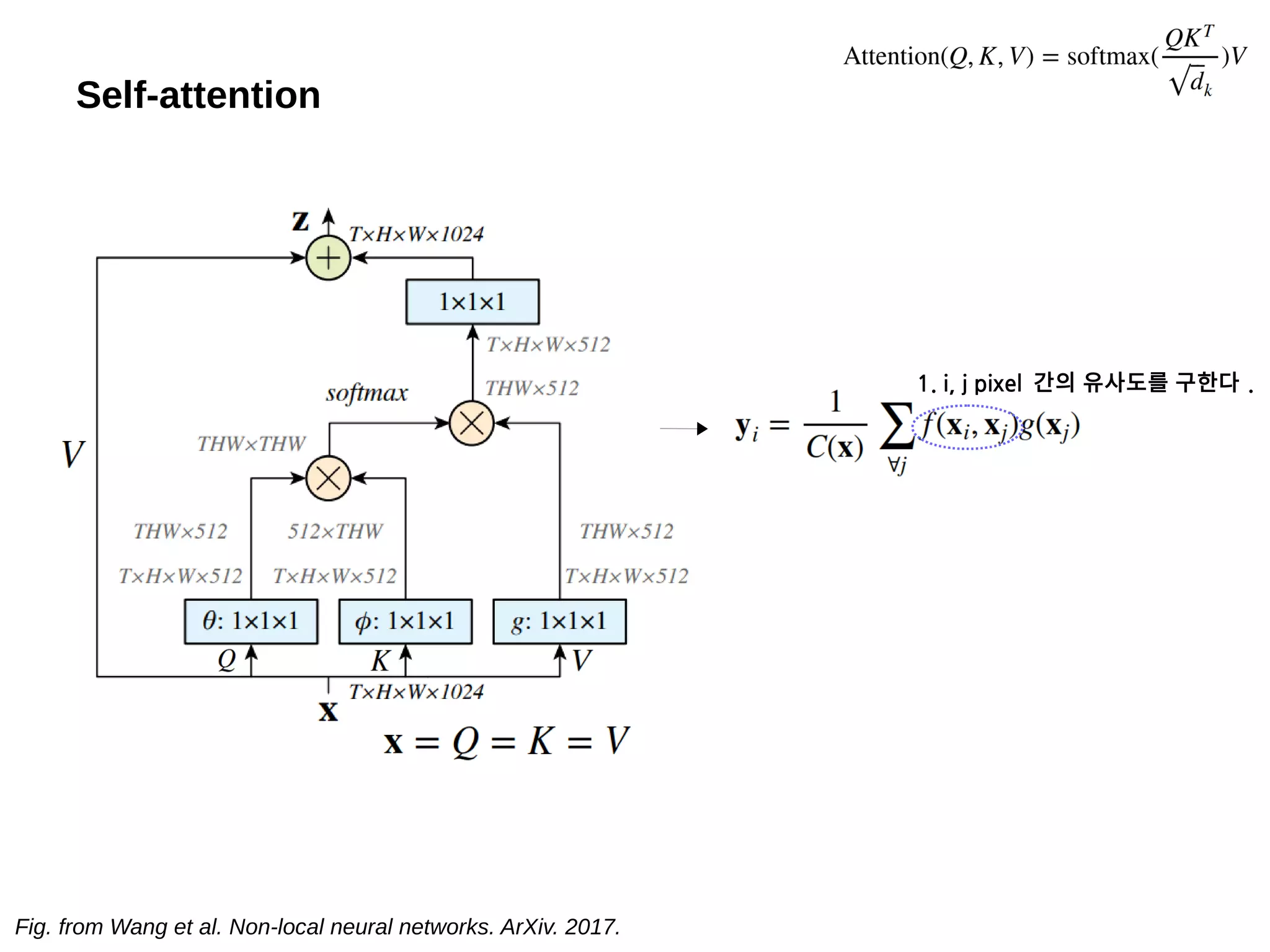

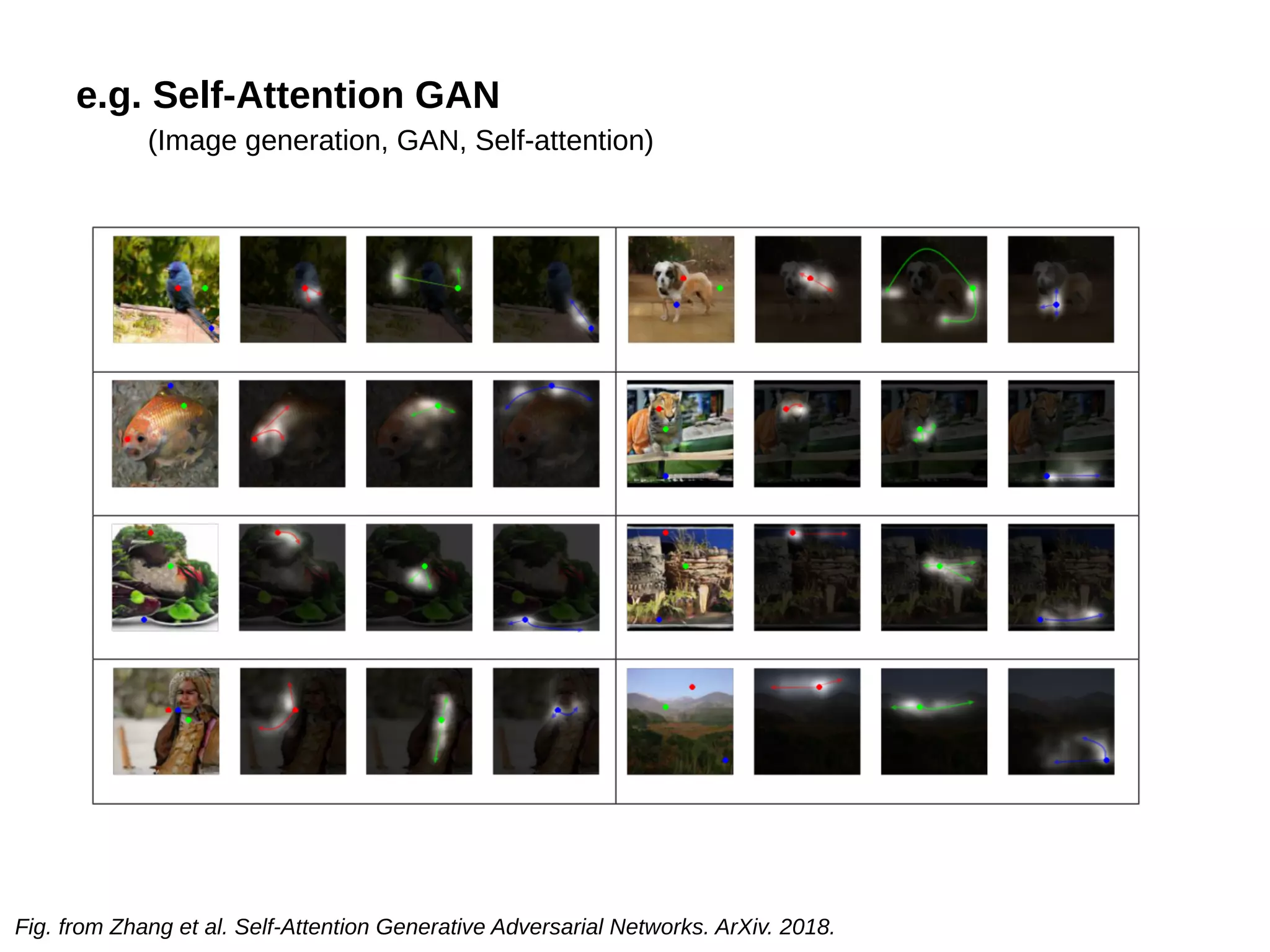

The document discusses attention as a cognitive and behavioral process: the ability to focus selectively on specific information while ignoring the rest. It then describes the attention mechanism and its use in neural networks, and introduces variants such as self-attention, in which every position in a sequence can attend directly to every other position, making it possible to model long-range dependencies. It also points to research papers and to applications in fields such as machine translation and image generation.
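To make the self-attention idea concrete, here is a minimal single-head sketch using the scaled dot-product formulation popularized by the Transformer. It assumes plain Python lists instead of a tensor library, no masking, and toy projection matrices; the function and parameter names (`self_attention`, `w_q`, `w_k`, `w_v`) are hypothetical and are not taken from the slides. Each output row is a weighted average of the value vectors, and the weights come from a softmax over query-key similarities, which is how one position can draw on information from any other position in the sequence.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # (n x k) @ (k x m) -> (n x m)
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability
    mx = max(row)
    exps = [math.exp(x - mx) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(
    x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix
) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention (hypothetical helper).

    x has one row per sequence position; w_q, w_k, w_v are assumed
    projection matrices. Returns (outputs, attention_weights).
    """
    q = matmul(x, w_q)  # queries
    k = matmul(x, w_k)  # keys
    v = matmul(x, w_v)  # values
    d_k = len(k[0])
    # Similarity of every query with every key, scaled by sqrt(d_k)
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    # Each row of weights is a probability distribution over positions
    weights = [softmax(row) for row in scaled]
    # Each output is the weighted average of the value vectors
    return matmul(weights, v), weights


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy "tokens"
    identity = [[1.0, 0.0], [0.0, 1.0]]
    out, weights = self_attention(x, identity, identity, identity)
    for row in weights:
        print([round(w, 3) for w in row])
```

Because every position scores against every other position in one step, the distance between two tokens does not limit how strongly they can interact; the trade-off is that the score matrix grows quadratically with sequence length.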