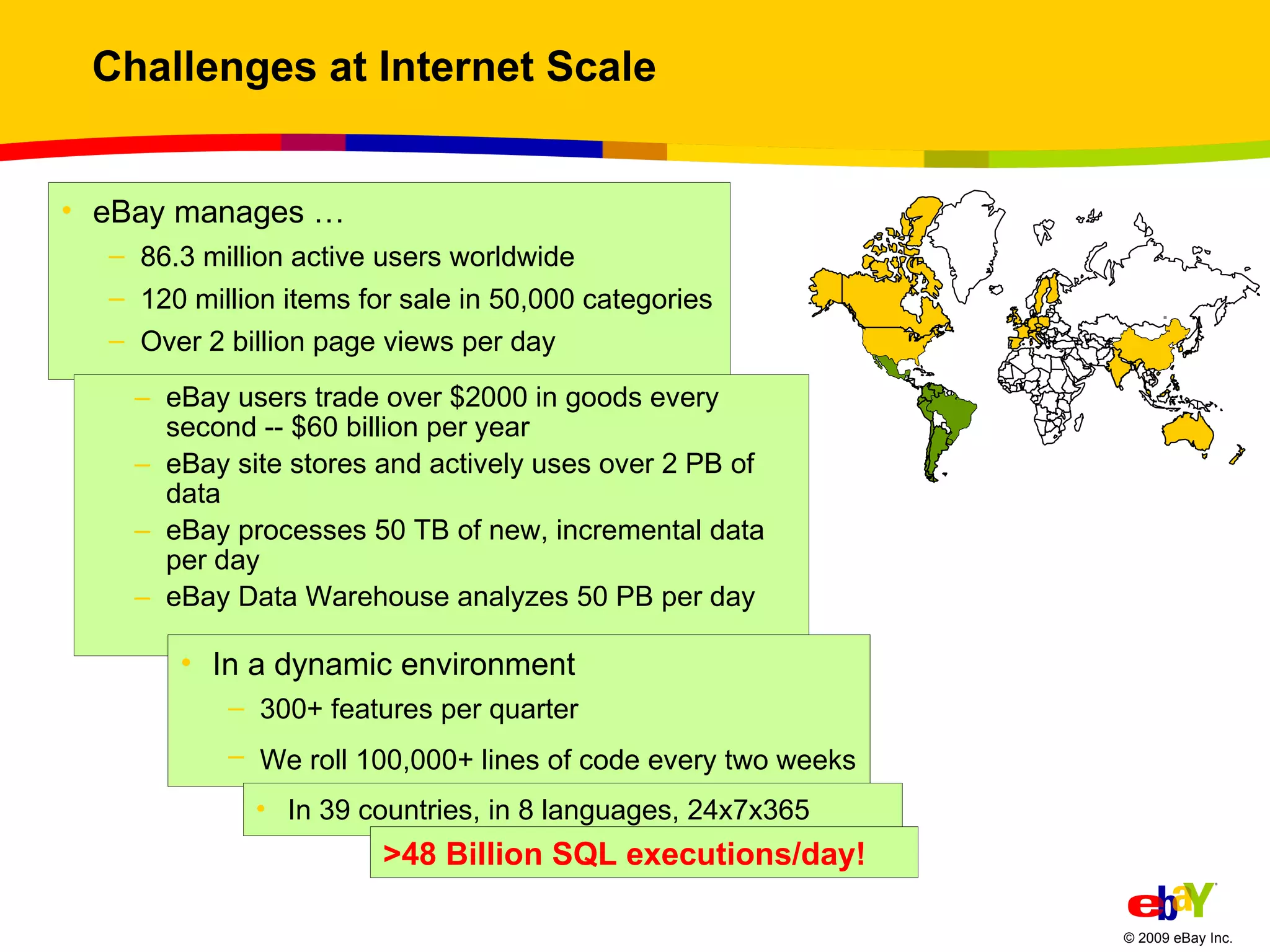

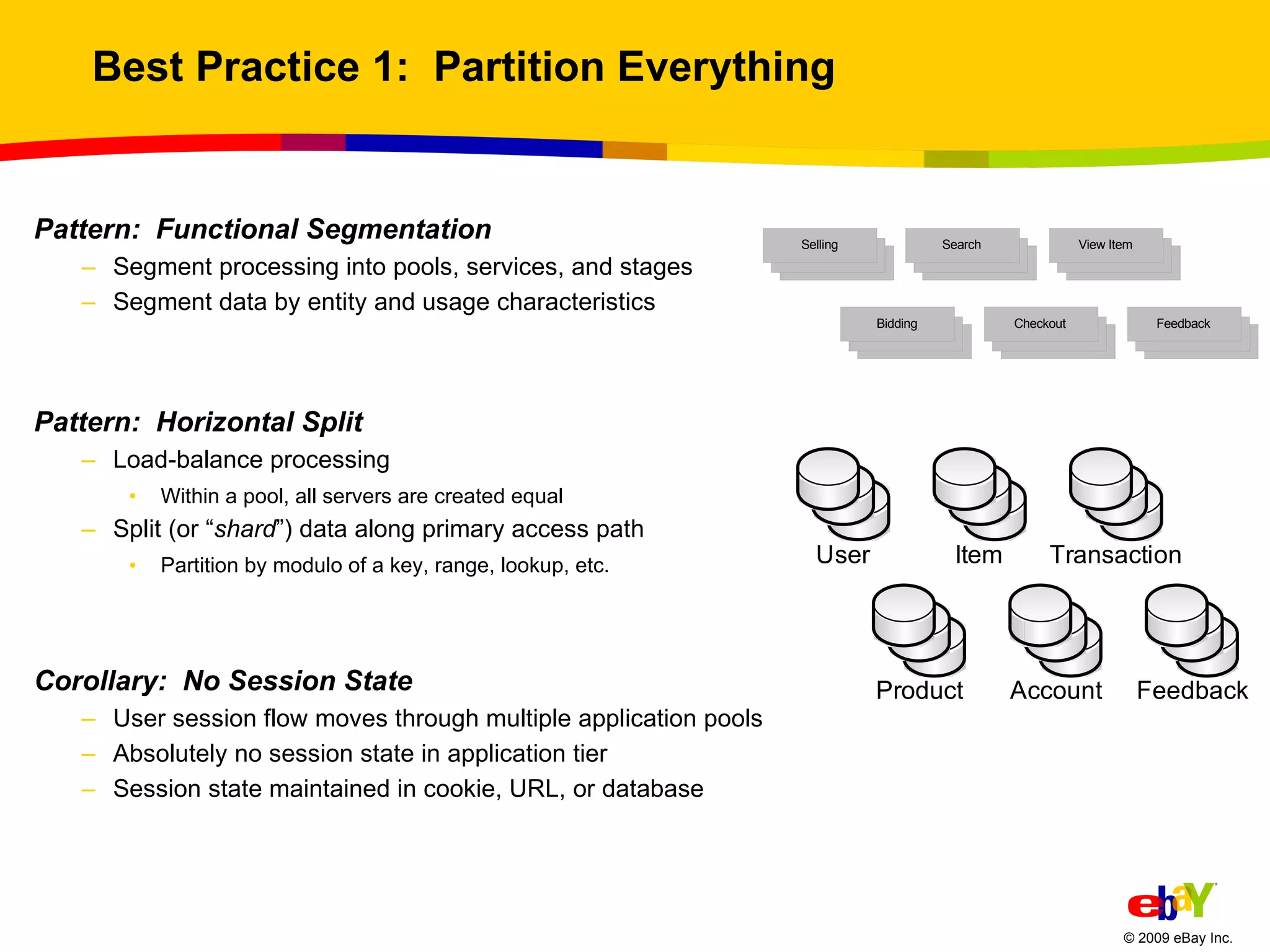

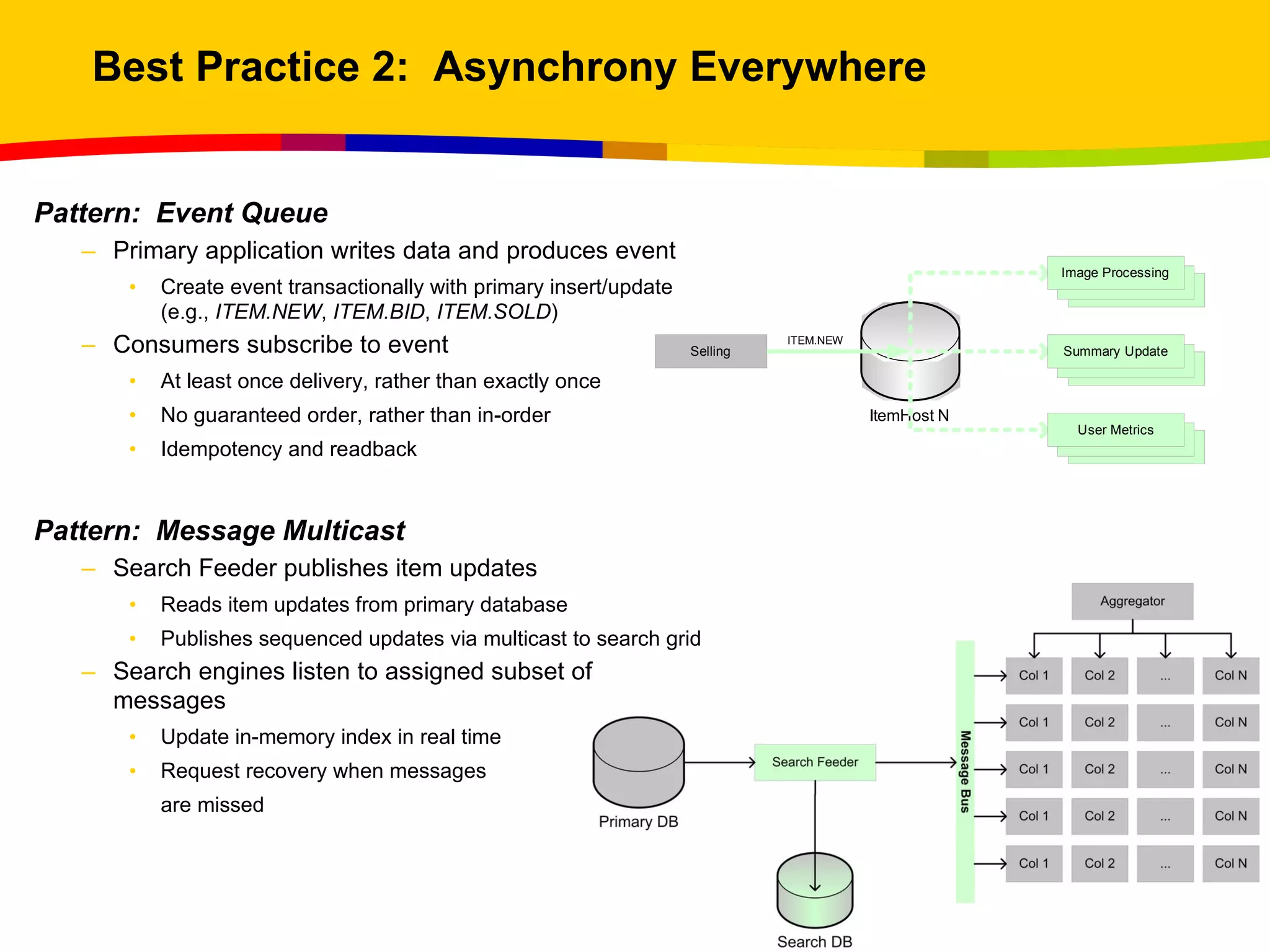

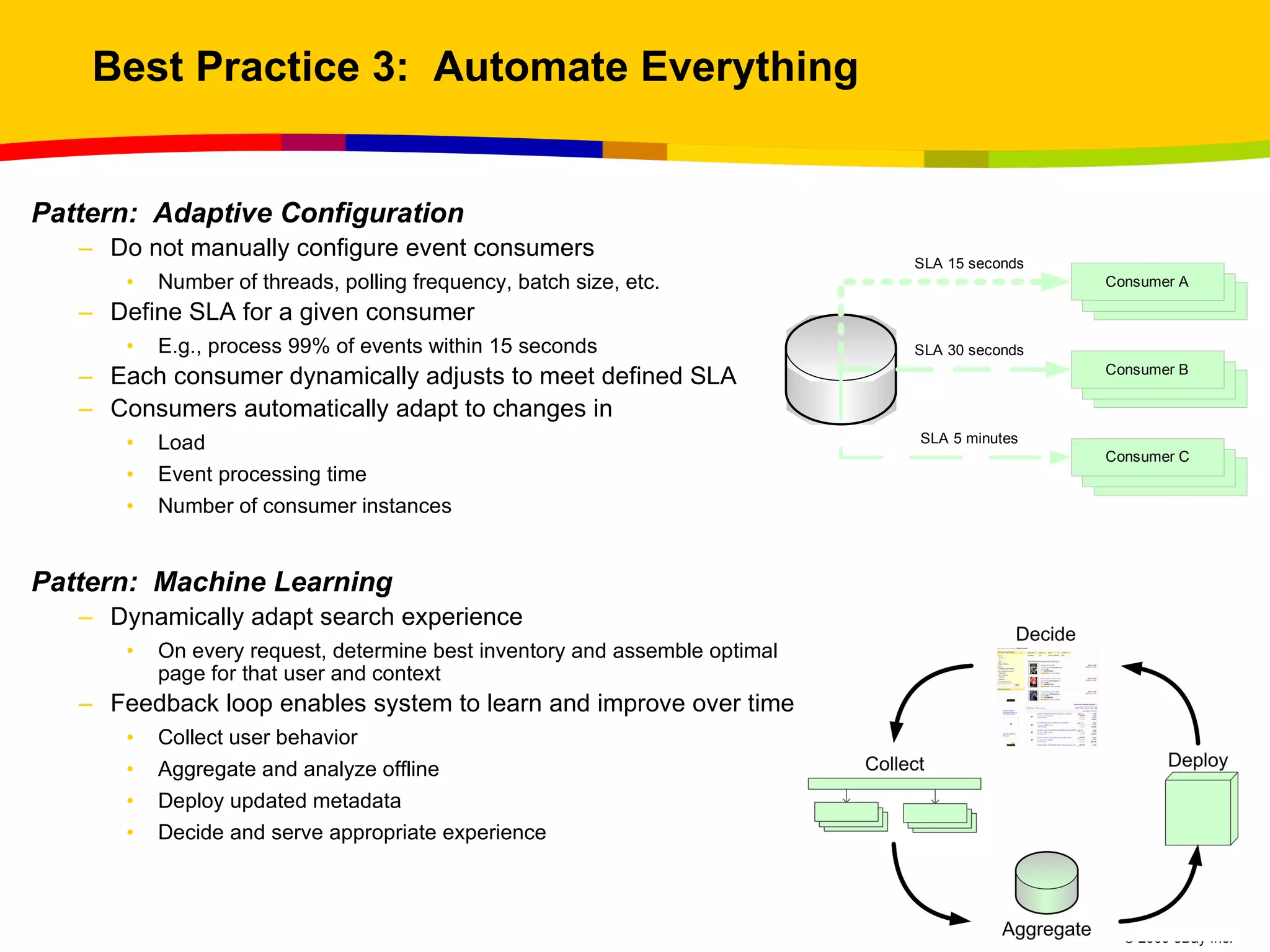

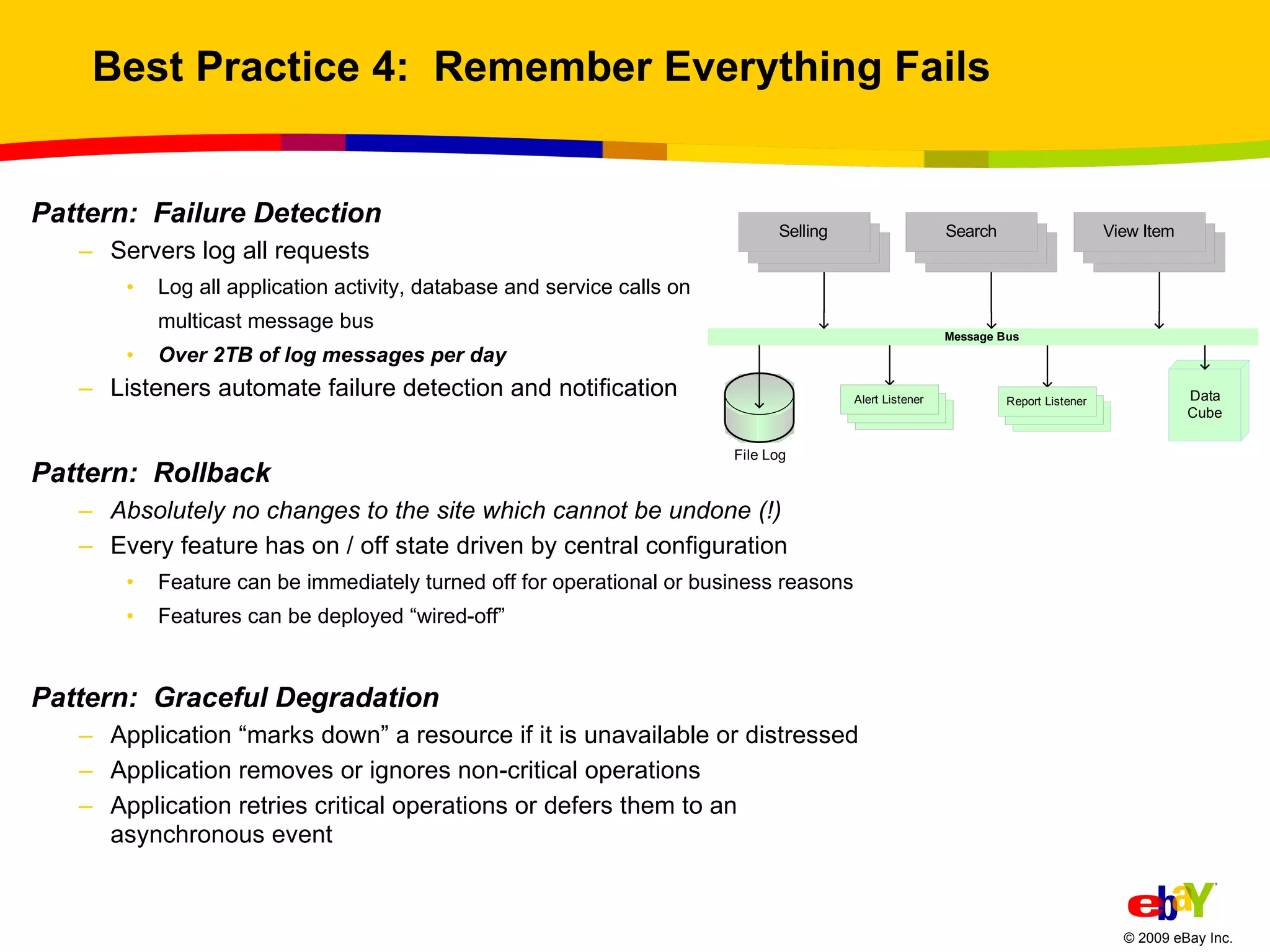

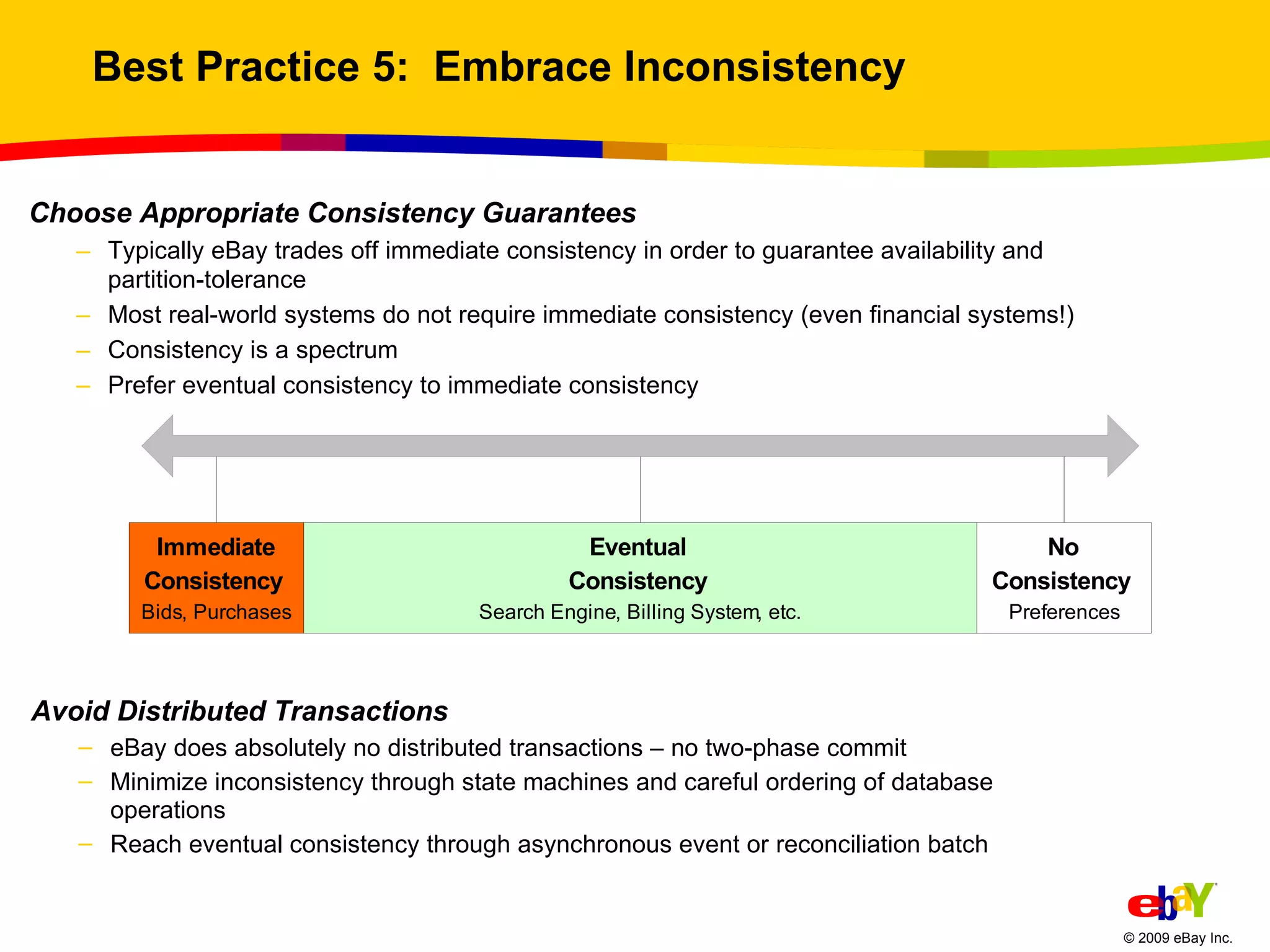

The document outlines best practices for scaling websites, drawing on lessons from eBay's architecture. It emphasizes partitioning, asynchronous processing, automation, failure-tolerant system design, and an understanding of consistency trade-offs. Together, these practices aim to improve scalability, availability, and manageability in high-demand internet environments.

![Questions? About the Presenter: Randy Shoup has been the primary architect for eBay's search infrastructure since 2004. Prior to eBay, Randy was Chief Architect and Technical Fellow at Tumbleweed Communications, and has also held a variety of software development and architecture roles at Oracle and Informatica.](https://image.slidesharecdn.com/bestpracticesforscalingwebsites-lessonsfromebaytokyo-110515181212-phpapp01/75/Best-Practices-for-Large-Scale-Websites-Lessons-from-eBay-16-2048.jpg)