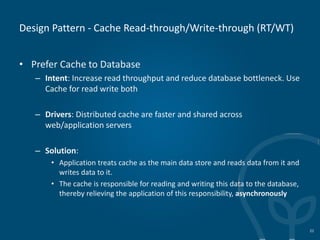

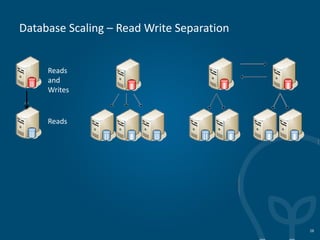

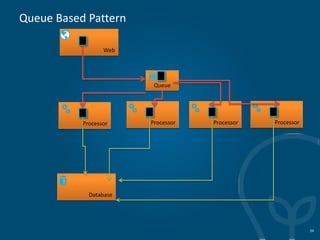

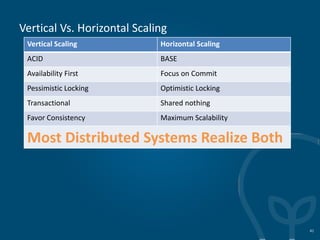

The document discusses principles of scalable web design. It defines scalability as the ability to support growing user traffic and data volume without degrading performance. Scalability is achieved through horizontal scaling (adding more machines or instances) rather than only vertical scaling (increasing the power of individual machines), although both approaches have tradeoffs. Key patterns for scalability include stateless design, caching, load balancing, database replication, sharding, asynchronous processing, queue-based architectures, and eventual consistency. The document emphasizes designing for scalability from the start through principles such as loose coupling, parallelization, and fault tolerance.
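To make three of these patterns concrete, here is a minimal Python sketch of stateless request handling, round-robin load balancing, and hash-based sharding. It is an illustration under stated assumptions, not code from the document: the names `shard_for`, `RoundRobinBalancer`, and `handle_request`, the server labels, and the choice of SHA-256 with a modulo are all hypothetical.

```python
import hashlib
from itertools import cycle


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index using a stable hash (illustrative scheme)."""
    if num_shards <= 0:
        raise ValueError("num_shards must be positive")
    # hashlib gives the same digest in every process, unlike the built-in
    # hash(), which is randomized per interpreter run for strings.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class RoundRobinBalancer:
    """Hand out servers in a fixed rotation (hypothetical helper)."""

    def __init__(self, servers):
        self._servers = list(servers)
        if not self._servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(self._servers)

    def pick(self) -> str:
        return next(self._rotation)


def handle_request(server: str, user_id: str, num_shards: int) -> dict:
    """Stateless handler: the result depends only on the arguments,
    so any server in the pool can serve any request."""
    return {"server": server, "shard": shard_for(user_id, num_shards)}


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    for user in ["alice", "bob", "carol", "dave"]:
        print(handle_request(balancer.pick(), user, num_shards=4))
```

Because the handler keeps no per-user state, adding a fourth application server only means adding one entry to the balancer's list. The modulo scheme is the simplest way to pick a shard, but changing `num_shards` remaps most keys; consistent hashing is a common alternative when shards are added or removed often.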