*Big Data Analytics with MariaDB ColumnStore* gives an overview of the MariaDB ColumnStore storage engine. Key points include:

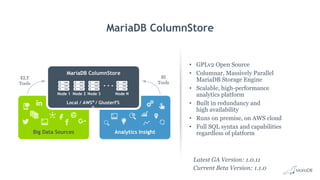

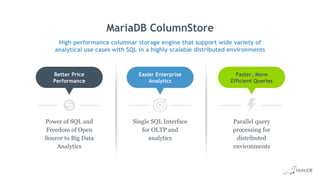

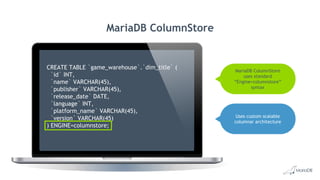

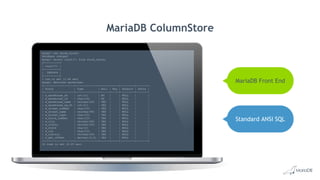

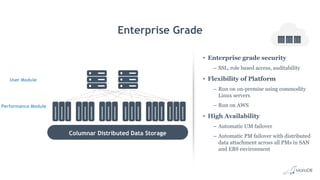

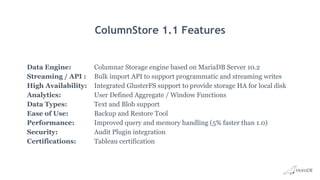

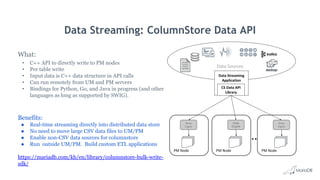

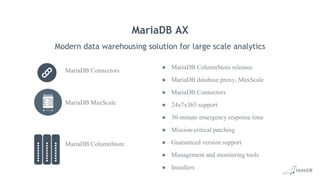

- MariaDB ColumnStore is an open-source columnar storage engine that provides high-performance analytics on large datasets in a scalable, distributed environment using standard SQL.

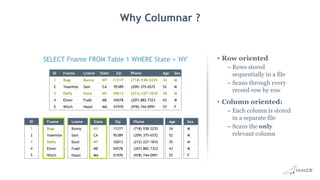

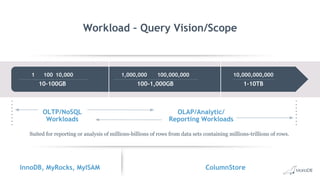

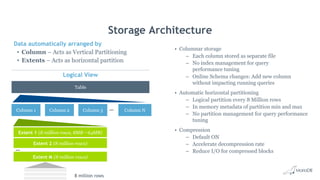

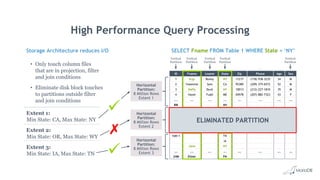

- Columnar storage organizes data by column rather than by row, which improves query performance because a query reads only the columns it references. ColumnStore supports workloads from terabytes to petabytes of data.
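
To make the row-versus-column distinction concrete, here is a minimal Python sketch. It is a toy in-memory model, not ColumnStore's actual on-disk format; the sample records and the `to_columns` helper are hypothetical and exist only for illustration.

```python
# Toy comparison of row-oriented and column-oriented layouts (illustrative only).
# The records and the helper below are made up for this example.
rows = [
    {"order_id": 1, "region": "EU", "amount": 120.0},
    {"order_id": 2, "region": "US", "amount": 80.0},
    {"order_id": 3, "region": "EU", "amount": 50.0},
]


def to_columns(records):
    """Pivot a list of row dicts into one list per column."""
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(rows)

# Row layout: every full record is visited even though only one field is needed.
total_from_rows = sum(record["amount"] for record in rows)

# Column layout: only the "amount" column is read; the others are never touched.
total_from_columns = sum(columns["amount"])
```

Both totals are the same, but the columnar version reads a single contiguous list. On wide tables with many columns, that difference is what reduces I/O for analytical queries.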

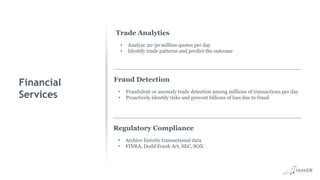

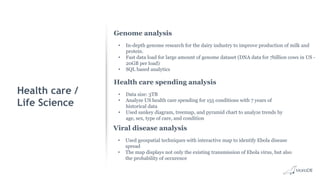

- Common use cases include data warehousing, financial services, healthcare, telecom, and any workload requiring analysis of millions to billions of rows.

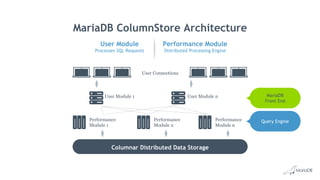

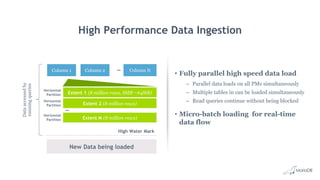

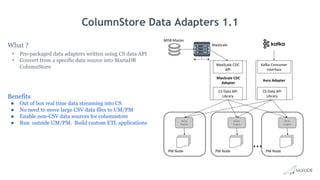

- The architecture uses a distributed query processing model: data is horizontally partitioned across nodes, and queries execute in parallel on those partitions, which allows the system to scale out.
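
The idea of horizontal partitioning with parallel execution can be sketched in a few lines of Python. This is a conceptual model only, assuming threads stand in for nodes and a simple round-robin split; the function names (`partition`, `partial_aggregate`, `distributed_average`) are hypothetical and do not correspond to any ColumnStore API.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(values, n_parts):
    """Split values into n_parts horizontal partitions (round-robin)."""
    return [values[i::n_parts] for i in range(n_parts)]


def partial_aggregate(chunk):
    """Work done by one hypothetical node: a local sum and a local row count."""
    return sum(chunk), len(chunk)


def distributed_average(values, n_nodes=4):
    """Compute an average by merging per-partition partial results."""
    chunks = partition(values, n_nodes)
    # Each partition is aggregated independently; threads stand in for nodes.
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(partial_aggregate, chunks))
    # Final step: combine the partial sums and counts into one answer.
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count if count else None


amounts = list(range(1, 101))
average = distributed_average(amounts)
```

Each worker returns a sum and a count rather than a local average, because averages of unevenly sized partitions cannot be combined directly. Merging partial aggregates in a final step is a common pattern in distributed query engines generally.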