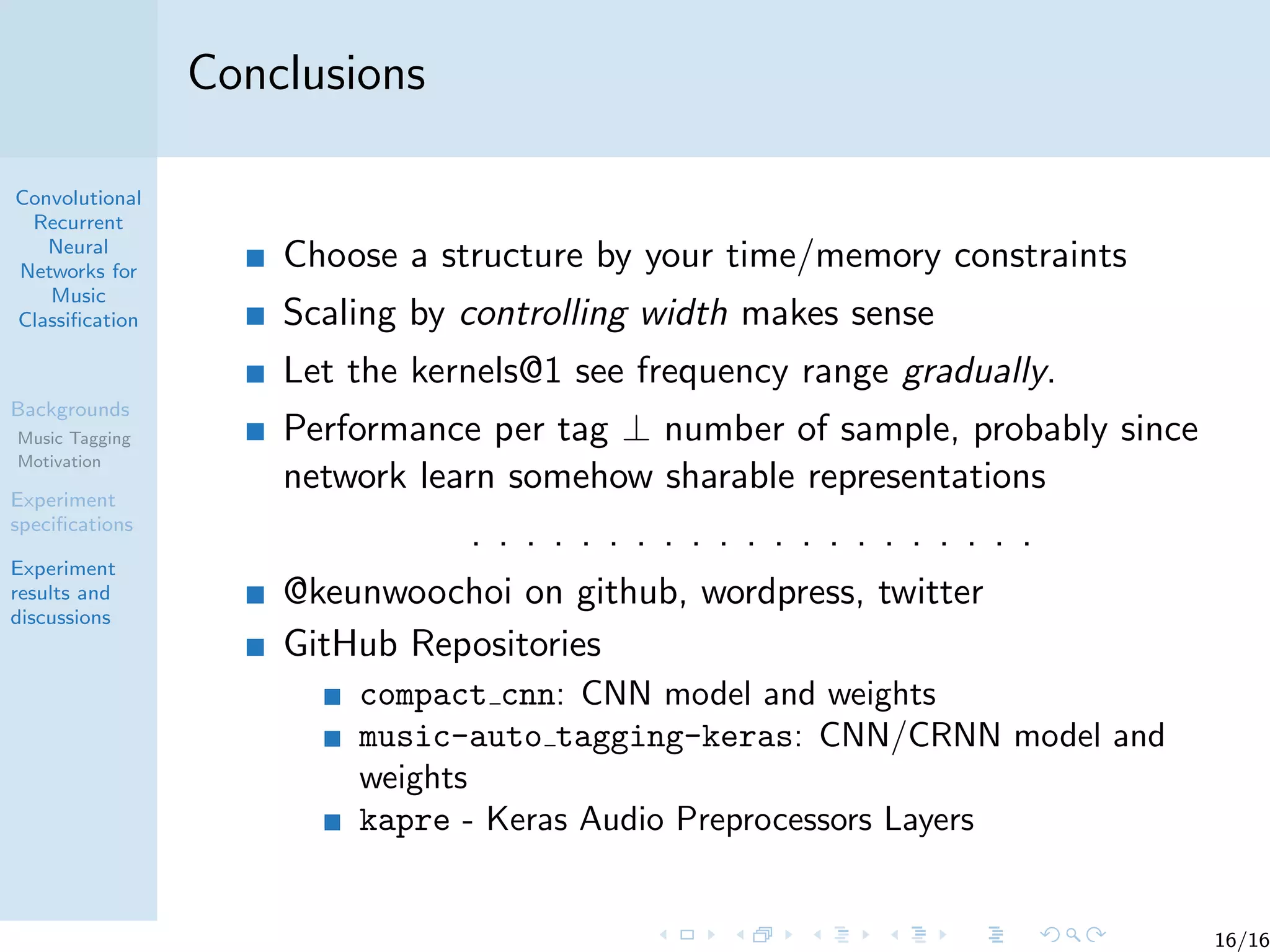

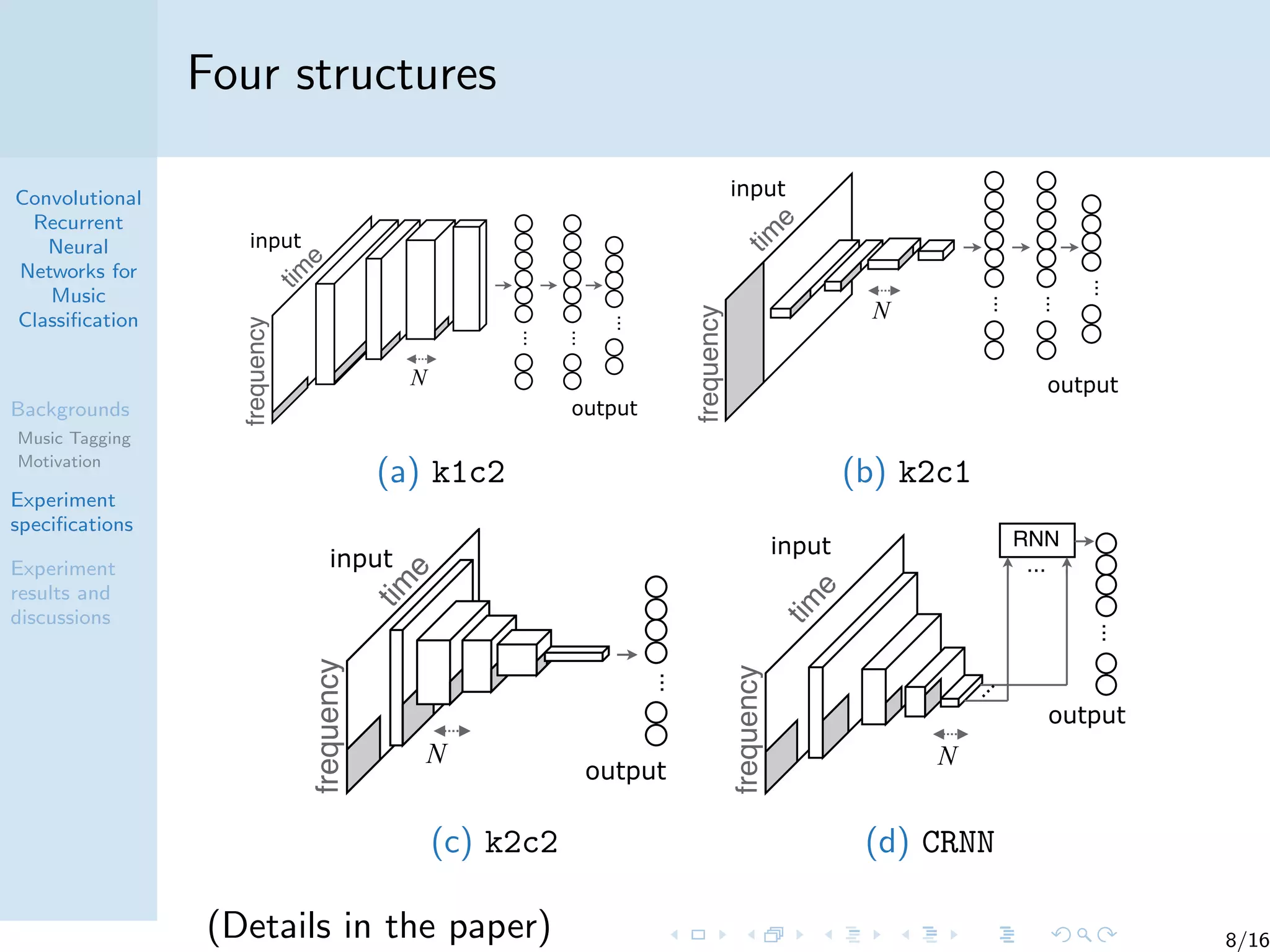

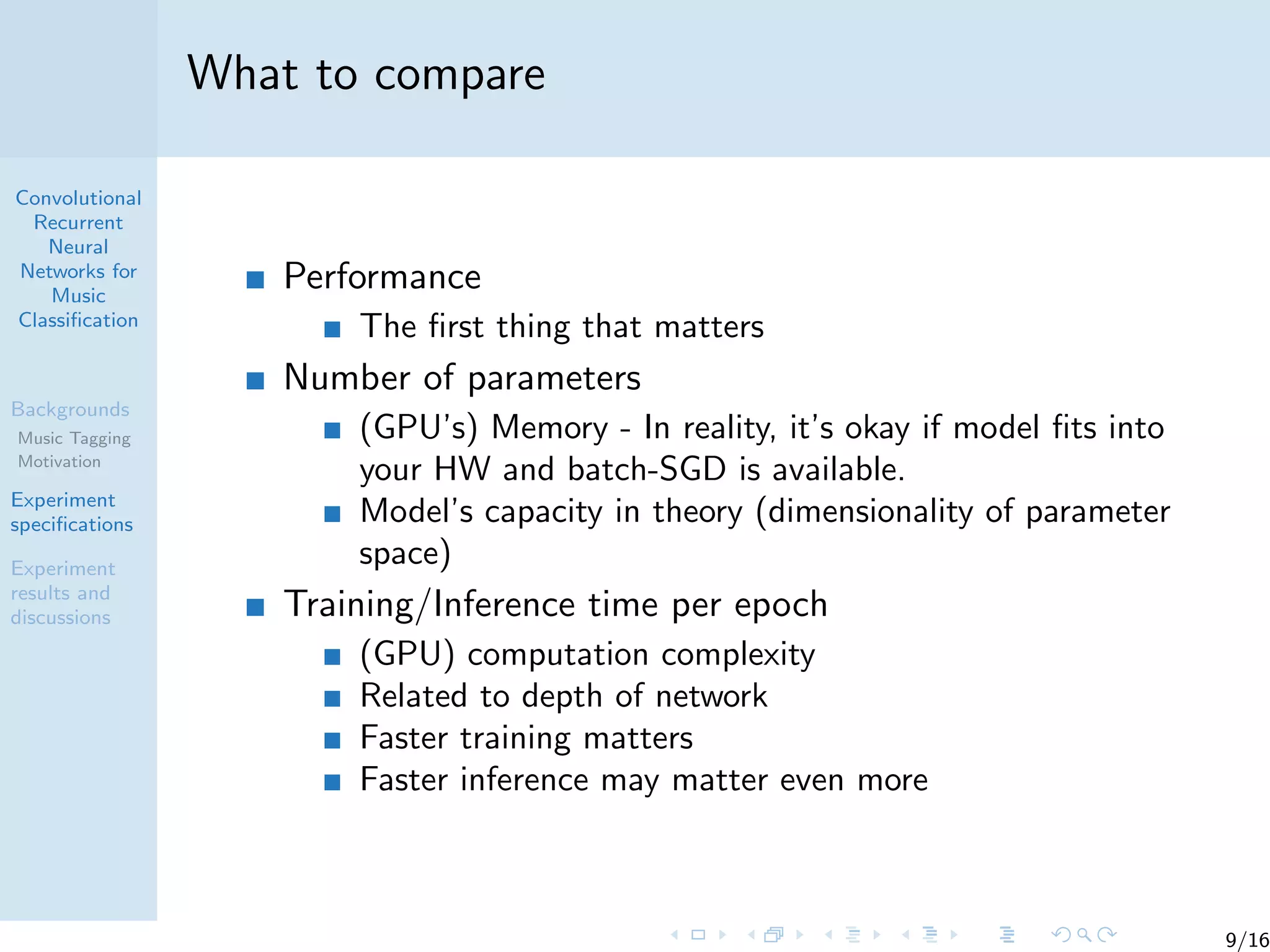

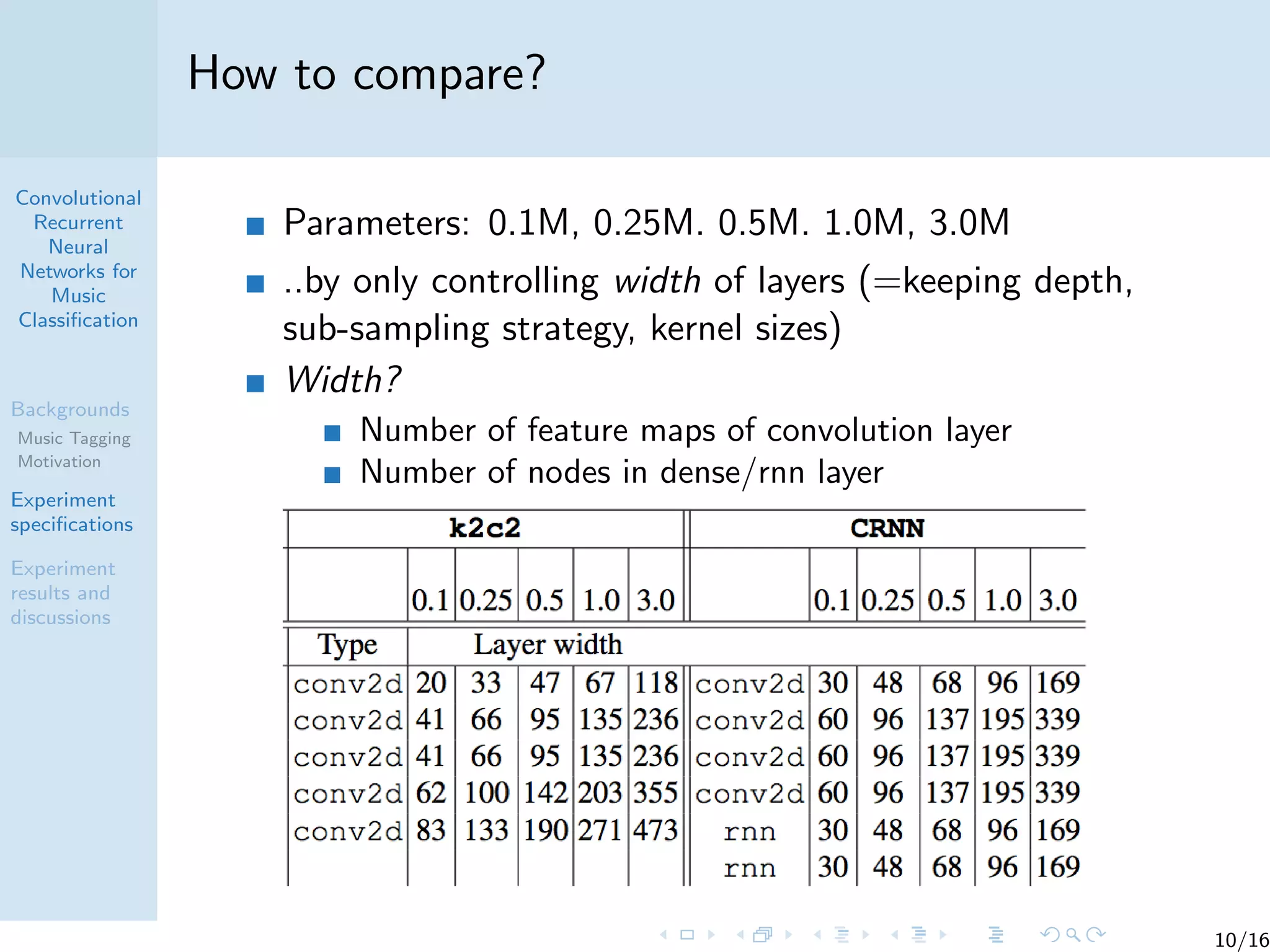

The document describes an experiment comparing convolutional and convolutional recurrent neural network architectures for music classification and tagging. It compares four models: two built on 1D convolutions (k1c2, k2c1), one built on 2D convolutions (k2c2), and a convolutional recurrent neural network (CRNN). CRNN and k2c2 achieved the best performance for their complexity, while k2c1 was the most computationally efficient. Performance varied across tags depending on factors such as the number of training examples and how difficult or ambiguous a tag is. The authors conclude that the best structure depends on the constraints, but CRNN generally performed best when it was feasible.

![Convolutional

Recurrent

Neural

Networks for

Music

Classification

Backgrounds

Music Tagging

Motivation

Experiment

specifications

Experiment

results and

discussions

Backgrounds

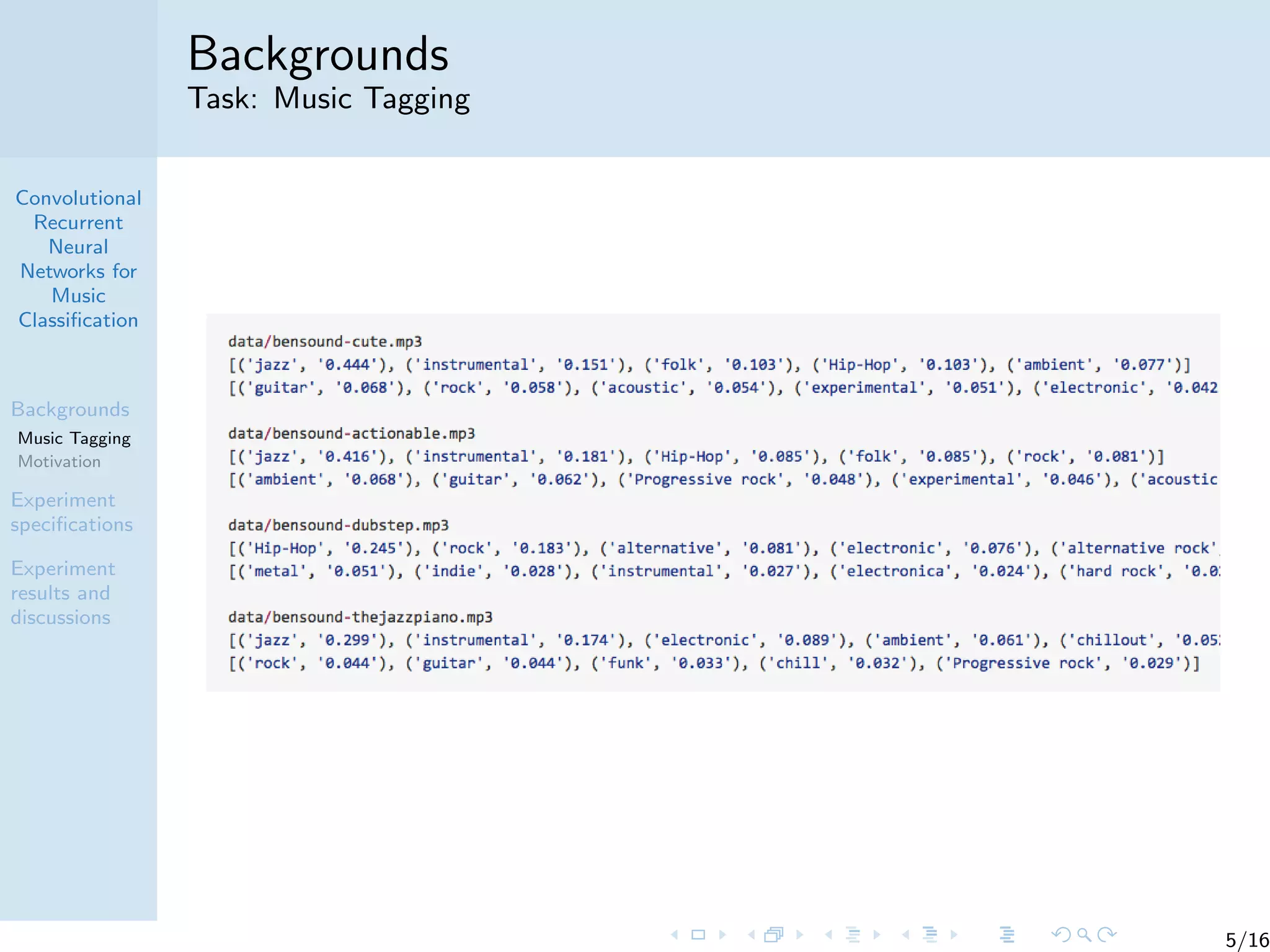

Task: Music Tagging

Tags

WHATEVER KEYWORDS that people think describes music

Multi-label nature

E.g. {rock, guitar, drive, 90’s}

Music tags include Genres (rock, pop, alternative, indie),

Instruments (vocalists, guitar, violin), Emotions (mellow,

chill), Activities (party, drive), Eras (00’s, 90’s, 80’s).

Collaboratively created (Last.fm ) → noisy and

ill-defined (of course)

Ill-defined but useful task - because it’s reality!

Evaluation: AUC, [0.0 - 1.0] but effectively: [0.5 - 1.0]

4/16](https://image.slidesharecdn.com/slide-171110112847/75/Convolutional-recurrent-neural-networks-for-music-classification-4-2048.jpg)
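The evaluation bullet can be made concrete with a small pure-Python sketch. It computes ROC-AUC for each tag using the rank-sum (Mann-Whitney) formulation, then averages over tags. The slide does not say how per-tag AUCs are aggregated, so the plain mean here is an assumption. The clips, tags and scores are made up for illustration.

```python
"""Toy per-tag ROC-AUC for multi-label music tagging (illustrative sketch)."""
import random


def roc_auc(labels, scores):
    """ROC-AUC via the rank-sum (Mann-Whitney U) formulation.

    Equals the probability that a randomly chosen positive clip scores
    higher than a randomly chosen negative clip; ties count as half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def mean_tag_auc(label_matrix, score_matrix):
    """Average AUC over tags (columns); each row is a clip that may carry several tags."""
    n_tags = len(label_matrix[0])
    aucs = []
    for t in range(n_tags):
        labels = [row[t] for row in label_matrix]
        scores = [row[t] for row in score_matrix]
        aucs.append(roc_auc(labels, scores))
    return sum(aucs) / n_tags


# Hypothetical data: 4 clips x 3 tags (rock, guitar, 90's); 1 = tag applies.
TAGS = ["rock", "guitar", "90's"]
labels = [
    [1, 1, 0],
    [1, 0, 1],
    [0, 1, 1],
    [0, 0, 0],
]
perfect = [[float(v) for v in row] for row in labels]  # every positive ranked first
inverted = [[1.0 - v for v in row] for row in perfect]  # every positive ranked last

print(mean_tag_auc(labels, perfect))   # 1.0
print(mean_tag_auc(labels, inverted))  # 0.0

# Random scores on a larger toy set land near 0.5, which is why AUC is
# "effectively" in [0.5, 1.0] for any model that beats chance.
rng = random.Random(0)
big_labels = [[rng.randint(0, 1) for _ in TAGS] for _ in range(1000)]
big_scores = [[rng.random() for _ in TAGS] for _ in range(1000)]
print(round(mean_tag_auc(big_labels, big_scores), 2))  # close to 0.5
```

The quadratic pairwise loop keeps the definition readable. For real tag sets, a sort-based rank computation or a library routine would be the practical choice.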

![Convolutional

Recurrent

Neural

Networks for

Music

Classification

Backgrounds

Music Tagging

Motivation

Experiment

specifications

Experiment

results and

discussions

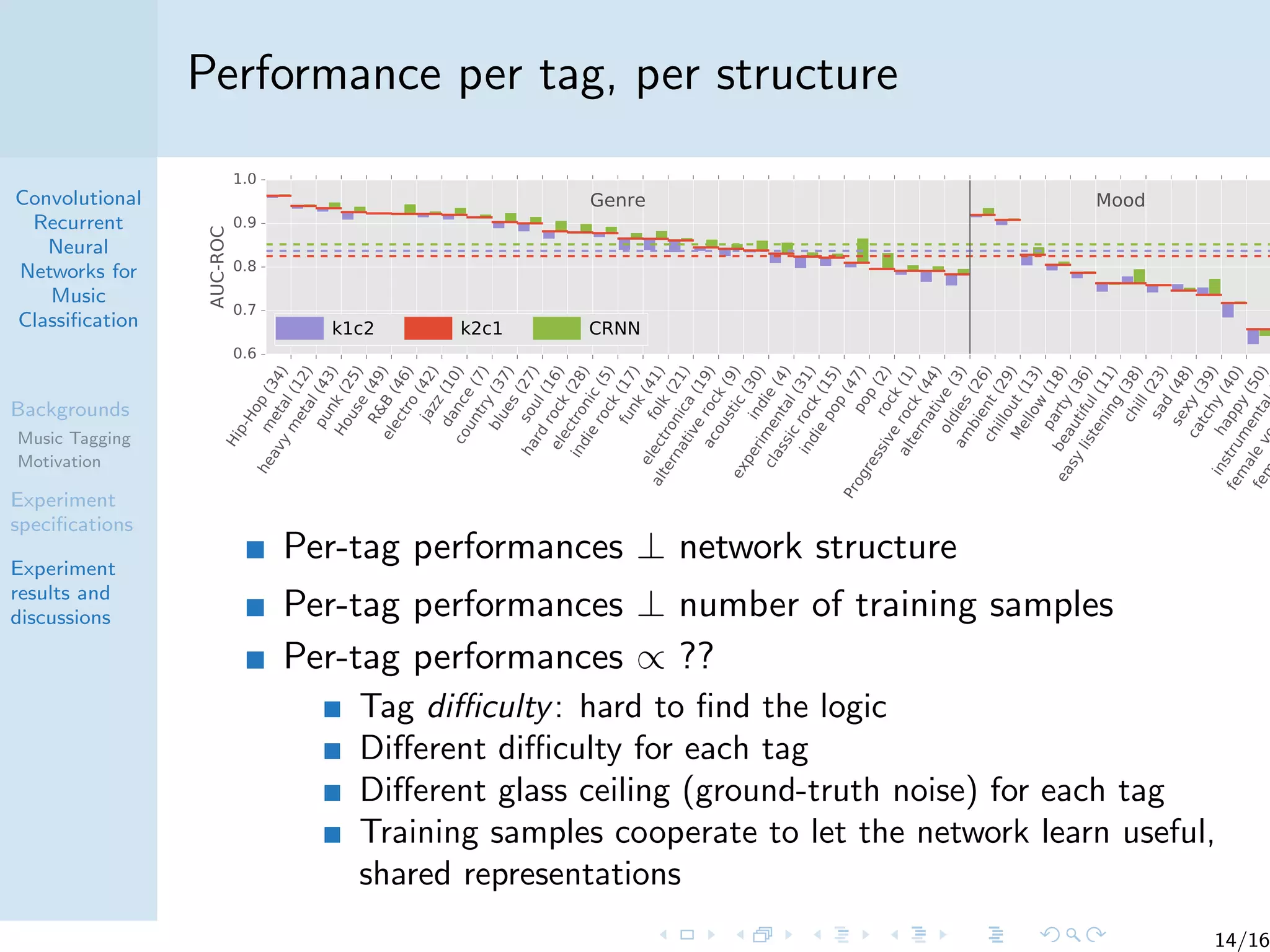

Performance vs number of parameters

(a) k1c2 (b) k2c1 (c) k2c2 (d) CRNN

0.1 0.25 0.5 1.0 3.0

Number of paramteres [x106]

0.80

0.81

0.82

0.83

0.84

0.85

0.86

0.87AUC-ROC

k1c2

k2c1

k2c2

CRNN

SOTA

k2c2 and CRNN work well

1D conv (k2c1, k1c2): not too good

Why? (I don’t know exactly)

Difference: Flexibility. k2c2 allows small invariances in

every conv layer, for both time/frequency axis, while k2c1

sees the whole frequency range at once, and therefore

don’t allow any distortion invariance.

12/16](https://image.slidesharecdn.com/slide-171110112847/75/Convolutional-recurrent-neural-networks-for-music-classification-12-2048.jpg)
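The "flexibility" explanation can be illustrated with a toy frequency-shift experiment. A kernel that spans the whole frequency axis at once, as in k2c1's first layer, produces a single number per frame. A short kernel slid along frequency and followed by max-pooling can still find a pattern that moved up by one bin.

Everything here is a hypothetical toy: the 8-bin frame, the hand-set kernels, and the pooling are not the models or weights from the experiment. The sketch also pools over the whole frequency axis to keep it small. In a real k2c2-style network, each pooling window is small and tolerates only small shifts, and stacking layers accumulates that tolerance.

```python
"""Toy contrast between a full-frequency kernel (k2c1-style first layer)
and a short kernel plus max-pooling over frequency (k2c2-style).
Illustrative only; not the layers or weights used in the experiments."""

N_FREQ = 8  # hypothetical number of frequency bins


def full_height_response(column, kernel):
    """Dot product of one spectrogram frame with a kernel spanning every
    frequency bin at once; no frequency axis is left to slide along."""
    return sum(x * w for x, w in zip(column, kernel))


def small_kernel_max_response(column, kernel):
    """Slide a short kernel along frequency ('valid' positions only), then
    max-pool over all positions (global pooling, to keep the toy small)."""
    k = len(kernel)
    responses = [
        sum(column[i + j] * kernel[j] for j in range(k))
        for i in range(len(column) - k + 1)
    ]
    return max(responses)


def frame_with_peaks(bins):
    """One spectrogram frame (a column) with energy 1.0 at the given bins."""
    return [1.0 if f in bins else 0.0 for f in range(N_FREQ)]


# A two-partial pattern at bins 1 and 3, and the same pattern shifted up one bin.
original = frame_with_peaks({1, 3})
shifted = frame_with_peaks({2, 4})

full_kernel = original[:]         # tuned to the exact original position
short_kernel = [1.0, 0.0, 1.0]    # tuned to "two peaks two bins apart", anywhere

print(full_height_response(original, full_kernel))        # 2.0
print(full_height_response(shifted, full_kernel))         # 0.0
print(small_kernel_max_response(original, short_kernel))  # 2.0
print(small_kernel_max_response(shifted, short_kernel))   # 2.0
```

The full-height kernel loses the pattern entirely after a one-bin shift. The short kernel with pooling responds identically to both frames, which is the kind of frequency-distortion tolerance the slide attributes to k2c2.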

![Convolutional

Recurrent

Neural

Networks for

Music

Classification

Backgrounds

Music Tagging

Motivation

Experiment

specifications

Experiment

results and

discussions

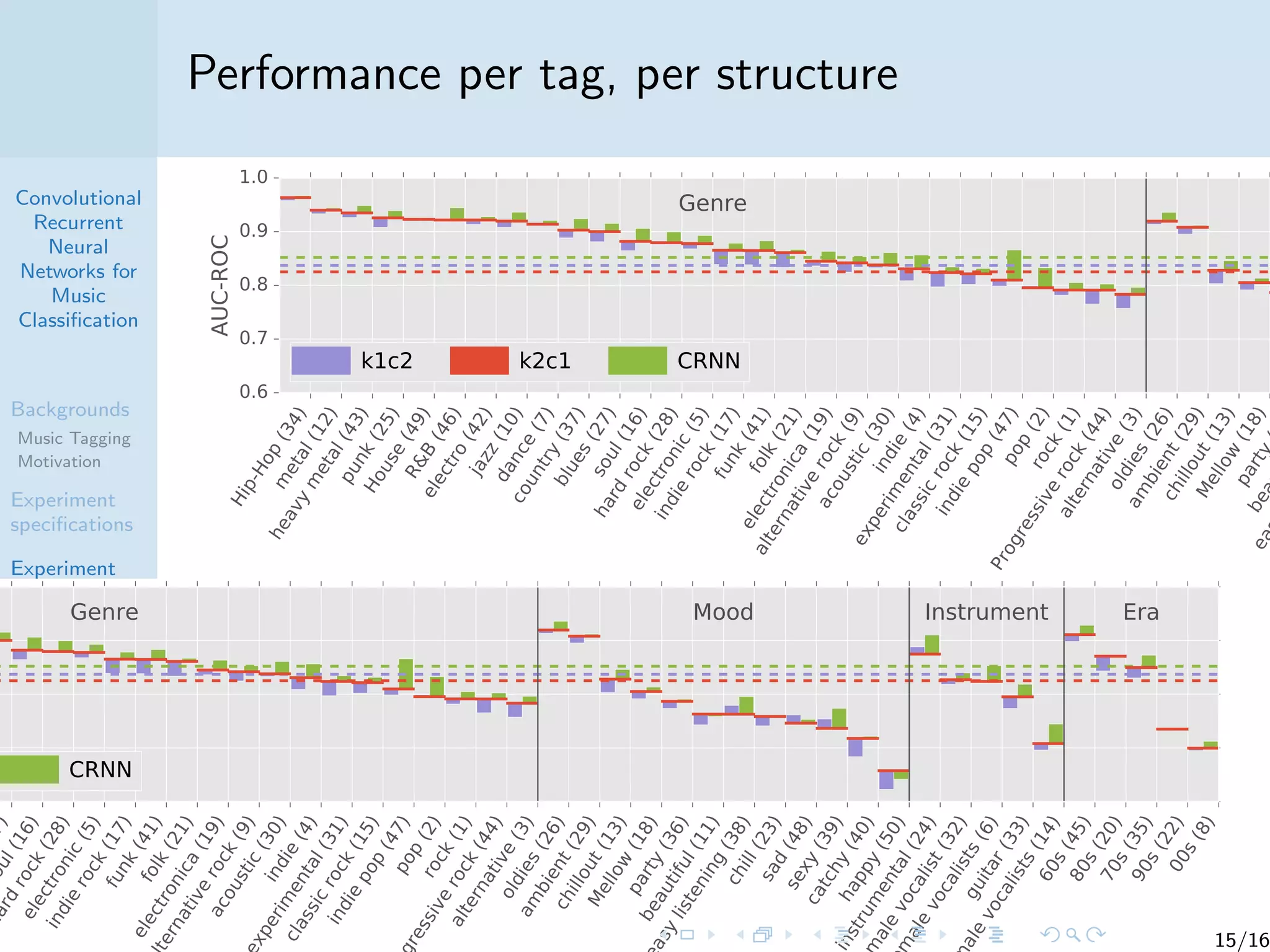

Performance vs training/inference time

(a) k1c2 (b) k2c1 (c) k2c2 (d) CRNN

9 20 50 100 200 300 400

training time per epoch [s]

0.80

0.81

0.82

0.83

0.84

0.85

0.86

0.87

AUC-ROC

k1c2

k2c1

k2c2

CRNN

1D convolution is SO FAST – the big kernels at first layer

make life easier

time consumption ∝ feature map size

time consumption ∝ 1/depth

k2c2 and CRNN are similar in different time ranges

CRNN for the best performance, k2c2 for time-efficient

performance (or make it wider/shallower) 13/16](https://image.slidesharecdn.com/slide-171110112847/75/Convolutional-recurrent-neural-networks-for-music-classification-13-2048.jpg)
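The claims "time consumption ∝ feature map size" and "the big kernels at first layer make life easier" can be checked with a back-of-the-envelope count of multiply-accumulate operations (MACs). MAC count is used here as a rough proxy for time; real timings also depend on hardware and implementation.

The sketch rests on several assumptions that are not taken from the slides:

- a 96-bin × 1366-frame input;
- 32 filters per layer;
- two layers per model;
- stride-1 "valid" convolutions;
- no pooling.

```python
"""Rough multiply-accumulate (MAC) counts for stride-1 'valid' convolutions,
showing how a first-layer kernel spanning the whole frequency axis shrinks
the feature map and the cost of later layers. Pooling and padding are
ignored; all sizes below are illustrative assumptions."""

from dataclasses import dataclass


@dataclass
class FeatureMap:
    freq: int      # height (frequency bins)
    time: int      # width (frames)
    channels: int  # number of feature maps


def conv_valid(fm: FeatureMap, k_freq: int, k_time: int, out_channels: int):
    """Return (output feature map, MACs) for a stride-1, 'valid' convolution."""
    out = FeatureMap(fm.freq - k_freq + 1, fm.time - k_time + 1, out_channels)
    # One MAC per kernel weight, per input channel, per output position and channel.
    macs = out.freq * out.time * k_freq * k_time * fm.channels * out_channels
    return out, macs


# Assumed input: one 96-bin x 1366-frame mel-spectrogram.
x = FeatureMap(freq=96, time=1366, channels=1)

# 1D-convolution style: the first kernel covers all 96 bins, the next is 1 x 3.
fm1, macs1_first = conv_valid(x, k_freq=96, k_time=4, out_channels=32)
fm1, macs1_second = conv_valid(fm1, k_freq=1, k_time=3, out_channels=32)

# 2D-convolution style: 3 x 3 kernels keep a tall feature map.
fm2, macs2_first = conv_valid(x, k_freq=3, k_time=3, out_channels=32)
fm2, macs2_second = conv_valid(fm2, k_freq=3, k_time=3, out_channels=32)

print(f"1D style: {macs1_first + macs1_second:,} MACs, final map {fm1}")
print(f"2D style: {macs2_first + macs2_second:,} MACs, final map {fm2}")
```

After the full-height first kernel, the 1D-style feature map is only one bin tall. Its second layer therefore costs a few million MACs, while the 2D-style second layer costs over a billion under these assumptions. Pooling in a real network would shrink the 2D-style maps and narrow the gap, but the dependence on feature-map size remains.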