The document discusses current practices in software testing, specifically around the use of mock objects. It presents results from several studies:

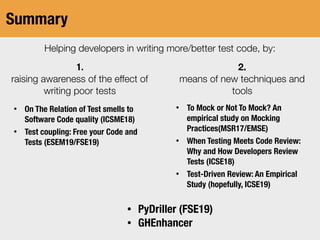

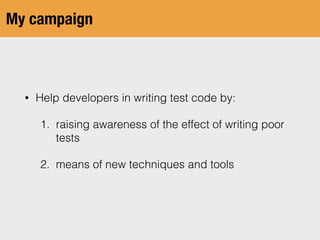

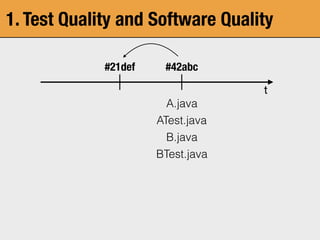

1. A study of over 2,000 test dependencies in open source and proprietary projects found that the use of mocks depends on the class's responsibilities and architecture. Databases and external dependencies are often mocked, while domain objects are less likely to be mocked.

2. A survey of over 100 professionals found that mocks are mostly introduced when test classes are first created and tend to remain throughout the test class's lifetime. Mocks are occasionally removed in response to changes.

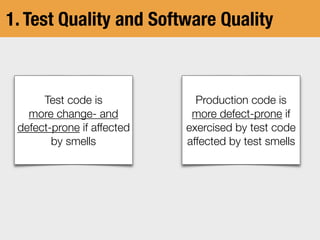

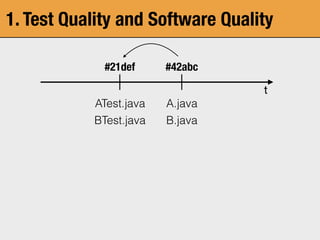

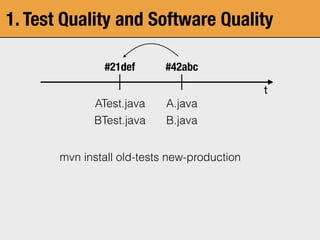

3. An analysis of code review practices for tests found that tests receive fewer reviews than production code. Developers see value in reviewing

![1. Test Quality and Software Quality

On The Relation of Test Smells to Software Code Quality

Davide Spadini,∗‡ Fabio Palomba,§ Andy Zaidman,∗ Magiel Bruntink,‡ Alberto Bacchelli§

‡Software Improvement Group, ∗Delft University of Technology, §University of Zurich

∗{d.spadini, a.e.zaidman}@tudelft.nl, ‡m.bruntink@sig.eu, §{palomba, bacchelli}@ifi.uzh.ch

Abstract—Test smells are sub-optimal design choices in the implementation of test code. As reported by recent studies, their presence might not only negatively affect the comprehension of test suites but can also lead to test cases being less effective in finding bugs in production code. Although significant steps toward understanding test smells, there is still a notable absence of studies assessing their association with software quality.

In this paper, we investigate the relationship between the presence of test smells and the change- and defect-proneness of test code, as well as the defect-proneness of the tested production code. To this aim, we collect data on 221 releases of ten software systems and we analyze more than a million test cases to investigate the association of six test smells and their co-occurrence with software quality. Key results of our study include: (i) tests with smells are more change- and defect-prone, (ii) ‘Indirect Testing’, ‘Eager Test’, and ‘Assertion Roulette’ are the most significant smells for change-proneness and, (iii) production code is more defect-prone when tested by smelly tests.

I. INTRODUCTION

Automated testing (hereafter referred to as just testing)

found evidence of a negative impact of test smells on both comprehensibility and maintainability of test code [7].

Although the study by Bavota et al. [7] made a first, necessary step toward the understanding of maintainability aspects of test smells, our empirical knowledge on whether and how test smells are associated with software quality aspects is still limited. Indeed, van Deursen et al. [74] based their definition of test smells on their anecdotal experience, without extensive evidence on whether and how such smells are negatively associated with the overall system quality.

To fill this gap, in this paper we quantitatively investigate the relationship between the presence of smells in test methods and the change- and defect-proneness of both these test methods and the production code they intend to test. Similar to several previous studies on software quality [24], [62], we employ the proxy metrics change-proneness (i.e., number of times a method changes between two releases) and defect-proneness (i.e., number of defects the method had between two](https://image.slidesharecdn.com/presentation-190527193500/85/Practices-and-Tools-for-Better-Software-Testing-9-320.jpg)
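
The change-proneness proxy described on this slide (the number of times a method changes between two releases) can be illustrated with a minimal sketch. The input format, the function names, and the toy data below are assumptions made for illustration; they are not taken from the paper's tooling, and the numbers are not study results.

```python
from collections import Counter
from statistics import mean


def change_proneness(change_events):
    """Count how many times each method changed in one release interval.

    `change_events` is a hypothetical input: one method identifier per
    recorded change between two releases.
    """
    return Counter(change_events)


def mean_by_group(scores, methods, smelly):
    """Return (mean for smelly methods, mean for the remaining methods).

    Assumes both groups are non-empty; `statistics.mean` raises otherwise.
    """
    smelly_scores = [scores[m] for m in methods if m in smelly]
    clean_scores = [scores[m] for m in methods if m not in smelly]
    return mean(smelly_scores), mean(clean_scores)


# Toy data, invented for this example only.
methods = ["A.t1", "A.t2", "B.t1"]
events = ["A.t1", "A.t1", "A.t1", "B.t1"]
scores = change_proneness(events)
result = mean_by_group(scores, methods, smelly={"A.t1"})
print(result)
```

A `Counter` returns 0 for methods that never changed, so unchanged methods still contribute to the group means.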

![2. Current Practices in Testing

To Mock or Not To Mock? An Empirical Study on Mocking Practices

Davide Spadini∗†, Maurício Aniche†, Magiel Bruntink∗, Alberto Bacchelli†

∗Software Improvement Group, {d.spadini, m.bruntink}@sig.eu

†Delft University of Technology, {d.spadini, m.f.aniche, a.bacchelli}@tudelft.nl

Abstract—When writing automated unit tests, developers often deal with software artifacts that have several dependencies. In these cases, one has the possibility of either instantiating the dependencies or using mock objects to simulate the dependencies’ expected behavior. Even though recent quantitative studies showed that mock objects are widely used in OSS projects, scientific knowledge is still lacking on how and why practitioners use mocks. Such a knowledge is fundamental to guide further research on this widespread practice and inform the design of tools and processes to improve it.

The objective of this paper is to increase our understanding of which test dependencies developers (do not) mock and why, as well as what challenges developers face with this practice. To this aim, we create MOCKEXTRACTOR, a tool to mine the usage of mock objects in testing code and employ it to collect data from three OSS projects and one industrial system. Sampling from this data, we manually analyze how more than 2,000 test dependencies are treated. Subsequently, we discuss our findings with developers from these systems, identifying practices, rationales, and challenges. These results are supported by a structured survey with more than 100 professionals. The study reveals that the usage of mocks is highly dependent on the responsibility and the architectural concern of the class.

To support the simulation of dependencies, mocking frameworks have been developed (e.g., Mockito [7], EasyMock [2], and JMock [3] for Java, Mock [5] and Mocker [6] for Python), which provide APIs for creating mock (i.e., simulated) objects, setting return values of methods in the mock objects, and checking interactions between the component under test and the mock objects. Past research has reported that software projects are using mocking frameworks widely [21] [32] and has provided initial evidence that using a mock object can ease the process of unit testing [29].

However, empirical knowledge is still lacking on how and why practitioners use mocks. To scientifically evaluate mocking and its effects, as well as to help practitioners in their software testing phase, one has to first understand and quantify developers’ practices and perspectives. In fact, this allows both to focus future research on the most relevant aspects of mocking and on real developers’ needs, as well as to effectively guide the design of tools and processes.

To fill this gap of knowledge, the goal of this paper is to](https://image.slidesharecdn.com/presentation-190527193500/85/Practices-and-Tools-for-Better-Software-Testing-17-320.jpg)
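
The three capabilities the slide attributes to mocking frameworks (creating a mock, setting return values, and checking interactions) can be sketched with Python's standard `unittest.mock` module. The `Invoice` and `BillingService` classes and the `find_invoices` method are hypothetical names invented for this example. The choice to mock the database-like repository while instantiating the domain object directly mirrors the tendency reported in the study, but it is only an illustration, not a rule from the paper.

```python
from unittest.mock import Mock


class Invoice:
    """Hypothetical domain object; instantiated directly, not mocked."""

    def __init__(self, customer_id, amount):
        self.customer_id = customer_id
        self.amount = amount


class BillingService:
    """Hypothetical class under test; depends on a database-backed repository."""

    def __init__(self, repository):
        self.repository = repository

    def total_due(self, customer_id):
        invoices = self.repository.find_invoices(customer_id)
        return sum(invoice.amount for invoice in invoices)


def test_total_due_sums_invoices():
    # 1. Create the mock that stands in for the database dependency.
    repository = Mock()
    # 2. Set the return value of the mocked method.
    repository.find_invoices.return_value = [Invoice(42, 10), Invoice(42, 15)]

    service = BillingService(repository)
    assert service.total_due(42) == 25

    # 3. Check the interaction between the class under test and the mock.
    repository.find_invoices.assert_called_once_with(42)


test_total_due_sums_invoices()
```

Because the repository is simulated, the test runs without a database; the trade-off is that it no longer exercises the real query logic.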

![2. Current Practices in Testing

To Mock or Not To Mock?

An Empirical Study on Mocking Practices

Chart (bar values not recoverable from the extraction): responses on a five-point scale (Never, Almost never, Occasionally/Sometimes, Almost always, Always) for five dependency types: Web service, External dependency, Database, Domain object, Native library.](https://image.slidesharecdn.com/presentation-190527193500/85/Practices-and-Tools-for-Better-Software-Testing-18-320.jpg)

![2. Current Practices in Testing

When Testing Meets Code Review: Why and How Developers Review Tests

Davide Spadini (Delft University of Technology; Software Improvement Group; Delft, The Netherlands; d.spadini@sig.eu)

Maurício Aniche (Delft University of Technology; Delft, The Netherlands; m.f.aniche@tudelft.nl)

Margaret-Anne Storey (University of Victoria; Victoria, BC, Canada; mstorey@uvic.ca)

Magiel Bruntink (Software Improvement Group; Amsterdam, The Netherlands; m.bruntink@sig.eu)

Alberto Bacchelli (University of Zurich; Zurich, Switzerland; bacchelli@ifi.uzh.ch)

ABSTRACT

Automated testing is considered an essential process for ensuring software quality. However, writing and maintaining high-quality test code is challenging and frequently considered of secondary importance. For production code, many open source and industrial software projects employ code review, a well-established software quality practice, but the question remains whether and how code review is also used for ensuring the quality of test code. The aim of this research is to answer this question and to increase our understanding of what developers think and do when it comes to reviewing test code. We conducted both quantitative and qualitative methods to analyze more than 300,000 code reviews, and interviewed 12 developers about how they review test files. This work resulted in an overview of current code reviewing practices, a set of identified obstacles limiting the review of test code, and a set of issues that developers would like to see improved in code review

ACM Reference Format: Davide Spadini, Maurício Aniche, Margaret-Anne Storey, Magiel Bruntink, and Alberto Bacchelli. 2018. When Testing Meets Code Review: Why and How Developers Review Tests. In Proceedings of ICSE ’18: 40th International Conference on Software Engineering, Gothenburg, Sweden, May 27-June 3, 2018 (ICSE ’18), 11 pages. https://doi.org/10.1145/3180155.3180192

1 INTRODUCTION

Automated testing has become an essential process for improving the quality of software systems [15, 31]. Automated tests (hereafter referred to as just ‘tests’) can help ensure that production code is robust under many usage conditions and that code meets performance and security needs [15, 16]. Nevertheless, writing effective tests is as challenging as writing good production code. A tester has to ensure that test results are accurate, that all important execution](https://image.slidesharecdn.com/presentation-190527193500/85/Practices-and-Tools-for-Better-Software-Testing-21-320.jpg)

![2. Current Practices in Testing

When Testing Meets Code Review:

Why and How Developers Review Tests

Prod. files are 2 times more likely to be discussed than test files

Checking out the change and run the tests

Test Driven Review](https://image.slidesharecdn.com/presentation-190527193500/85/Practices-and-Tools-for-Better-Software-Testing-22-320.jpg)

![2. Current Practices in Testing

Test-Driven Code Review: An Empirical Study

BLINDED AUTHOR(S)

AFFILIATION(S)

Abstract—Test-Driven Code Review (TDR) is a code review practice in which a reviewer inspects a patch by examining the changed test code before the changed production code. Although this practice has been mentioned positively by practitioners in informal literature and interviews, there is no systematic knowledge on its effects, prevalence, problems, and advantages. In this paper, we aim at empirically understanding whether this practice has an effect on code review effectiveness and how developers perceive TDR. We conduct (i) a controlled experiment with 93 developers that perform more than 150 reviews, and (ii) 9 semi-structured interviews and a survey with 103 respondents to gather information on how TDR is perceived. Key results from the experiment show that developers adopting TDR find the same proportion of defects in production code, but more in test code, at the expense of fewer maintainability issues in production code. Furthermore, we found that most developers prefer to review production code as they deem it more critical and tests should follow from it. Moreover, general poor test code quality and no tool support hinder the adoption of TDR.

I. INTRODUCTION

Peer code review is a well-established and widely adopted practice aimed at maintaining and promoting software quality [3]. Contemporary code review, also known as Change-

programmers, another article supported TDR (collecting more than 1,200 likes): “By looking at the requirements and checking them against the test cases, the developer can have a pretty good understanding of what the implementation should be like, what functionality it covers and if the developer omitted any use cases.” Interviewed developers reported preferring to review test code first to better understand the code change before looking for defects in production [50].

These above are compelling arguments in favor of TDR, yet we have no systematic knowledge on this practice: whether TDR is effective in finding defects during code review, how frequently it is used, and what are its potential problems and advantages beside review effectiveness. This knowledge can provide insights for both practitioners and researchers. Developers and project stakeholders can use empirical evidence about TDR effects, problems, and advantages to make informed decisions about when to adopt it. Researchers can focus their attention on the novel aspects of TDR and challenges reviewers face to inform future research.

In this paper, our goal is to obtain a deeper understanding of TDR. We do this by conducting an empirical study set up](https://image.slidesharecdn.com/presentation-190527193500/85/Practices-and-Tools-for-Better-Software-Testing-23-320.jpg)
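
The ordering at the heart of TDR (changed test code is examined before changed production code) can be sketched as a sort over the files in a patch. The `is_test_file` heuristic and the example paths are assumptions made for illustration; the slide does not describe how test files are identified, and real projects may need different rules.

```python
def is_test_file(path):
    """Hypothetical heuristic for recognising test files by path or name."""
    name = path.rsplit("/", 1)[-1]
    return (
        "/test/" in f"/{path}"
        or name.startswith("test_")
        or name.endswith("Test.java")
    )


def tdr_order(changed_files):
    """Put test files first; keep the original order within each group."""
    # sorted() is stable, and False sorts before True, so test files
    # (key False) come before production files (key True).
    return sorted(changed_files, key=lambda path: not is_test_file(path))


patch = [
    "src/main/java/Cart.java",
    "src/test/java/CartTest.java",
    "README.md",
    "tests/test_cart.py",
]
review_queue = tdr_order(patch)
print(review_queue)
```

A review tool could present `review_queue` in this order, which relates to the missing tool support the abstract names as an obstacle to adopting TDR.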

![PyDriller ❤

PyDriller: Python Framework for Mining Software Repositories

Davide Spadini (Delft University of Technology; Software Improvement Group; Delft, The Netherlands; d.spadini@sig.eu)

Maurício Aniche (Delft University of Technology; Delft, The Netherlands; m.f.aniche@tudelft.nl)

Alberto Bacchelli (University of Zurich; Zurich, Switzerland; bacchelli@ifi.uzh.ch)

ABSTRACT

Software repositories contain historical and valuable information about the overall development of software systems. Mining software repositories (MSR) is nowadays considered one of the most interesting growing fields within software engineering. MSR focuses on extracting and analyzing data available in software repositories to uncover interesting, useful, and actionable information about the system. Even though MSR plays an important role in software engineering research, few tools have been created and made public to support developers in extracting information from Git repository. In this paper, we present PyDriller, a Python Framework that eases the process of mining Git. We compare our tool against the state-of-the-art Python Framework GitPython, demonstrating that PyDriller can achieve the same results with, on average, 50% less LOC and significantly lower complexity.

URL: https://github.com/ishepard/pydriller, Materials: https://doi.org/10.5281/zenodo.1327363, Pre-print: https://doi.org/10.5281/zenodo.1327411

CCS CONCEPTS

• Software and its engineering;

KEYWORDS

actionable insights for software engineering, such as understanding the impact of code smells [13–15], exploring how developers are doing code reviews [2, 4, 10, 21] and which testing practices they follow [20], predicting classes that are more prone to change/defects [3, 6, 16, 17], and identifying the core developers of a software team to transfer knowledge [12].

Among the different sources of information researchers can use, version control systems, such as Git, are among the most used ones. Indeed, version control systems provide researchers with precise information about the source code, its evolution, the developers of the software, and the commit messages (which explain the reasons for changing).

Nevertheless, extracting information from Git repositories is not trivial. Indeed, many frameworks can be used to interact with Git (depending on the preferred programming language), such as GitPython [1] for Python, or JGit for Java [8]. However, these tools are often difficult to use. One of the main reasons for such difficulty is that they encapsulate all the features from Git, hence, developers are forced to write long and complex implementations to extract even simple data from a Git repository.

In this paper, we present PyDriller, a Python framework that helps developers to mine software repositories. PyDriller provides developers with simple APIs to extract information from a Git repository, such as commits, developers, modifications, diffs, and](https://image.slidesharecdn.com/presentation-190527193500/85/Practices-and-Tools-for-Better-Software-Testing-27-320.jpg)
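
To give a sense of the kind of extraction the abstract describes (commits, developers, modified files), here is a minimal sketch. It assumes the PyDriller 2.x names `Repository`, `traverse_commits()`, `commit.author.name`, `commit.modified_files`, and `filename`; releases from the time of the paper used `RepositoryMining` and `commit.modifications` instead. The helper functions and the stand-in commits are invented for illustration, so that the counting logic runs without PyDriller or a repository.

```python
from collections import Counter
from types import SimpleNamespace


def commits_per_author(commits):
    """Count commits per developer name."""
    counts = Counter()
    for commit in commits:
        counts[commit.author.name] += 1
    return counts


def touched_files(commits):
    """Count how often each file name appears among modified files."""
    counts = Counter()
    for commit in commits:
        for modified in commit.modified_files:
            counts[modified.filename] += 1
    return counts


def mine(repo_path):
    """Run both counts on a real repository (requires `pip install pydriller`)."""
    from pydriller import Repository  # third-party; imported lazily

    commits = list(Repository(repo_path).traverse_commits())
    return commits_per_author(commits), touched_files(commits)


def fake_commit(author, filenames):
    """Stand-in object exposing only the attributes used above."""
    return SimpleNamespace(
        author=SimpleNamespace(name=author),
        modified_files=[SimpleNamespace(filename=f) for f in filenames],
    )


demo = [
    fake_commit("alice", ["cart.py", "test_cart.py"]),
    fake_commit("bob", ["cart.py"]),
    fake_commit("alice", ["README.md"]),
]
authors = commits_per_author(demo)
files = touched_files(demo)
print(authors, files)
```

Calling `mine("path/to/repo")` on a local clone would return the same two counters for real history; the path here is a placeholder.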