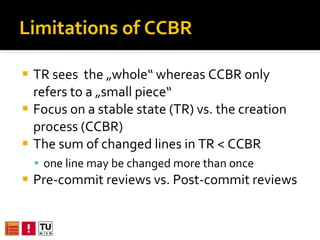

The document discusses adopting code reviews in agile environments. Traditional code reviews are resource-intensive, and an industrial case study found that reviews were often skipped for lack of time at the end of sprints. The paper proposes Continuous Changeset-Based Reviews (CCBR) as an alternative: reviews become continuous, asynchronous tasks based on automated rules. CCBR was implemented in ReviewClipse, a review tool integrated with Eclipse. An empirical evaluation is planned to compare CCBR with traditional reviews.

![Introduction Code reviews have many benefits, most importantly to find bugs early [1, 2, 3] It is commonly accepted that code reviews are resource-intensive [4] Due to the lack of time and resources many (agile) software development teams do not perform traditional code reviews [5] Code reviews support knowledge sharing [18] and collective code ownership [22]](https://image.slidesharecdn.com/adoptingcodereviewsforagilesoftwaredevelopment-100812143452-phpapp01/85/Adopting-code-reviews-for-agile-software-development-2-320.jpg)

![Code reviews in agile environm. Formal reviews such as the IEEE1028 [2] tend to be too heavyweight in agile contexts [4, 5] There is a growing interest in code reviews and review tools in the agile community [24] An empirical study [25] proposes a combination of pair programming and code reviews Q: How to adopt traditional code reviews to support reviews in an agile environment?](https://image.slidesharecdn.com/adoptingcodereviewsforagilesoftwaredevelopment-100812143452-phpapp01/85/Adopting-code-reviews-for-agile-software-development-3-320.jpg)

![CCBR attributes Process Coherence Review is done shortly after the development Rework is done shortly after the original work “It's All about Feedback and Change” [23] Information Coherence Changes within one timeframe often correlate [15] Task size small, typically <200 LOC per review task](https://image.slidesharecdn.com/adoptingcodereviewsforagilesoftwaredevelopment-100812143452-phpapp01/85/Adopting-code-reviews-for-agile-software-development-7-320.jpg)
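The CCBR attributes on this slide (reviews shortly after development, changes from one timeframe reviewed together, tasks of typically under 200 LOC) can be illustrated with a rough sketch of how changesets might be batched into review tasks. This is not ReviewClipse's actual implementation, which the slides do not describe. The `Changeset` type, the `group_into_review_tasks` function, the per-author grouping, and the four-hour `WINDOW` are all hypothetical choices made for illustration; only the 200 LOC figure comes from the slide.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

MAX_TASK_LOC = 200           # from the slide: "typically <200 LOC per review task"
WINDOW = timedelta(hours=4)  # hypothetical timeframe; the source gives no value


@dataclass
class Changeset:
    """Hypothetical record of one commit; not a ReviewClipse type."""
    revision: int
    author: str
    committed_at: datetime
    changed_loc: int


def group_into_review_tasks(changesets, max_loc=MAX_TASK_LOC, window=WINDOW):
    """Batch each author's changesets into small, time-coherent review tasks.

    A changeset joins the author's open task only if it was committed within
    `window` of that task's first changeset and the task stays within
    `max_loc` changed lines. Otherwise it starts a new task.
    """
    tasks = []
    open_task = {}  # author -> the task currently being filled
    for cs in sorted(changesets, key=lambda c: c.committed_at):
        task = open_task.get(cs.author)
        fits = (
            task is not None
            and cs.committed_at - task[0].committed_at <= window
            and sum(c.changed_loc for c in task) + cs.changed_loc <= max_loc
        )
        if fits:
            task.append(cs)
        else:
            task = [cs]
            tasks.append(task)
            open_task[cs.author] = task
    return tasks
```

Under these assumptions, a single changeset larger than the cap still becomes its own task rather than being split. Grouping by author is one possible reading of "information coherence"; grouping by touched files or by work item would be equally plausible rules.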