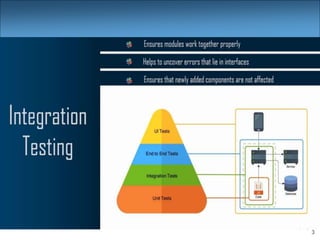

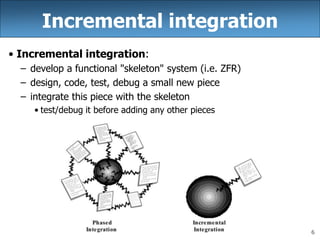

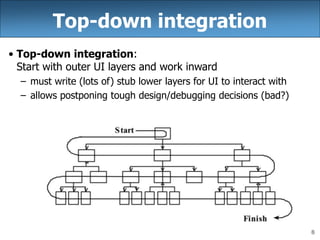

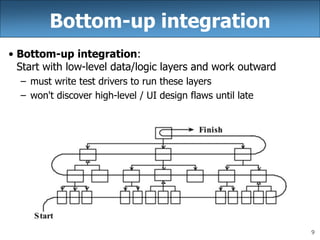

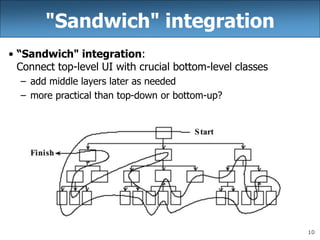

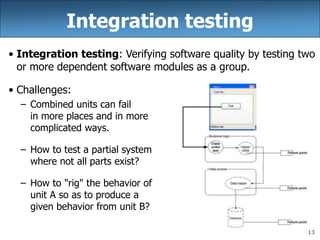

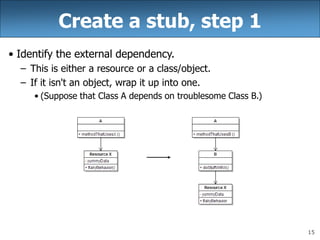

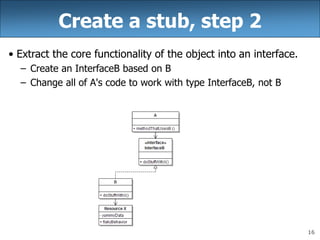

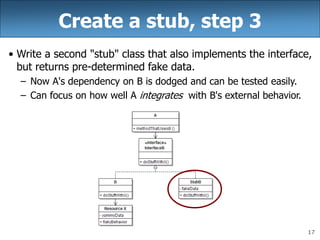

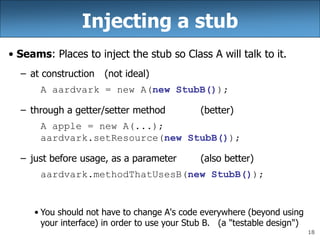

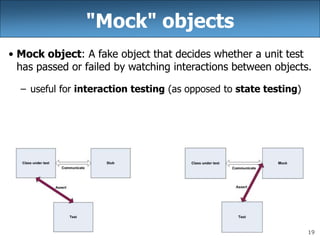

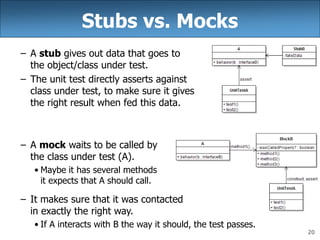

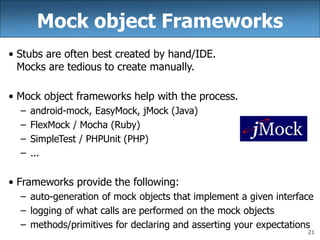

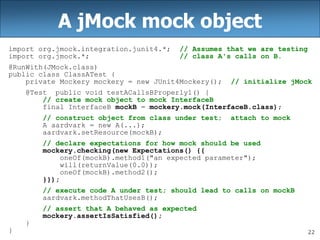

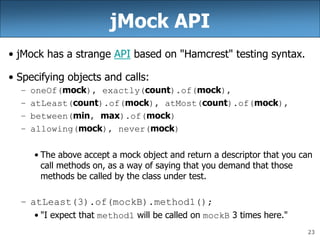

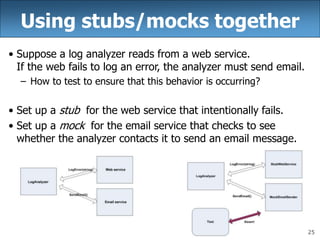

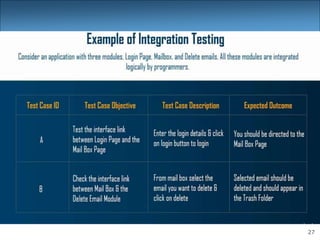

This document discusses integration testing. It defines integration testing as verifying software quality by testing two or more dependent software modules as a group. It describes several integration strategies: incremental, top-down, bottom-up, and "sandwich" integration. It also covers the challenges of integration testing and how to address them with stubs and mock objects, which isolate a module from its dependencies and verify that its interactions with them happen as expected.
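To make the distinction between stubs and mock objects concrete, here is a minimal sketch in Python using the standard library's `unittest.mock`. The names `OrderService`, `StubGateway`, and `charge` are hypothetical and chosen only for illustration; they do not come from the slides. The stub supplies a canned answer so the module under test can run in isolation, while the mock also records the call so the interaction itself can be verified.

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical module under test; depends on a payment gateway."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, amount):
        # Delegate the charge to the collaborating module.
        if self.gateway.charge(amount):
            return "confirmed"
        return "declined"


class StubGateway:
    """Stub: returns a canned answer so OrderService can run in isolation."""

    def charge(self, amount):
        return True


# Stub usage: only the result of the module under test is checked.
stub_result = OrderService(StubGateway()).place_order(25)

# Mock usage: the canned answer is configured, then the interaction is verified.
mock_gateway = Mock()
mock_gateway.charge.return_value = False
mock_result = OrderService(mock_gateway).place_order(40)

# Raises AssertionError if charge was not called exactly once with 40.
mock_gateway.charge.assert_called_once_with(40)
```

In a top-down strategy, stand-ins like these would typically replace lower-level modules that are not yet integrated; the real gateway would be swapped in as integration proceeds.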