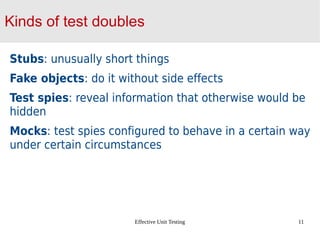

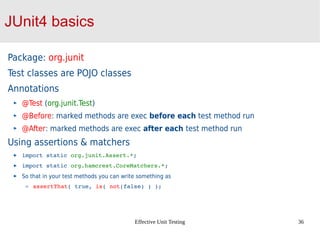

This document discusses effective unit testing. It covers the value of writing tests, test-driven development, behavior-driven development, test doubles, structuring tests with arrange-act-assert or given-when-then, checking behavior rather than implementation, test smells, JUnit basics, and parameterized testing. Tests validate code behavior, catch mistakes, and shape design; writing them also helps you learn what the code is intended to do.

![Effective Unit Testing 37

Parametrized-Test pattern in JUnit

Mark the test class with

@RunWith(org.junit.runners.Parameterized.class)

Define private fields and a constructor that accepts, in order, your parameters

public MyParamTestCase(int k, String name) { this.k=k; … }

Define a public static method that returns all your parameter data

@org.junit.runners.Parameterized.Parameters

public static Collection<Object[]> data(){

return Arrays.asList( new Object[][] { { 10, "roby" }, … } );

}

Define a @Test method that works against the private fields that hold the parameters.](https://image.slidesharecdn.com/effectiveunittesting-130604061644-phpapp02/85/Effective-unit-testing-37-320.jpg)
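The steps on this slide can be put together as a minimal JUnit 4 sketch. In JUnit 4 the runner class is spelled `org.junit.runners.Parameterized`. The constructor signature and the `{ 10, "roby" }` row come from the slide; the two extra data rows, the `padLeft` helper, and the test method name are hypothetical and exist only to give the test something to check.

```java
import static org.junit.Assert.assertEquals;
import static org.junit.Assert.assertTrue;

import java.util.Arrays;
import java.util.Collection;

import org.junit.Test;
import org.junit.runner.RunWith;
import org.junit.runners.Parameterized;
import org.junit.runners.Parameterized.Parameters;

// Step 1: mark the test class so JUnit 4 runs it with the Parameterized runner.
@RunWith(Parameterized.class)
public class MyParamTestCase {

    // Step 2: private fields plus a constructor that accepts the parameters in order.
    private final int k;
    private final String name;

    public MyParamTestCase(int k, String name) {
        this.k = k;
        this.name = name;
    }

    // Step 3: a public static method that returns every row of parameter data.
    // Only the first row appears on the slide; the other two are made up.
    @Parameters
    public static Collection<Object[]> data() {
        return Arrays.asList(new Object[][] {
            { 10, "roby" },
            { 4, "roby" },
            { 2, "roby" },
        });
    }

    // Hypothetical code under test: left-pads s with spaces to at least `width` characters.
    static String padLeft(String s, int width) {
        return String.format("%" + width + "s", s);
    }

    // Step 4: a @Test method that works against the fields; it runs once per data row.
    @Test
    public void padsNameToAtLeastKCharacters() {
        String padded = padLeft(name, k);
        assertEquals(Math.max(k, name.length()), padded.length());
        assertTrue(padded.endsWith(name));
    }
}
```

The runner creates one instance of the class per row returned by `data()`, so each row is reported as a separate test run and a failing row does not hide the others.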