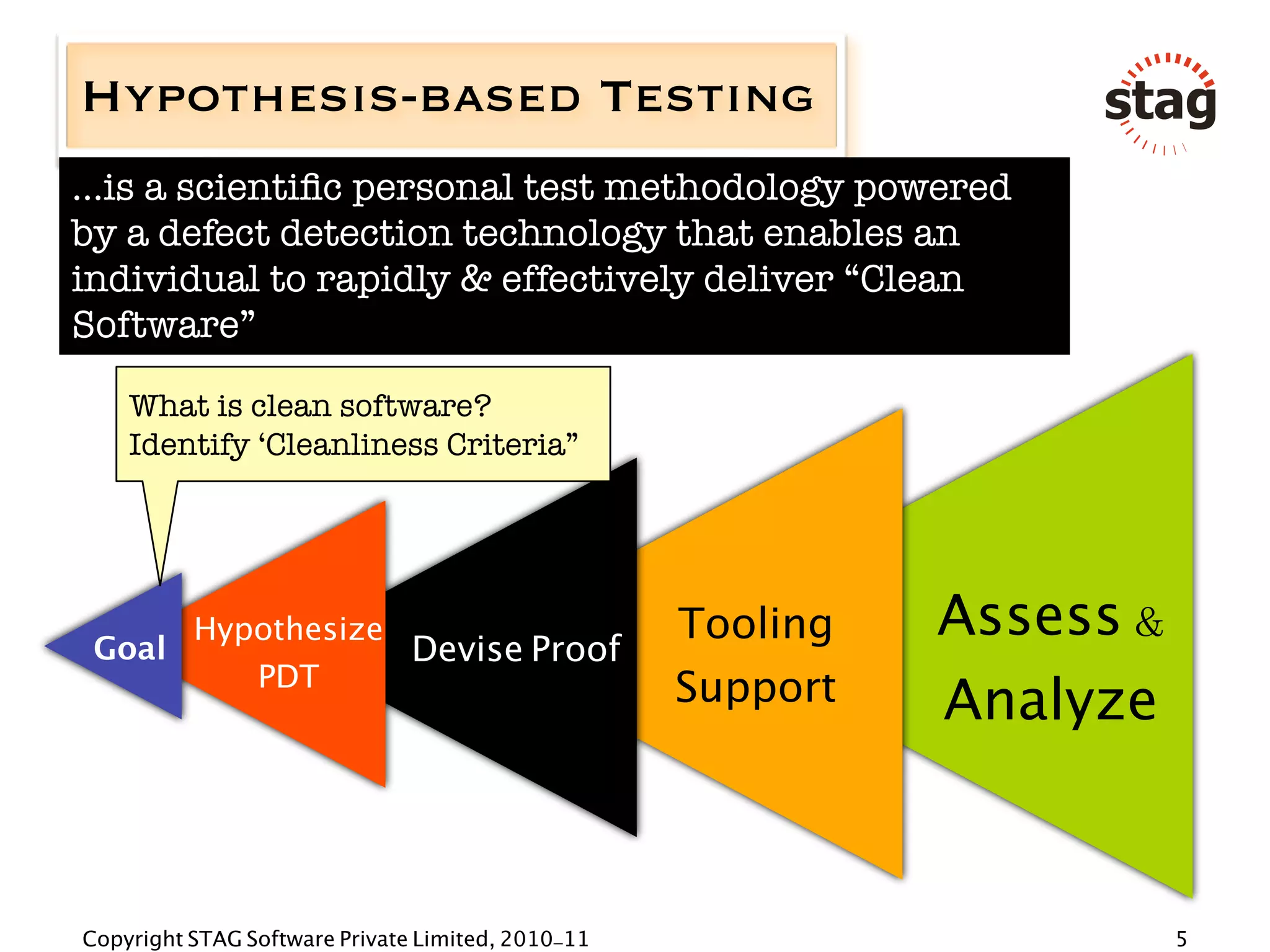

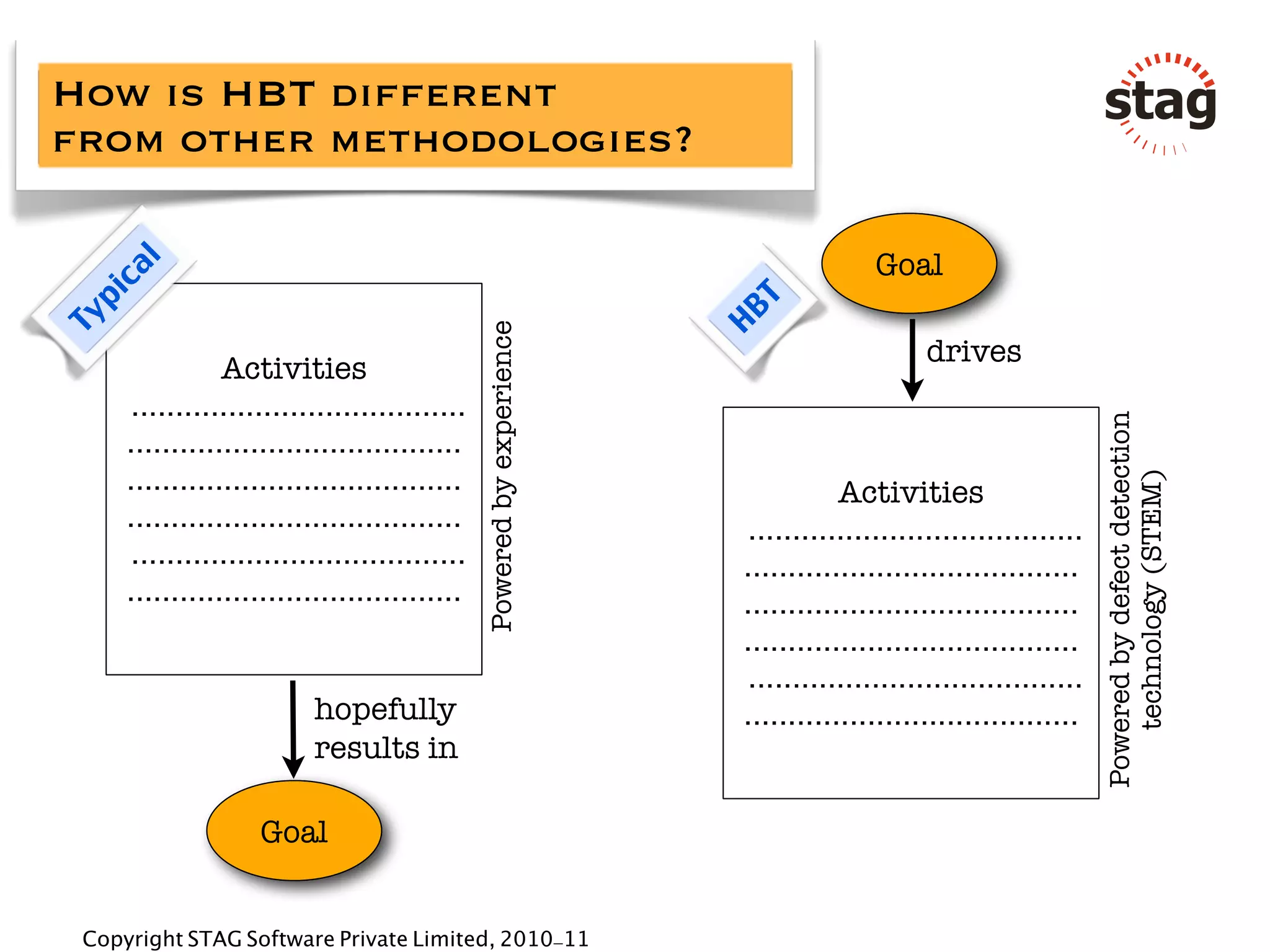

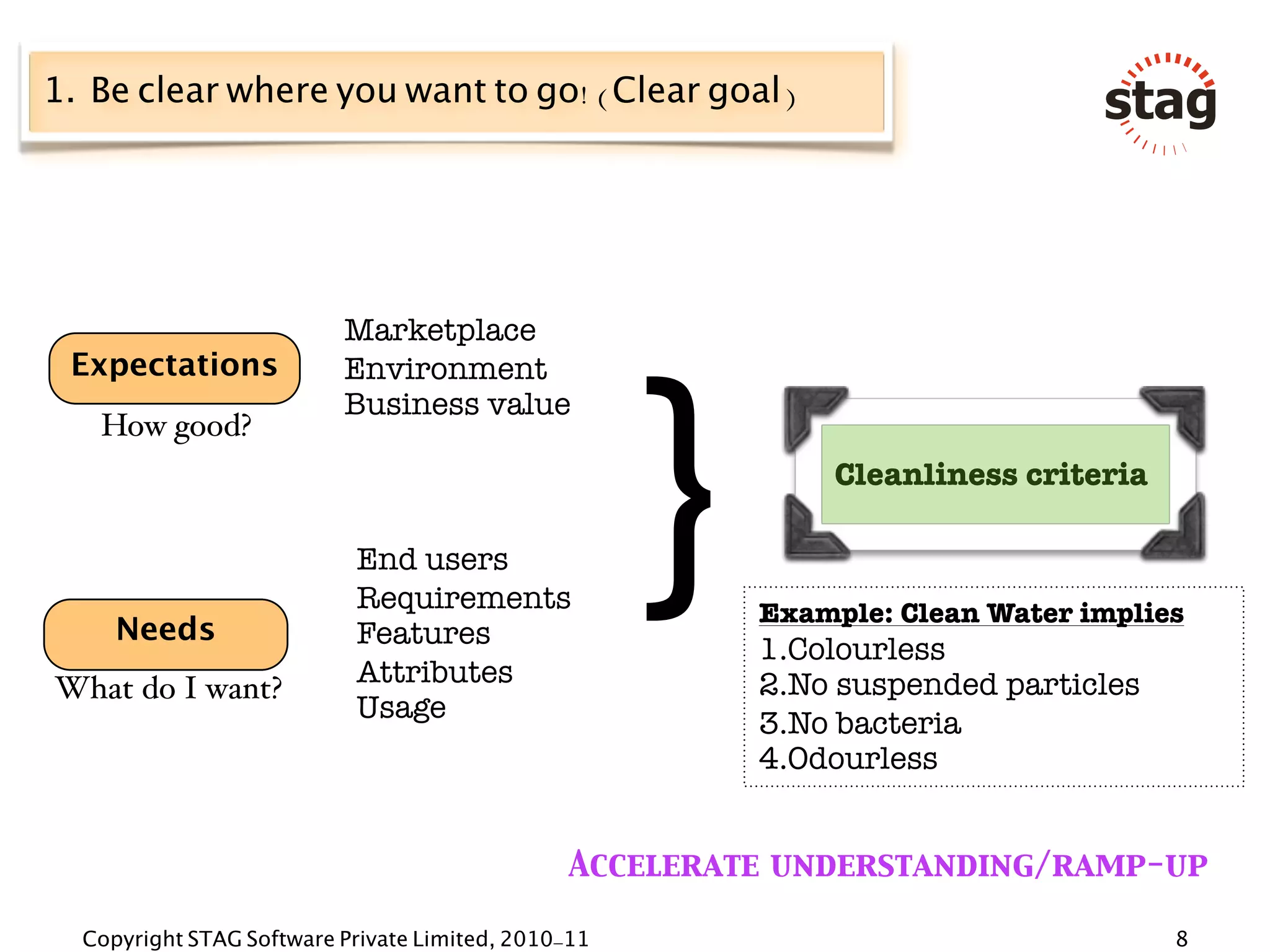

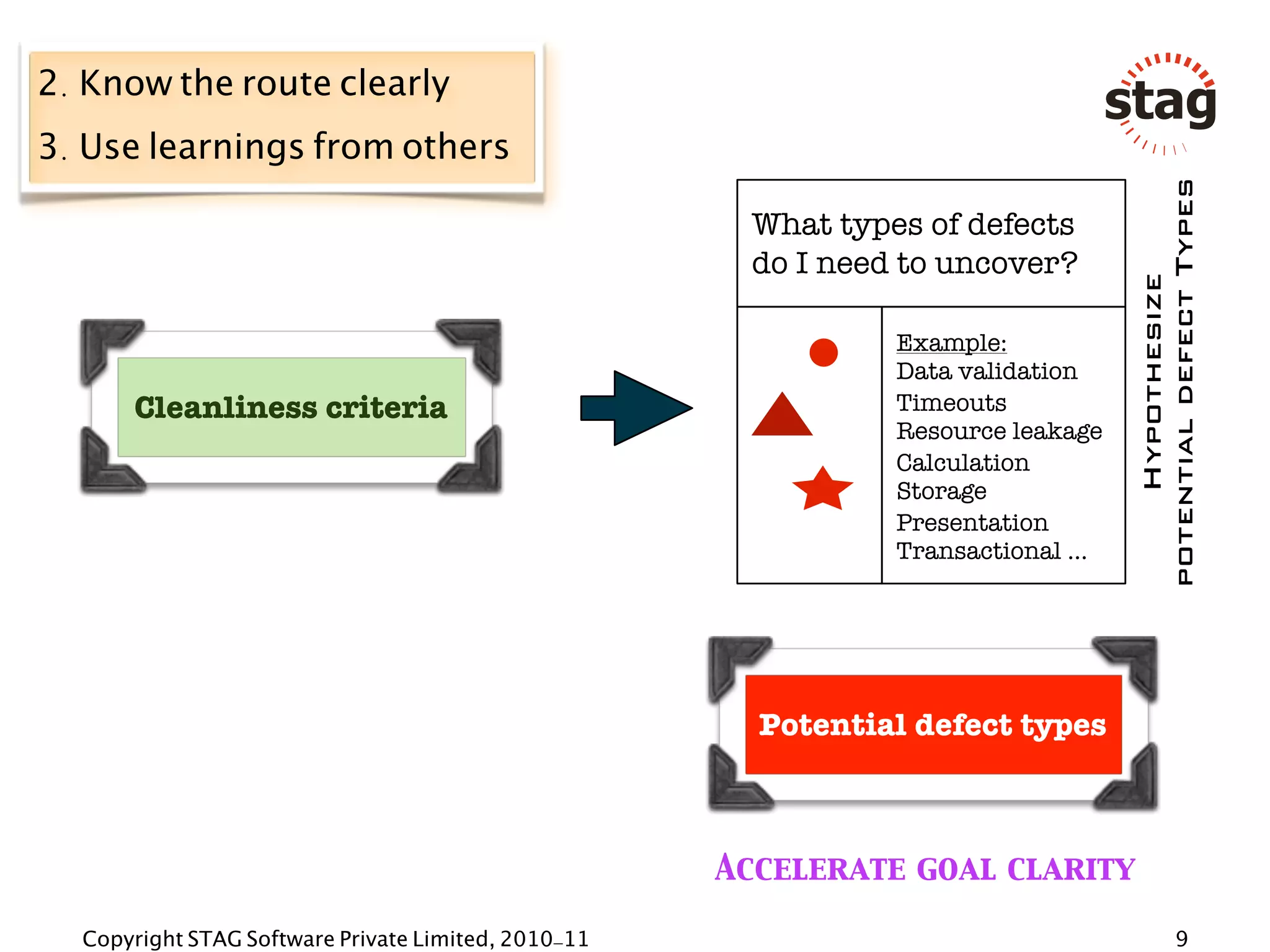

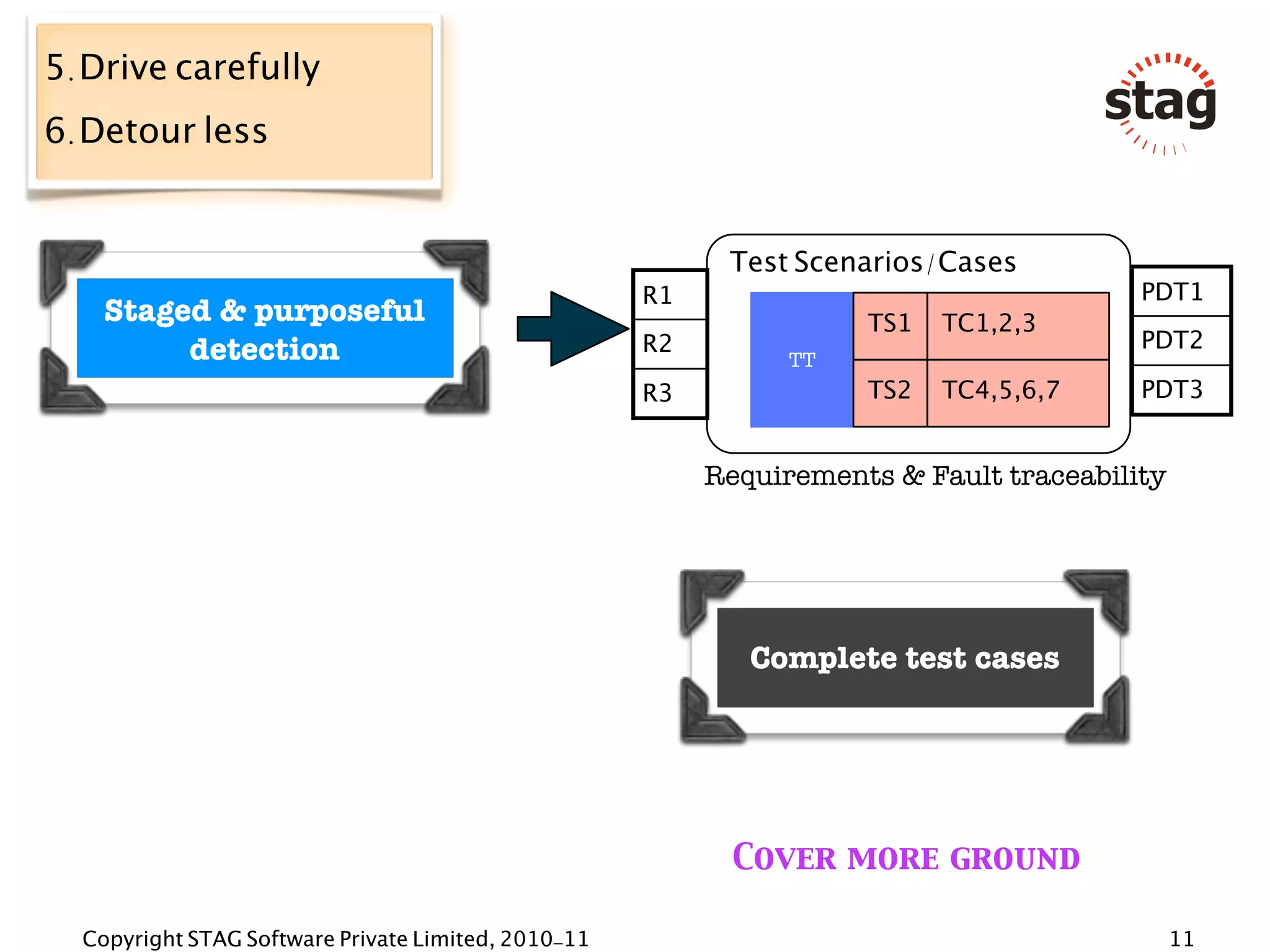

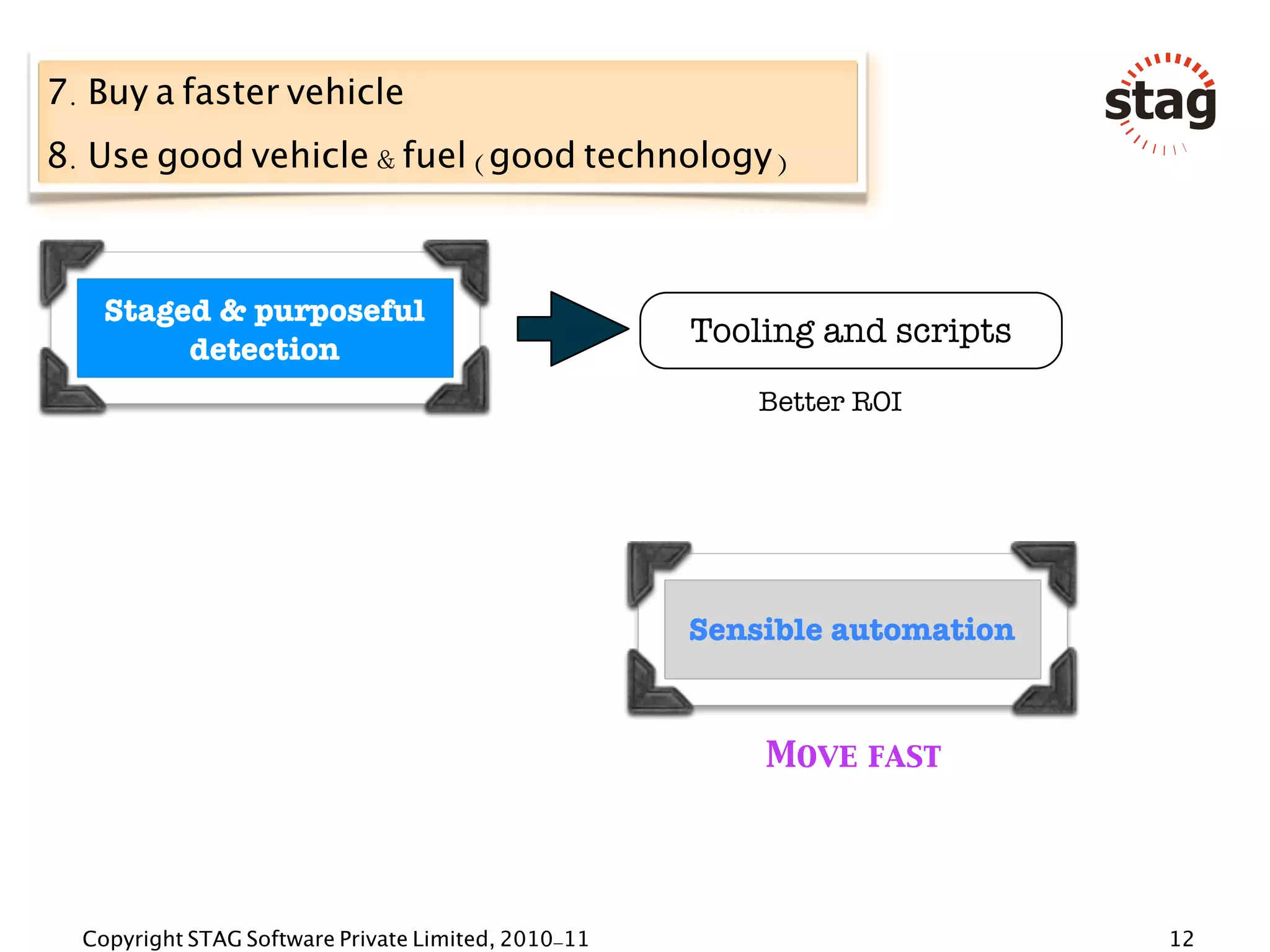

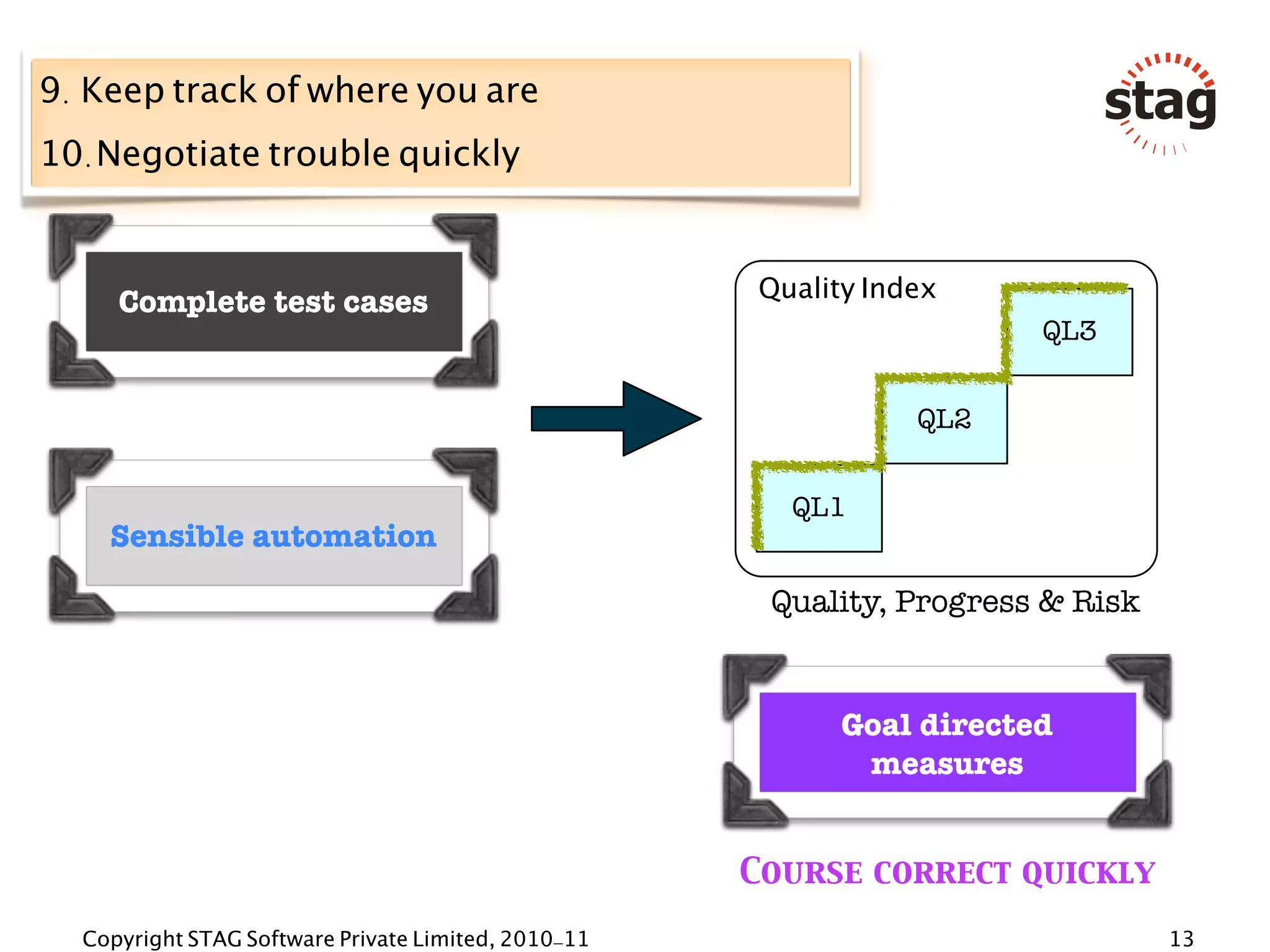

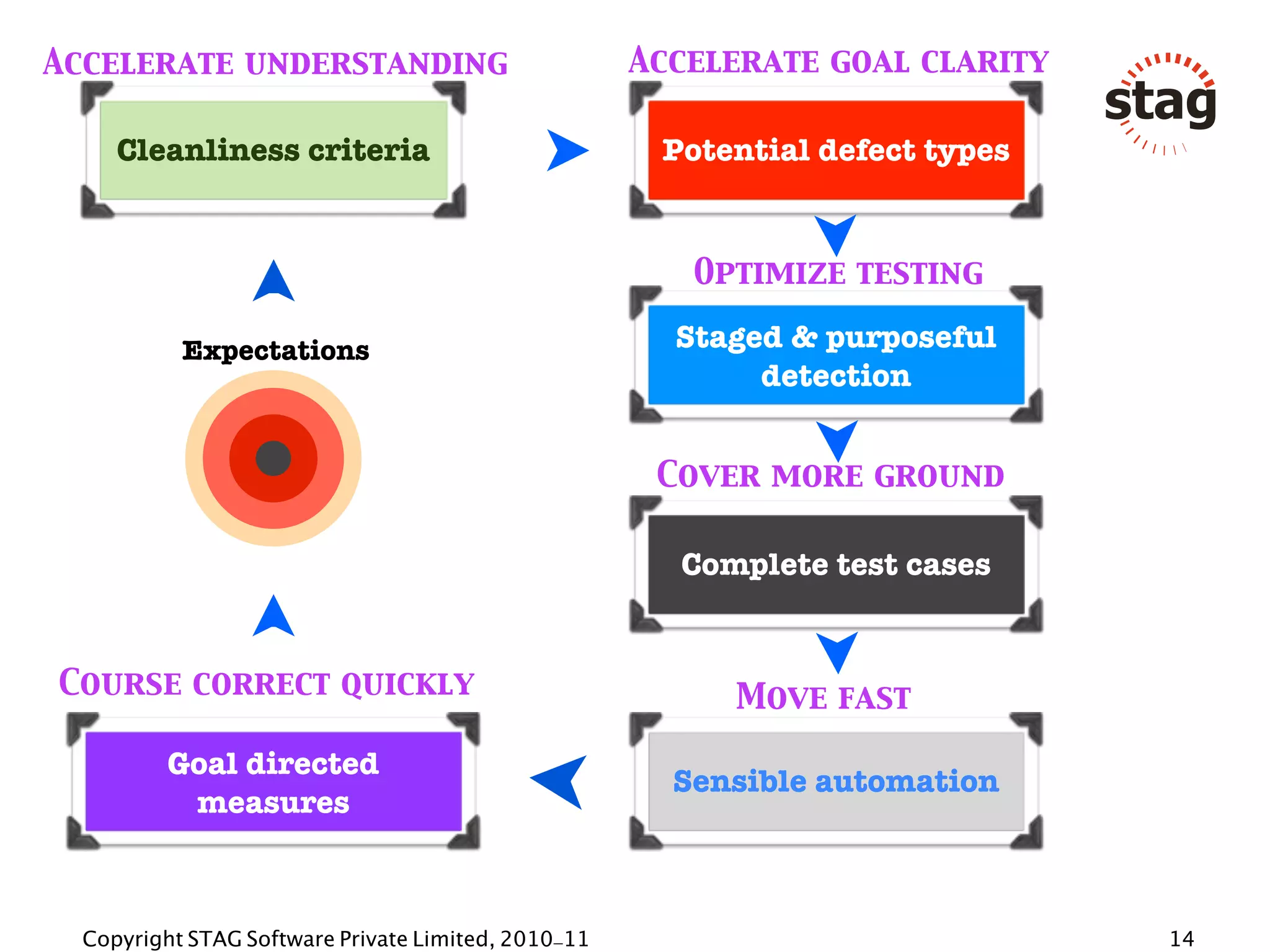

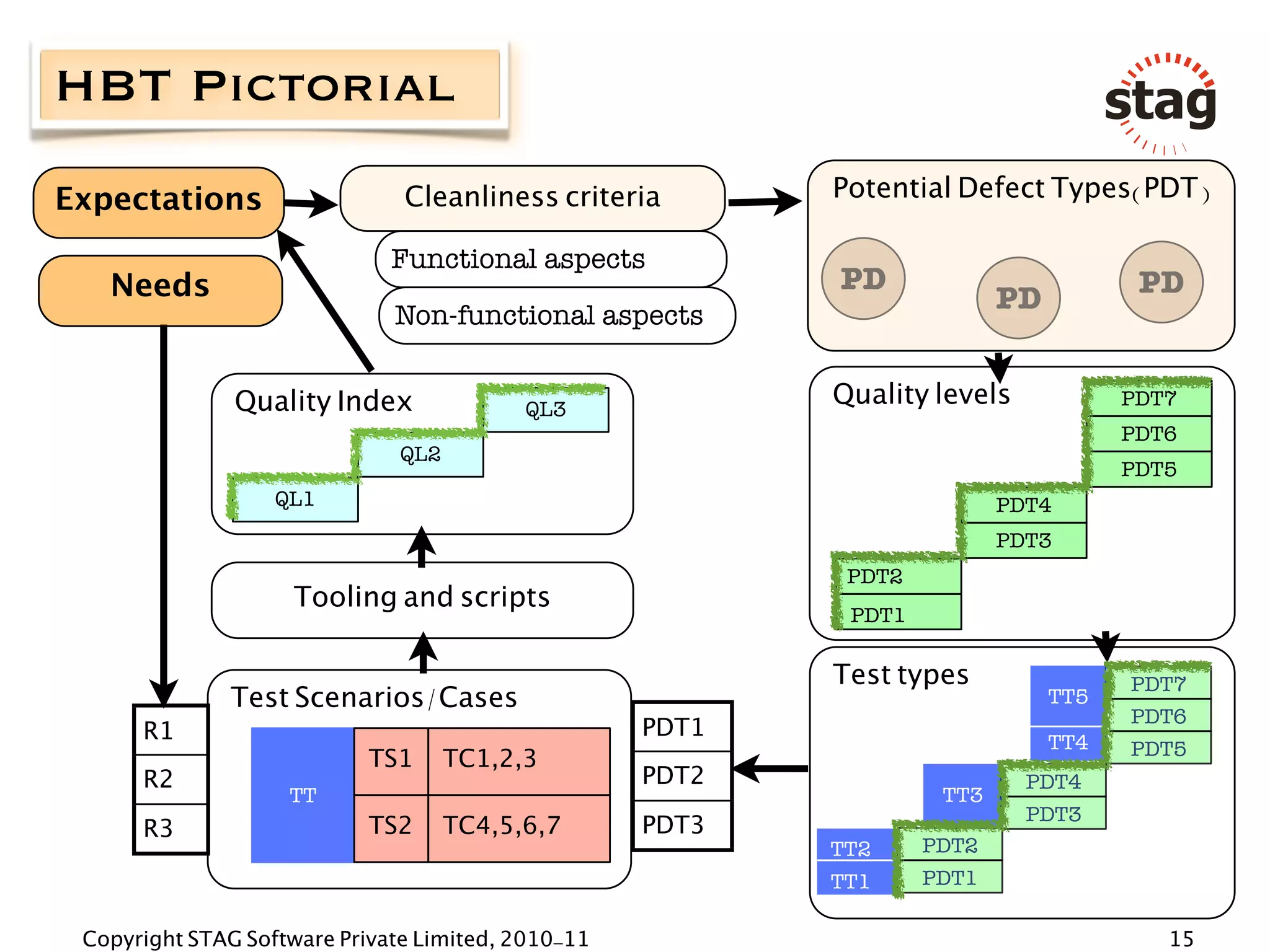

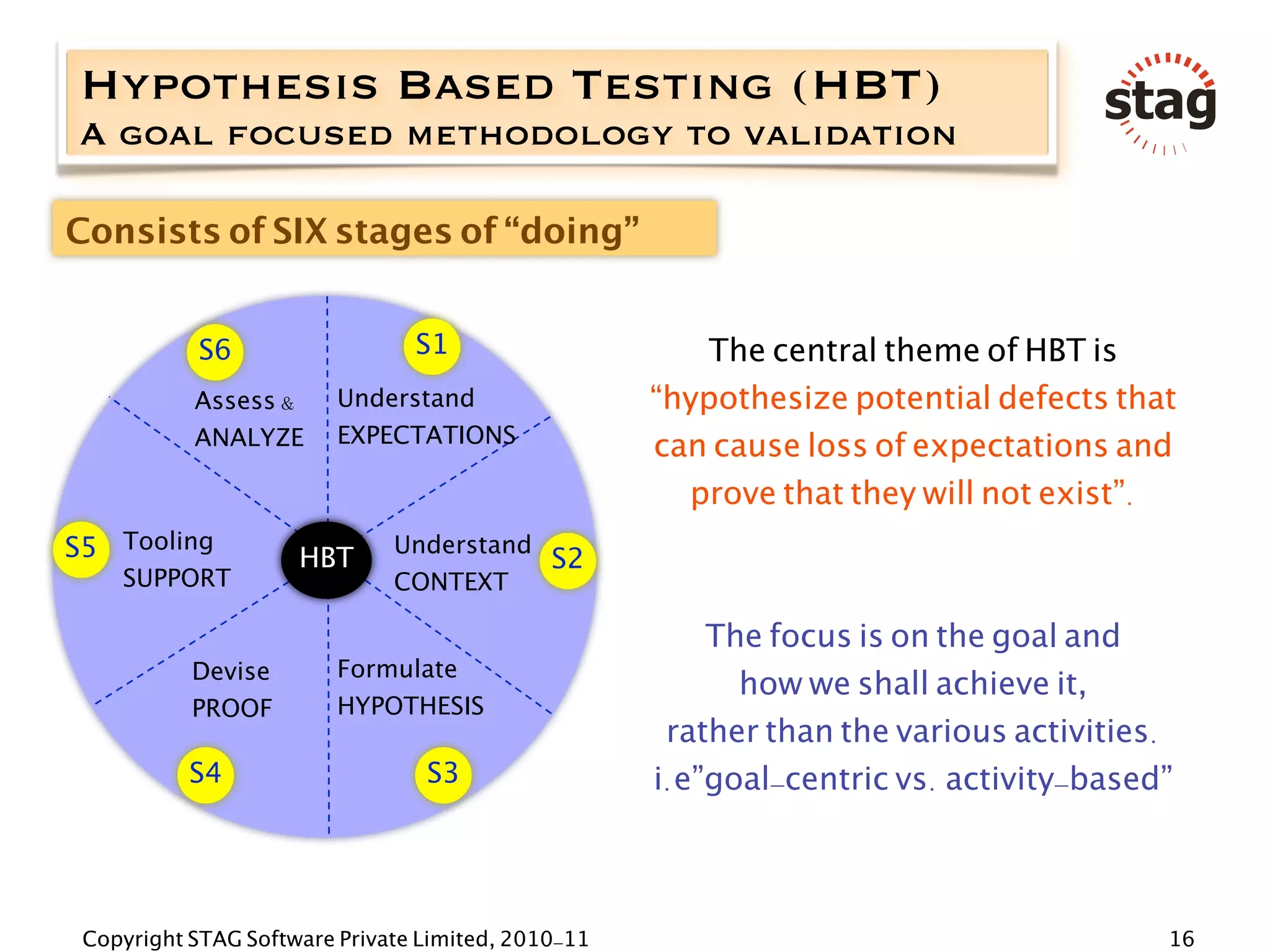

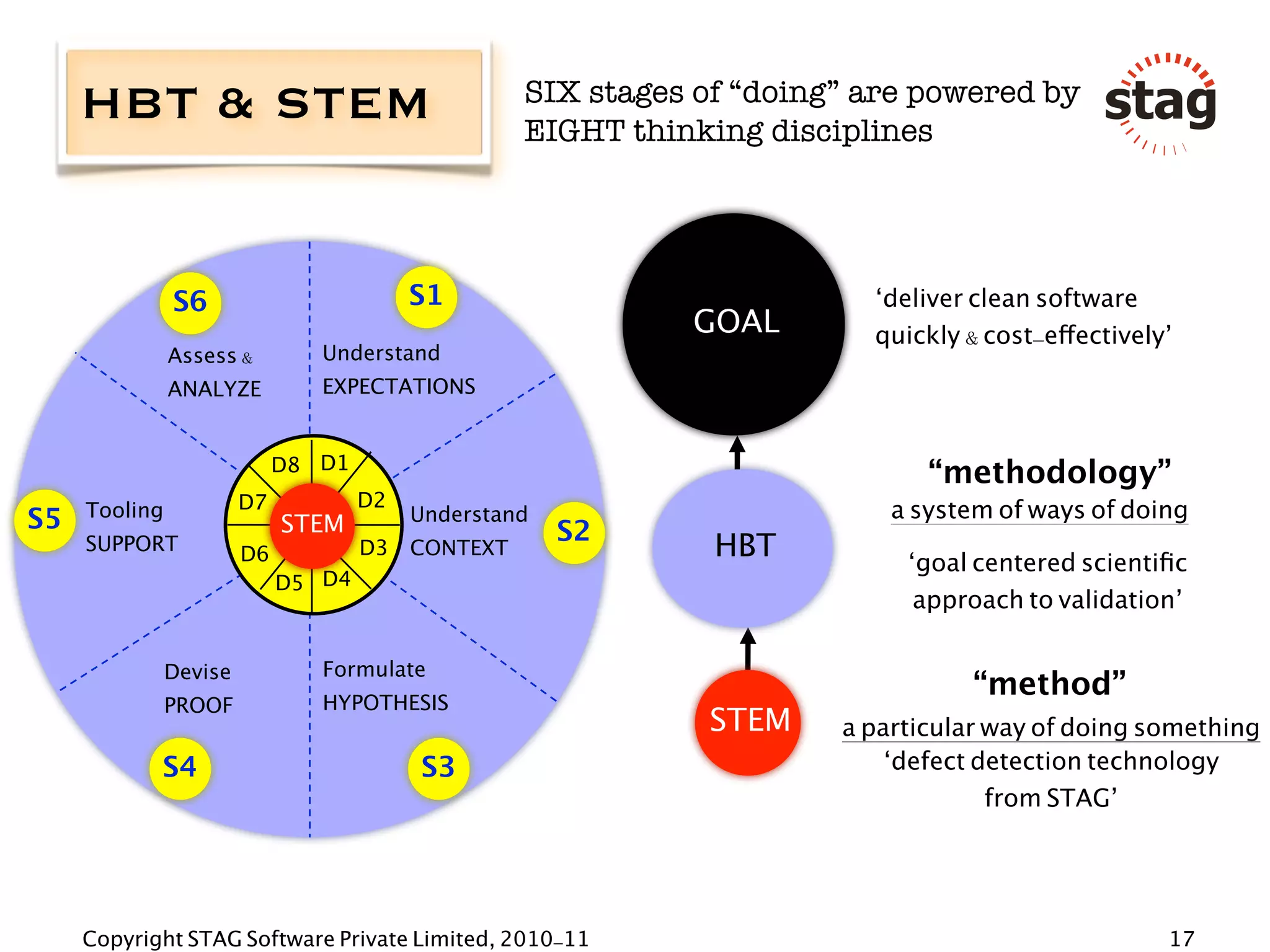

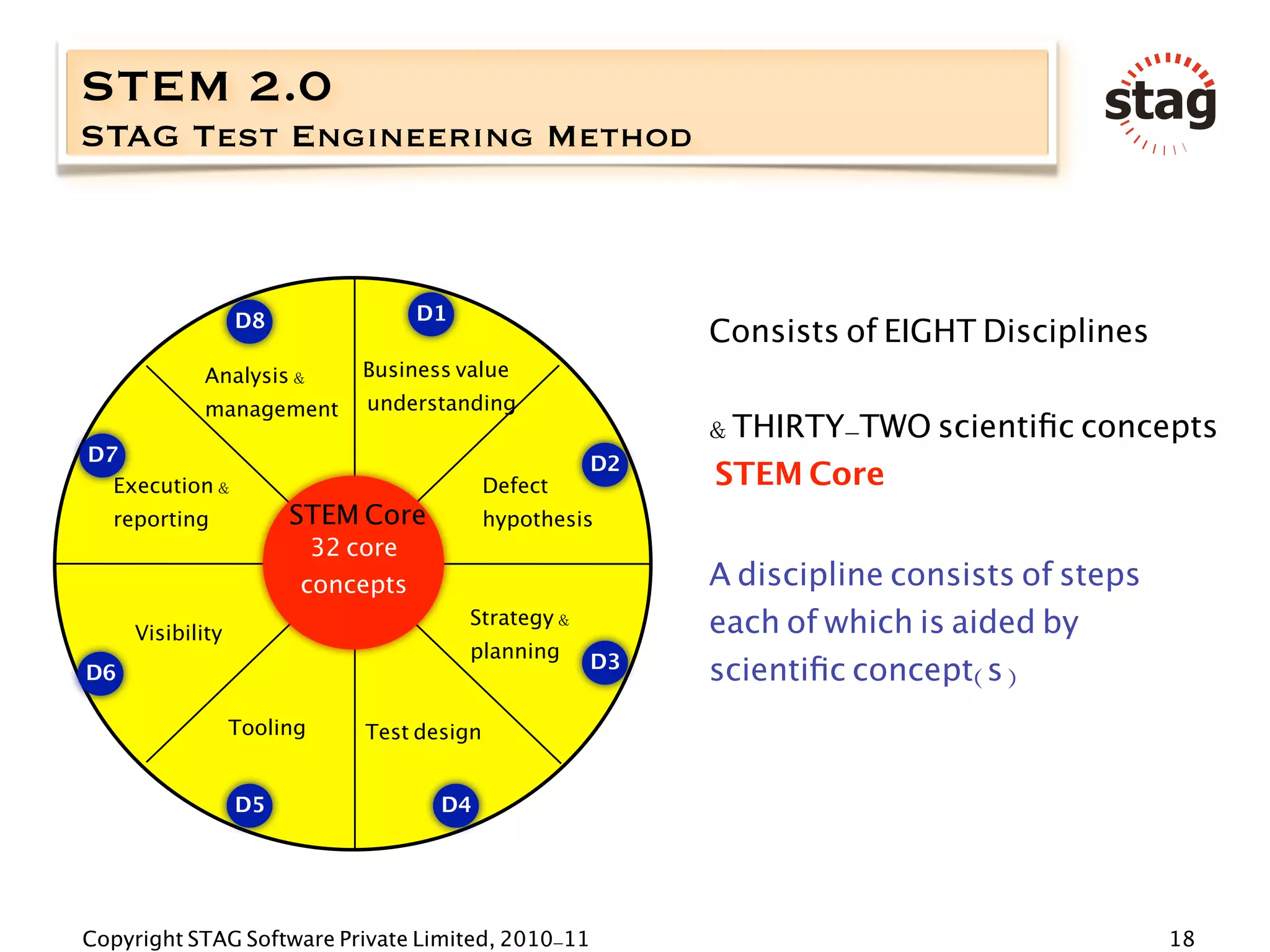

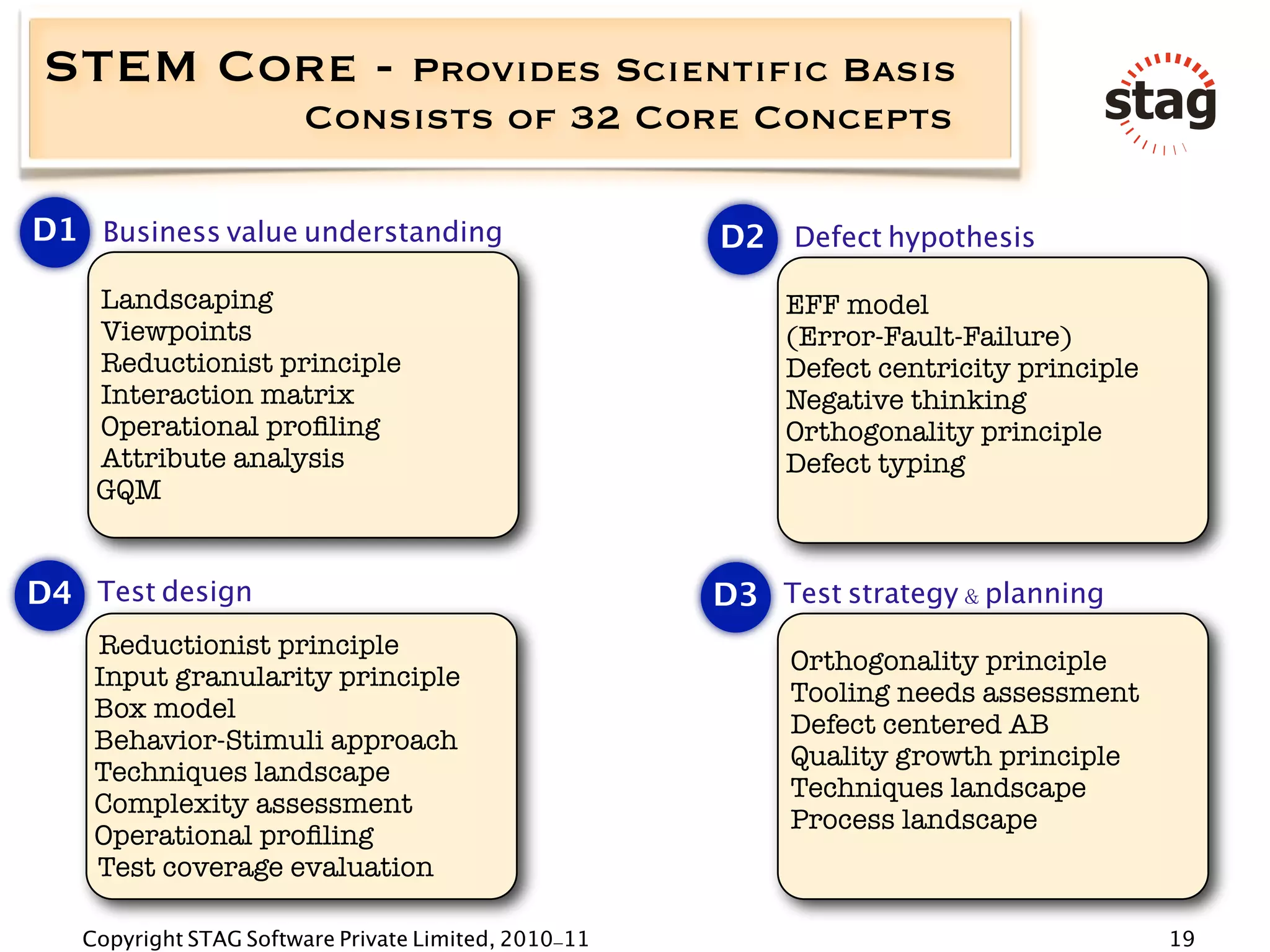

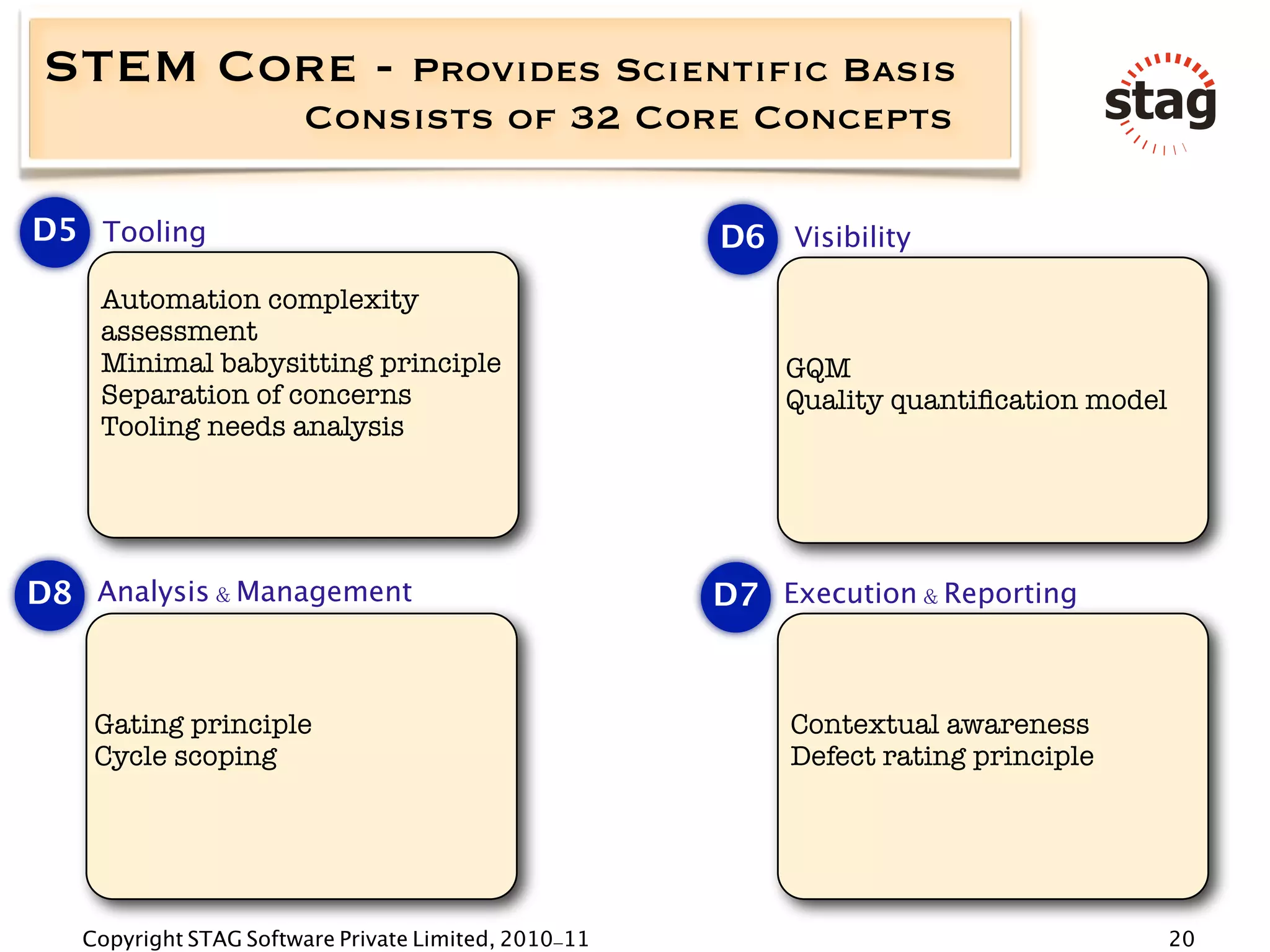

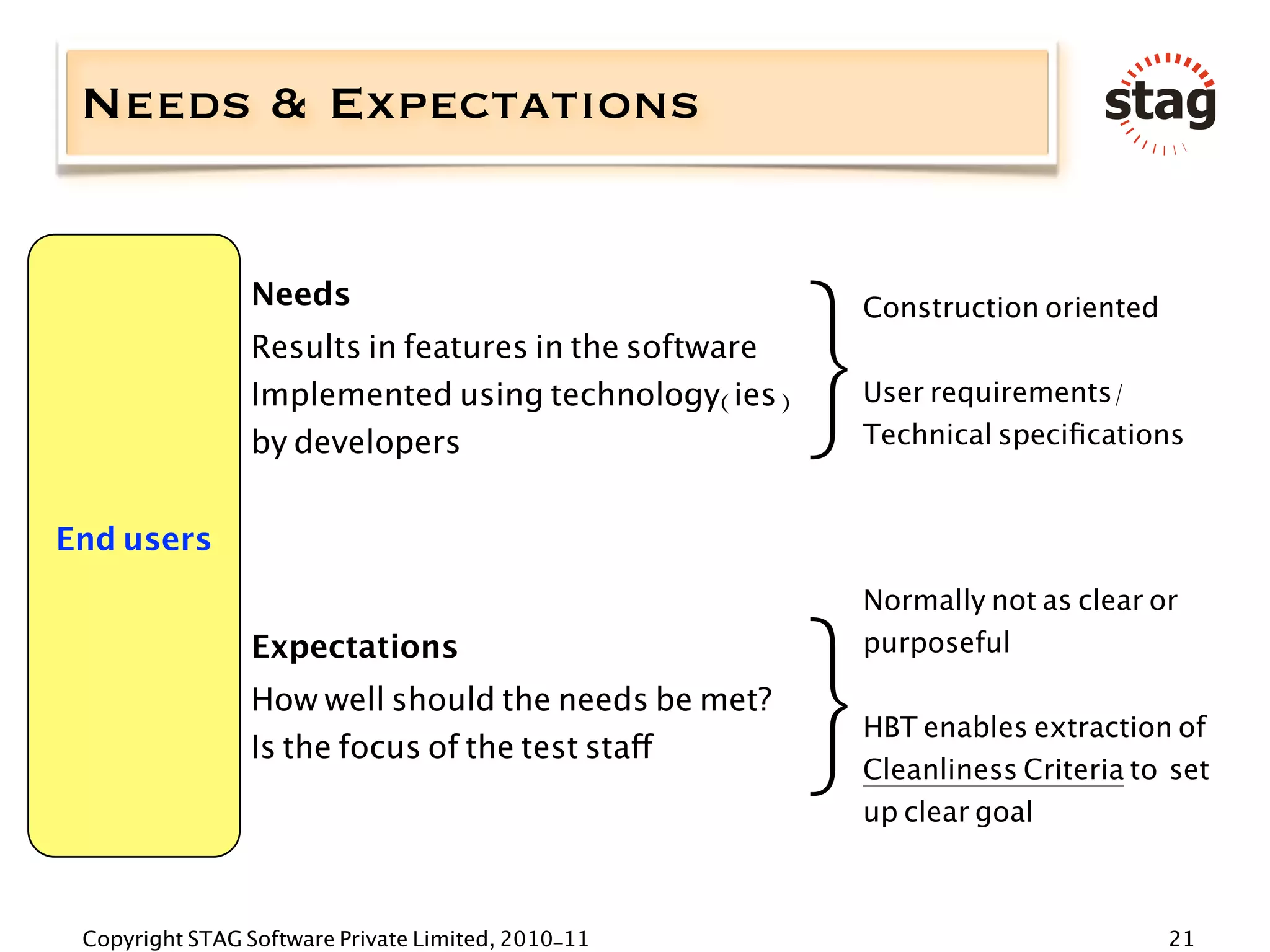

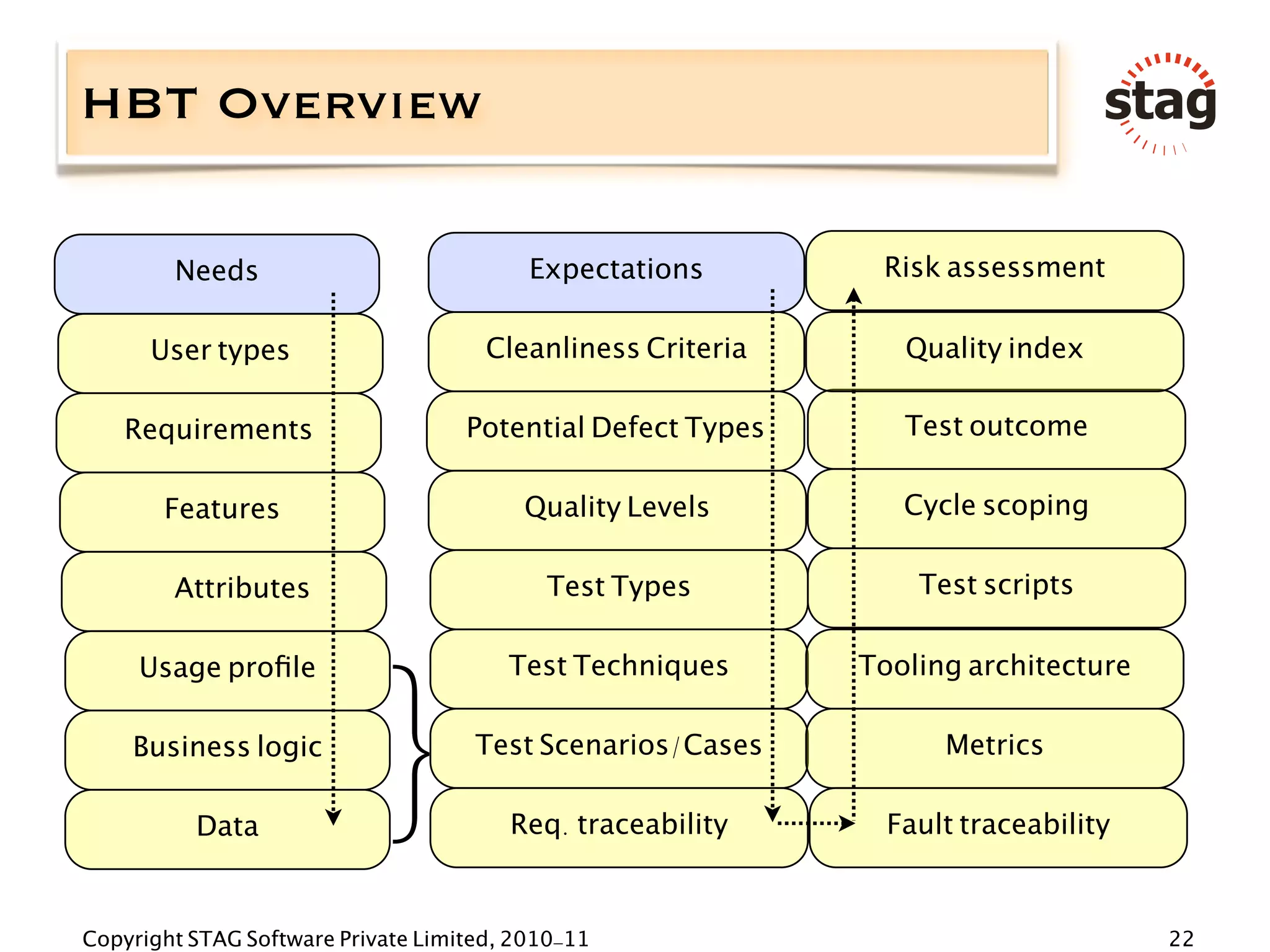

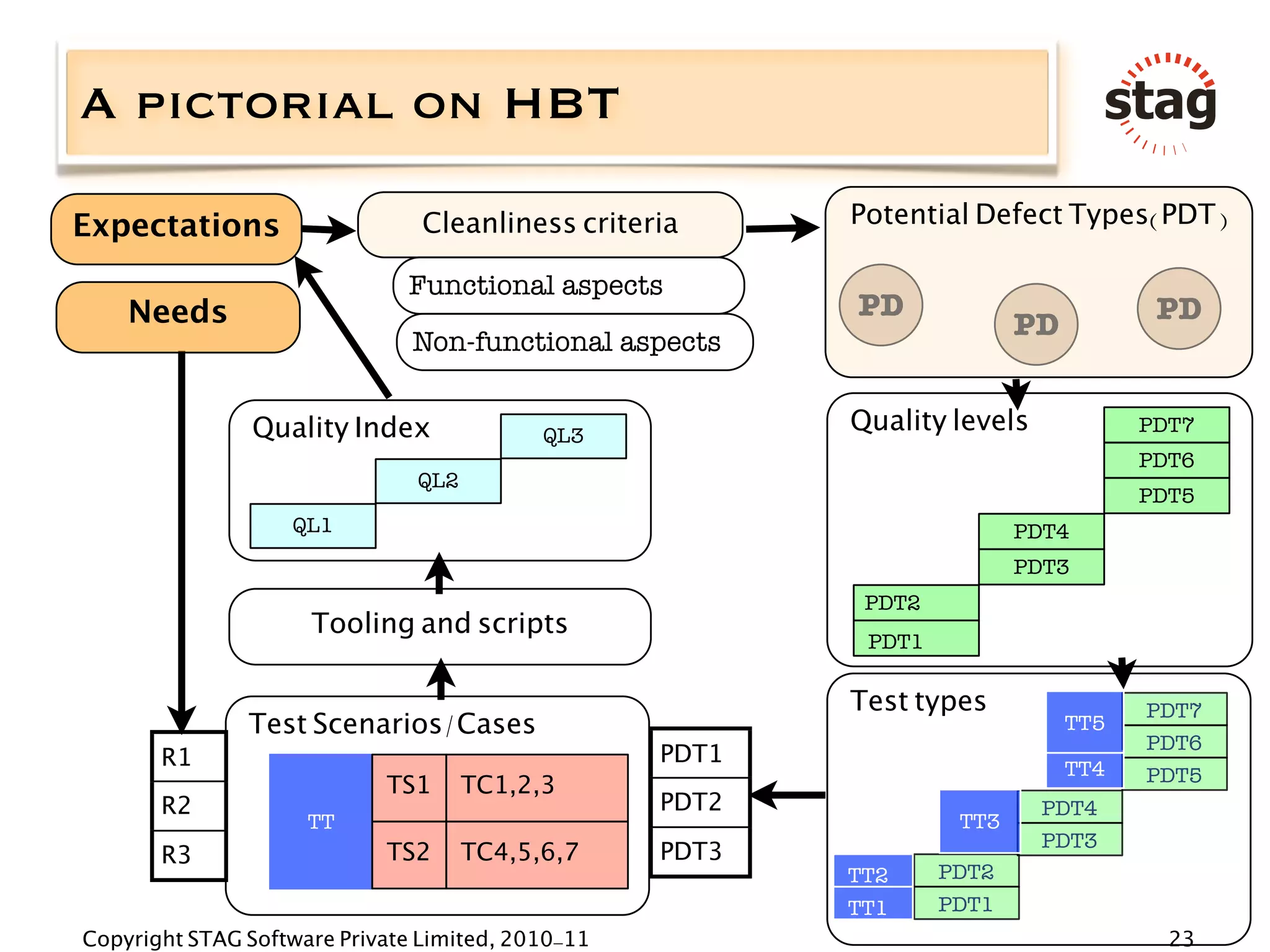

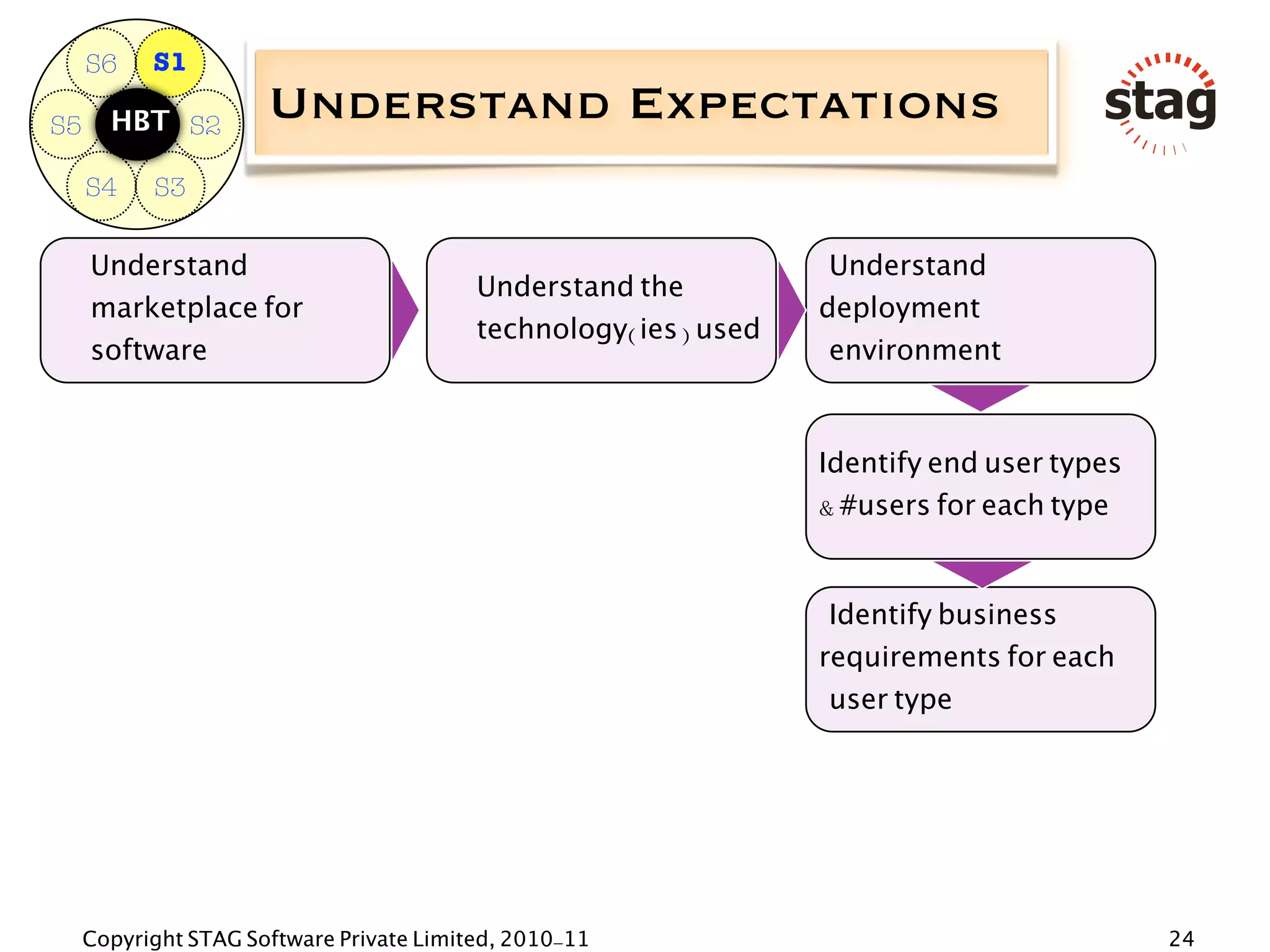

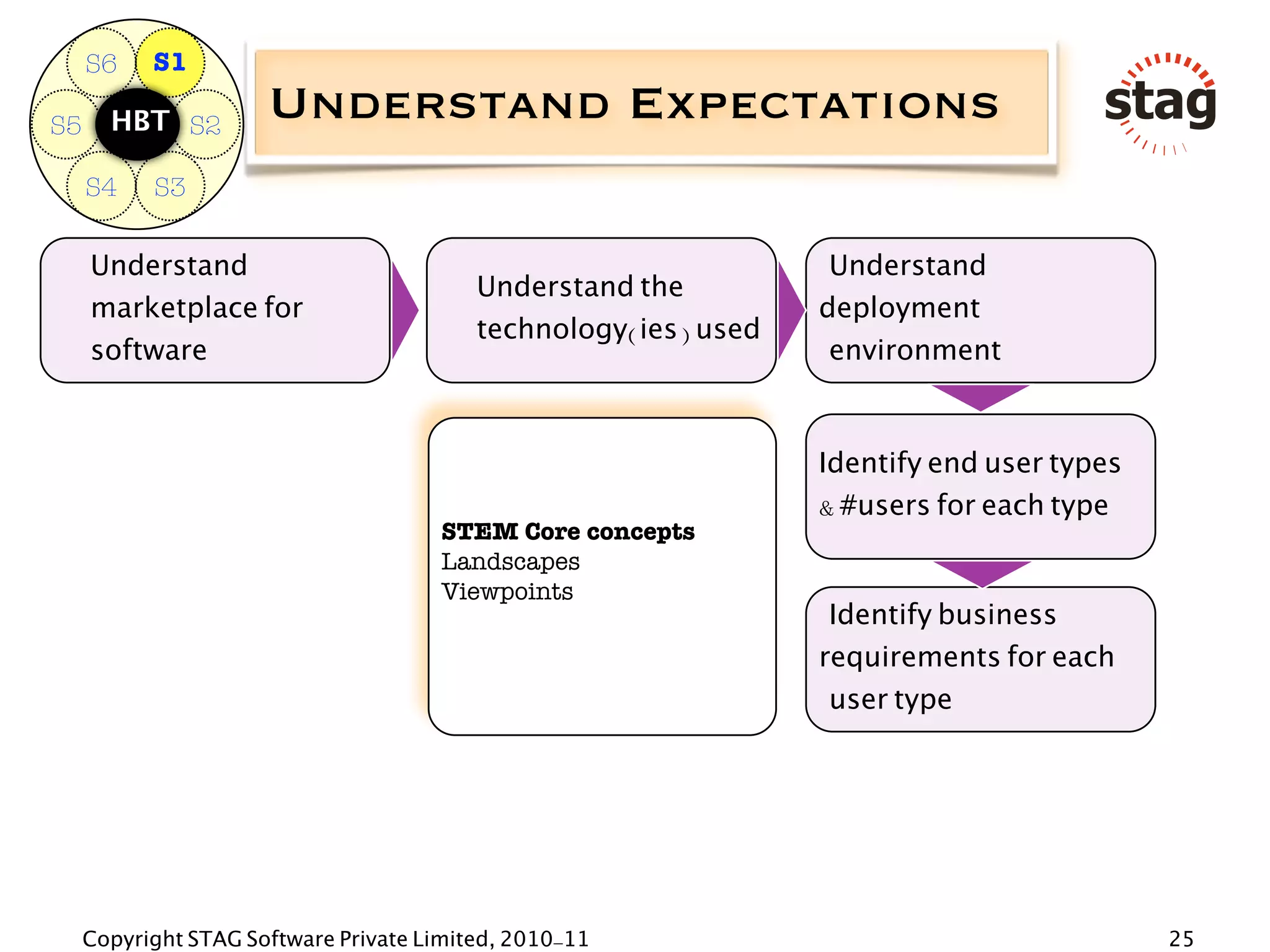

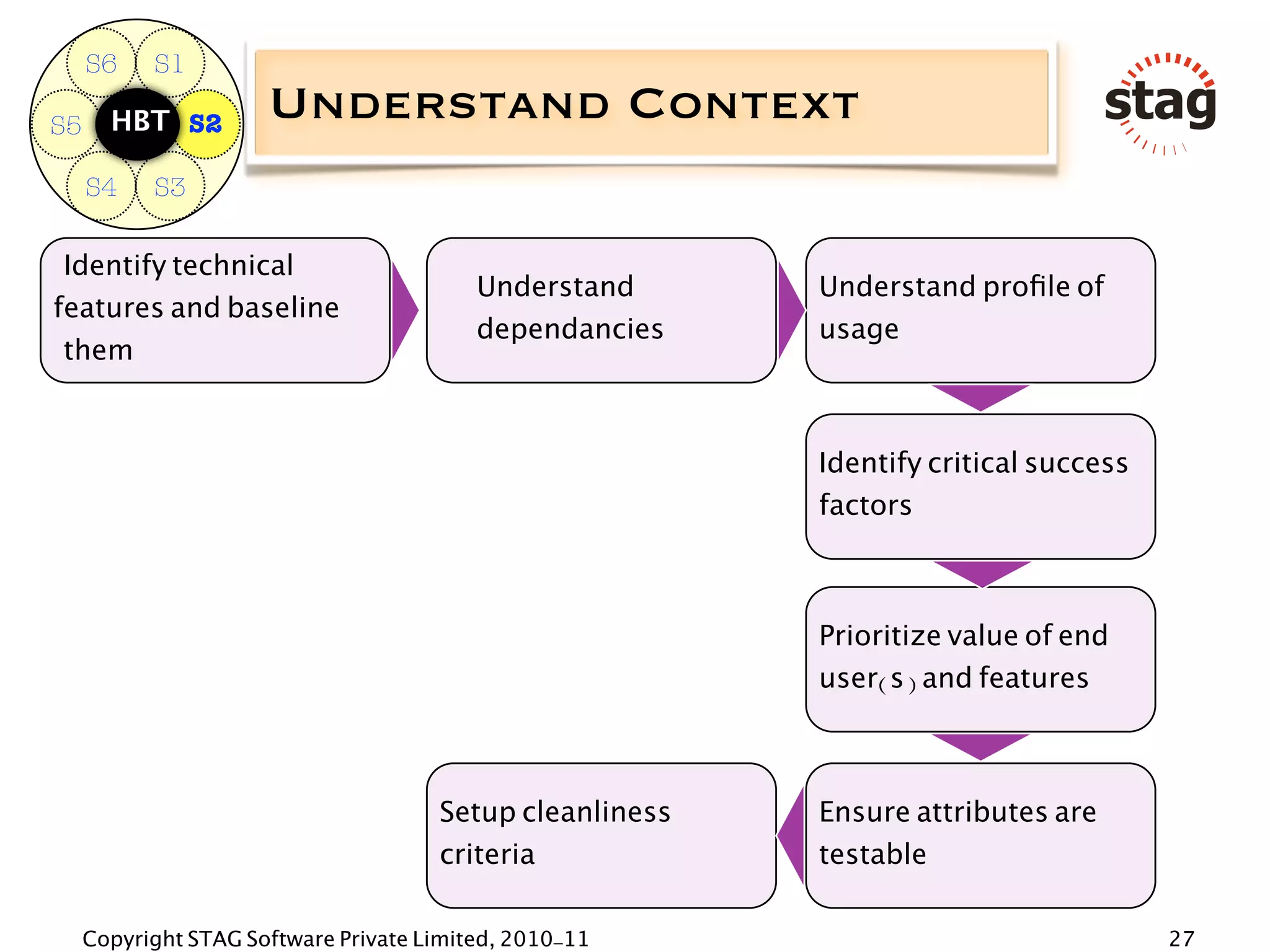

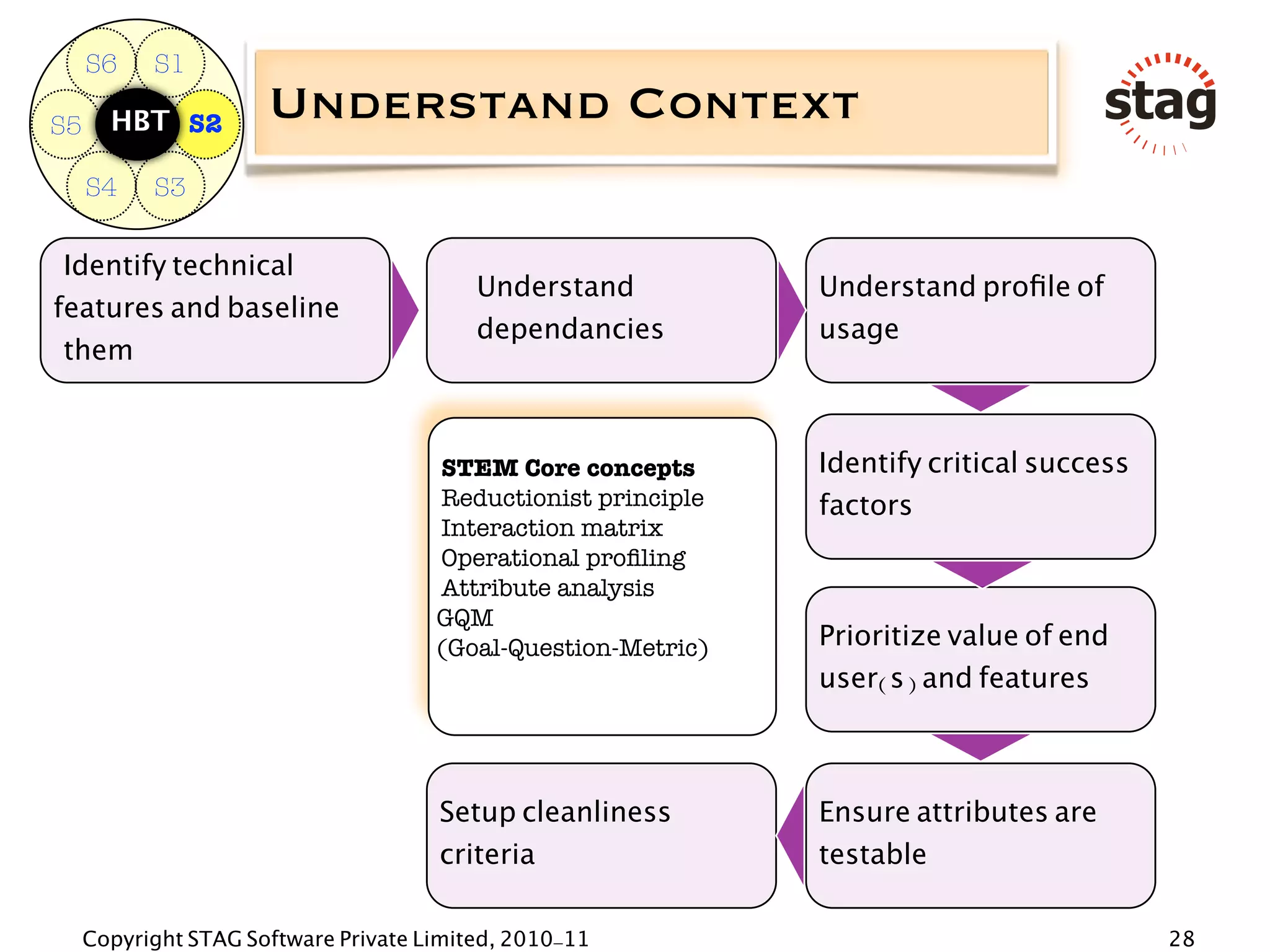

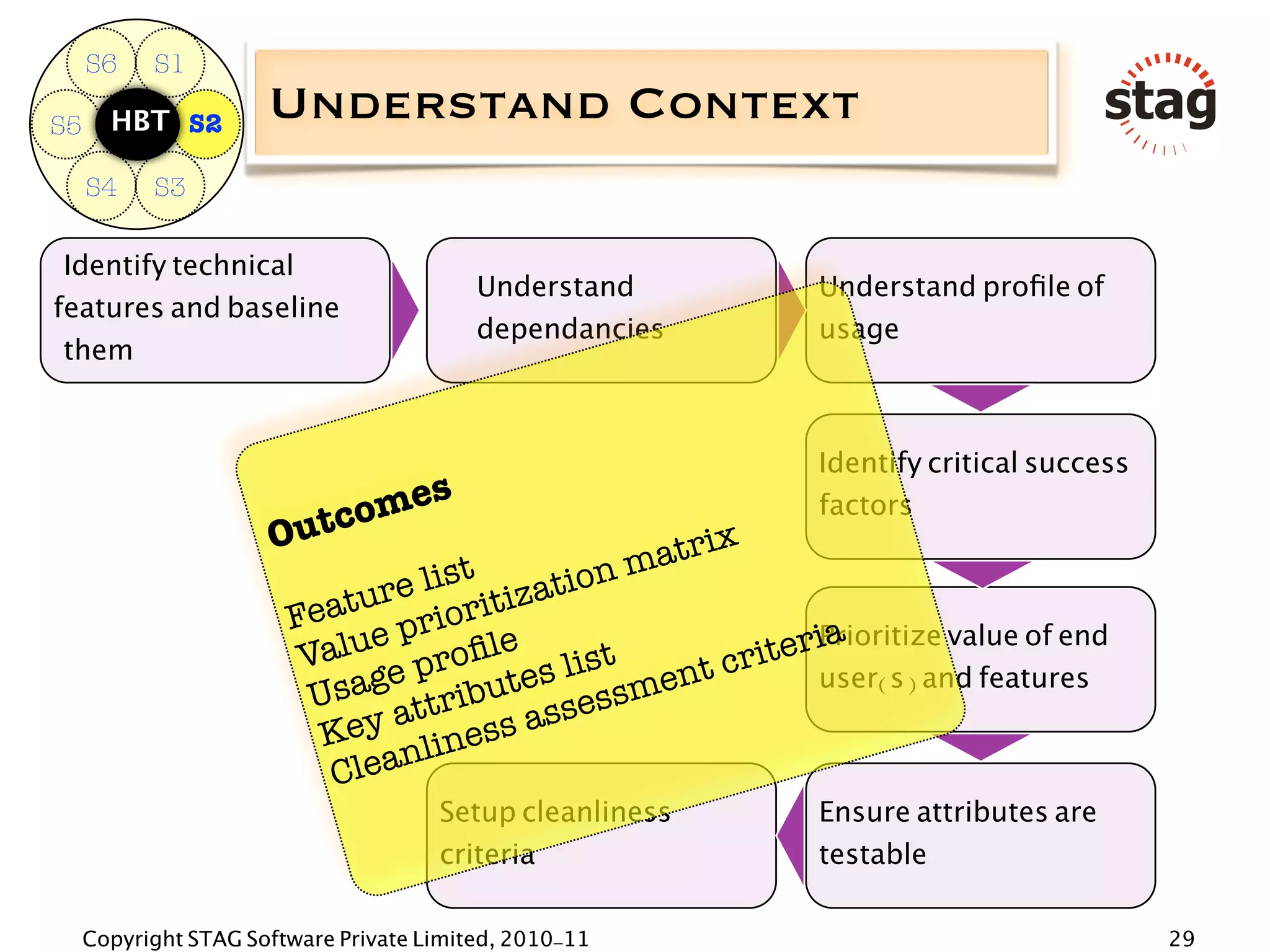

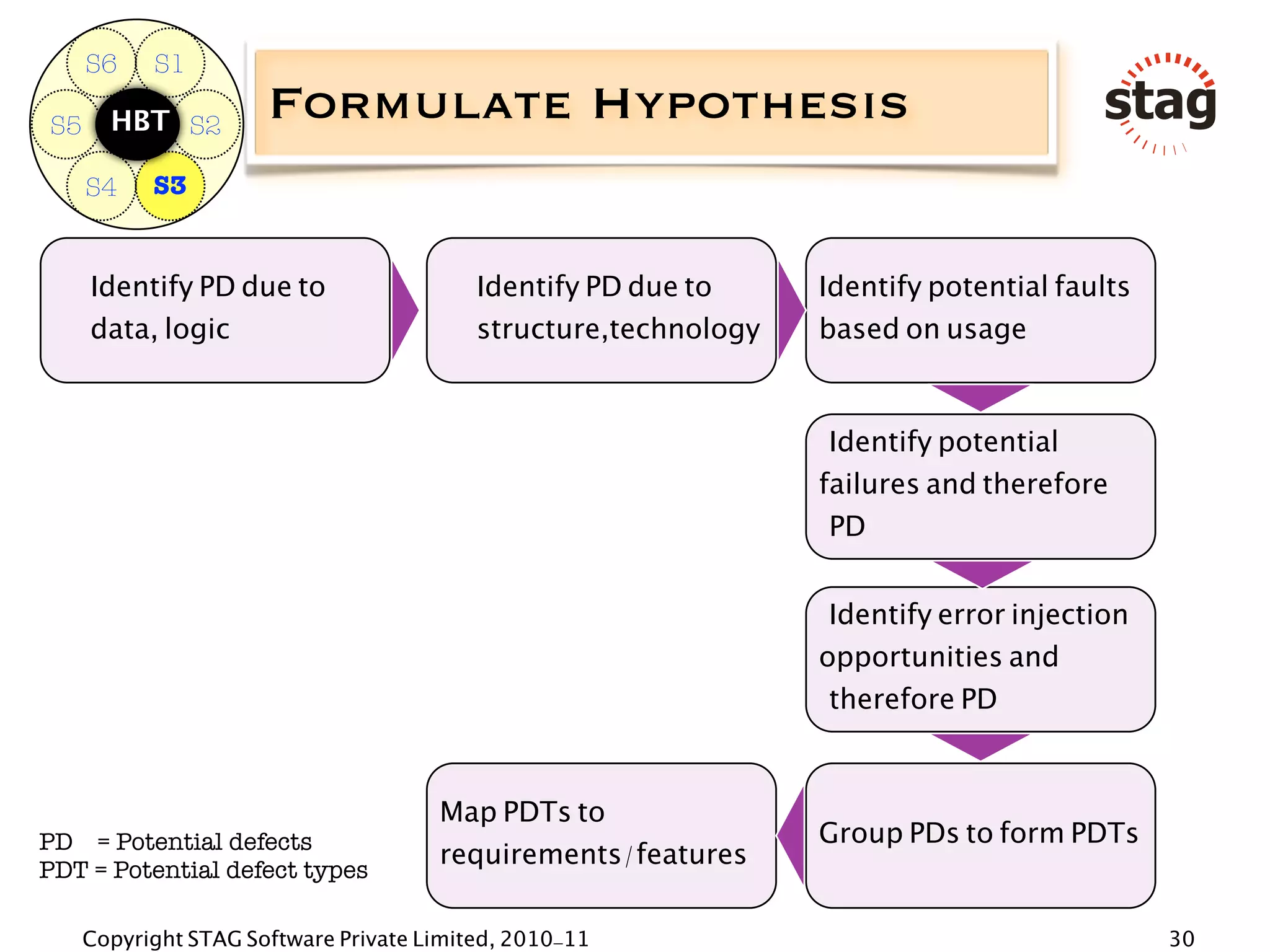

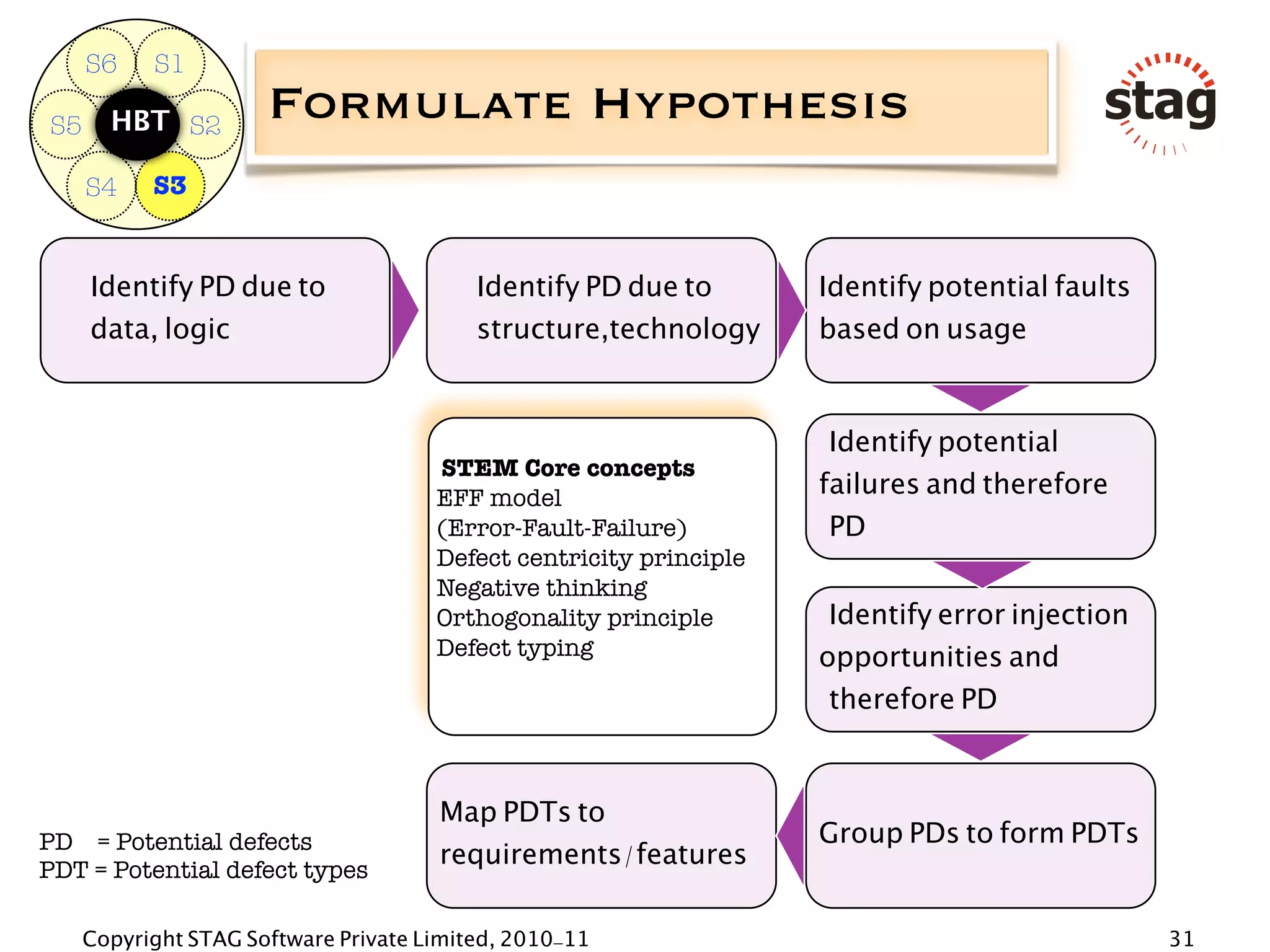

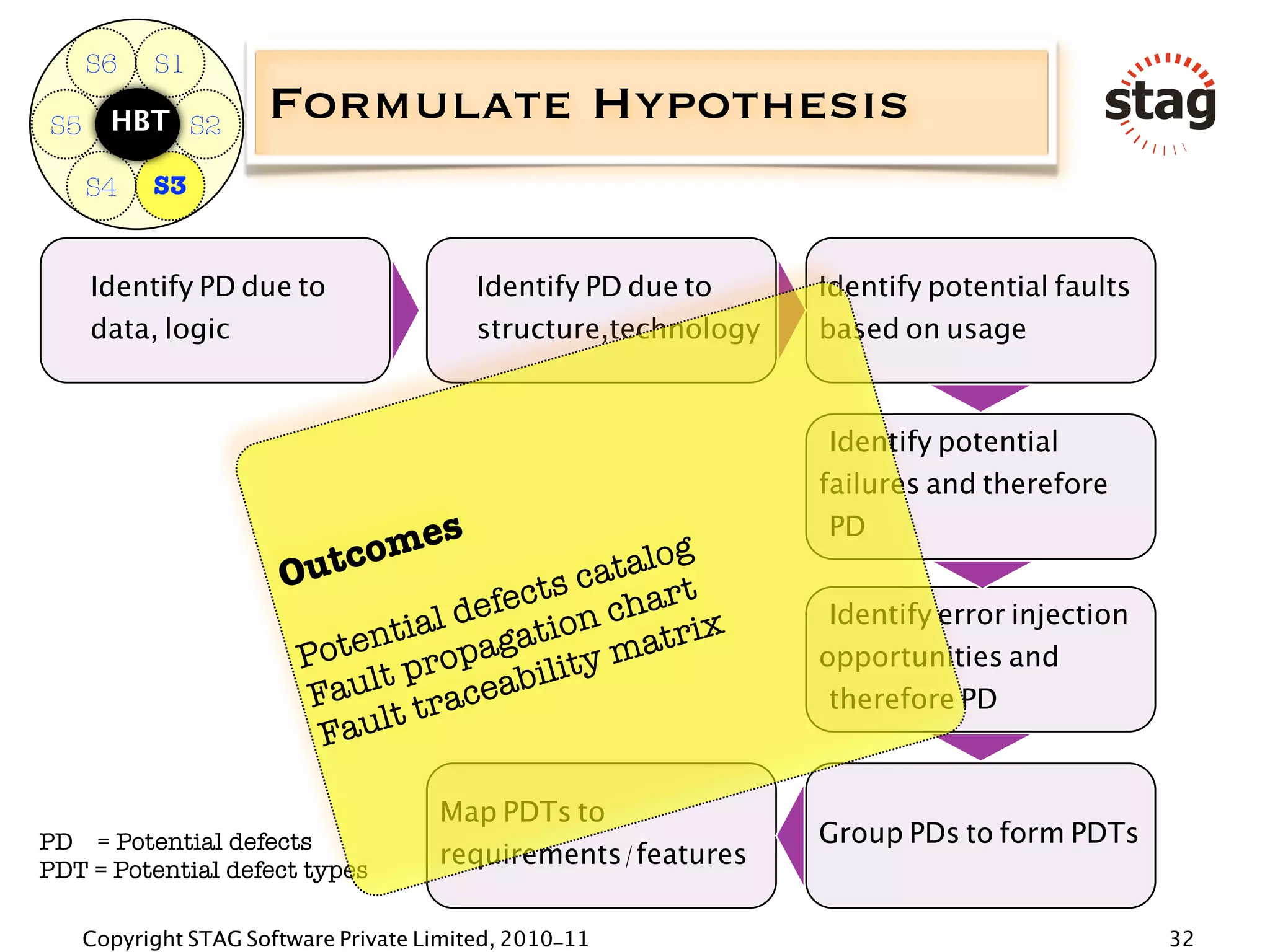

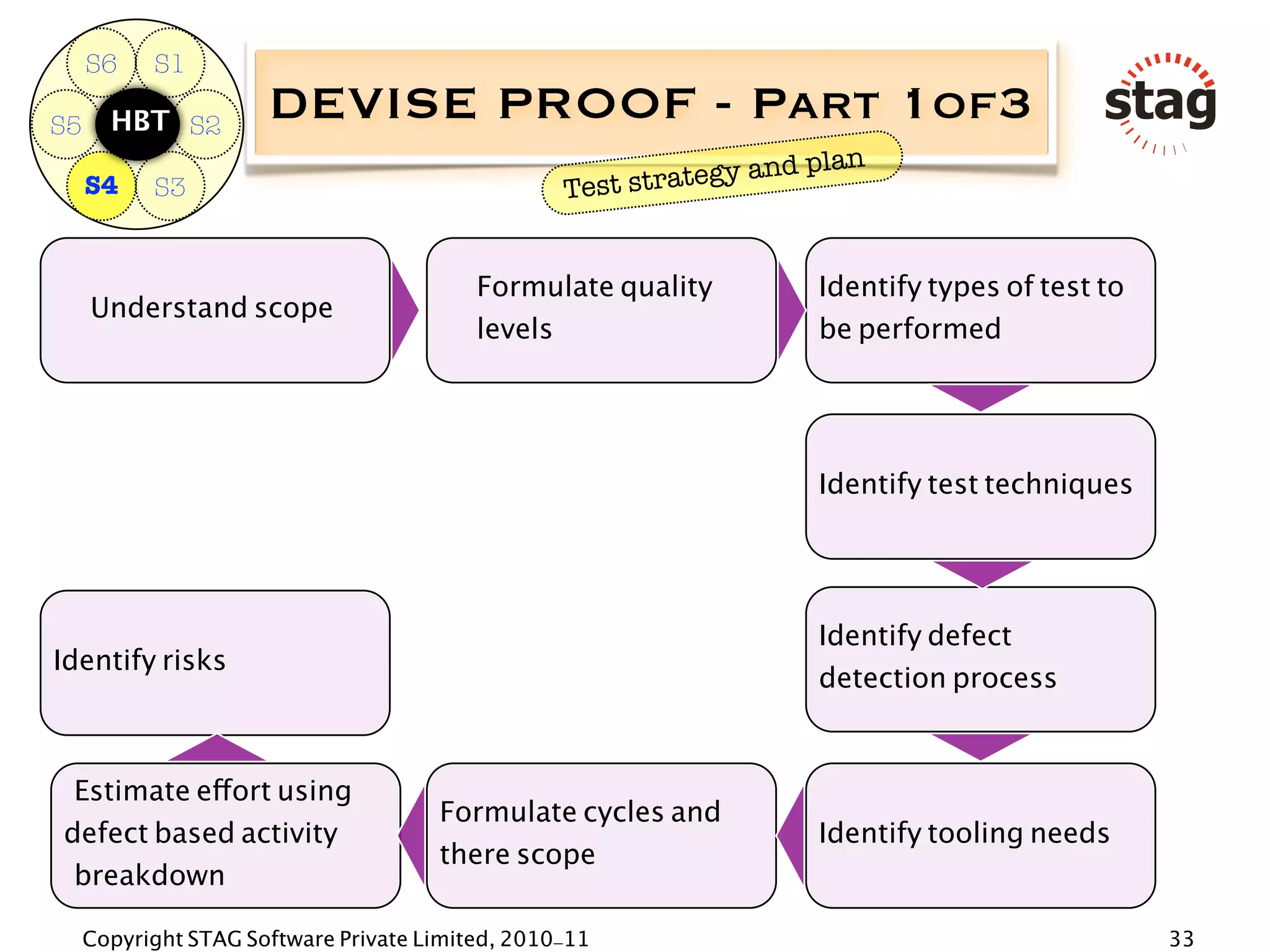

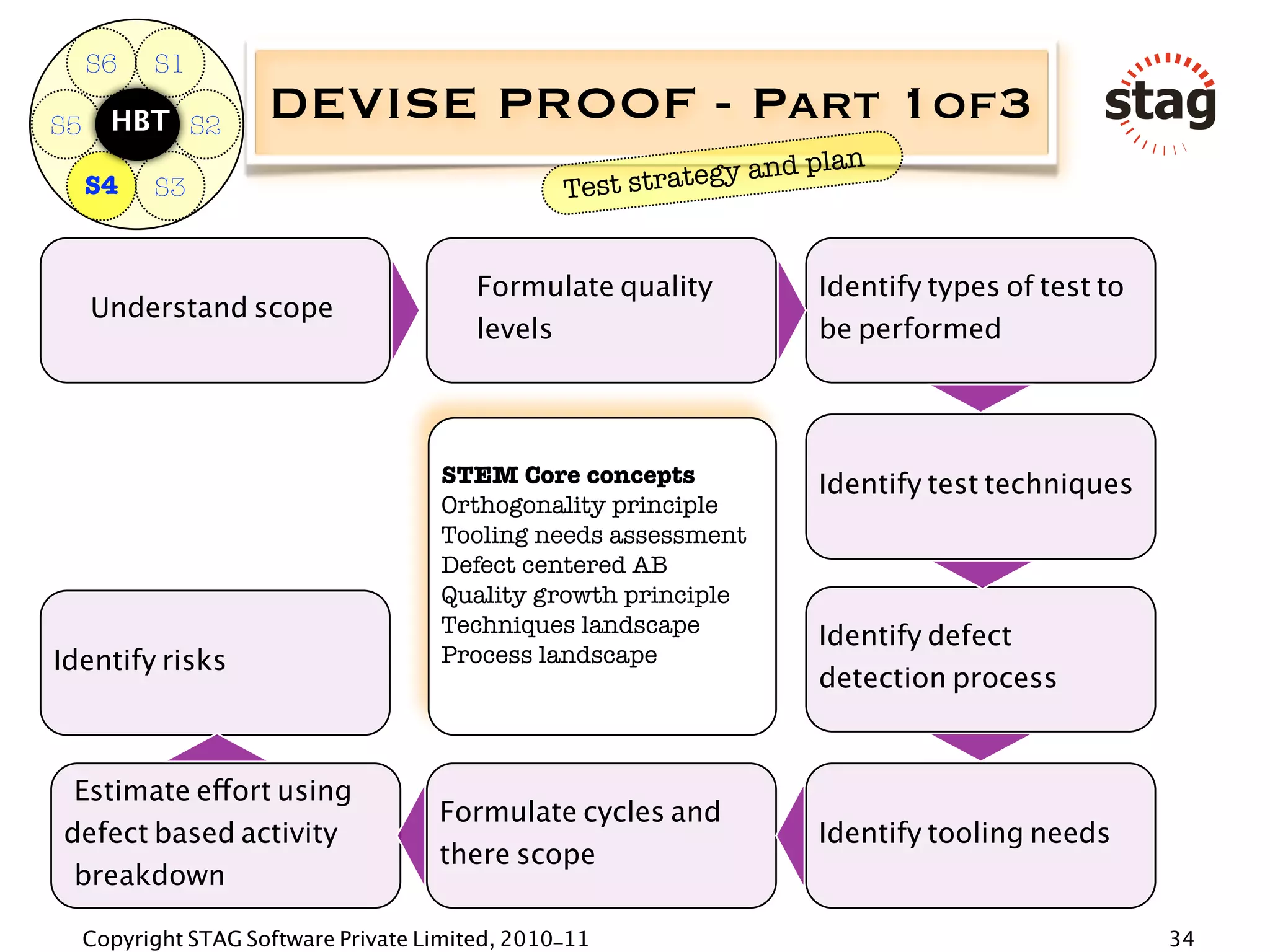

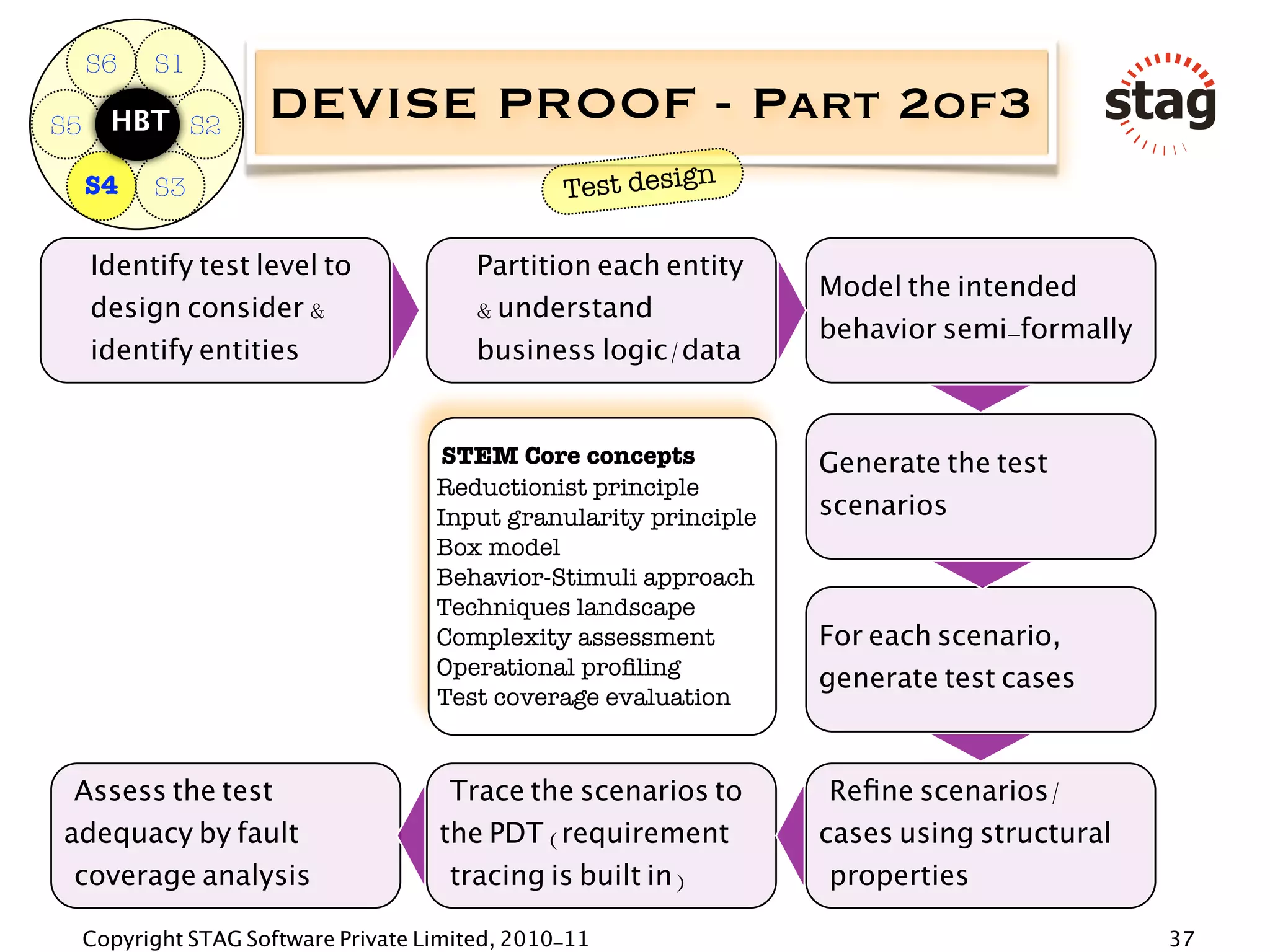

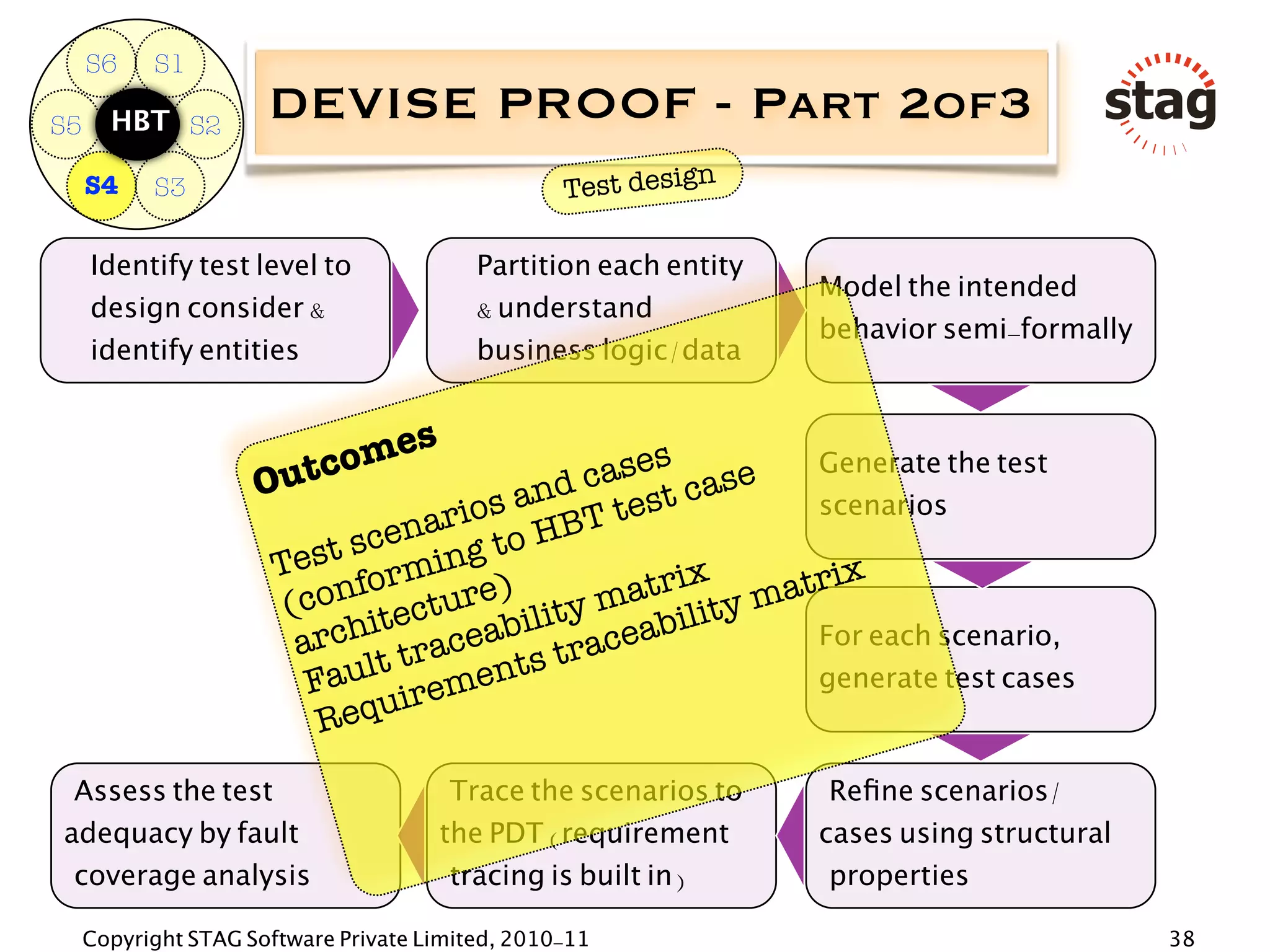

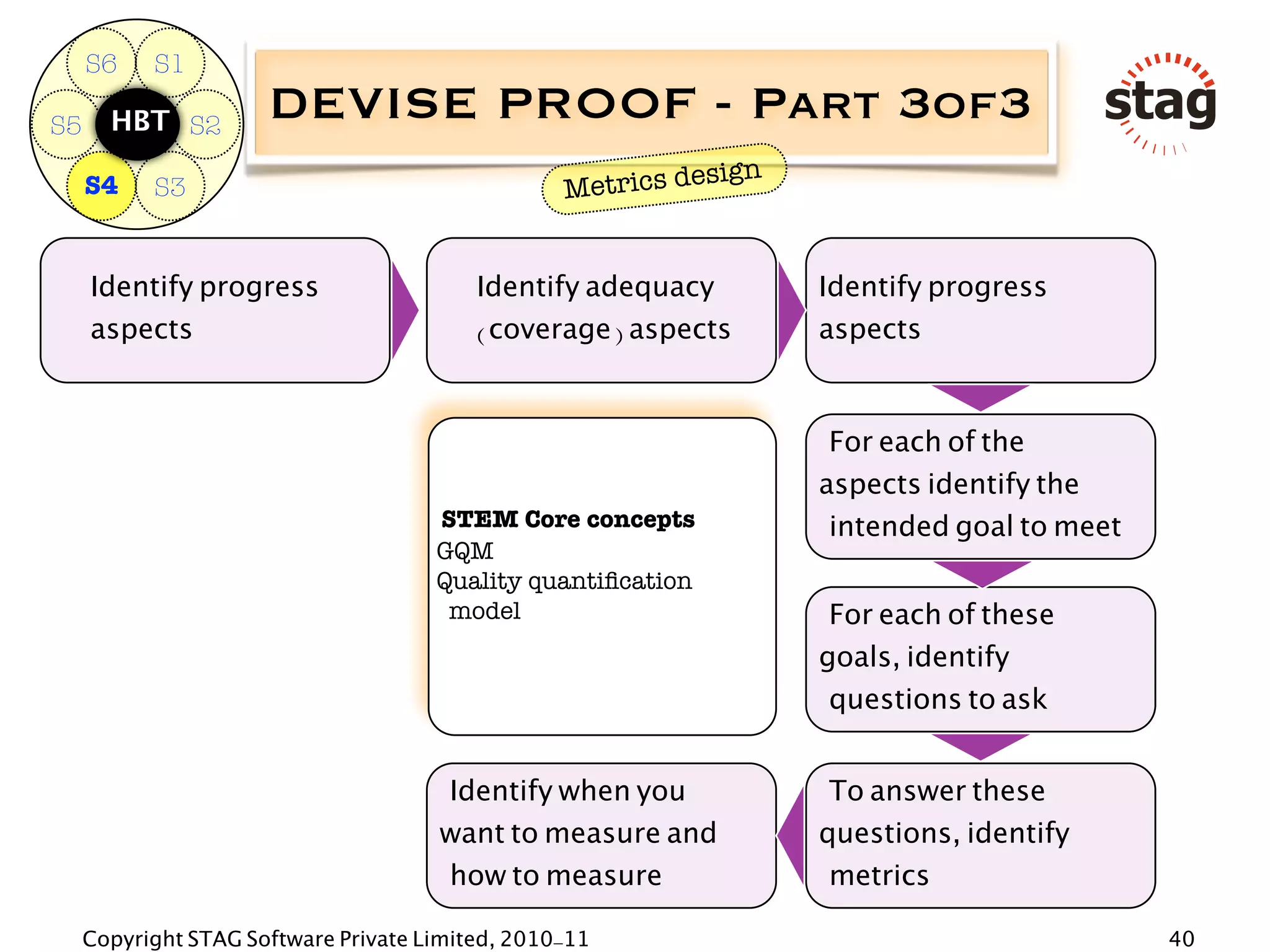

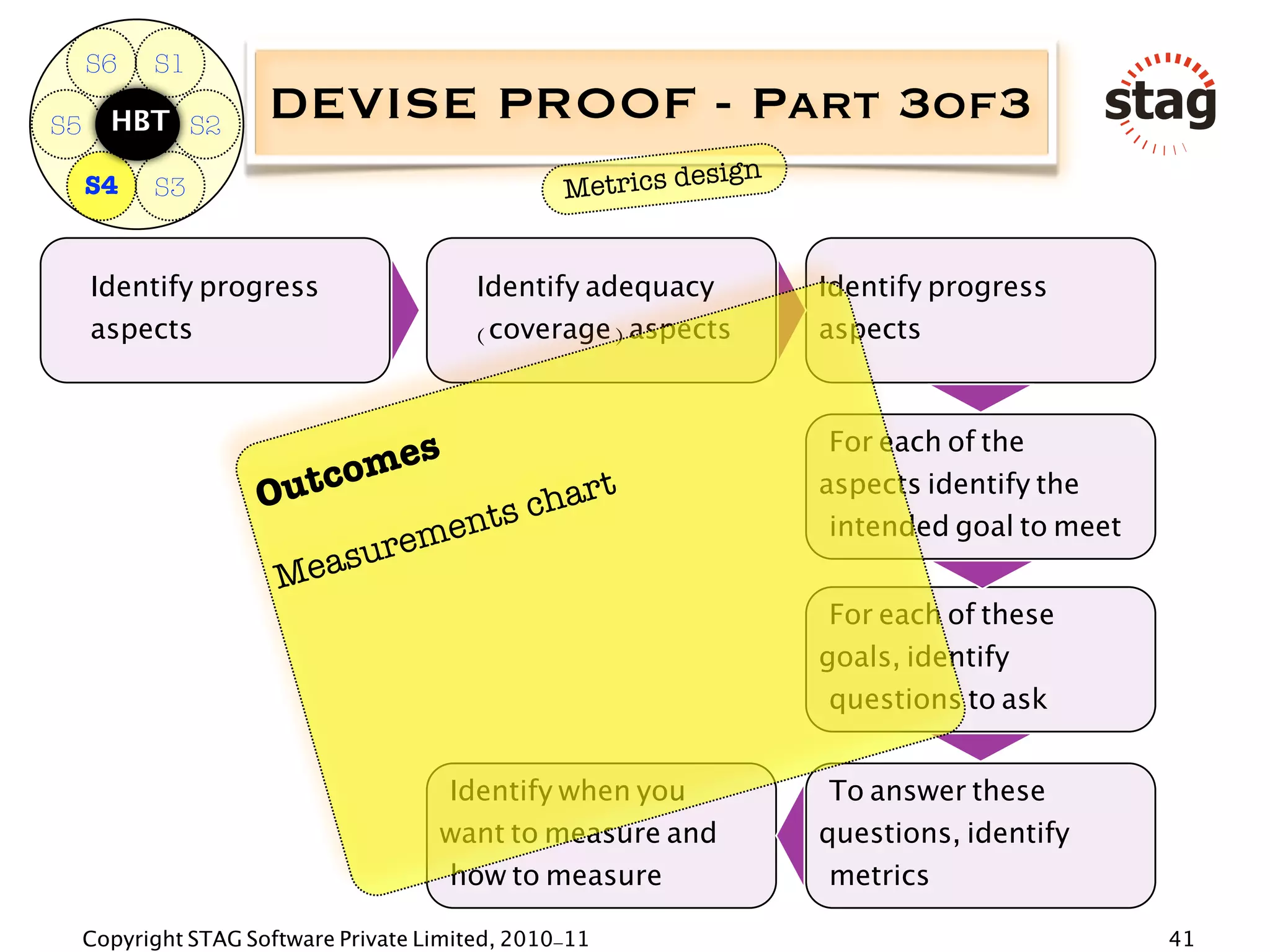

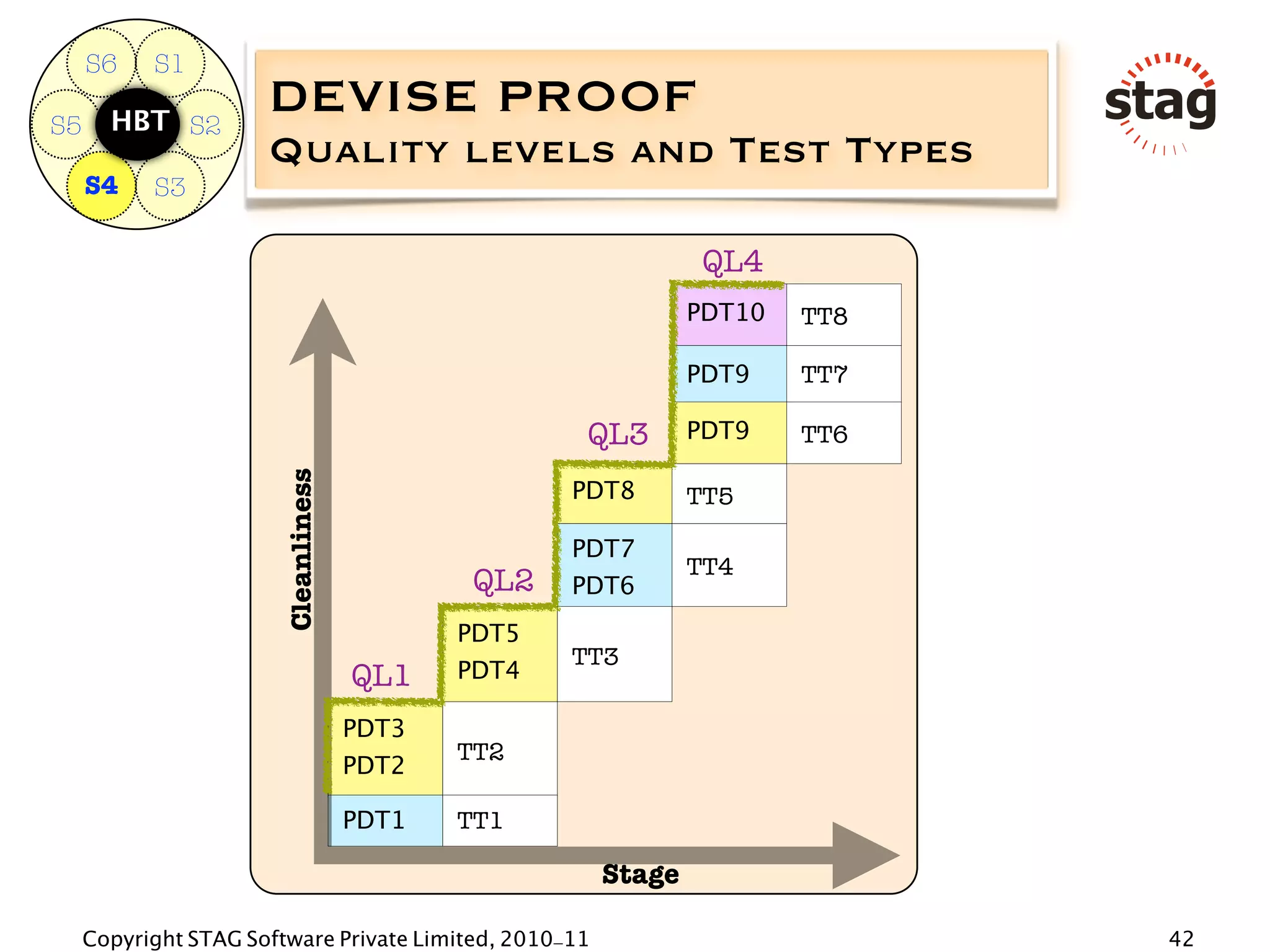

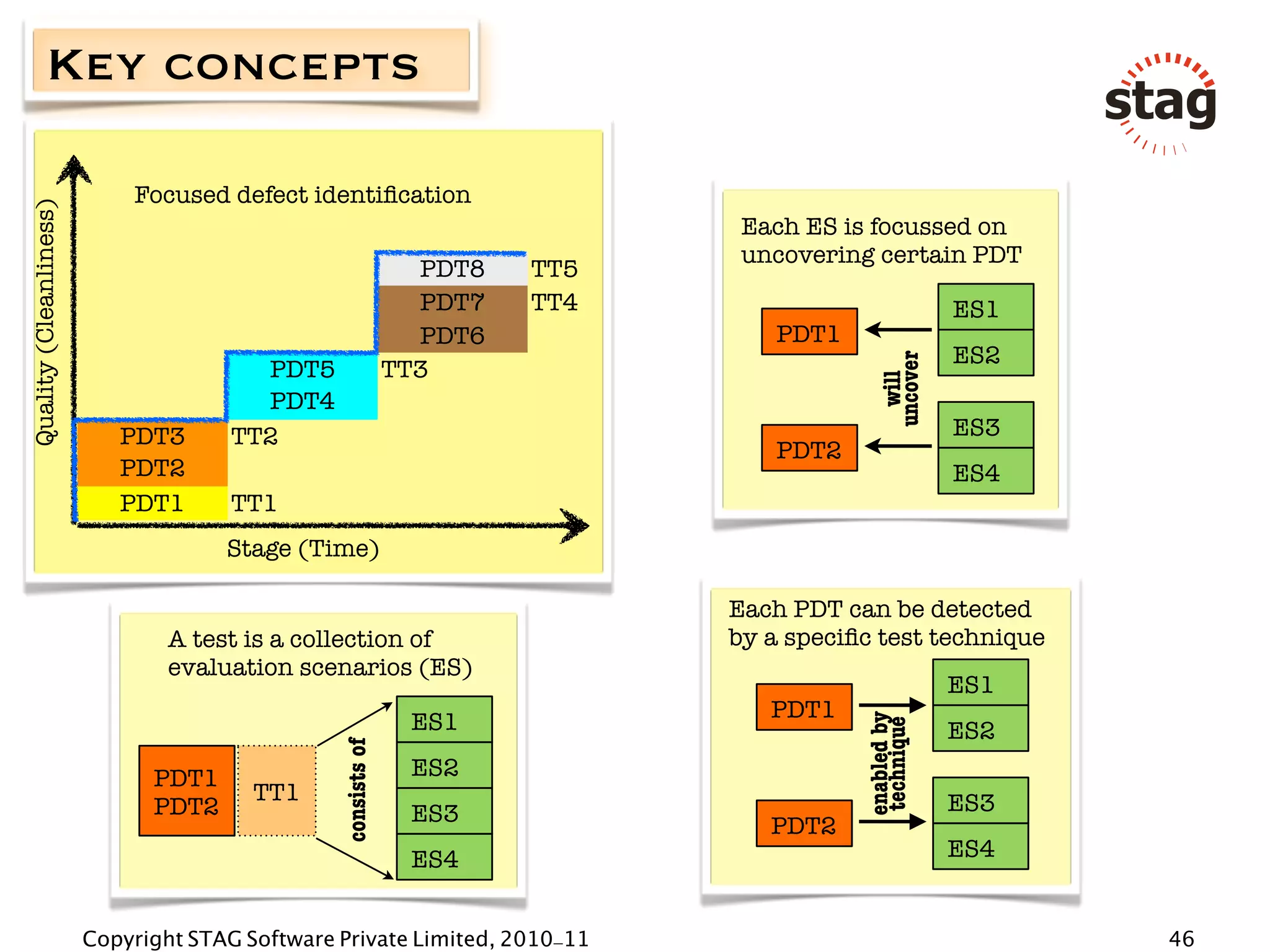

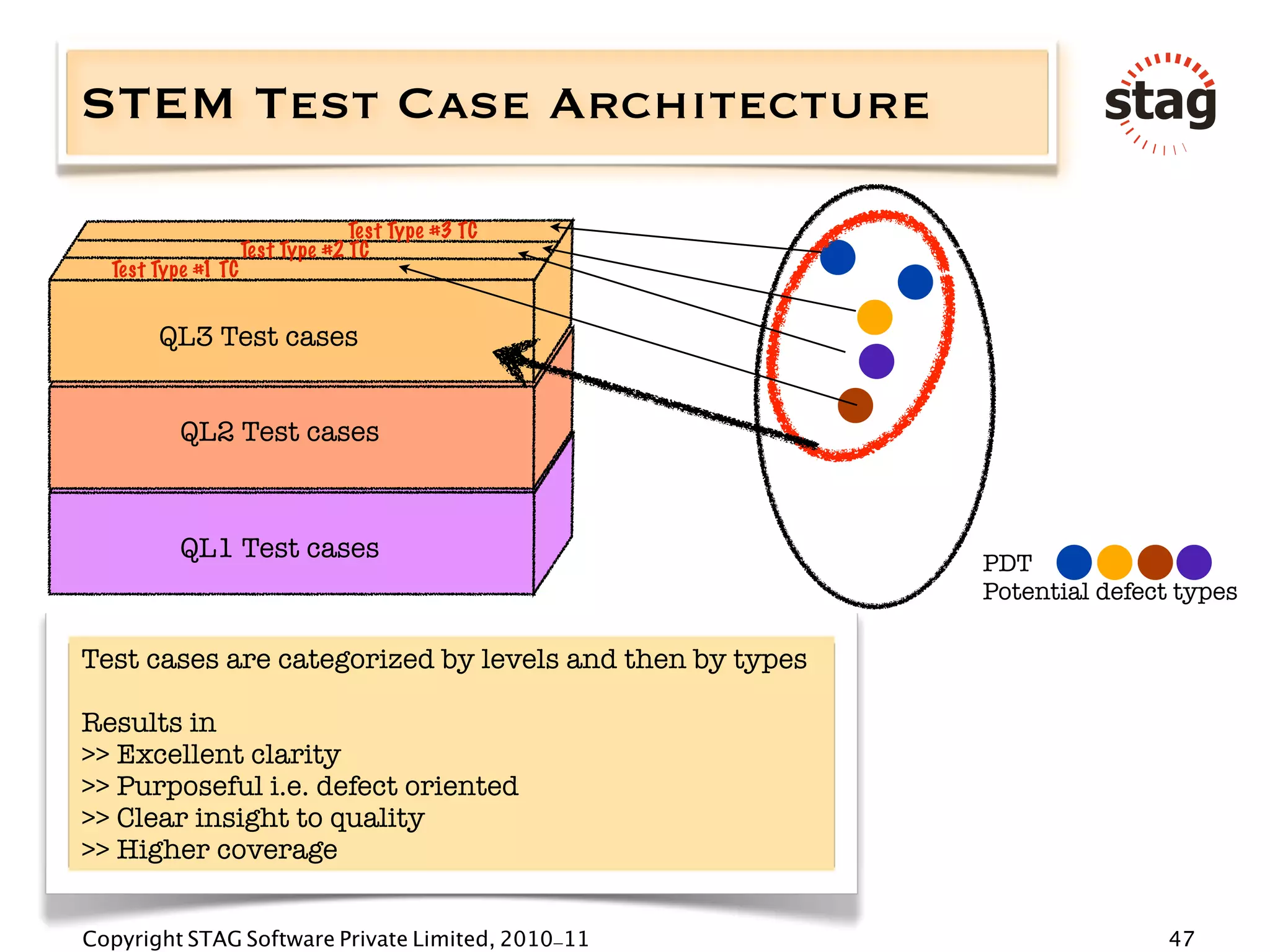

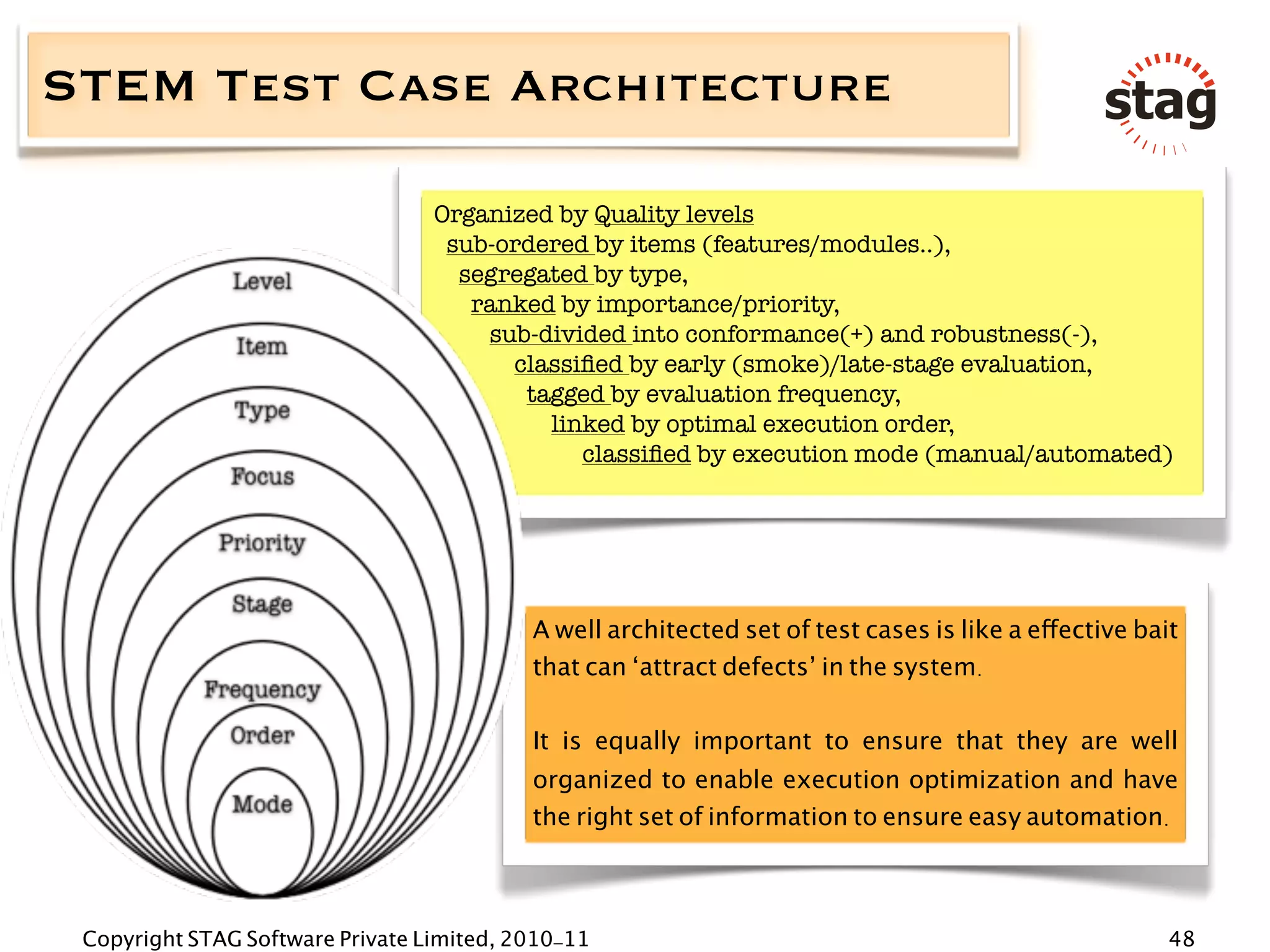

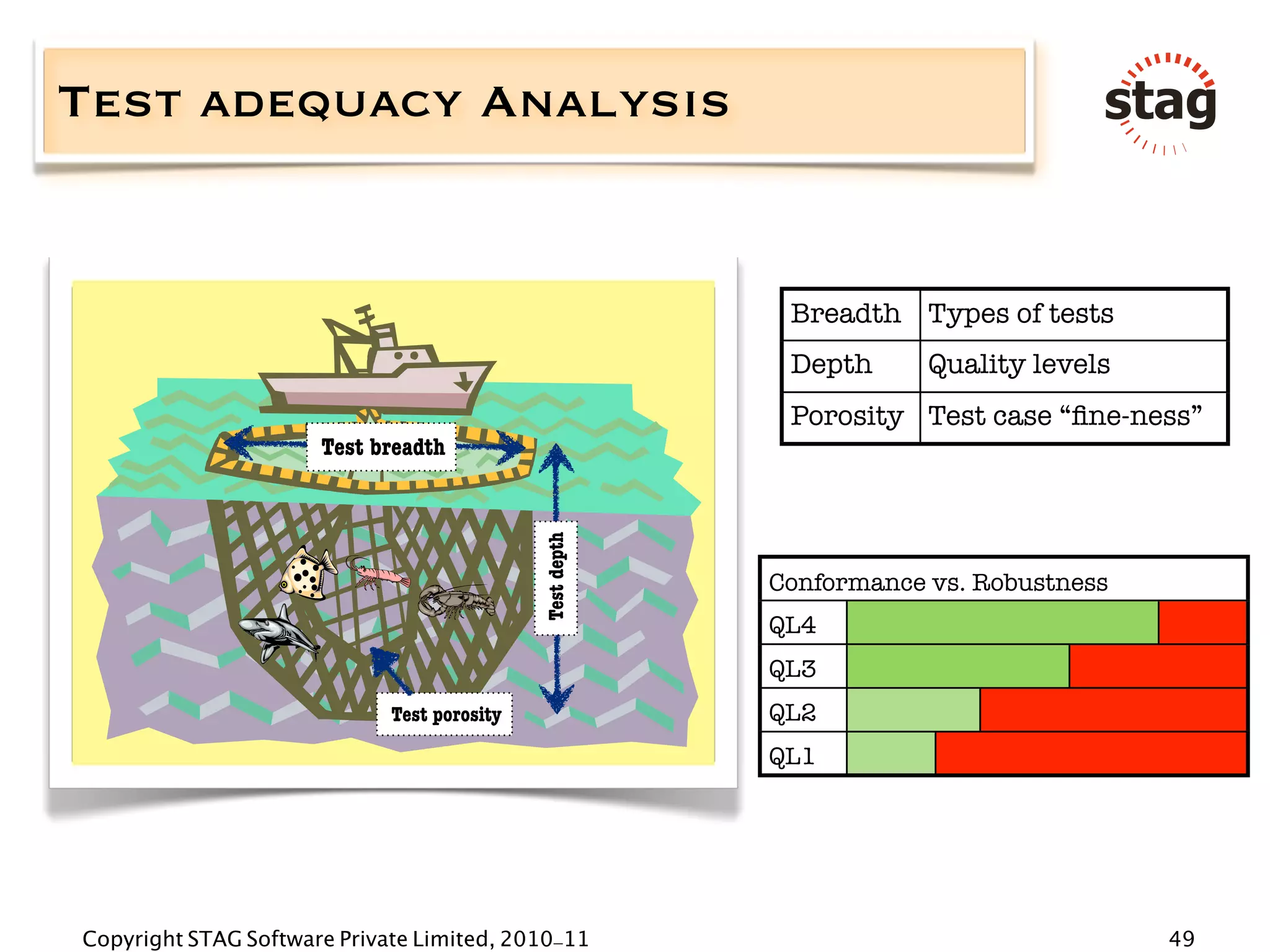

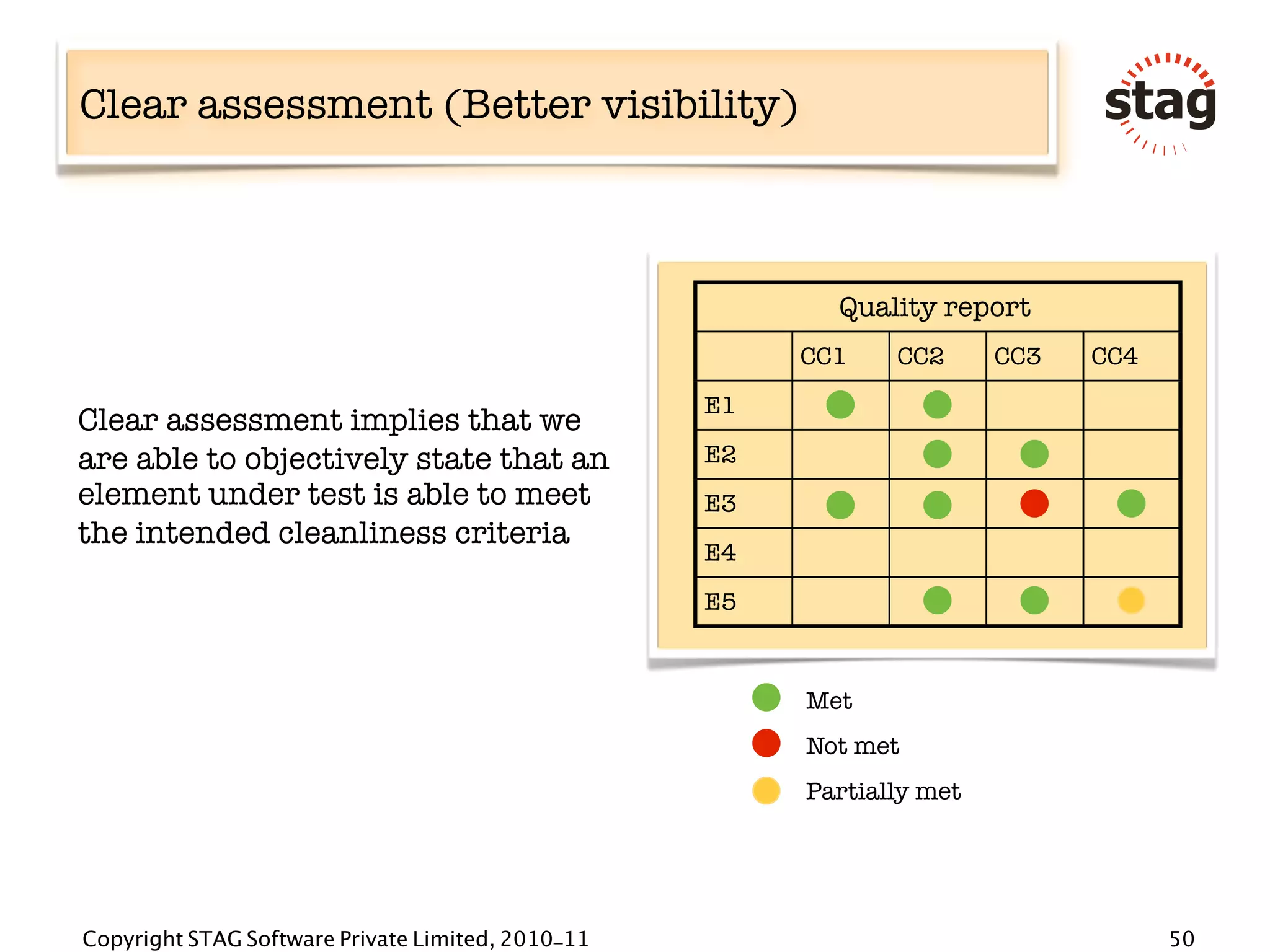

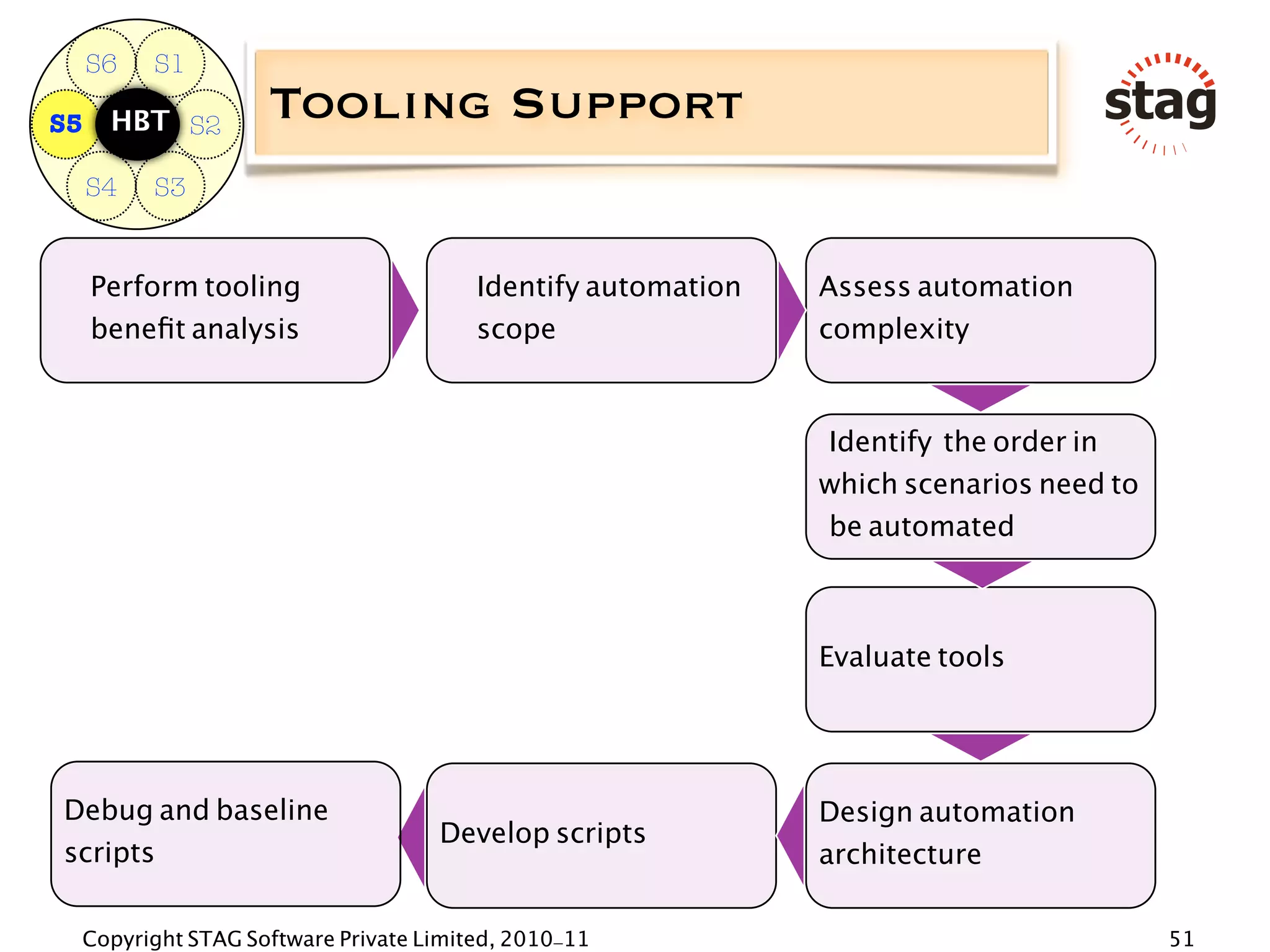

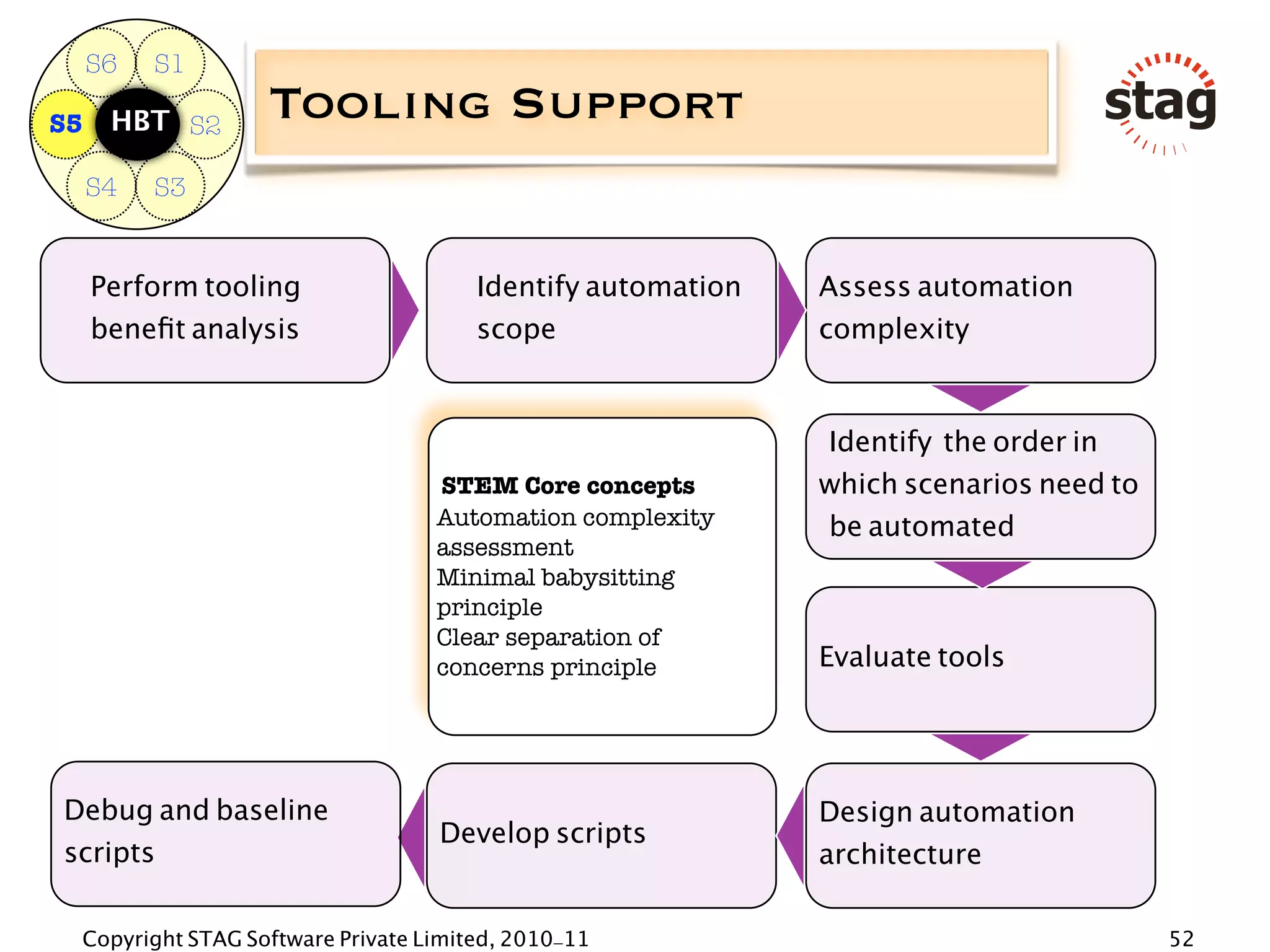

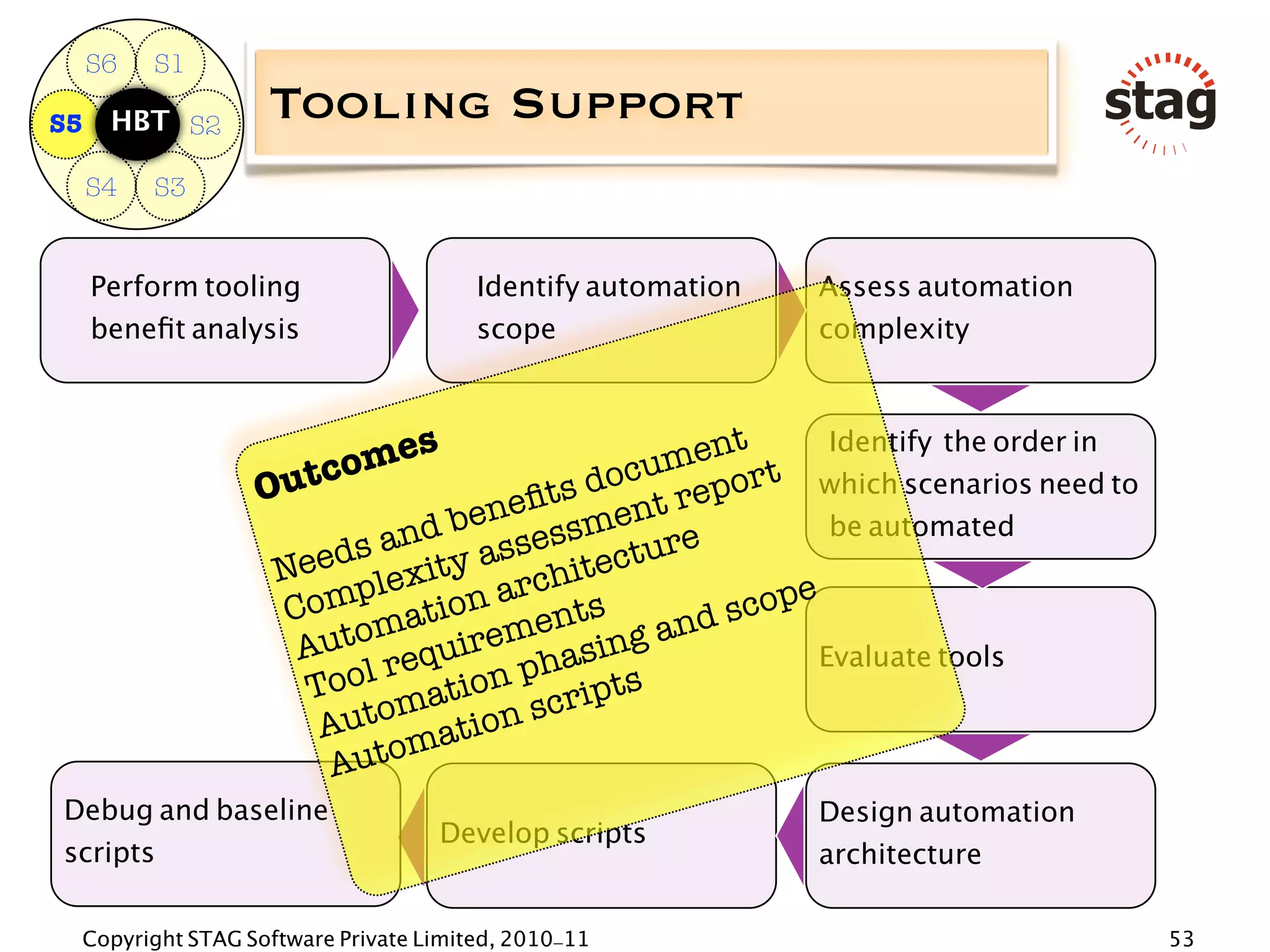

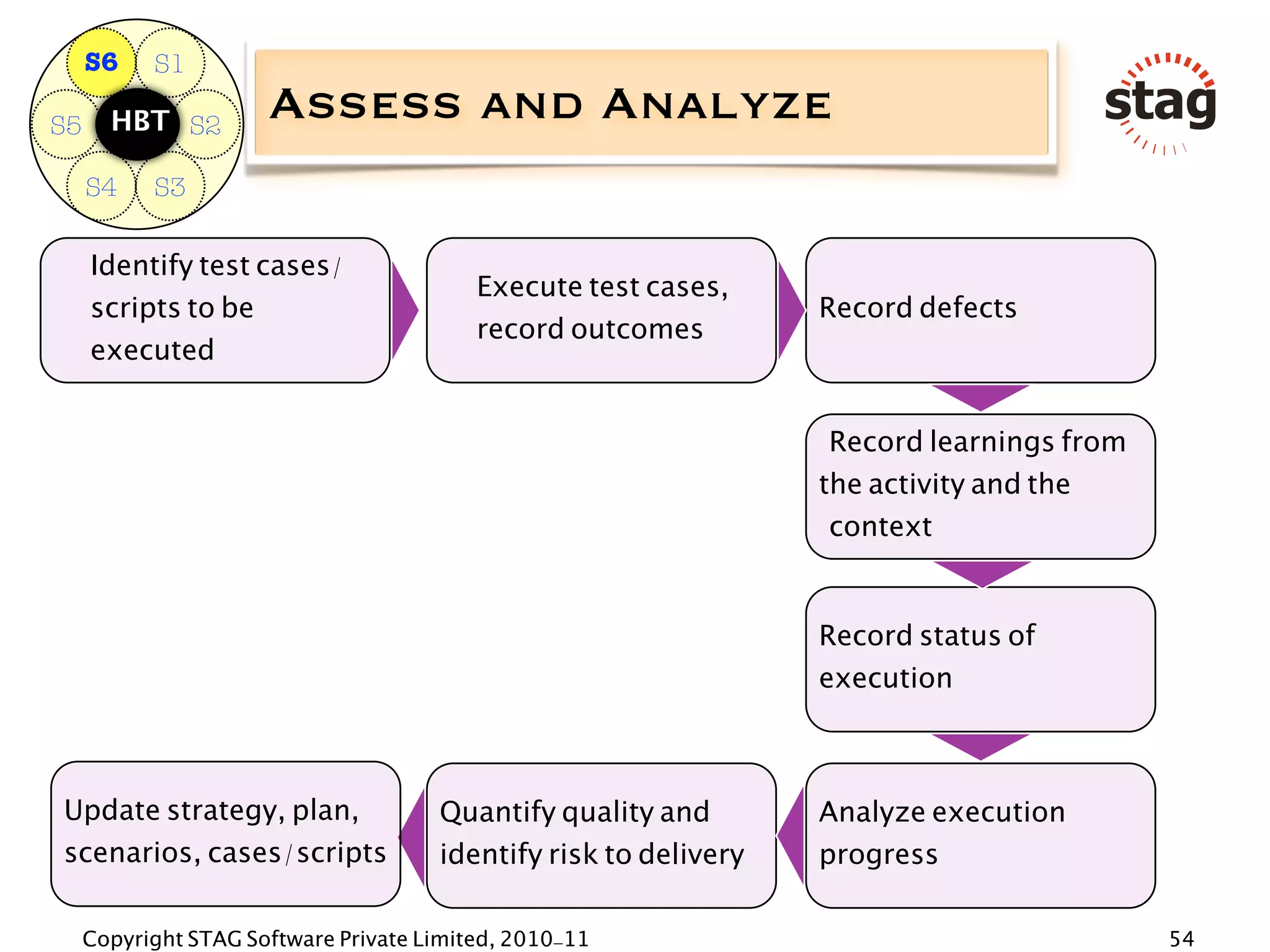

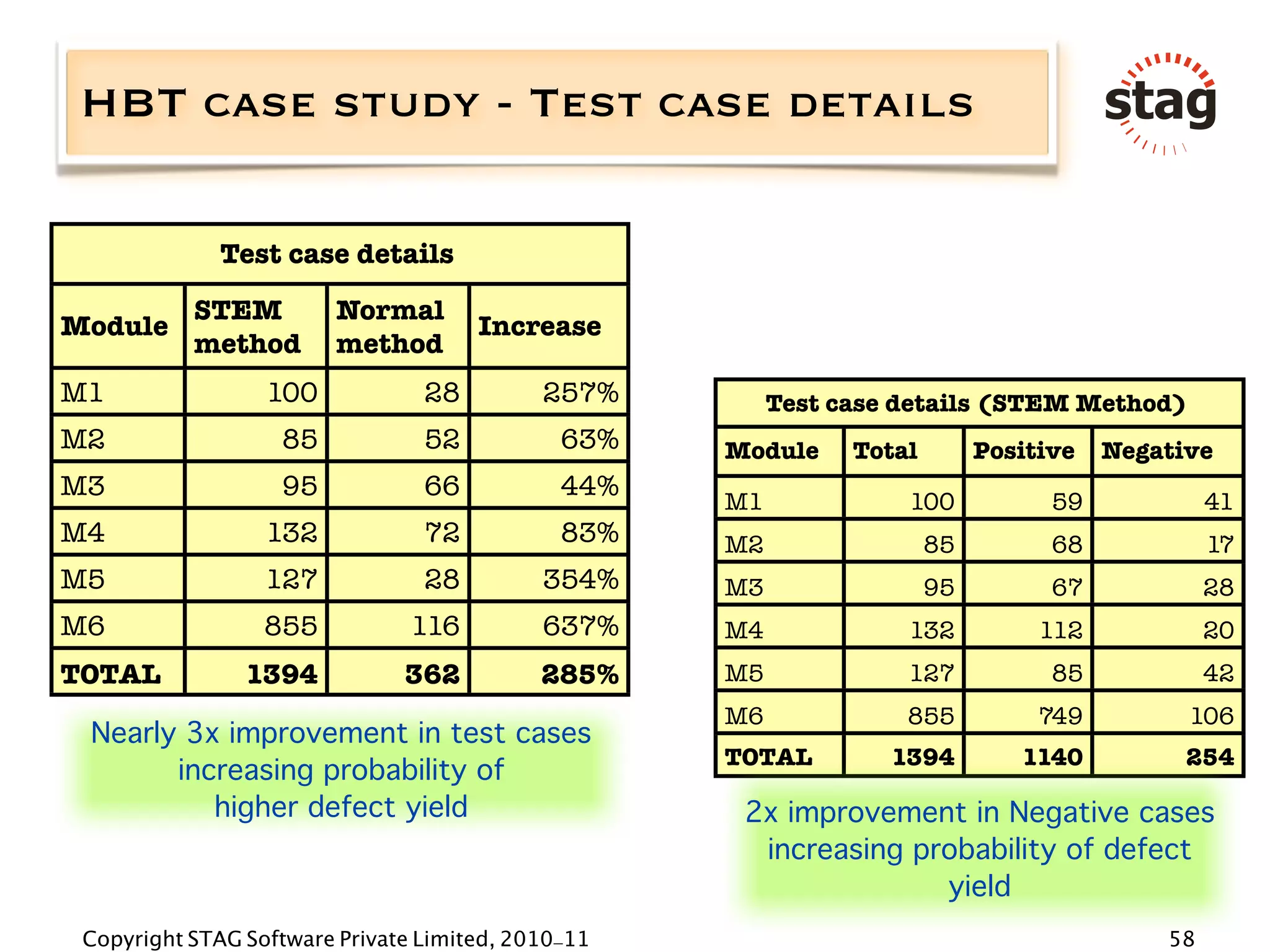

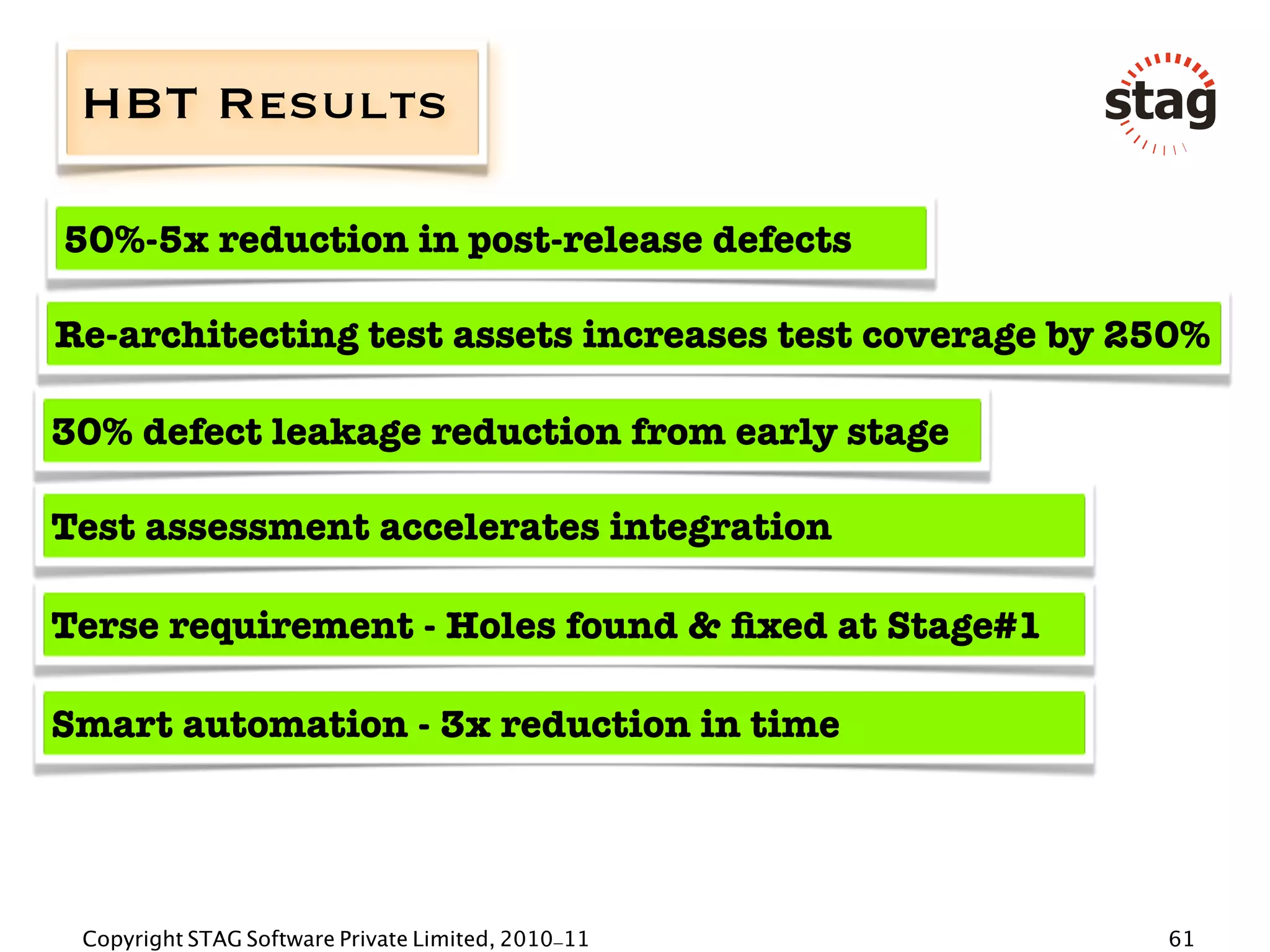

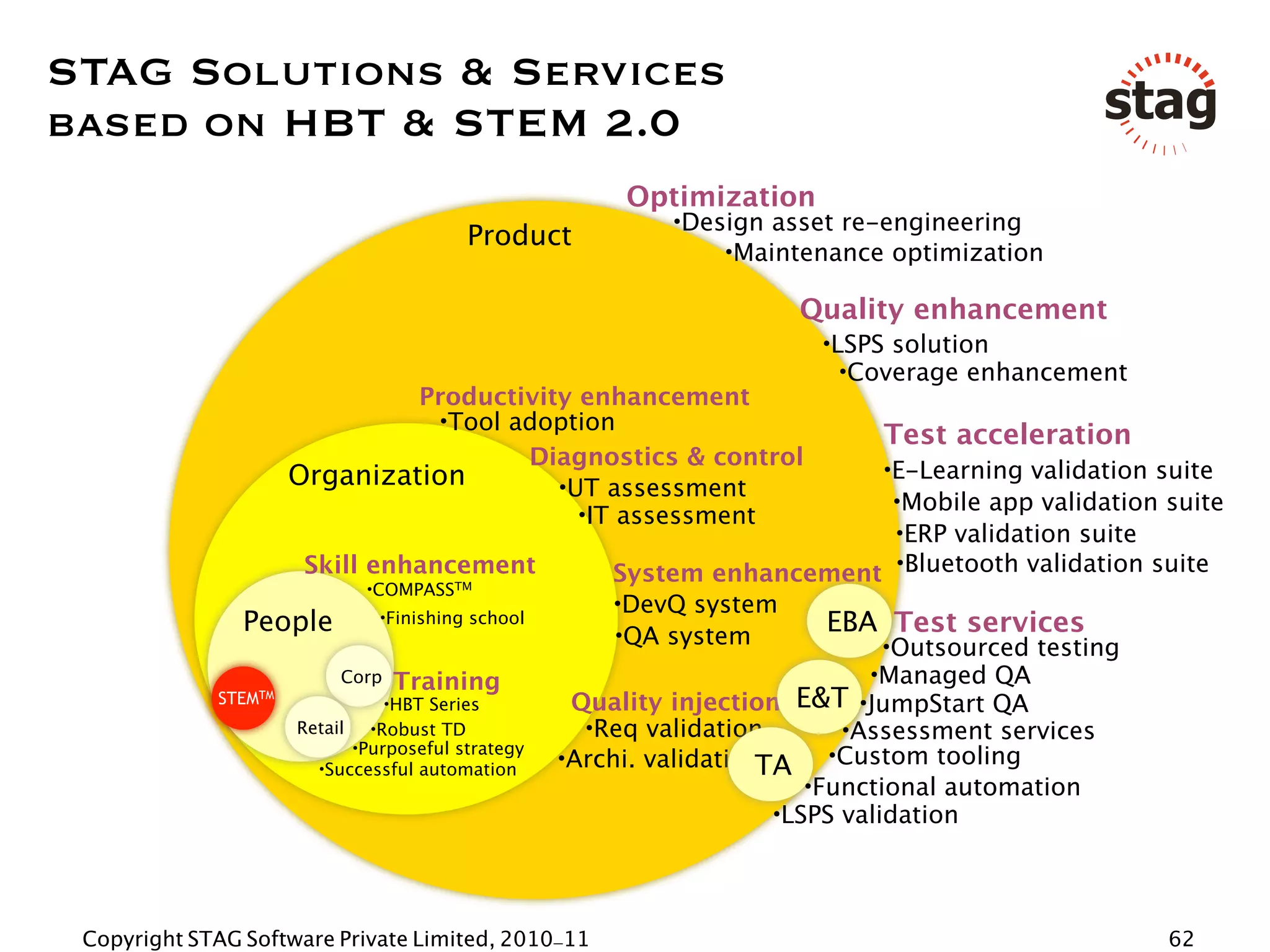

The document introduces Hypothesis Based Testing (HBT), a goal-focused methodology for software validation. HBT consists of six stages of "doing," powered by eight thinking disciplines. It emphasizes understanding expectations and context first, then formulating hypotheses about potential defects early so that testing can prove those defects will not exist. HBT is centered on the goal of delivering clean software rather than on activities. It is powered by a defect detection technique called STEM (STAG Test Engineering Method), which provides a scientific basis through 32 core concepts.