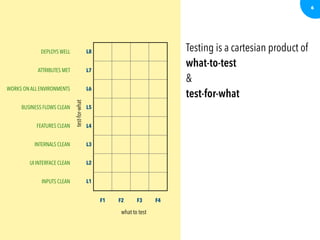

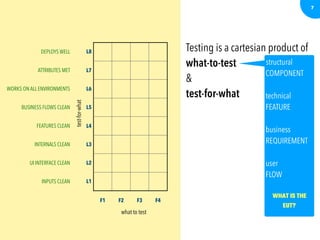

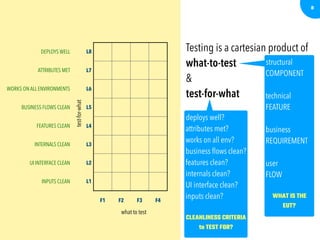

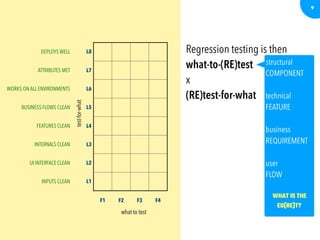

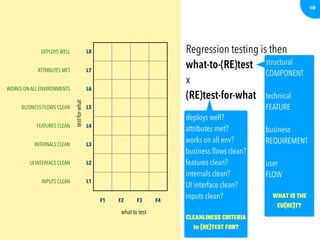

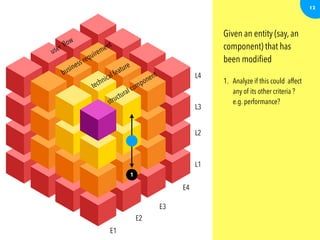

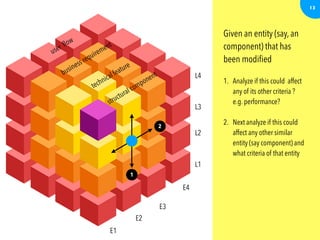

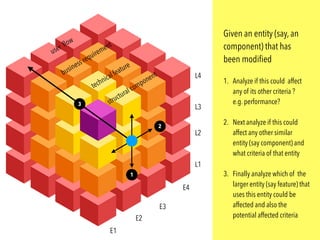

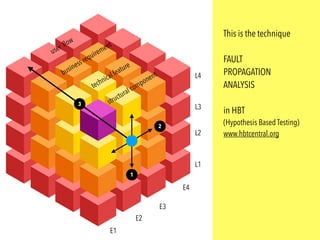

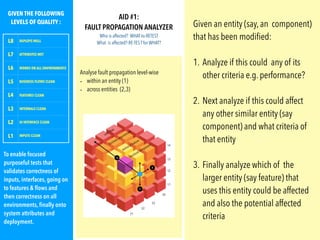

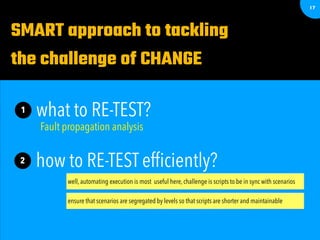

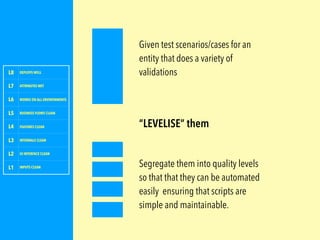

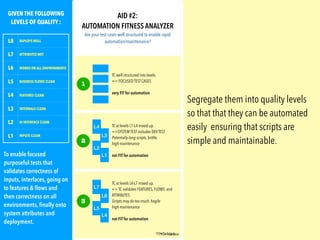

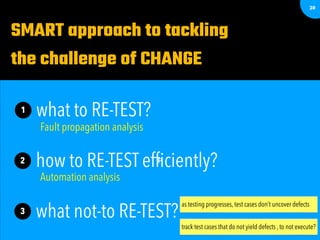

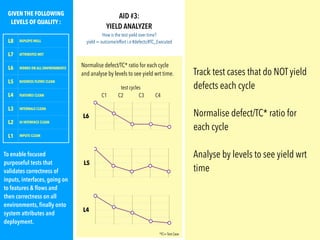

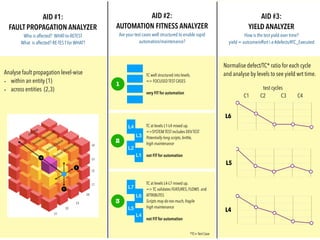

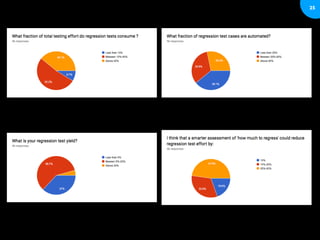

The document discusses the challenges of regression testing in software development and emphasizes that re-testing must be efficient enough not to slow progress. It introduces methods such as fault propagation analysis and automation fitness analysis for deciding what to re-test, how to re-test it efficiently, and what not to re-test. The goal is for testing to validate software correctness effectively while keeping the costs and complexities of deploying new features manageable.