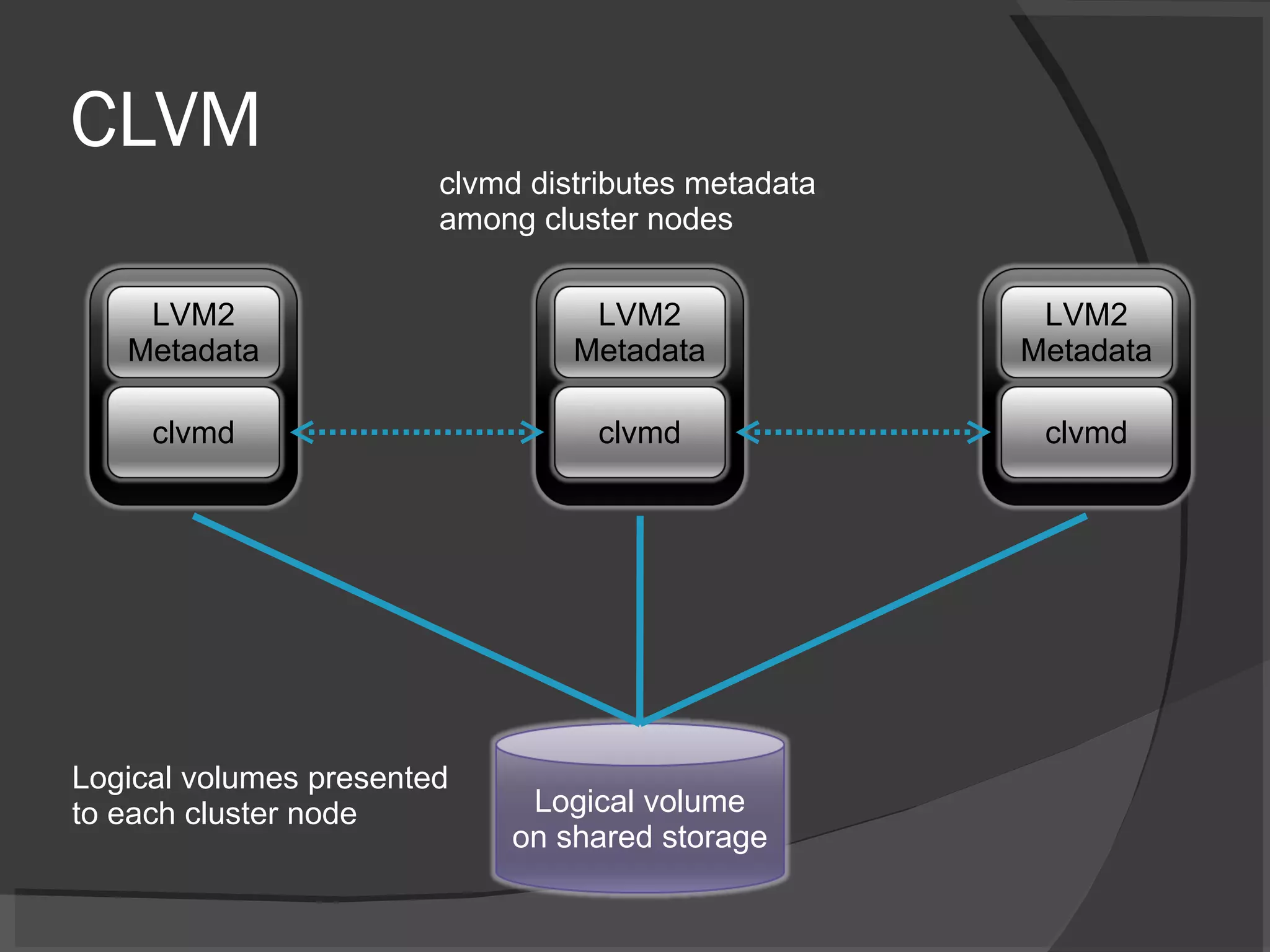

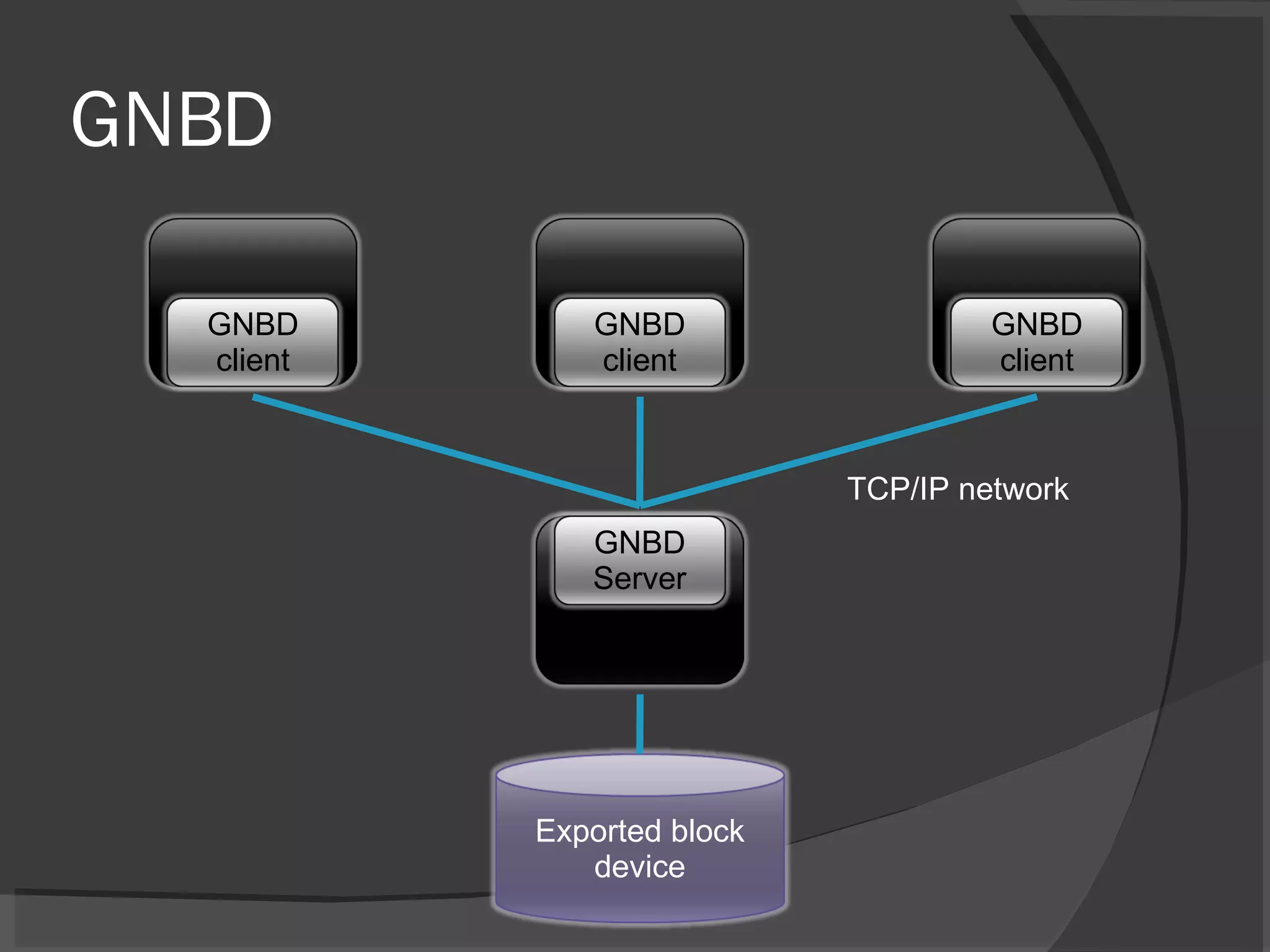

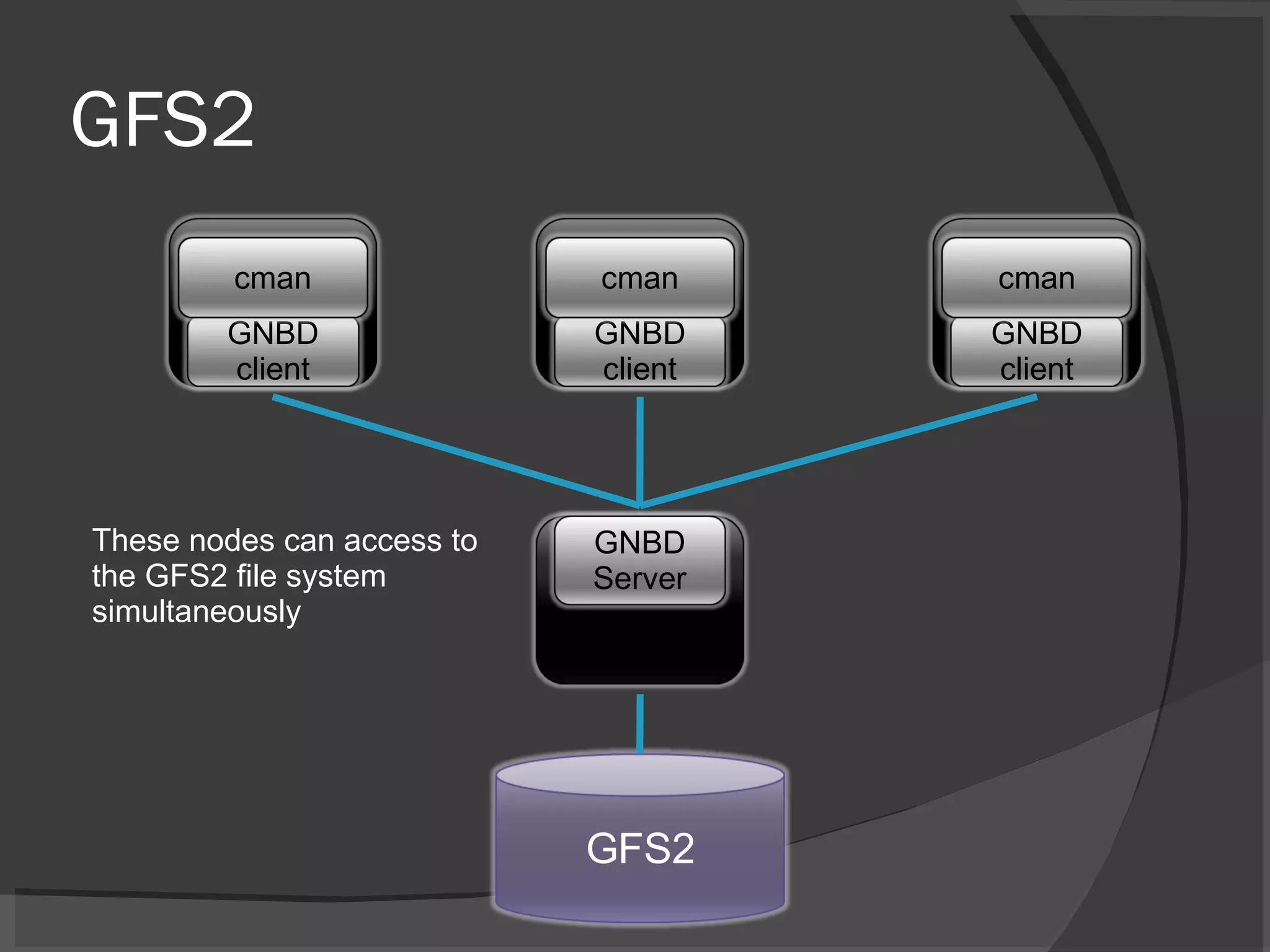

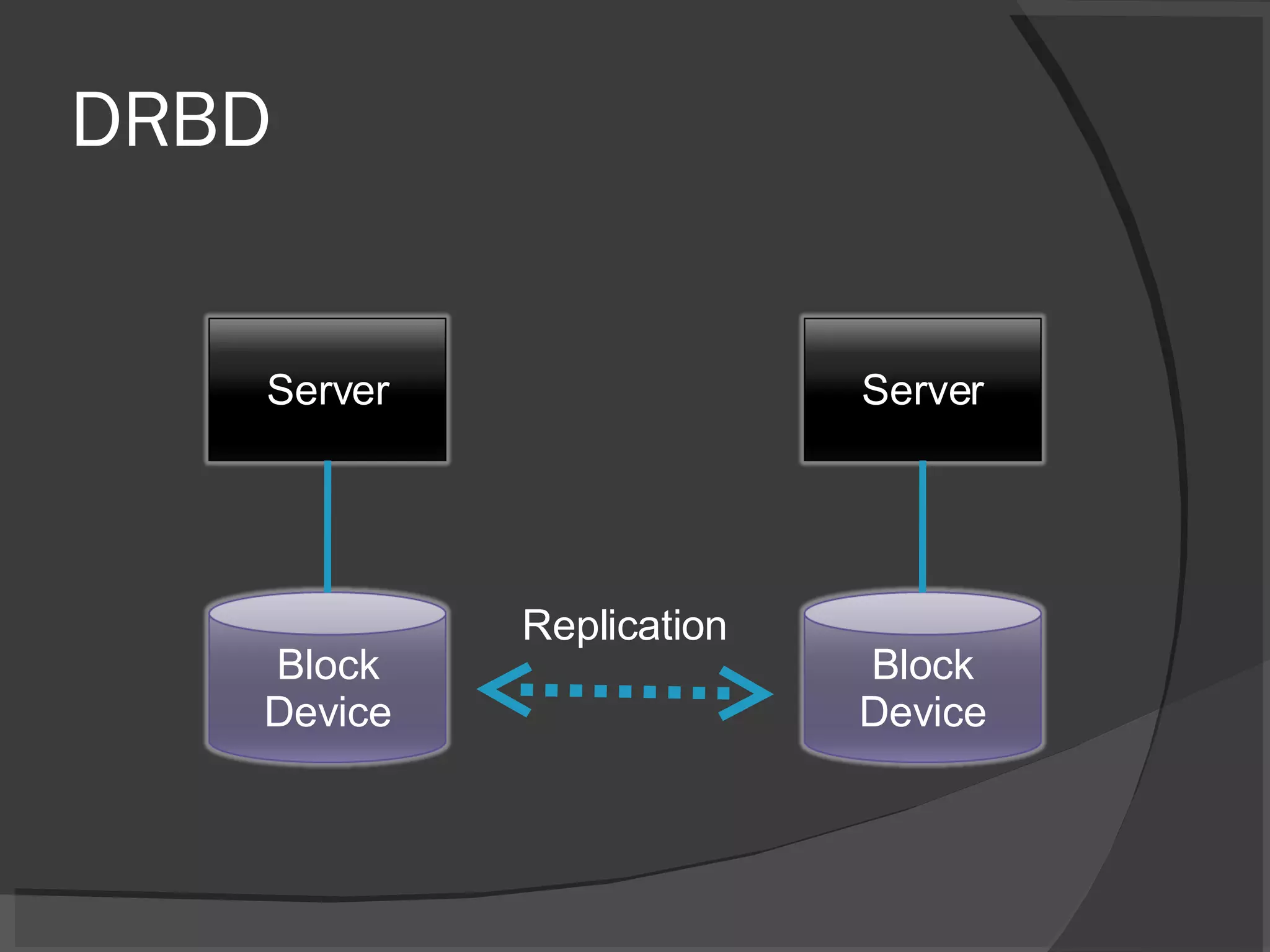

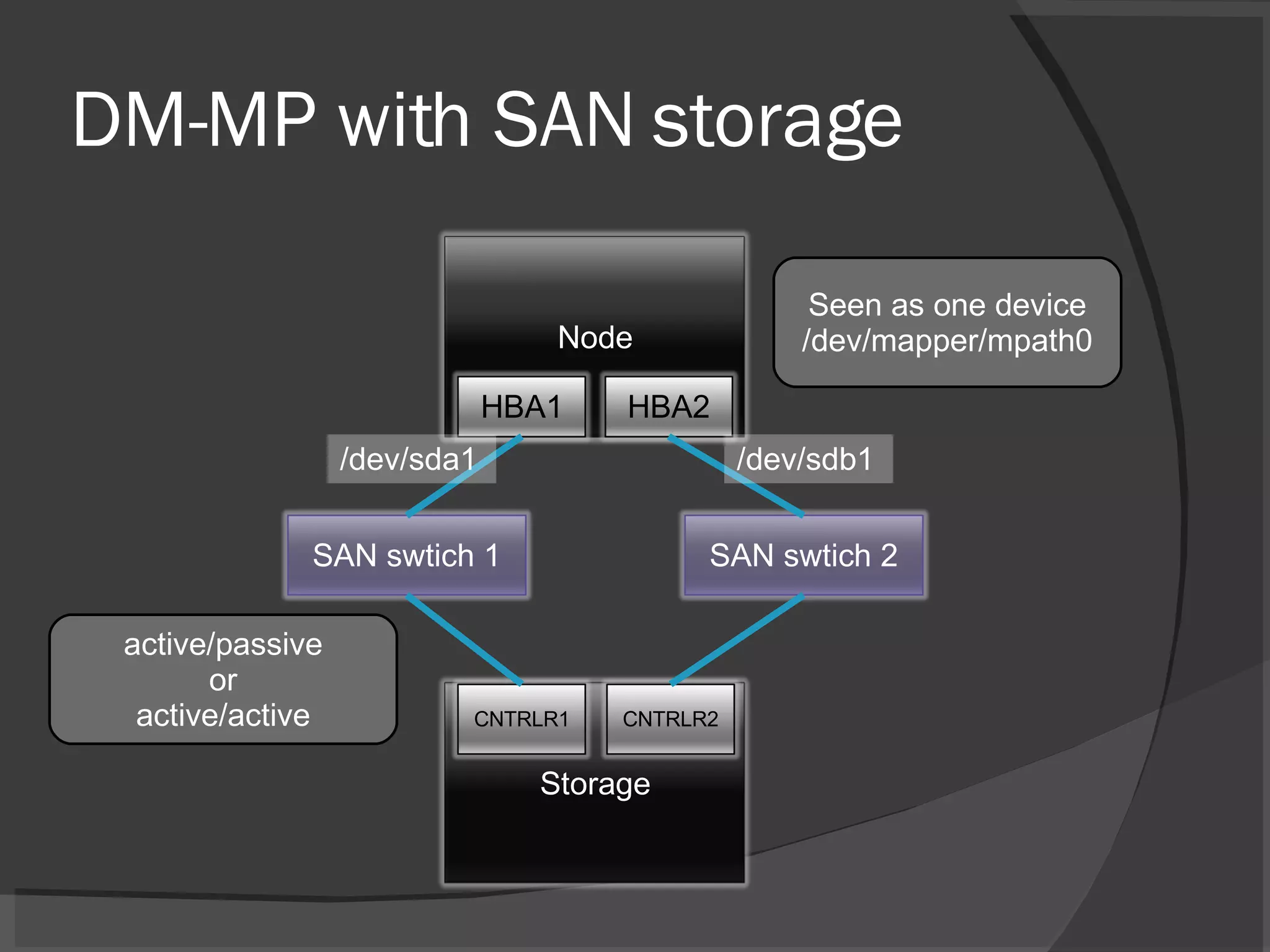

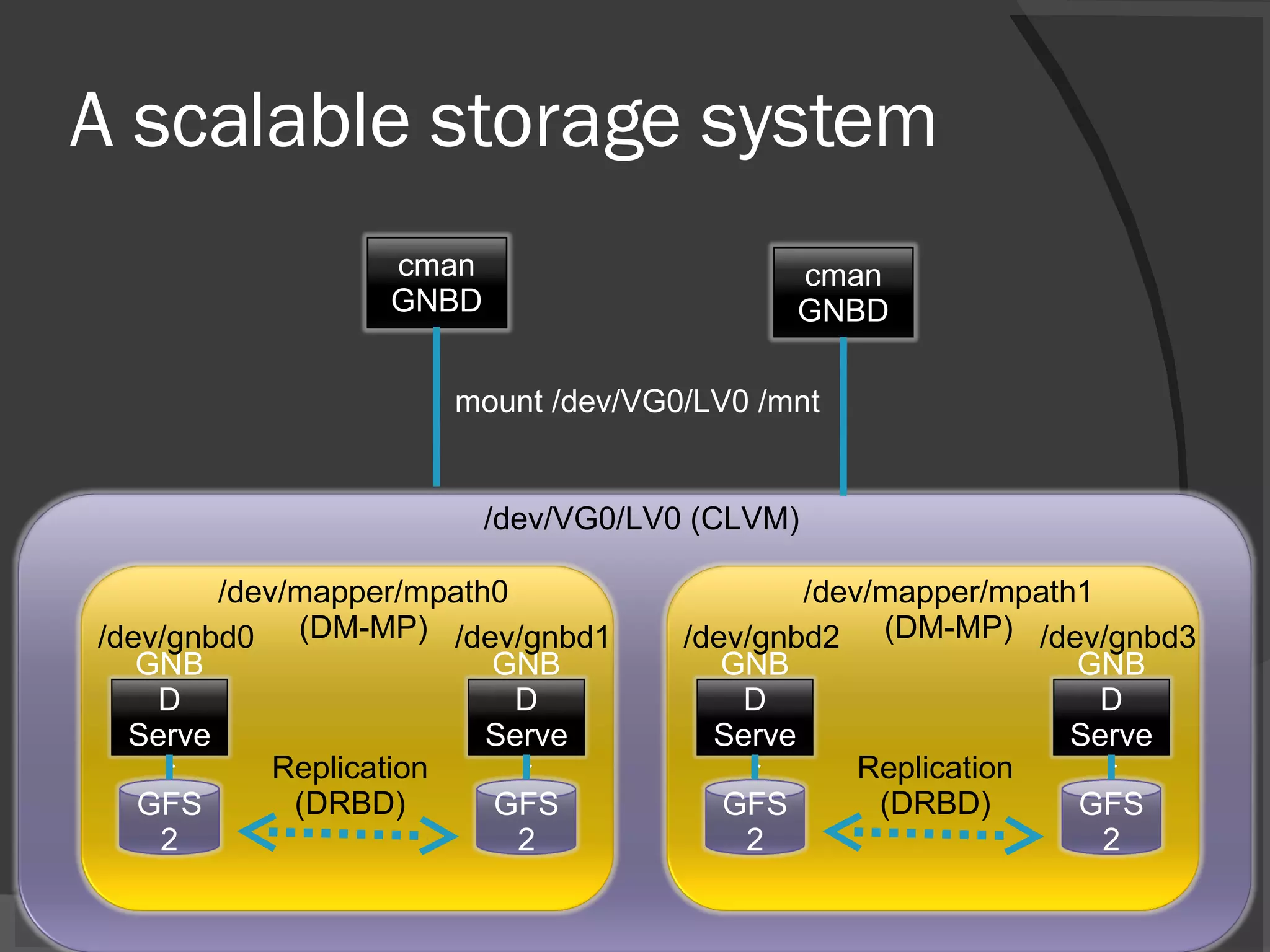

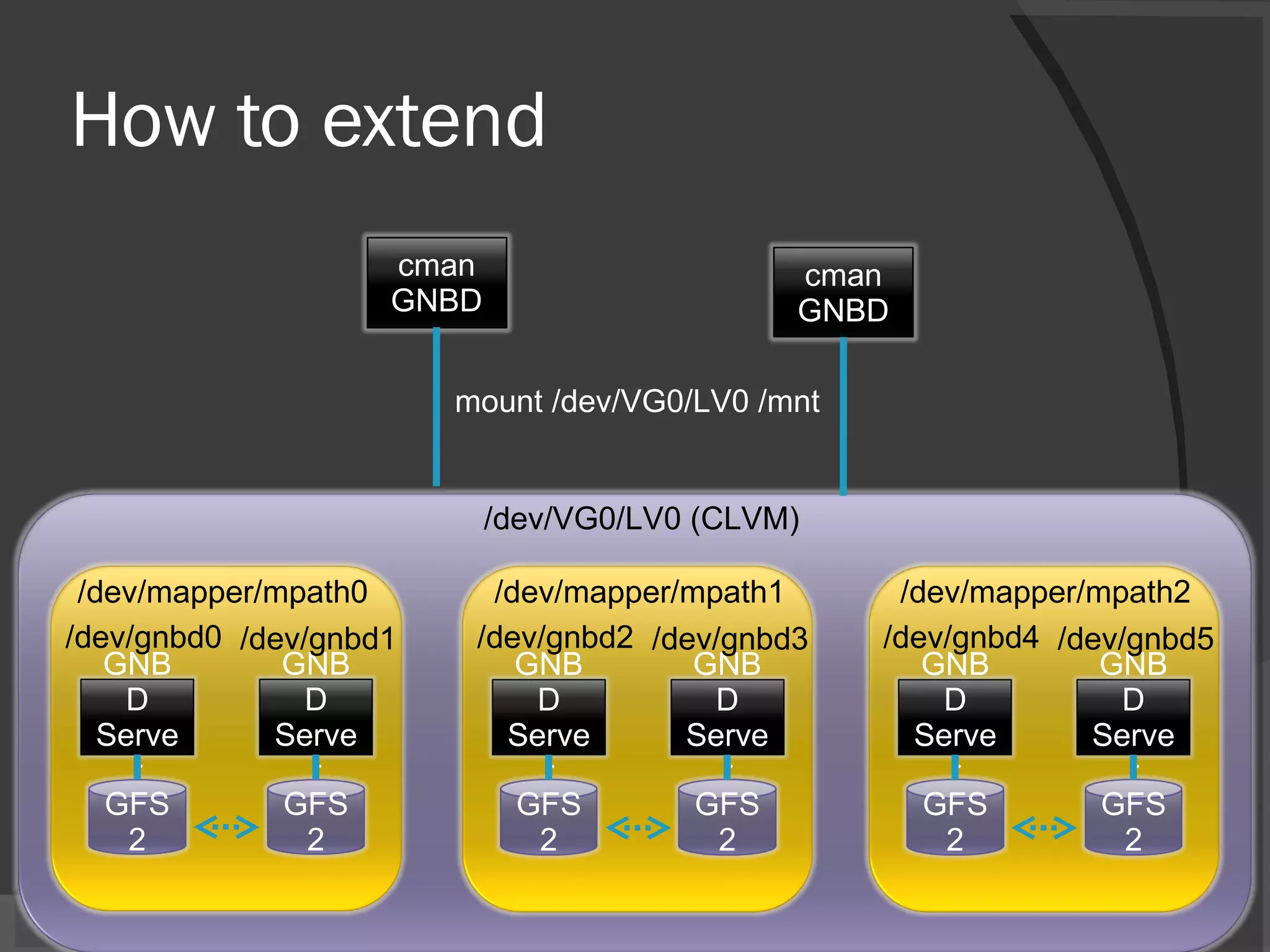

The document discusses Gosuke Miyashita's goal of building a scalable storage system for his company's web hosting service. He explores combining several open source technologies to build it: cman (cluster manager), CLVM (Clustered Logical Volume Manager), GFS2 (Global File System 2), GNBD (Global Network Block Device), DRBD (Distributed Replicated Block Device), and DM-MP (Device Mapper Multipath). The aim is a storage system that provides high availability, flexible distribution of I/O, and easy extensibility without expensive hardware. He outlines how each technology works and shows some example configurations. He also notes that integrating so many components may cause problems with complexity, overhead, performance, stability, and compatibility with Linux distributions other than Red Hat.