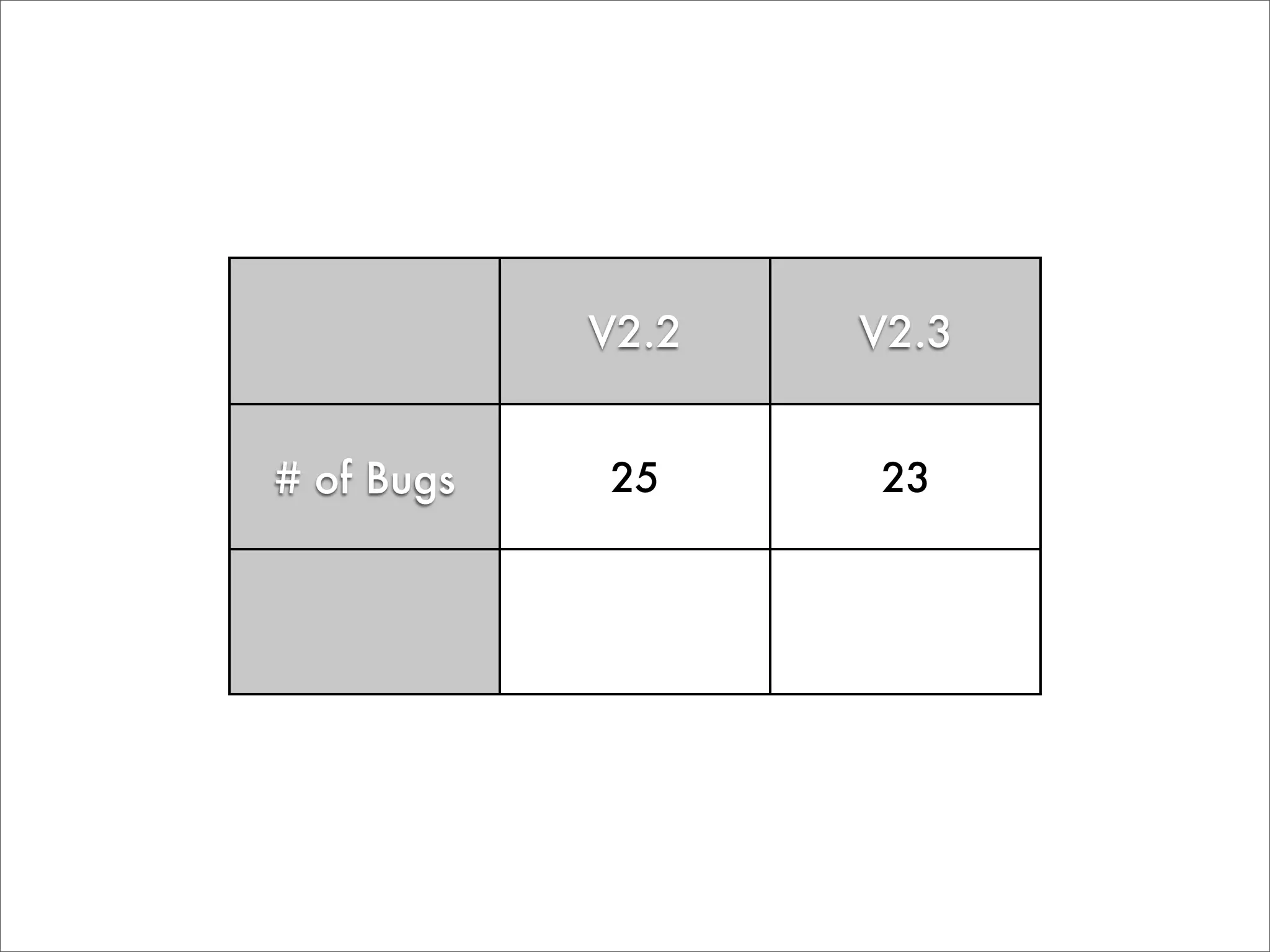

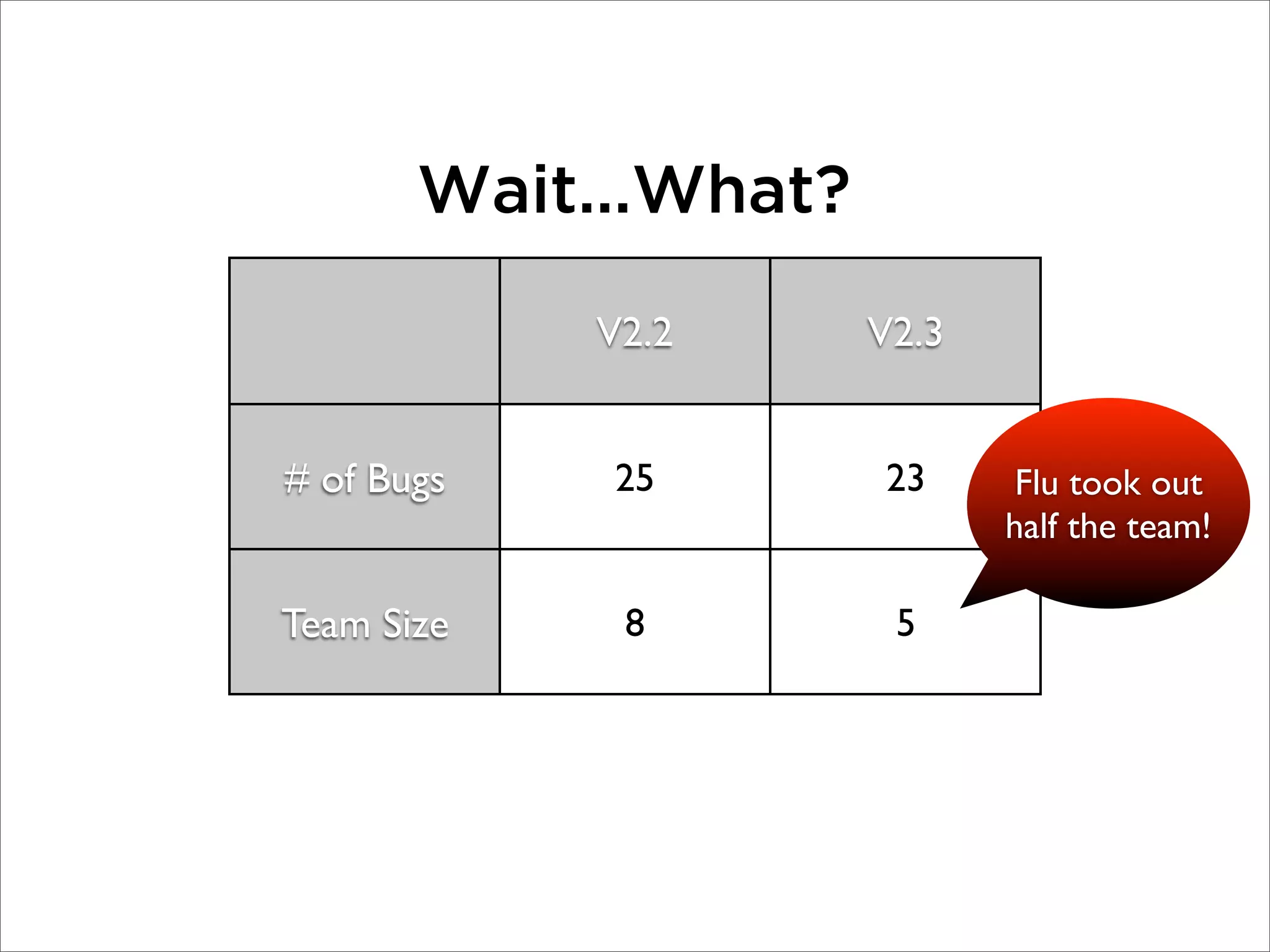

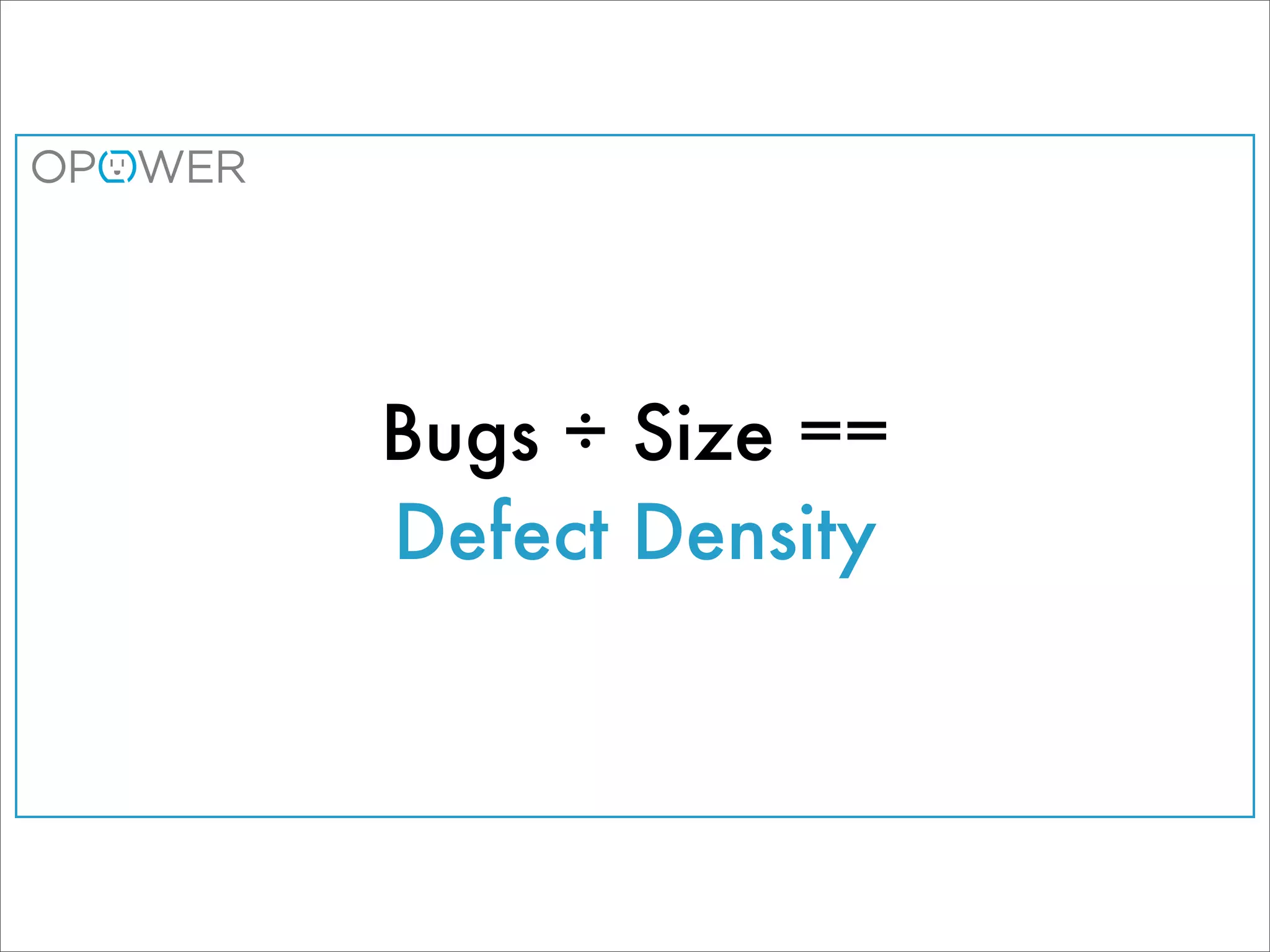

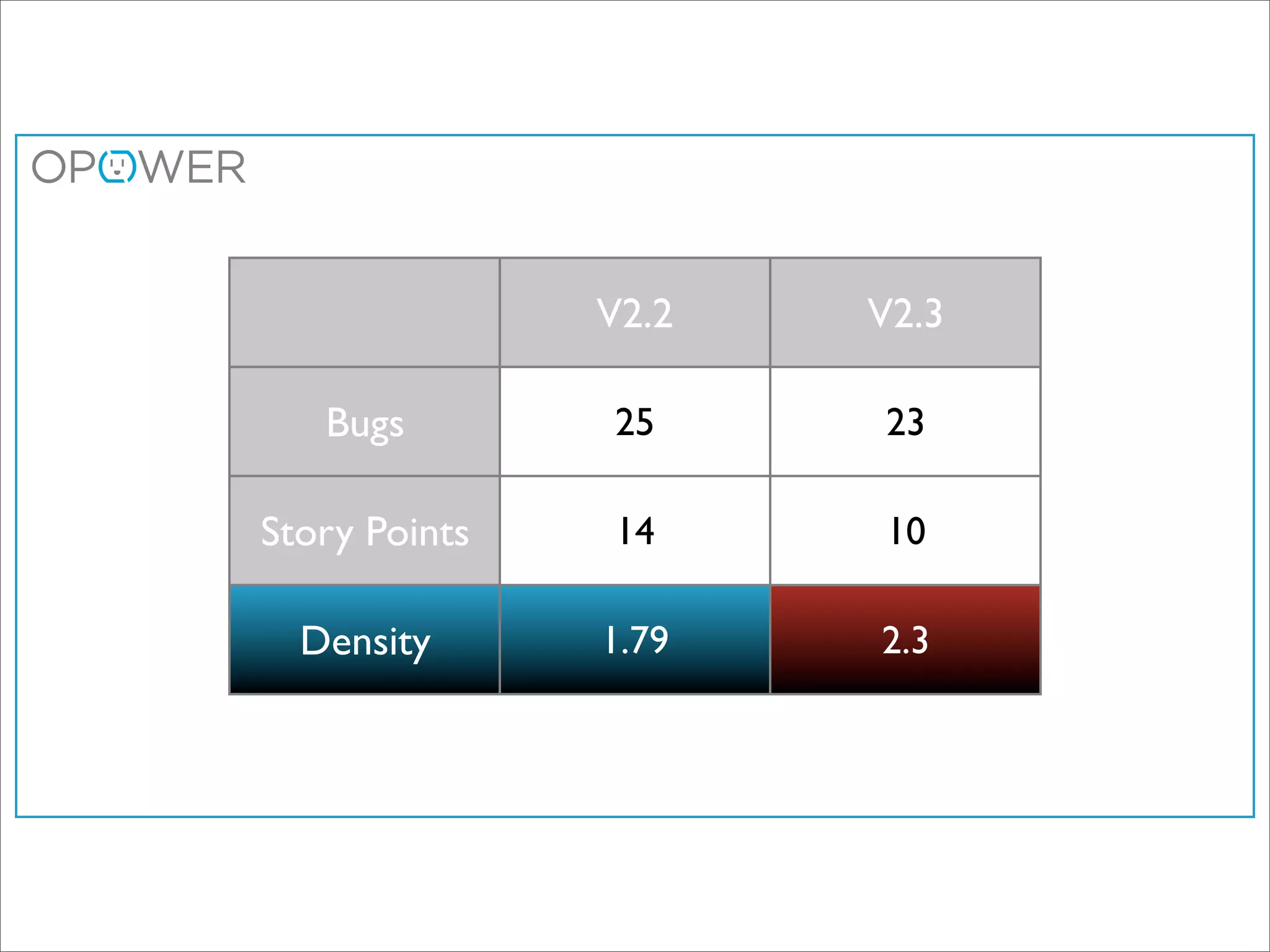

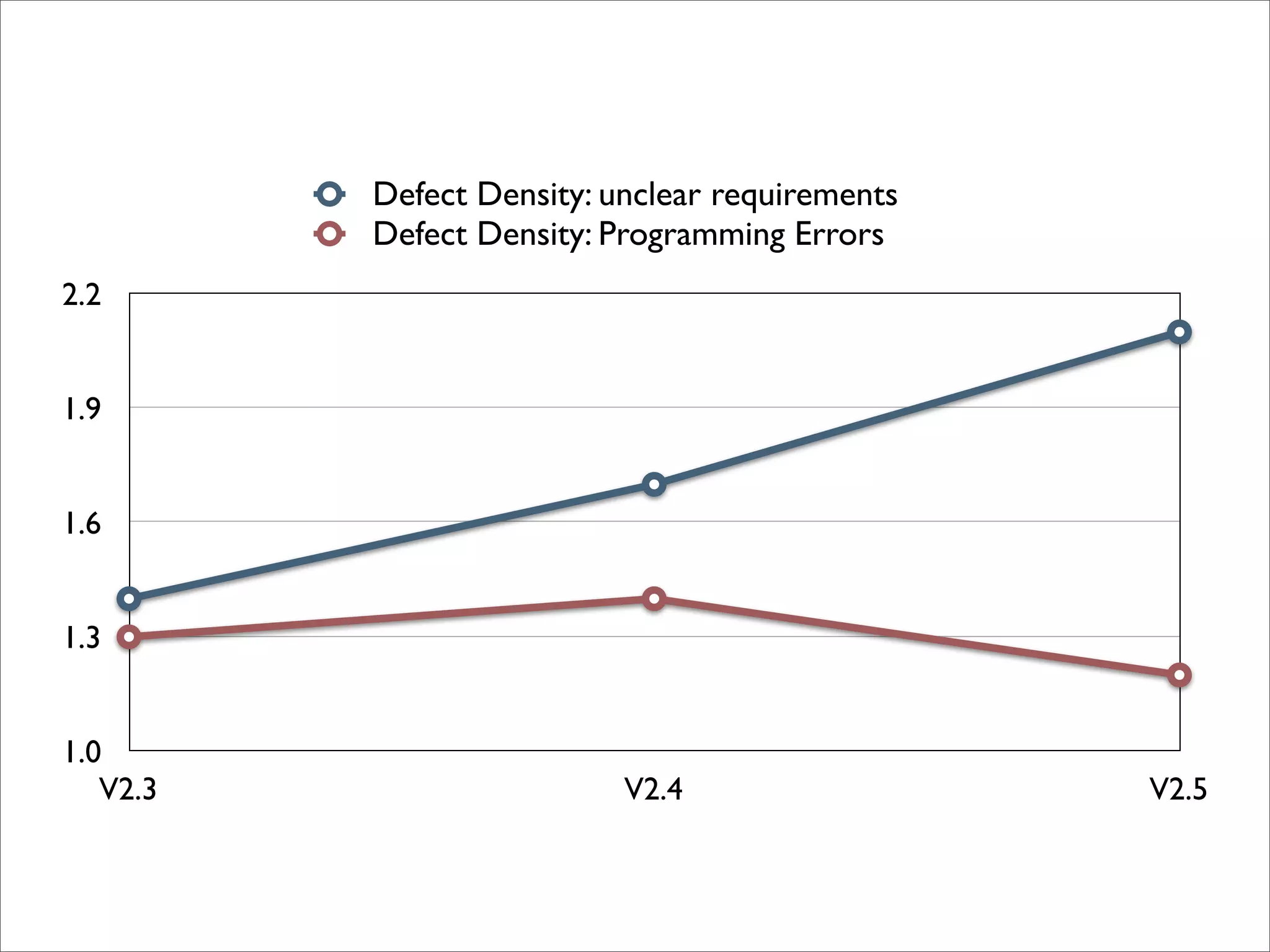

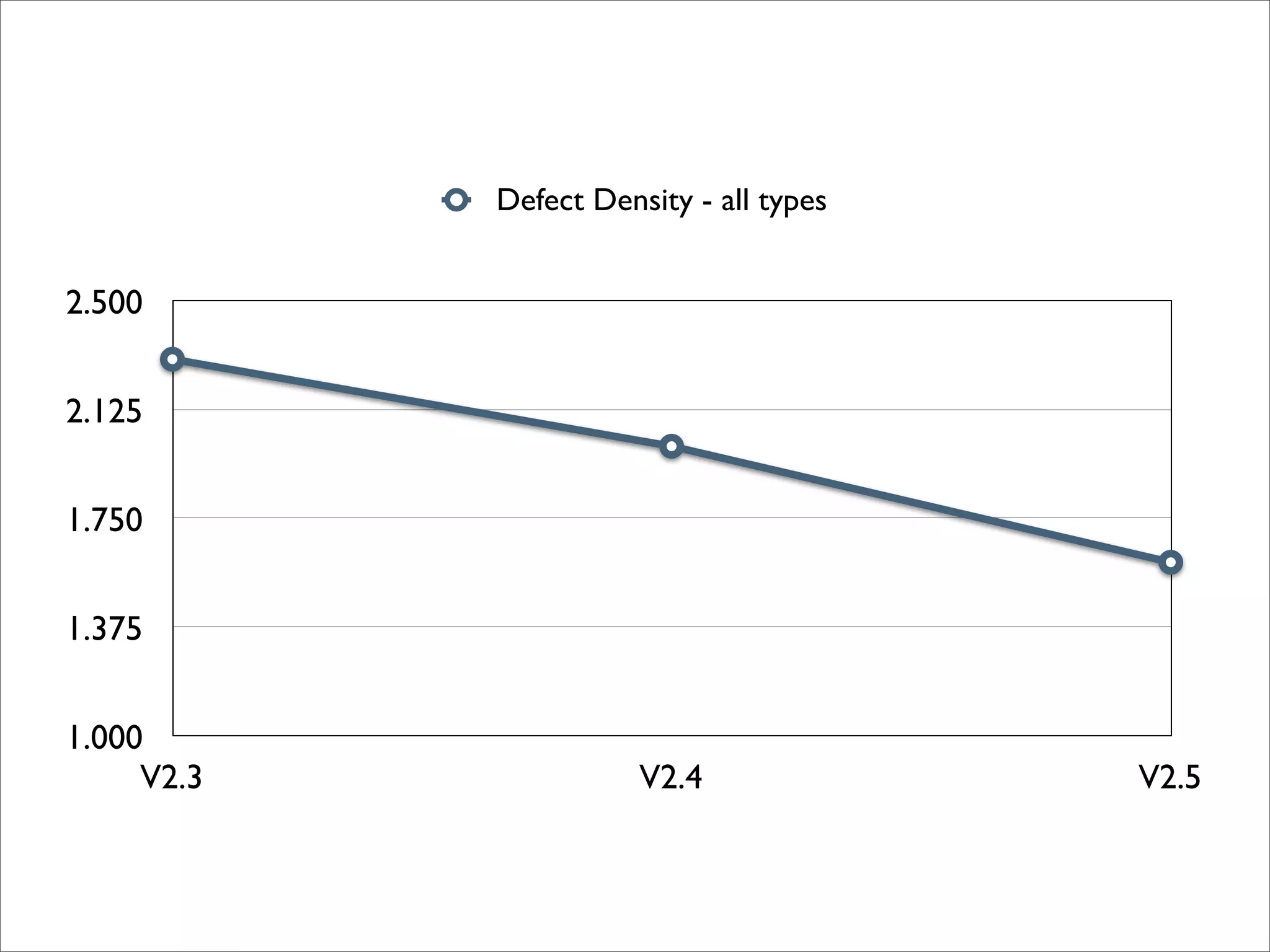

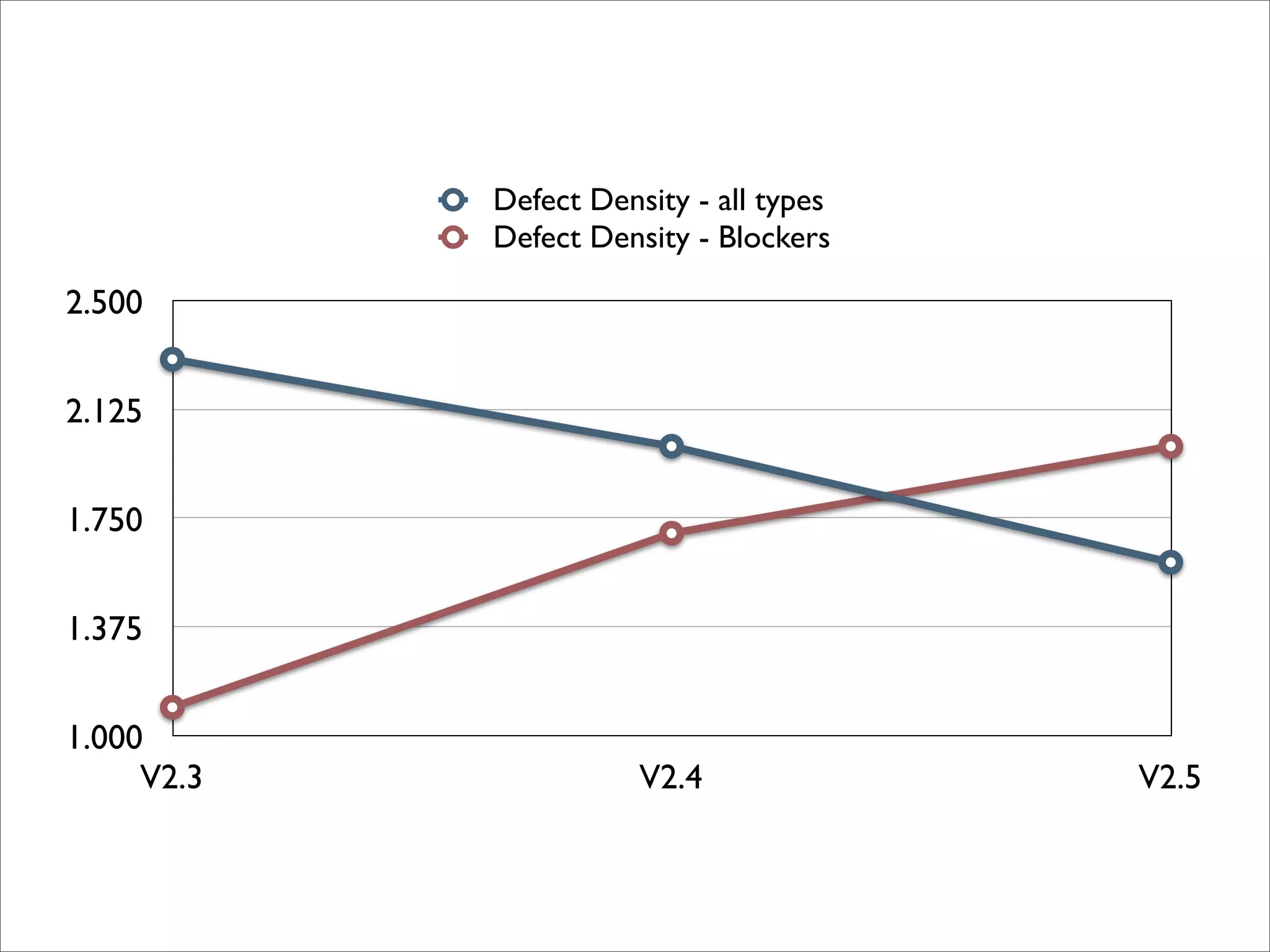

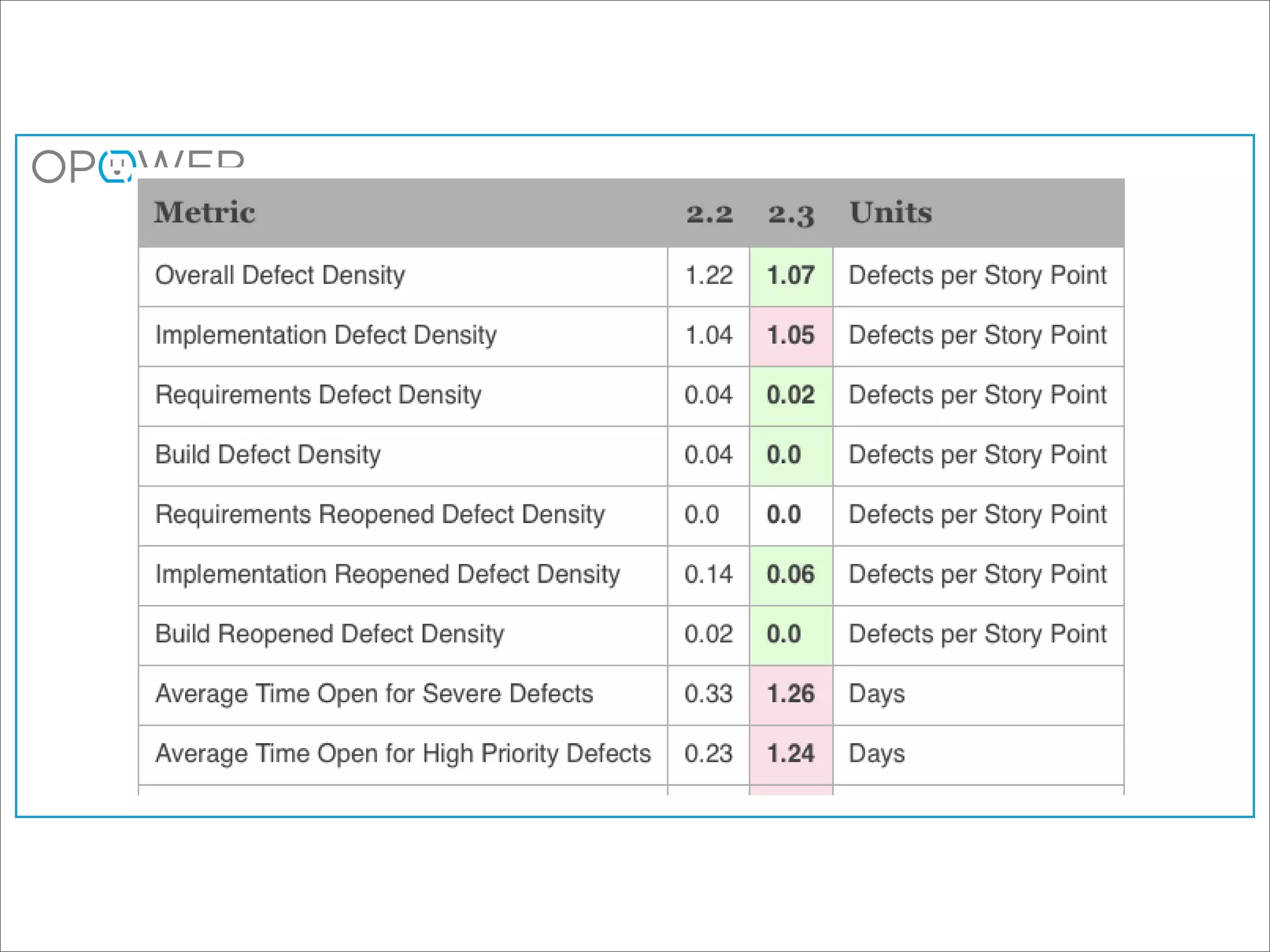

The document proposes a methodical approach to improving agile processes through metric analysis and experimentation. It highlights four steps: identifying problem areas, hypothesizing solutions, conducting experiments, and analyzing the results, applied to agile practices such as test coverage and pair programming. The author's real-world example illustrates the iterative cycle of measuring and adapting practices to reduce defects while tracking progress over time.
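The analysis step can be made concrete with a small calculation. The following is a minimal sketch, not the author's method: it assumes the tracked metric is defects per iteration, and the function name `defect_rate_change` and all numbers are hypothetical, chosen only for illustration.

```python
from statistics import mean


def defect_rate_change(baseline, experiment):
    """Return the relative change in mean defects per iteration.

    A negative result means fewer defects during the experiment
    than during the baseline period.
    """
    if not baseline or not experiment:
        raise ValueError("both periods need at least one iteration")
    before = mean(baseline)
    after = mean(experiment)
    if before == 0:
        raise ValueError("baseline mean is zero; relative change is undefined")
    return (after - before) / before


# Hypothetical defect counts per iteration (illustrative numbers only).
baseline_defects = [12, 10, 14]    # iterations before the practice change
experiment_defects = [9, 8, 7]     # iterations while the experiment ran

change = defect_rate_change(baseline_defects, experiment_defects)
print(f"Mean defects per iteration changed by {change:+.0%}")
```

A comparison this simple only shows direction and rough size. With a handful of iterations per period, the change is better treated as a signal to keep measuring than as proof that the hypothesis holds.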