This document discusses the challenges testers face in agile development environments and outlines several strategies for addressing them, including:

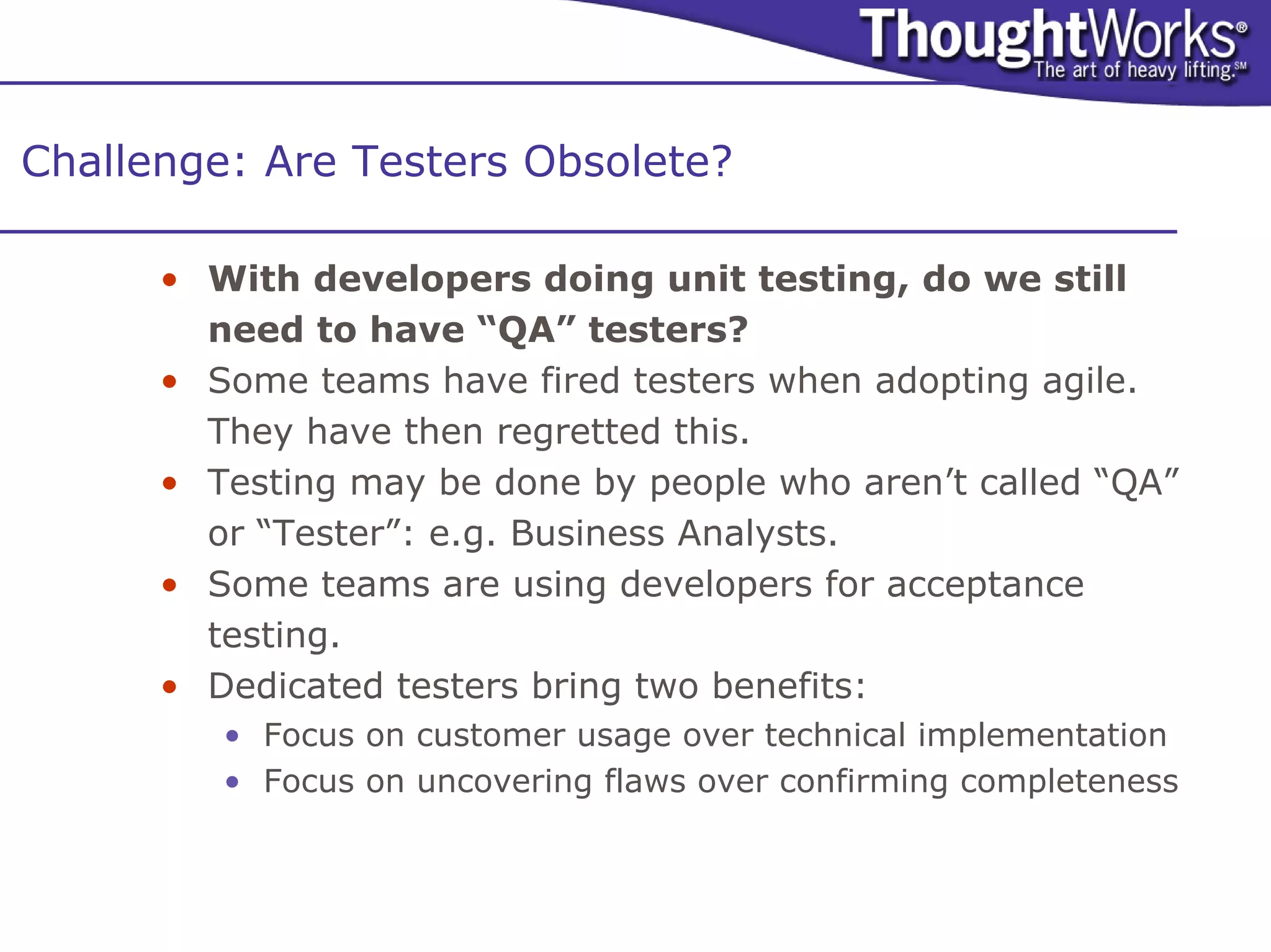

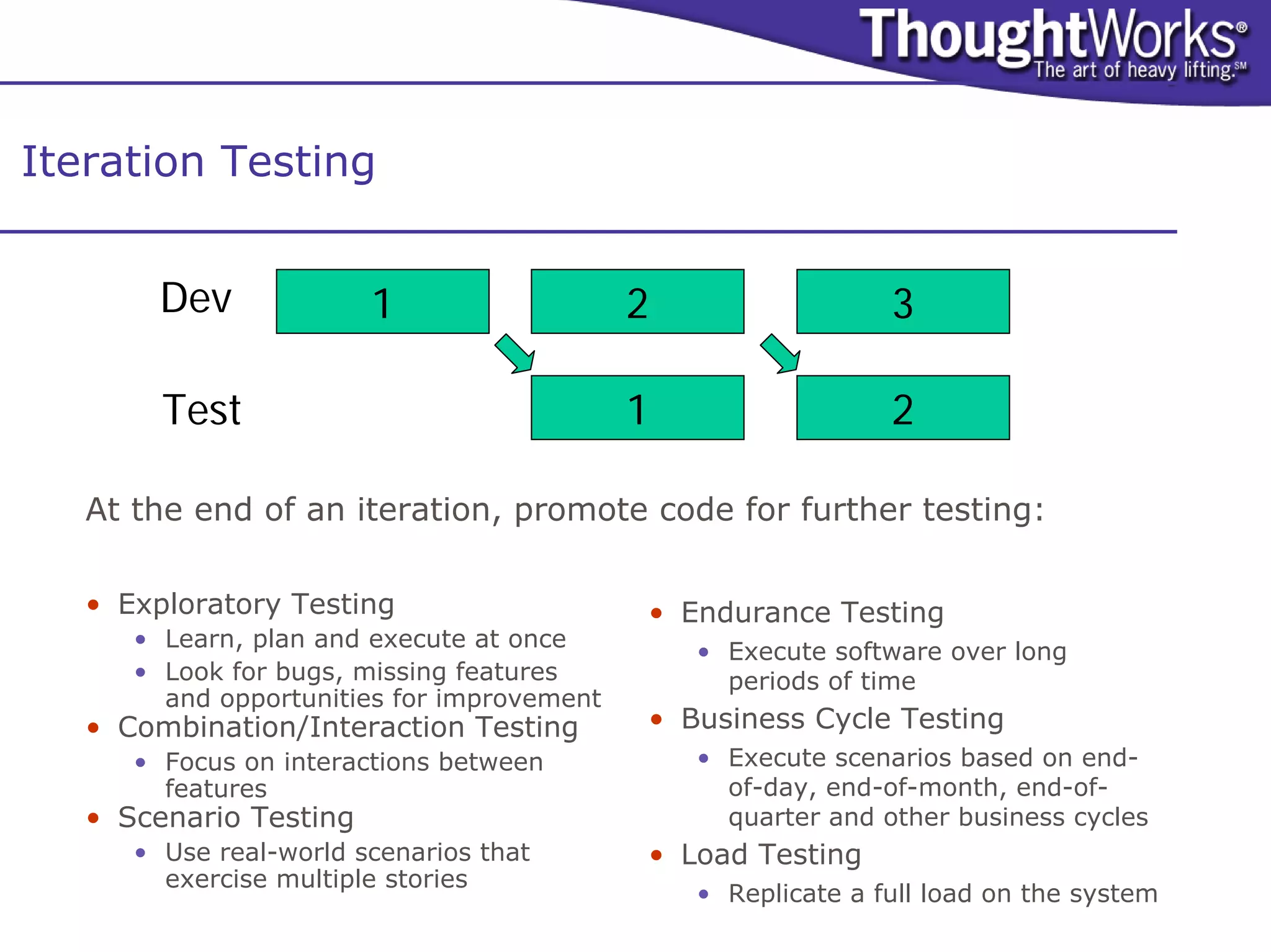

- Pairing testers with developers to facilitate exploratory and interaction testing. This helps testers understand the codebase and developers understand testing needs.

- Pairing testers with analysts to help define requirements by example, clarify expectations, and drive development of acceptance tests.

- Prioritizing testing to address the most important risks rather than attempting complete testing. A good tester is never finished, so any further testing must be justified in terms of the risk it addresses.

- Tracking bugs found when testing completed iterations, even when fixes are made quickly, so that issues can be prioritized like stories.
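
The risk-based prioritization described in the list above could be sketched roughly as follows. This is only an illustration, not a method taken from the document: the `RiskArea` class, the 1-to-5 scoring scales, the "likelihood times impact" exposure formula, and the sample areas are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskArea:
    """A candidate area for testing (hypothetical structure)."""
    name: str
    likelihood: int  # assumed scale: 1 (unlikely to fail) to 5 (very likely)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def exposure(self) -> int:
        # One common heuristic, assumed here: exposure = likelihood * impact.
        return self.likelihood * self.impact


def prioritize(areas, budget):
    """Return the `budget` highest-exposure areas, highest first."""
    ranked = sorted(areas, key=lambda a: a.exposure, reverse=True)
    return ranked[:budget]


# Made-up sample data for illustration only.
areas = [
    RiskArea("checkout payment", likelihood=4, impact=5),
    RiskArea("profile avatar upload", likelihood=3, impact=1),
    RiskArea("search filters", likelihood=2, impact=3),
]

for area in prioritize(areas, budget=2):
    print(f"{area.name}: exposure {area.exposure}")
```

With a budget of two areas per iteration, the lowest-exposure area is deferred rather than tested, which makes the trade-off explicit and easy to revisit when the risk picture changes.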