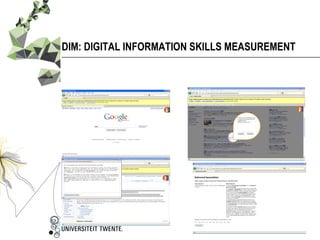

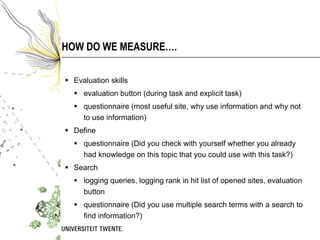

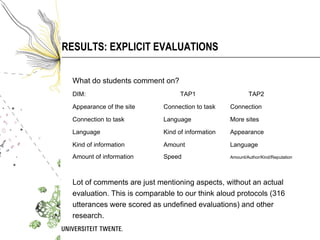

The document describes the development of DIM (Digital Information Skills Measurement), an online instrument for measuring students' information skills. DIM aims to combine multiple measurement methods in a single online tool, giving a clear picture of students' information skills throughout an entire search process on the real Internet. In an initial study, 84 students used DIM, and the results were compared with think-aloud protocols. DIM showed promise but also room for improvement, for example by capturing more context for students' evaluations during their searches. Further validation steps are outlined.

![A recent study: [W]e’ve come to use our laptops, tablets and smartphones as a ‘form of external or transactive memory, where information is stored collectively outside of ourselves. … We are becoming symbiotic with our computer tools, growing into interconnected systems that remember less by knowing information than by knowing where information can be found.’](https://image.slidesharecdn.com/ecer2011-110915152448-phpapp01/85/Ecer-2011-2-320.jpg)

![Dr. Amber Walraven, Universiteit Twente, Faculty of Behavioural Sciences (Faculteit Gedragswetenschappen), Department of Curriculum Design & Educational Innovation (Vakgroep Curriculumontwerp & Onderwijsinnovatie), [email_address], http://amberwalraven.edublogs.org, Twitter: @amberwalraven](https://image.slidesharecdn.com/ecer2011-110915152448-phpapp01/85/Ecer-2011-18-320.jpg)