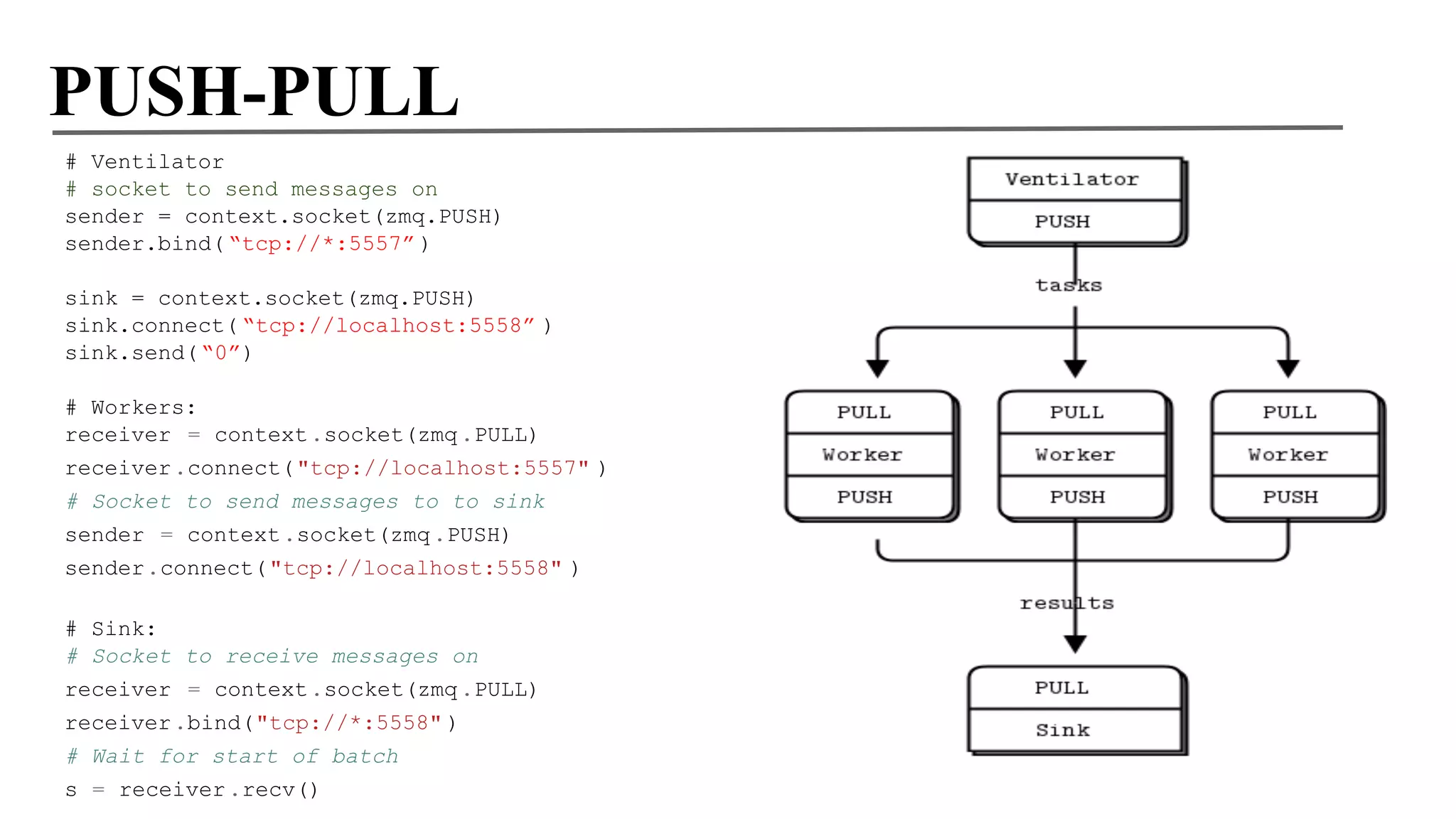

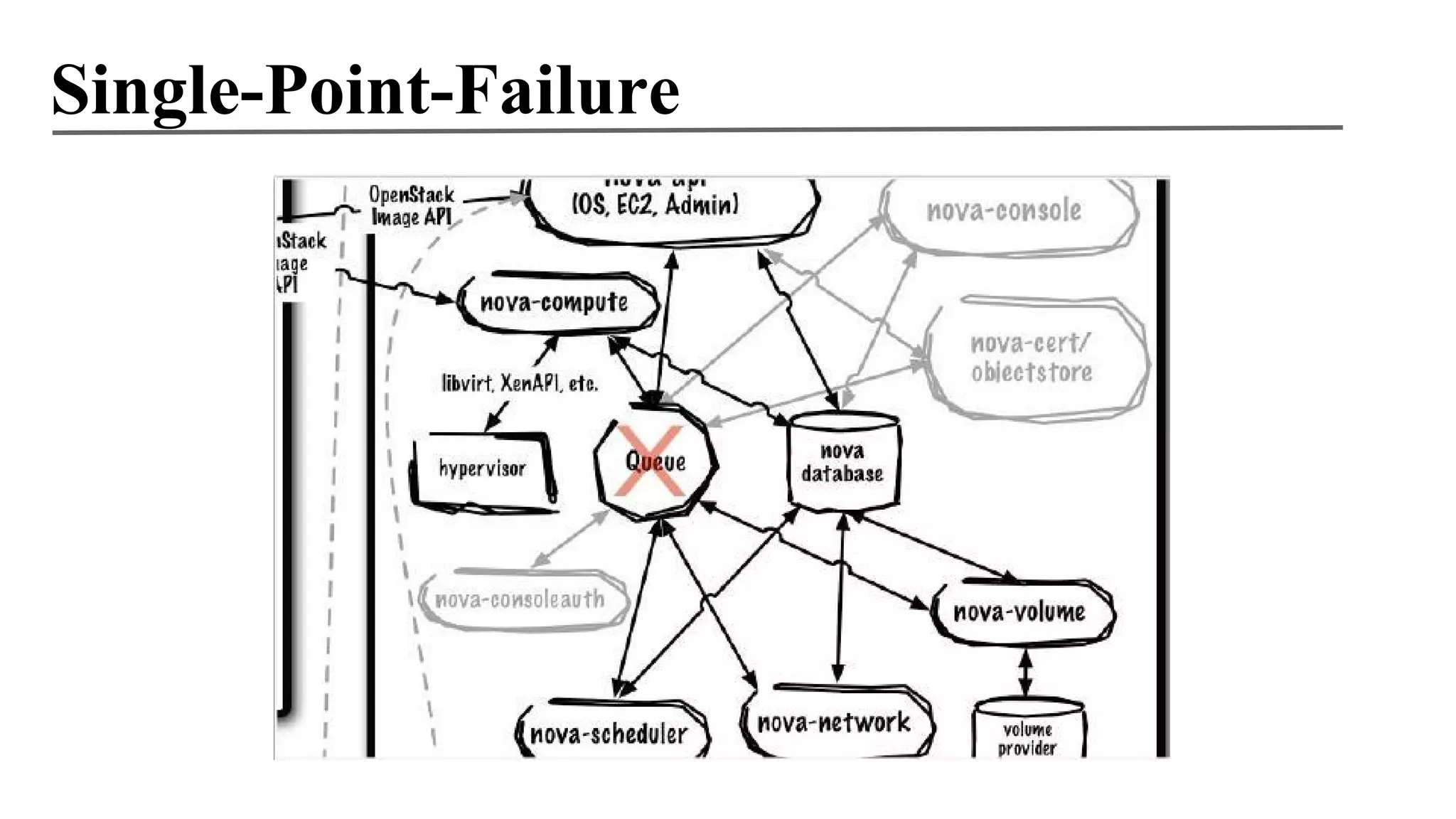

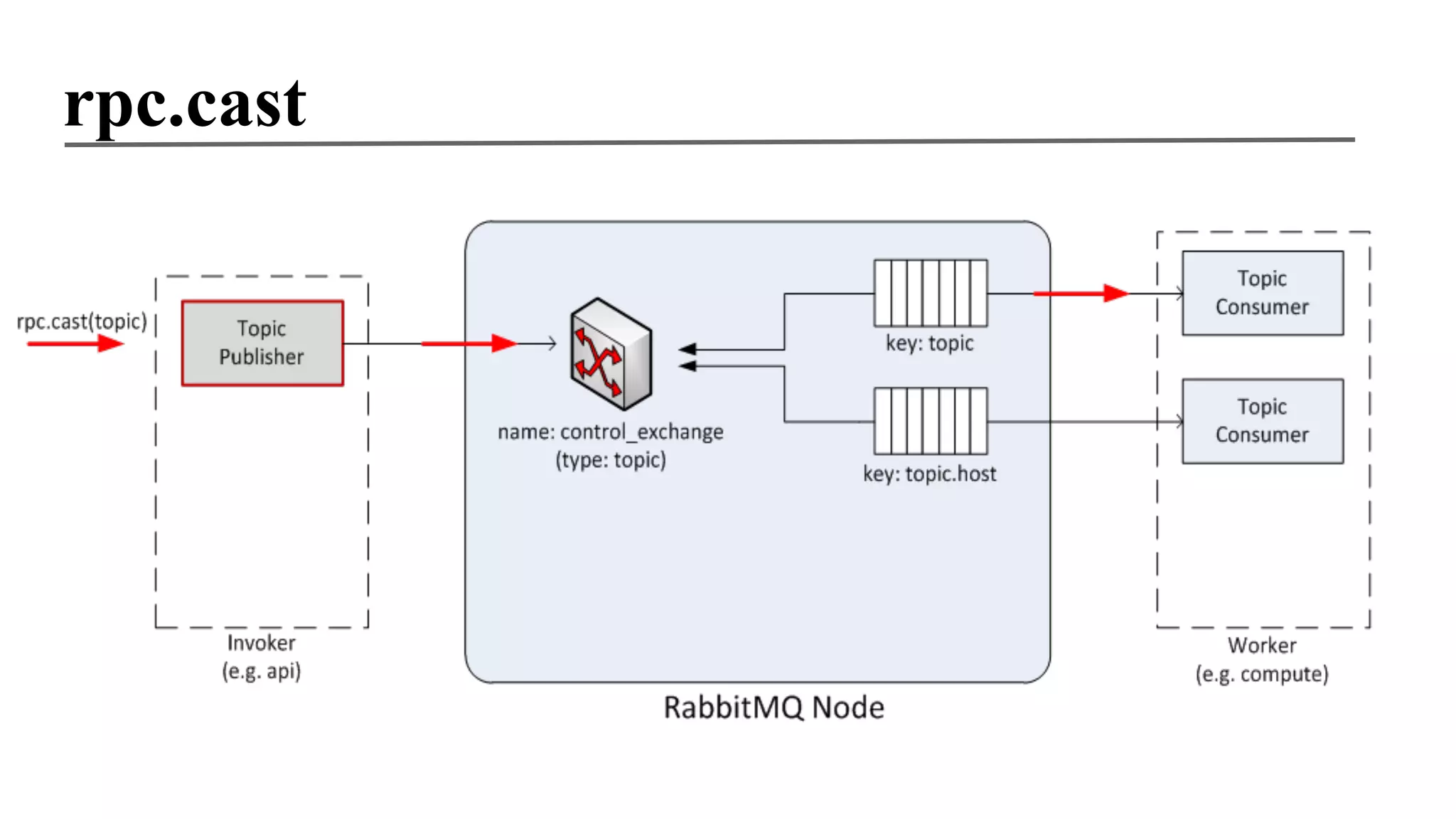

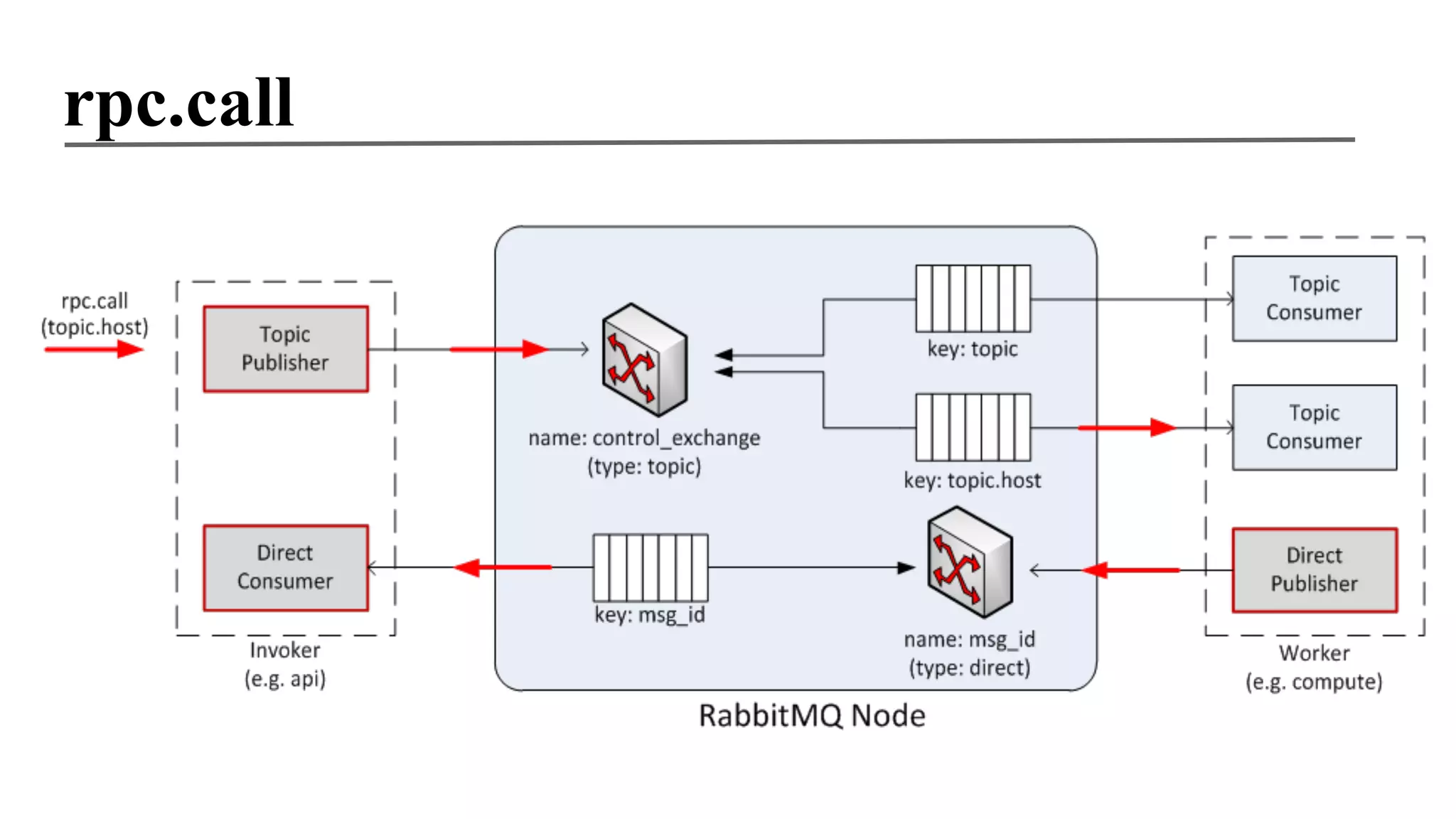

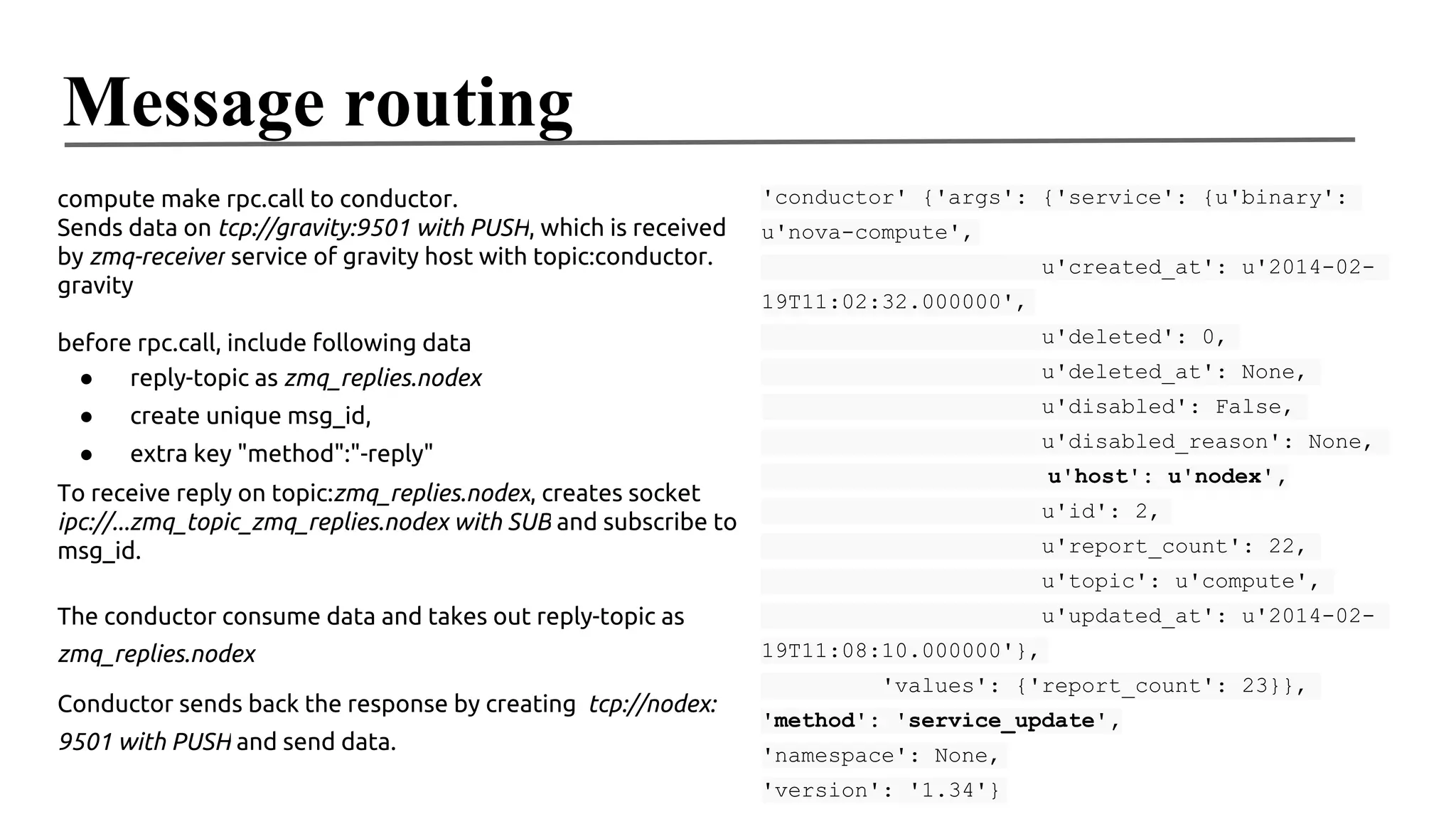

This document provides an overview of using ZeroMQ (zmq) for messaging in OpenStack, including distributed RPC implementations. It details how various socket types such as req-rep, pub-sub, and push-pull are utilized for communicating between services like nova and rpc-zmq-receiver. Additionally, it explains message routing, configuration settings, and the handling of responses in the context of OpenStack architecture.
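The overview mentions push-pull alongside req-rep and pub-sub. The following is a minimal sketch of that pattern with pyzmq, not code from OpenStack: the function name `push_pull_demo` and the `inproc://tasks` endpoint are assumptions chosen for illustration, and both sockets live in one process so the example can run on its own.

```python
import zmq


def push_pull_demo(tasks):
    """Send each task through a PUSH socket and collect it from a PULL socket."""
    context = zmq.Context()
    sender = context.socket(zmq.PUSH)
    receiver = context.socket(zmq.PULL)
    # Hypothetical in-process endpoint; separate services would use tcp:// or ipc://.
    sender.bind("inproc://tasks")
    receiver.connect("inproc://tasks")
    try:
        for task in tasks:
            sender.send_string(task)
        # PUSH-PULL is one-way: the receiver never sends anything back.
        return [receiver.recv_string() for _ in tasks]
    finally:
        sender.close(linger=0)
        receiver.close(linger=0)
        context.term()


if __name__ == "__main__":
    print(push_pull_demo(["task-1", "task-2", "task-3"]))
```

Unlike REQ-REP, the sender does not wait for a reply, which is why this pattern suits fire-and-forget work distribution.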

![REQ-REP

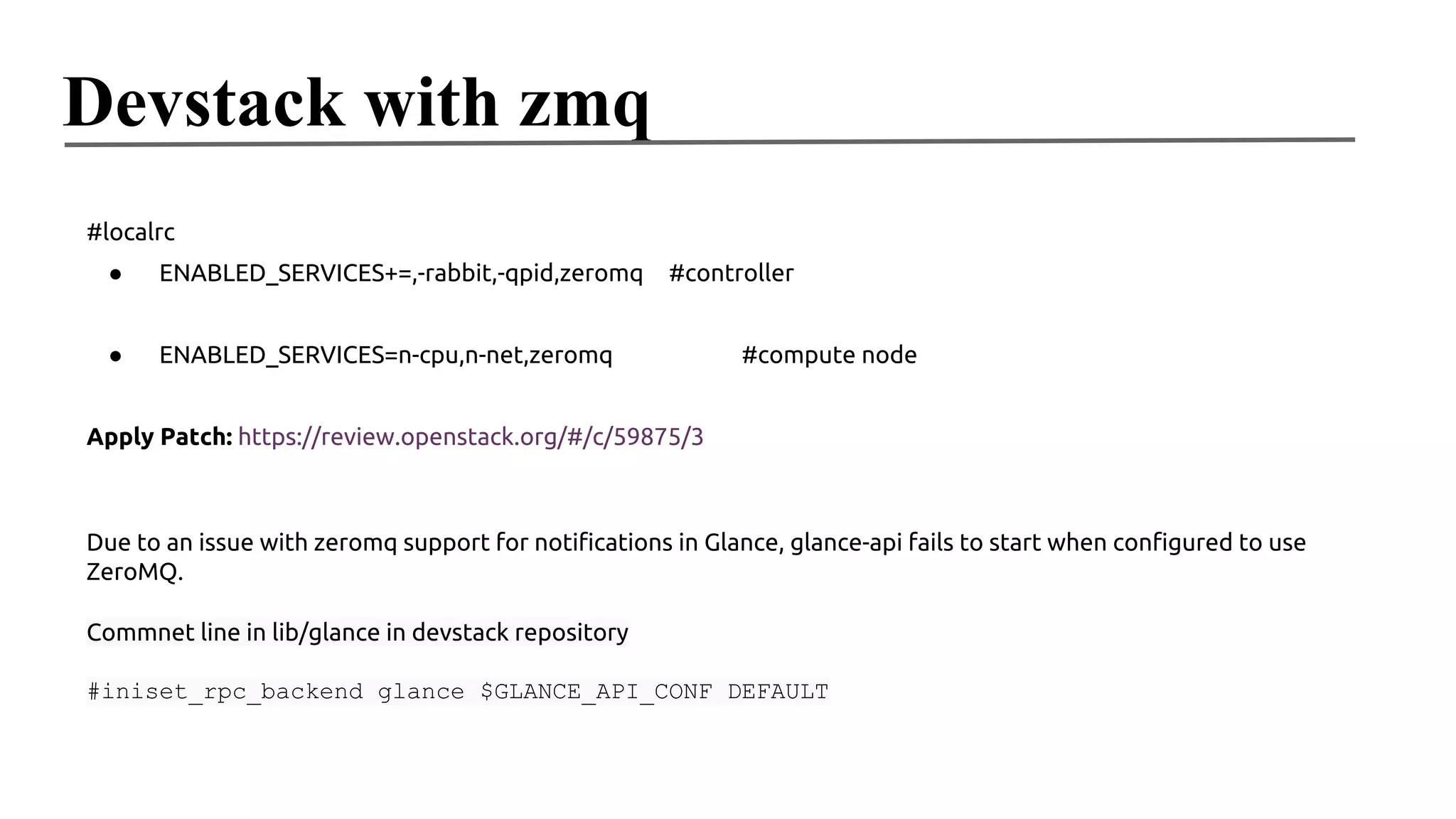

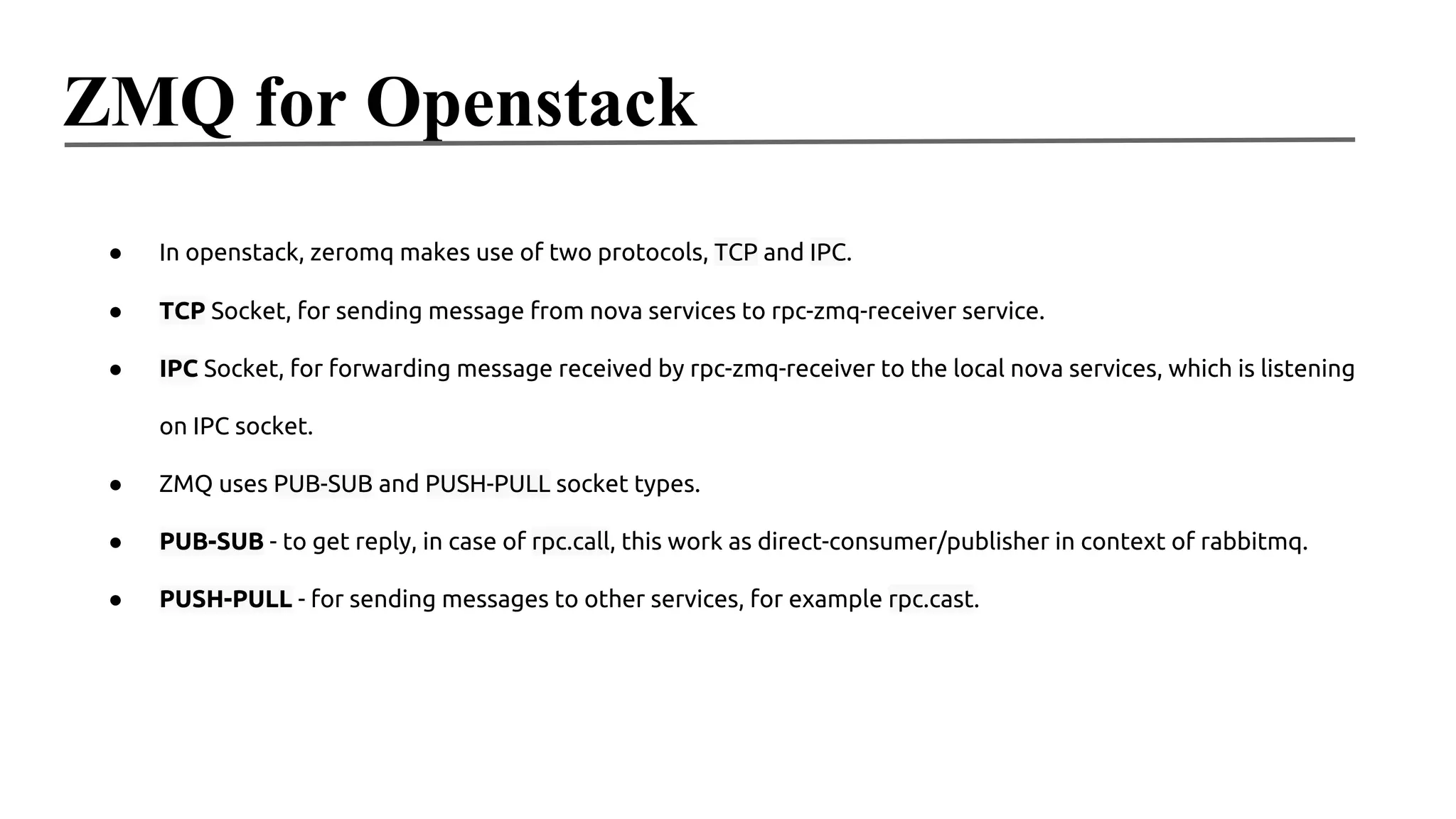

# Client

import zmq

context = zmq.Context()

print("Connecting to hello world server…")

socket = context.socket(zmq.REQ)

socket.connect("tcp://localhost:5555")

for request in range(10):

print("Sending request %s …" % request)

socket.send("Hello")

#

Get the reply.

message = socket.recv()

print("Received reply %s [ %s ]" %

(request, message))

# Server

import zmq

context = zmq.Context()

# Socket to talk to server

print("Connecting to hello world server…")

socket = context.socket(zmq.REP)

socket.bind("tcp://*:5555")

while True:

print("waiting for client request")

msg = socket.recv()

print("Message received %s “ % msg)

message = socket.send("World")](https://image.slidesharecdn.com/zmqincontextofopenstack-140306003156-phpapp01/75/Zmq-in-context-of-openstack-3-2048.jpg)

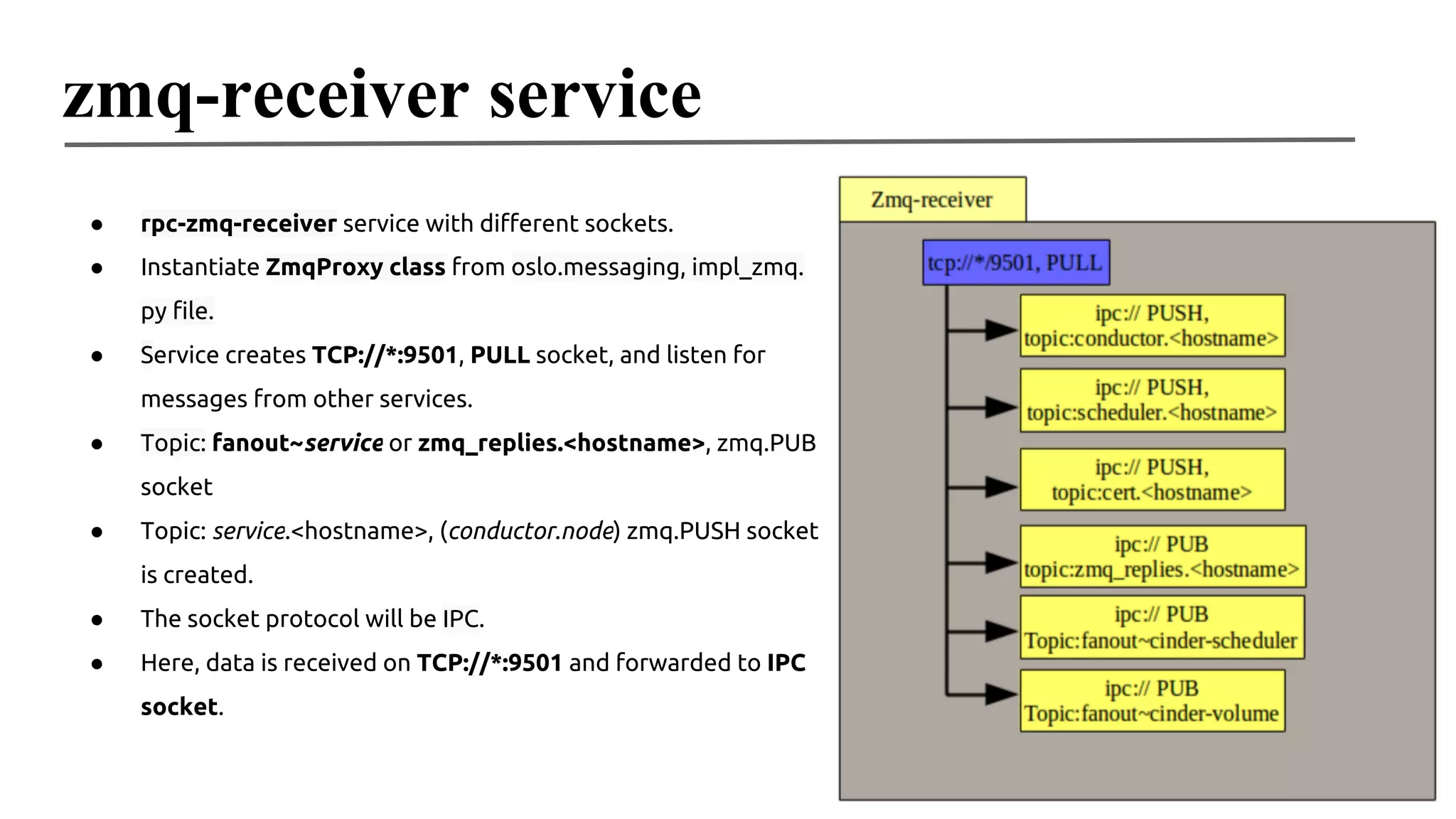

![PUB-SUB

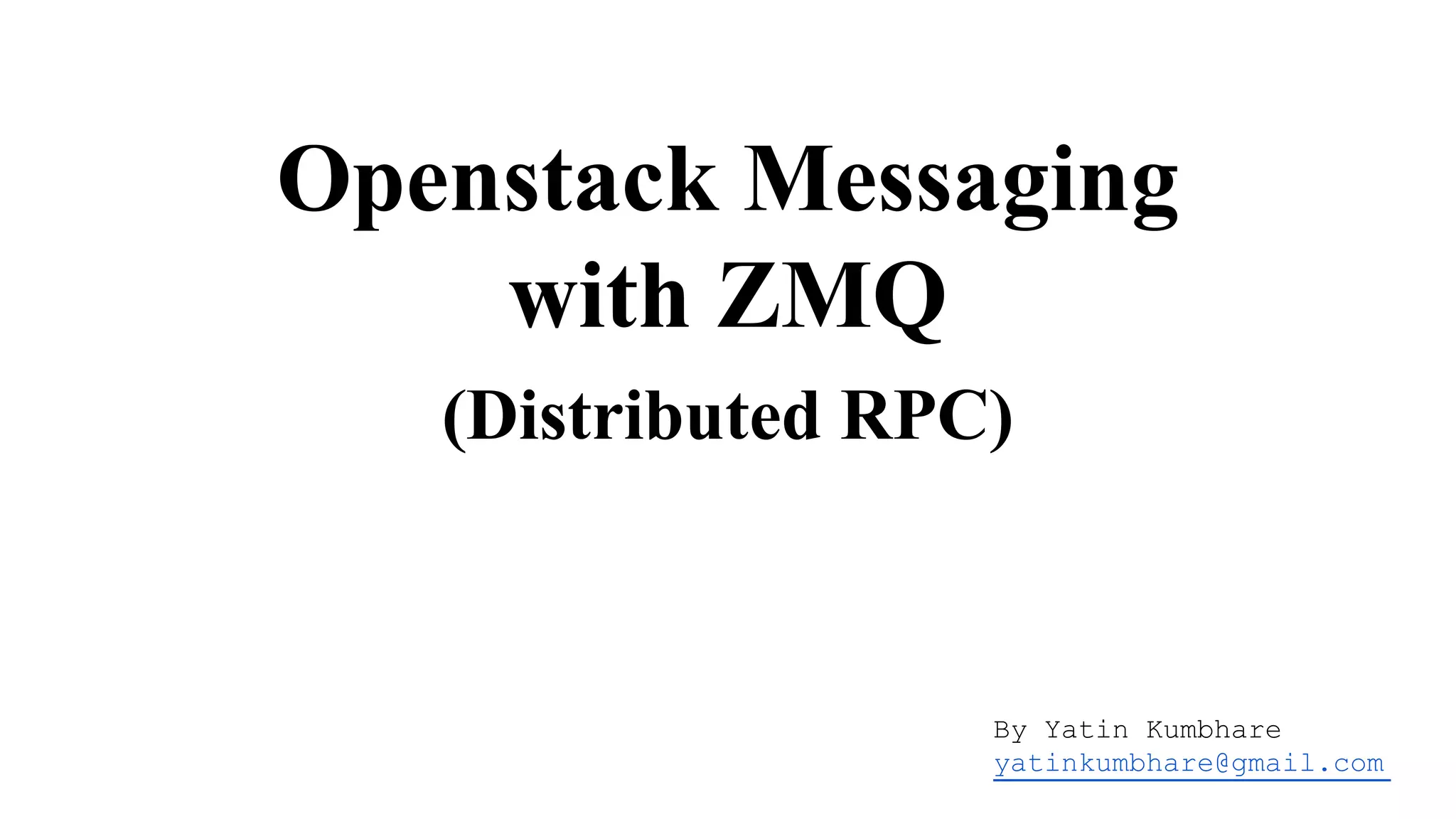

import zmq

from random import randint

context = zmq.Context()

socket = context.socket(zmq.PUB)

socket.bind("tcp://*:5556")

import zmq

import sys

context = zmq.Context()

socket = context.socket(zmq.SUB)

socket.connect("tcp://localhost:5556")

while True:

zipcode = randint(1, 10)

temperature = randint(-20, 50)

zip_filter = sys.argv[1] if len(sys.argv) > 1

else “”

humidity = randint(10, 15)

print “Publish data:”,(zipcode,

temperature, humidity)

socket.send("%d %d %d" % (zipcode,

temperature, relhumidity))

print “Collectin updates from weather service

%s” % zip_filter

socket.setsockopt(zmq.SUBSCRIBE, zip_filter)

#process 5 records

for record in range(5):

data = socket.recv()

print data](https://image.slidesharecdn.com/zmqincontextofopenstack-140306003156-phpapp01/75/Zmq-in-context-of-openstack-4-2048.jpg)
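A SUB socket filters by prefix: a message is delivered when its leading bytes equal the subscription. The sketch below imitates that rule in plain Python so the effect of different zip code filters can be checked without a running publisher. The helper name `sub_matches` and the sample messages are assumptions for illustration only. The messages follow the "zipcode temperature humidity" layout sent by the publisher.

```python
def sub_matches(subscription: bytes, message: bytes) -> bool:
    """Imitate ZeroMQ SUB filtering: deliver when the message starts with the subscription."""
    return message.startswith(subscription)


# Sample messages in "zipcode temperature humidity" form (made-up values).
messages = [b"1 20 12", b"10 -5 11", b"2 30 14"]

# Filter "1" is a prefix of both zip code 1 and zip code 10.
loose = [m for m in messages if sub_matches(b"1", m)]

# Adding the separator to the filter restricts delivery to zip code 1.
strict = [m for m in messages if sub_matches(b"1 ", m)]

# An empty subscription matches every message.
everything = [m for m in messages if sub_matches(b"", m)]

if __name__ == "__main__":
    print(loose)
    print(strict)
    print(everything)
```

Because the publisher emits zip codes from 1 to 10, a subscriber started with the argument `1` would also receive records for zip code 10. Including the trailing space in the filter is one way to avoid that.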

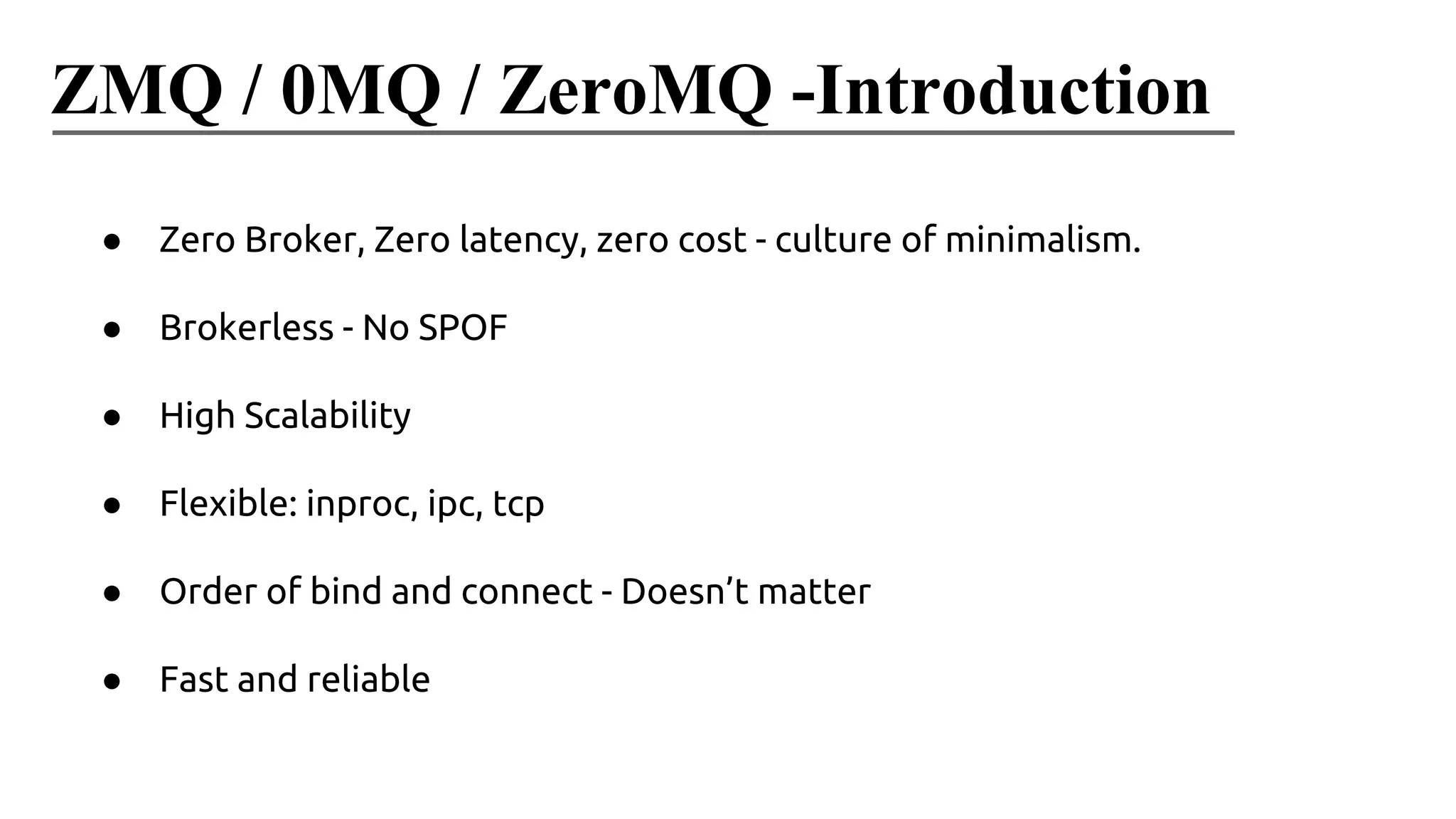

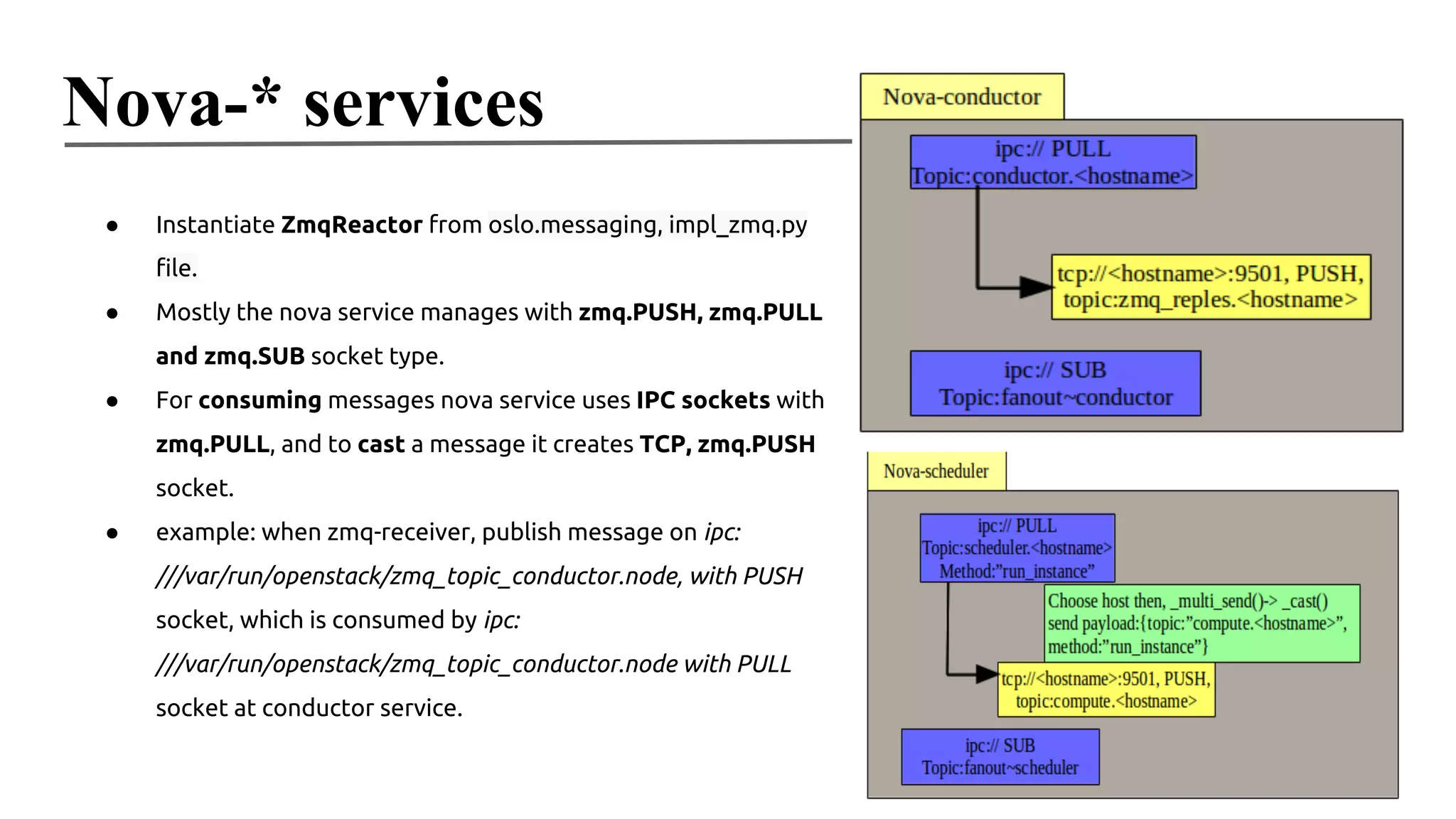

![MatchMakerRing file](https://image.slidesharecdn.com/zmqincontextofopenstack-140306003156-phpapp01/75/Zmq-in-context-of-openstack-14-2048.jpg)

    {
        "conductor": ["controller"],
        "scheduler": ["controller"],
        "compute": ["controller", "computenode"],
        "network": ["controller", "computenode"],
        "zmq_replies": ["controller", "computenode"],
        "cert": ["controller"],
        "cinder-scheduler": ["controller"],
        "cinder-volume": ["controller"],
        "consoleauth": ["controller"]
    }
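The ring file maps each topic to the hosts that serve it. The sketch below shows one way such a mapping could be resolved into per-host queues. It is a simplified illustration, not the OpenStack matchmaker code: the class `RingSketch`, its `queues` method, and the `fanout~` key convention used here are assumptions, and only two topics from the file are included.

```python
import itertools
import json

# Two entries taken from the ring file above.
RING_JSON = """
{
    "scheduler": ["controller"],
    "compute": ["controller", "computenode"]
}
"""


class RingSketch:
    """Hypothetical, simplified resolver for a topic-to-hosts ring file."""

    def __init__(self, ring):
        self.ring = ring
        # One endless round-robin iterator per topic.
        self._cycles = {topic: itertools.cycle(hosts) for topic, hosts in ring.items()}

    def queues(self, key):
        """Return (queue_name, host) pairs for a bare, direct, or fanout key."""
        if key.startswith("fanout~"):
            # Fanout: every host registered for the topic gets the message.
            topic = key[len("fanout~"):]
            return [("%s.%s" % (key, host), host) for host in self.ring[topic]]
        if "." in key:
            # Direct: "topic.host" already names its destination.
            _topic, host = key.split(".", 1)
            return [(key, host)]
        # Bare topic: pick the next host in round-robin order.
        host = next(self._cycles[key])
        return [("%s.%s" % (key, host), host)]


ring = RingSketch(json.loads(RING_JSON))

if __name__ == "__main__":
    print(ring.queues("compute.computenode"))
    print(ring.queues("fanout~compute"))
```

With this layout, adding a compute node only requires appending its hostname to the relevant topic lists in the ring file on each host.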

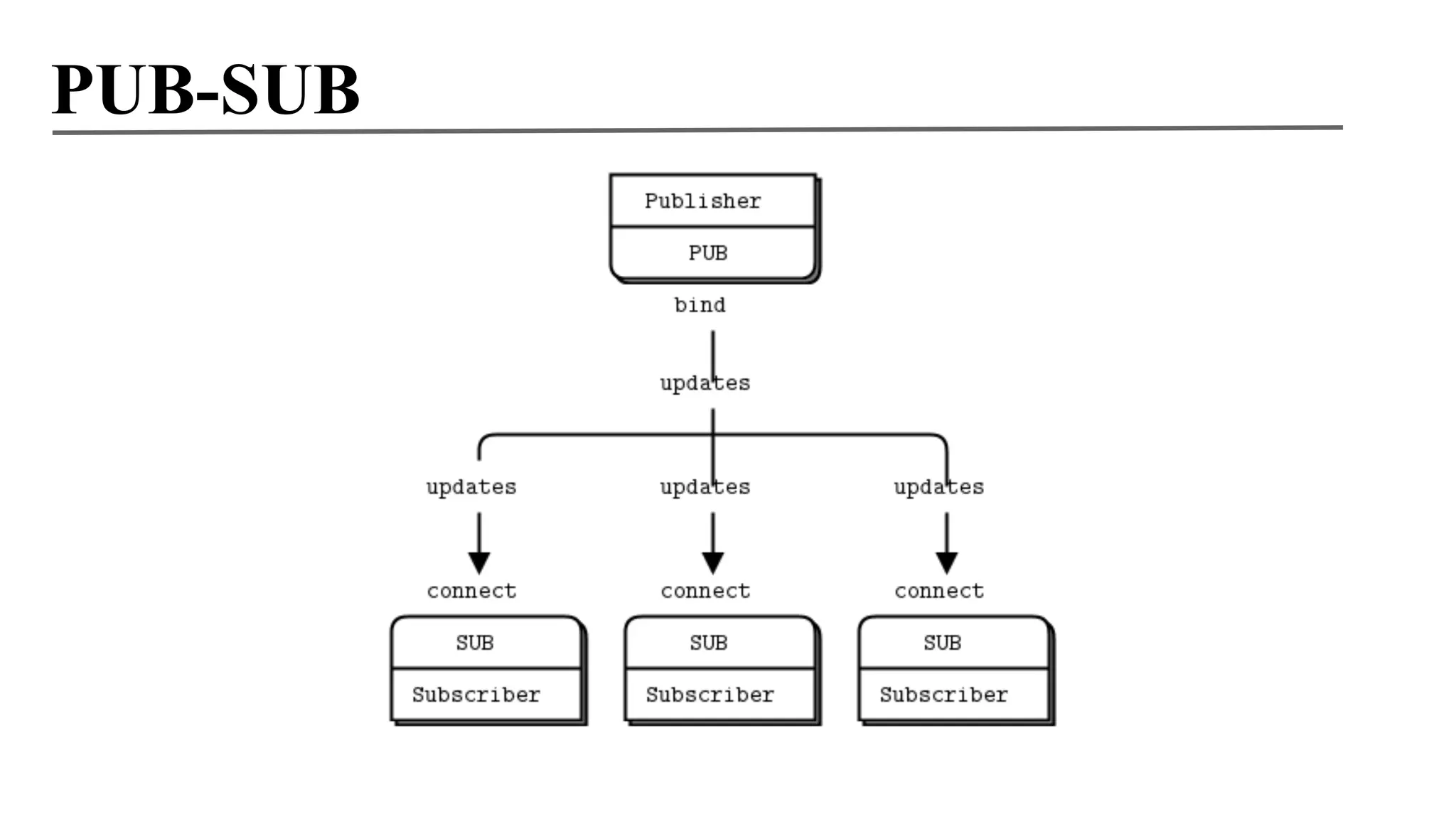

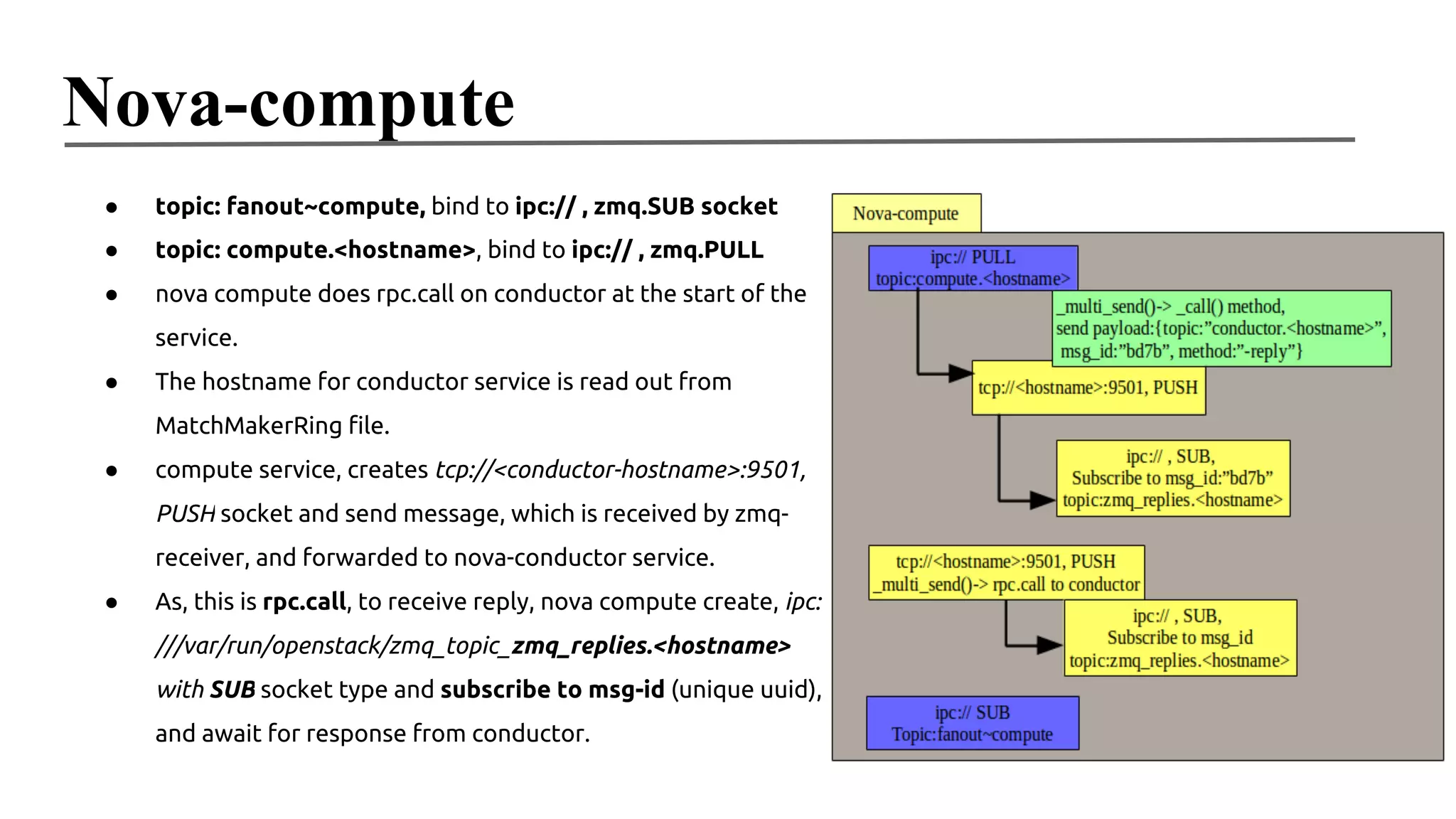

![Message routing](https://image.slidesharecdn.com/zmqincontextofopenstack-140306003156-phpapp01/75/Zmq-in-context-of-openstack-16-2048.jpg)

● The zmq-receiver service on nodex receives the data and checks its topic. Because the topic is zmq_replies.nodex, it creates ipc://..../zmq_topic_zmq_replies.nodex with a PUB socket and sends the data.

● The compute service already has an ipc:// reply socket of type SUB and is already subscribed to the msg_id, so it gets back the response to its rpc.call.

Example message for topic u'zmq_replies.nodex':

    {'args': {'msg_id': u'103b95cc64ab4e39924b6240a8dbaac8',
              'response': [[{'binary': u'nova-compute',
                             'created_at': '2014-02-19T11:02:32.000000',
                             'deleted': 0L,
                             'deleted_at': None,
                             'disabled': False,
                             'disabled_reason': None,
                             'host': u'nodex',
                             'id': 2L,
                             'report_count': 23,
                             'topic': u'compute',
                             'updated_at': '2014-02-19T11:08:33.572453'}]]},
     'method': '-process_reply'}
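The naming scheme in this reply path can be sketched with a few helpers. This is an illustration under stated assumptions rather than the driver's code: the function names are hypothetical, and `EXAMPLE_IPC_DIR` is a made-up placeholder because the slide elides the real ipc directory.

```python
# Hypothetical placeholder; the actual ipc directory is not shown on the slide.
EXAMPLE_IPC_DIR = "/tmp/example-ipc"


def reply_topic(host):
    """Topic on which replies for a given host are routed, e.g. zmq_replies.nodex."""
    return "zmq_replies.%s" % host


def ipc_address(ipc_dir, topic):
    """ipc:// endpoint the receiver publishes on for a topic."""
    return "ipc://%s/zmq_topic_%s" % (ipc_dir, topic)


def build_reply(msg_id, response):
    """Reply envelope in the shape of the example message above."""
    return {"method": "-process_reply",
            "args": {"msg_id": msg_id, "response": response}}


def is_for_caller(reply, waiting_msg_id):
    """A caller only cares about the reply carrying the msg_id it subscribed to."""
    return reply["args"]["msg_id"] == waiting_msg_id


if __name__ == "__main__":
    topic = reply_topic("nodex")
    print(topic)
    print(ipc_address(EXAMPLE_IPC_DIR, topic))
    reply = build_reply("103b95cc64ab4e39924b6240a8dbaac8", [[{"host": "nodex"}]])
    print(is_for_caller(reply, "103b95cc64ab4e39924b6240a8dbaac8"))
```

Keying the subscription on msg_id lets several outstanding calls share one reply endpoint per host while each caller still sees only its own response.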