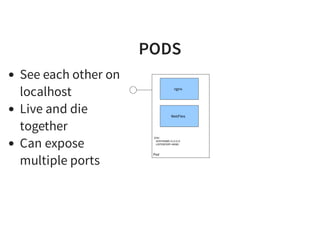

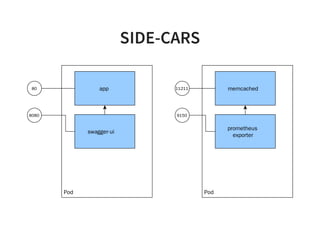

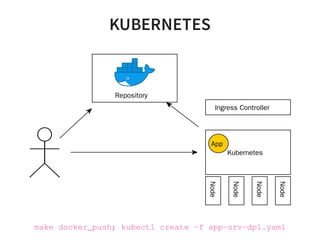

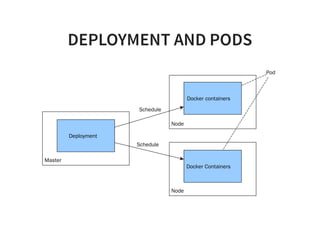

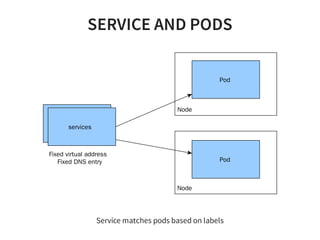

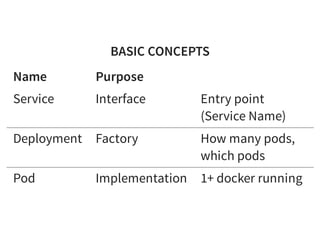

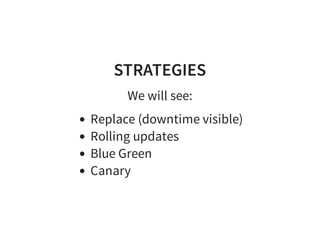

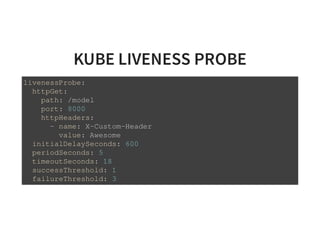

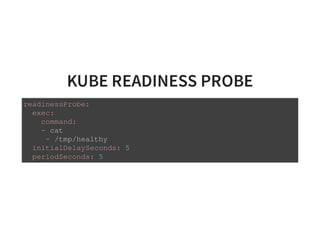

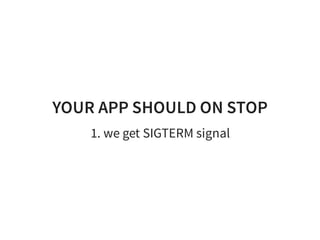

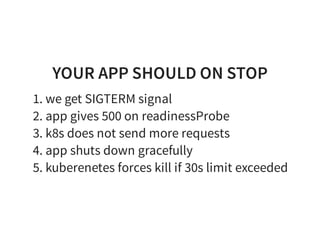

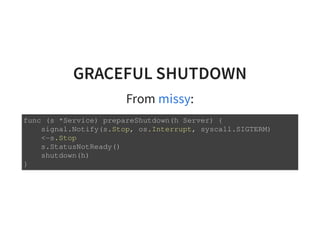

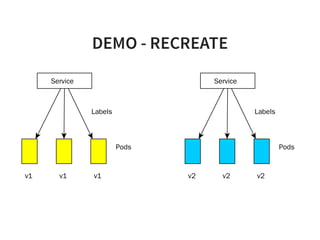

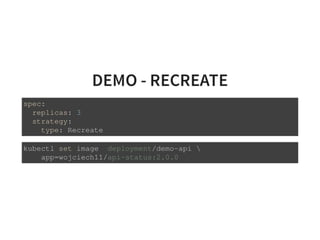

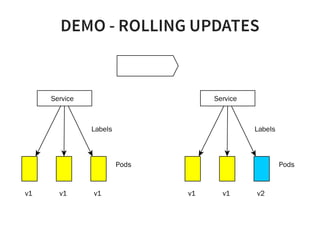

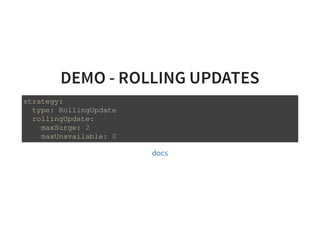

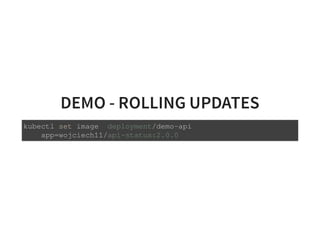

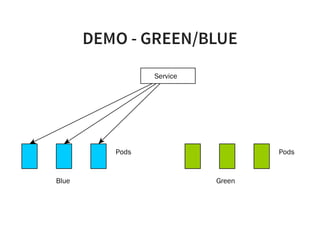

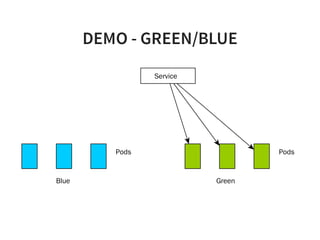

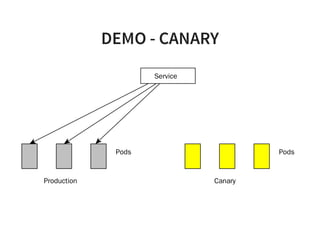

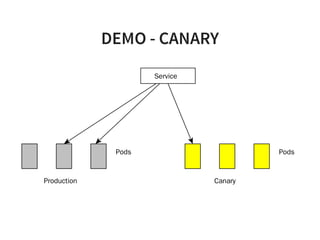

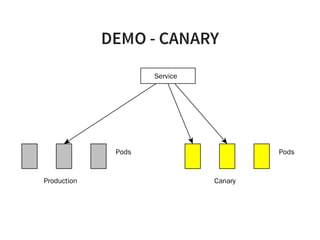

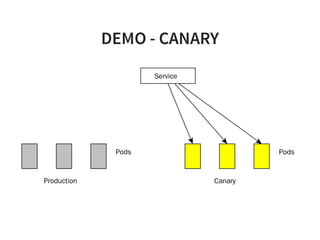

This talk covers zero-downtime deployment of microservices on Kubernetes, focusing on three strategies: rolling updates, blue-green deployments, and canary releases. It introduces the essential Kubernetes building blocks, such as pods and services, and stresses that readiness and liveness probes are needed to keep a service stable. A practical example deploys a demo API and shows how the different deployment strategies behave within Kubernetes.
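Since the deck pairs Go with Kubernetes, the probe idea can be illustrated with a minimal Go sketch. It is not the demo API from the talk: the `probes` type, the `/healthz` and `/readyz` paths, and port `8080` are all assumptions chosen for illustration. The liveness endpoint answers 200 whenever the process can respond, while the readiness endpoint answers 503 until the service marks itself ready, which is what would let Kubernetes hold traffic back from a pod that is still starting.

```go
package main

import (
	"log"
	"net/http"
	"sync/atomic"
)

// probes holds the state behind the two health endpoints.
// The type and its method names are hypothetical.
type probes struct {
	ready atomic.Bool // false until start-up work has finished
}

// livenessStatus returns 200 as long as the process can answer at all.
func (p *probes) livenessStatus() int {
	return http.StatusOK
}

// readinessStatus returns 200 only after the service has been marked ready,
// and 503 otherwise.
func (p *probes) readinessStatus() int {
	if p.ready.Load() {
		return http.StatusOK
	}
	return http.StatusServiceUnavailable
}

// mux wires both probes to HTTP paths (the paths are assumed, not from the talk).
func (p *probes) mux() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/healthz", func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(p.livenessStatus())
	})
	mux.HandleFunc("/readyz", func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(p.readinessStatus())
	})
	return mux
}

func main() {
	p := &probes{}

	// Start-up work (loading config, opening connections) would go here.
	p.ready.Store(true)

	// Port 8080 is an assumption for this sketch.
	log.Fatal(http.ListenAndServe(":8080", p.mux()))
}
```

In a pod spec, the container's `livenessProbe` and `readinessProbe` would each use an `httpGet` check pointing at the matching path and port. Setting `ready` back to false before shutting down is one way to drain traffic from a pod that is about to be replaced.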

![STORY

Go + Kubernetes

SMACC - Fintech / ML - [10.2017 - ...]

Lyke - Mobile Fashion app - [12.2016 - 07.2017]](https://image.slidesharecdn.com/index-181128060109/85/Zero-downtime-deployment-of-micro-services-with-Kubernetes-3-320.jpg)

![STORY

Lyke - [12.2016 - 07.2017]

SMACC - [10.2017 - present]](https://image.slidesharecdn.com/index-181128060109/85/Zero-downtime-deployment-of-micro-services-with-Kubernetes-57-320.jpg)