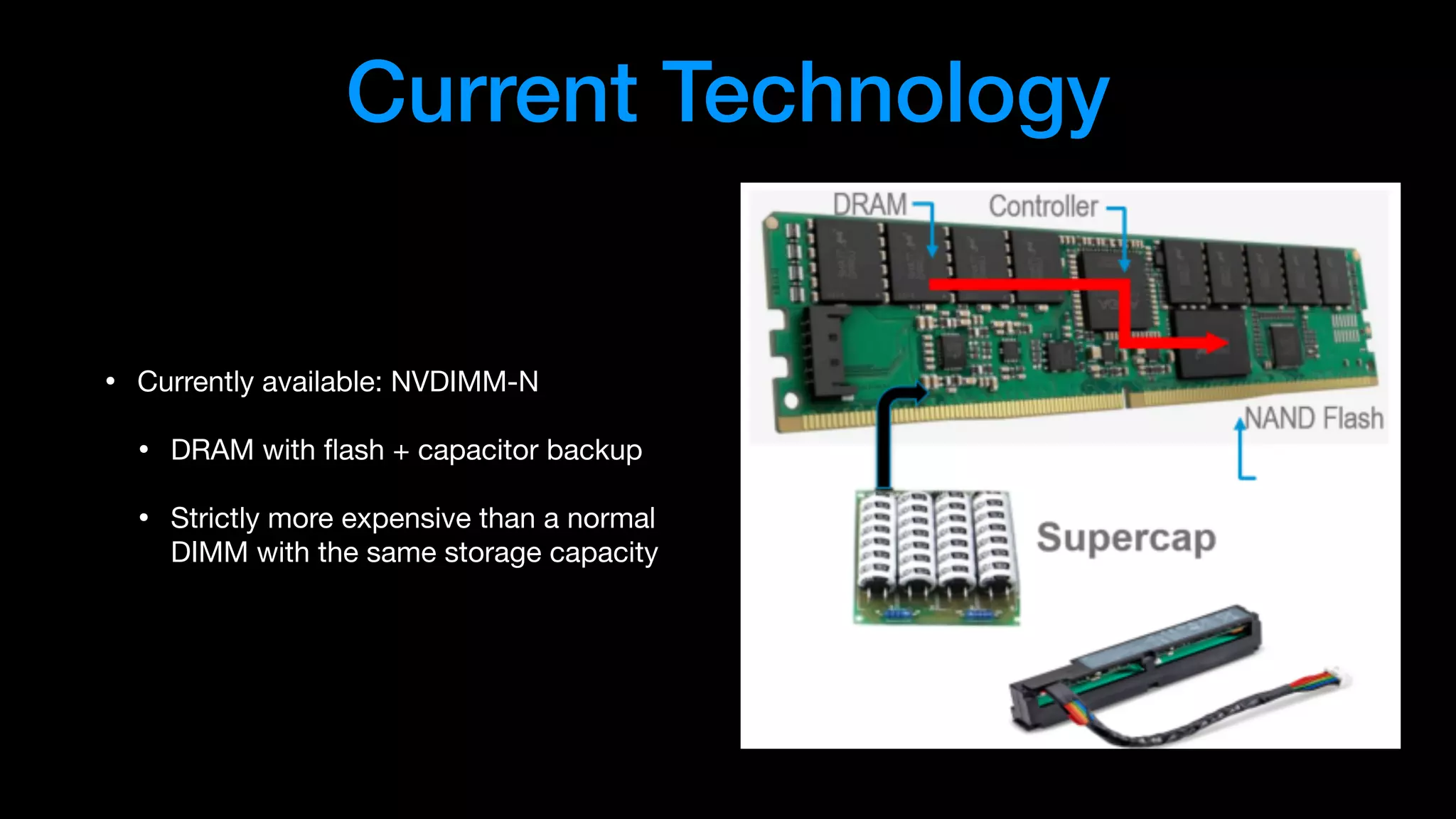

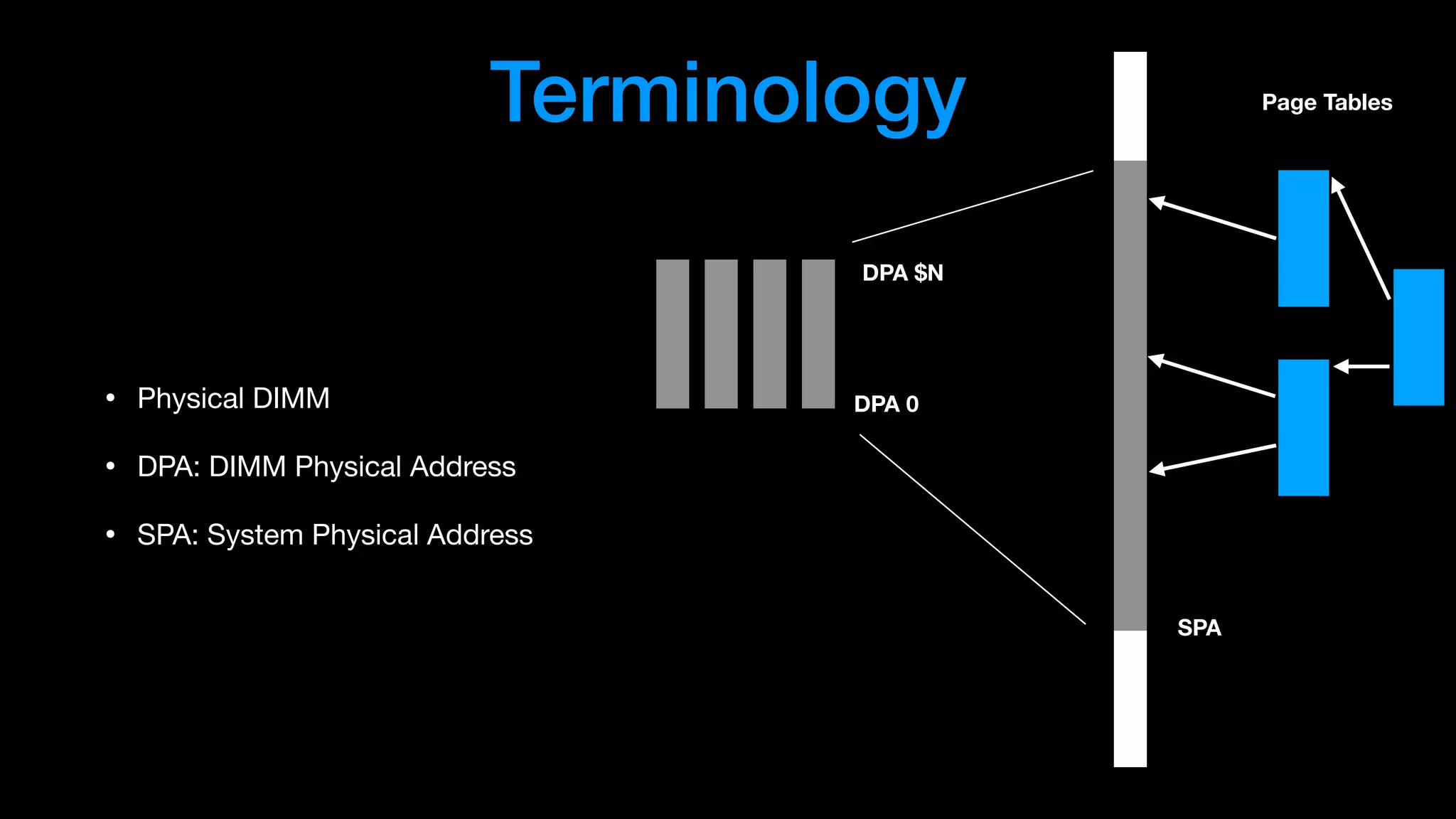

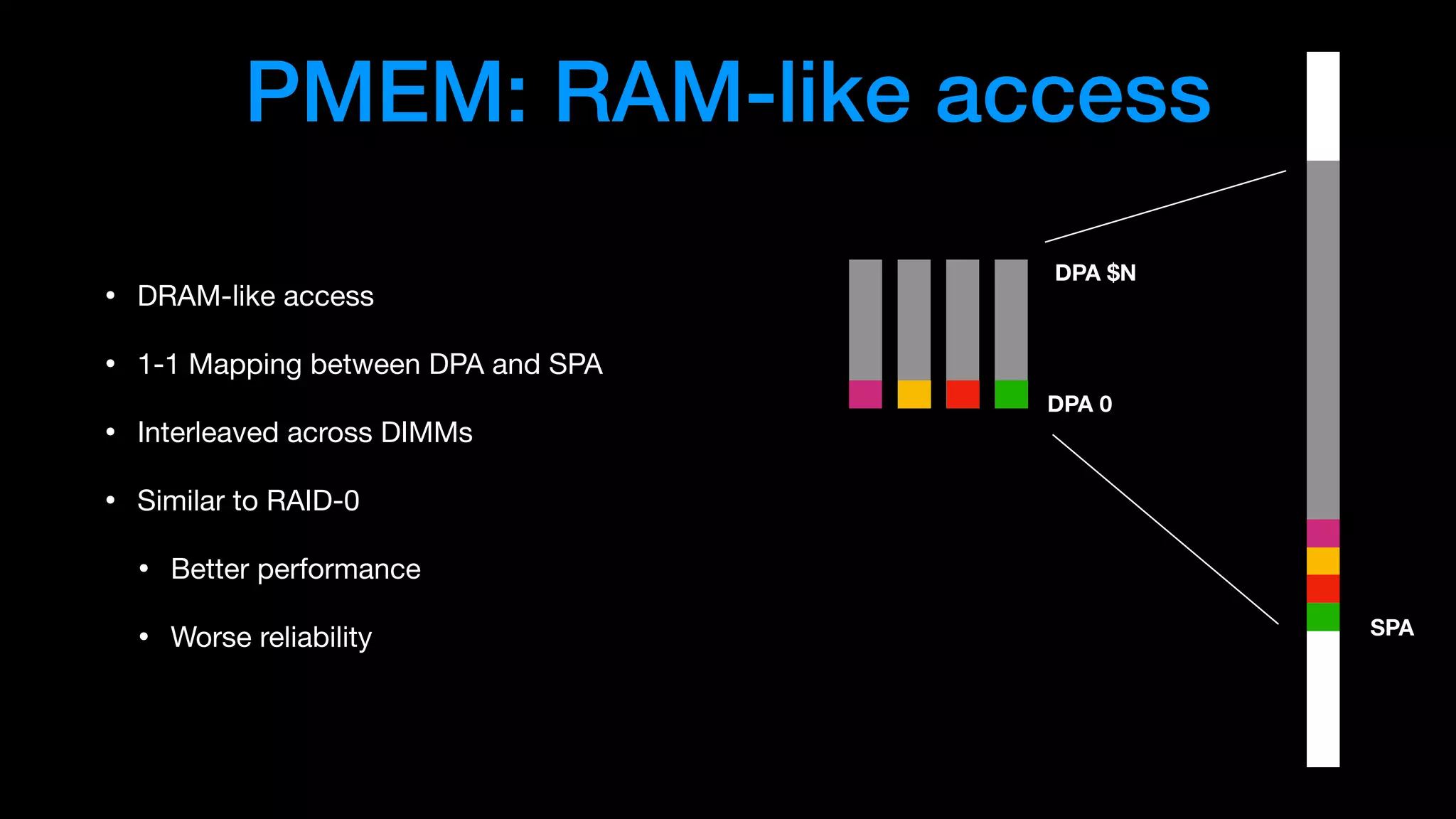

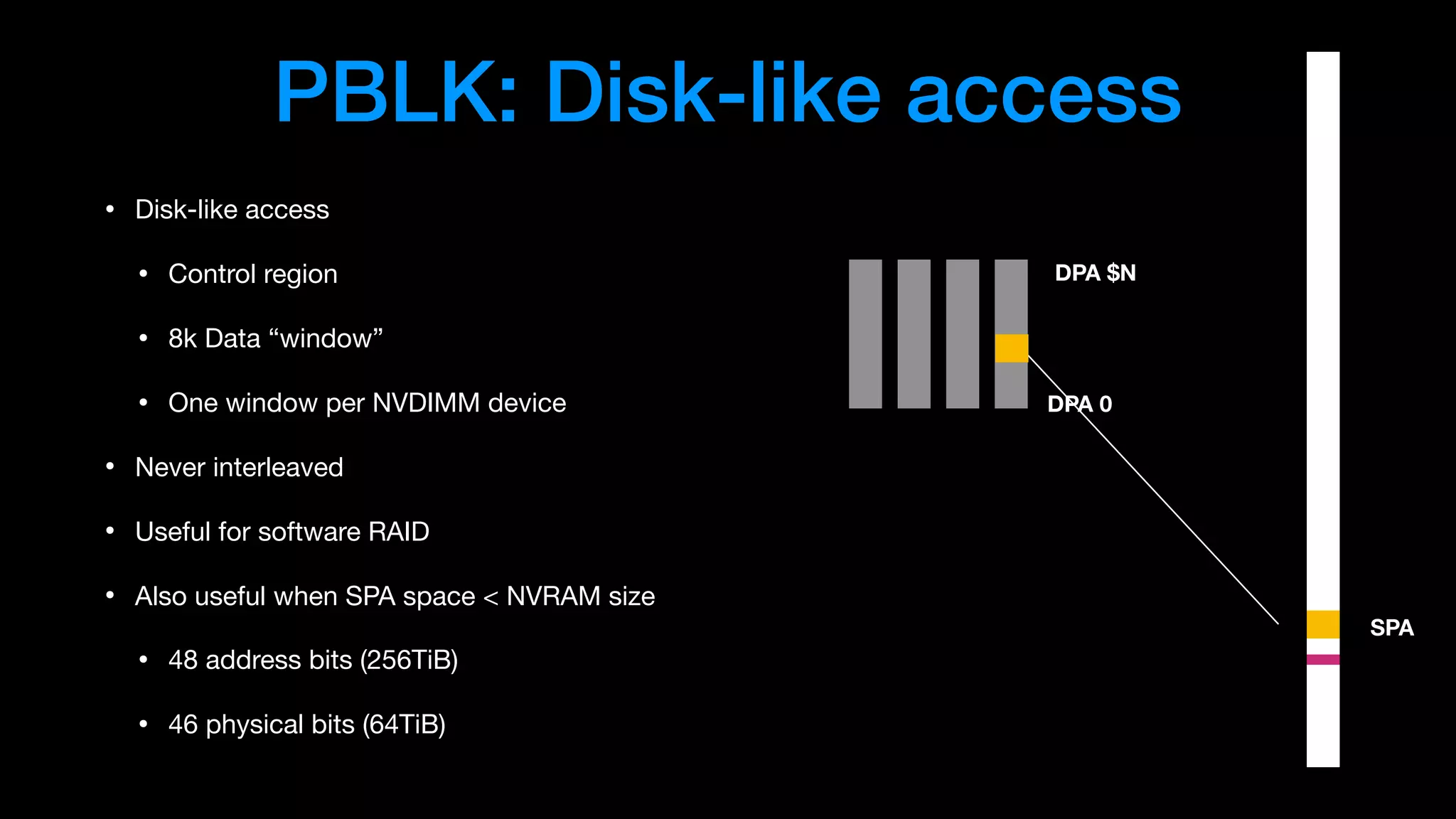

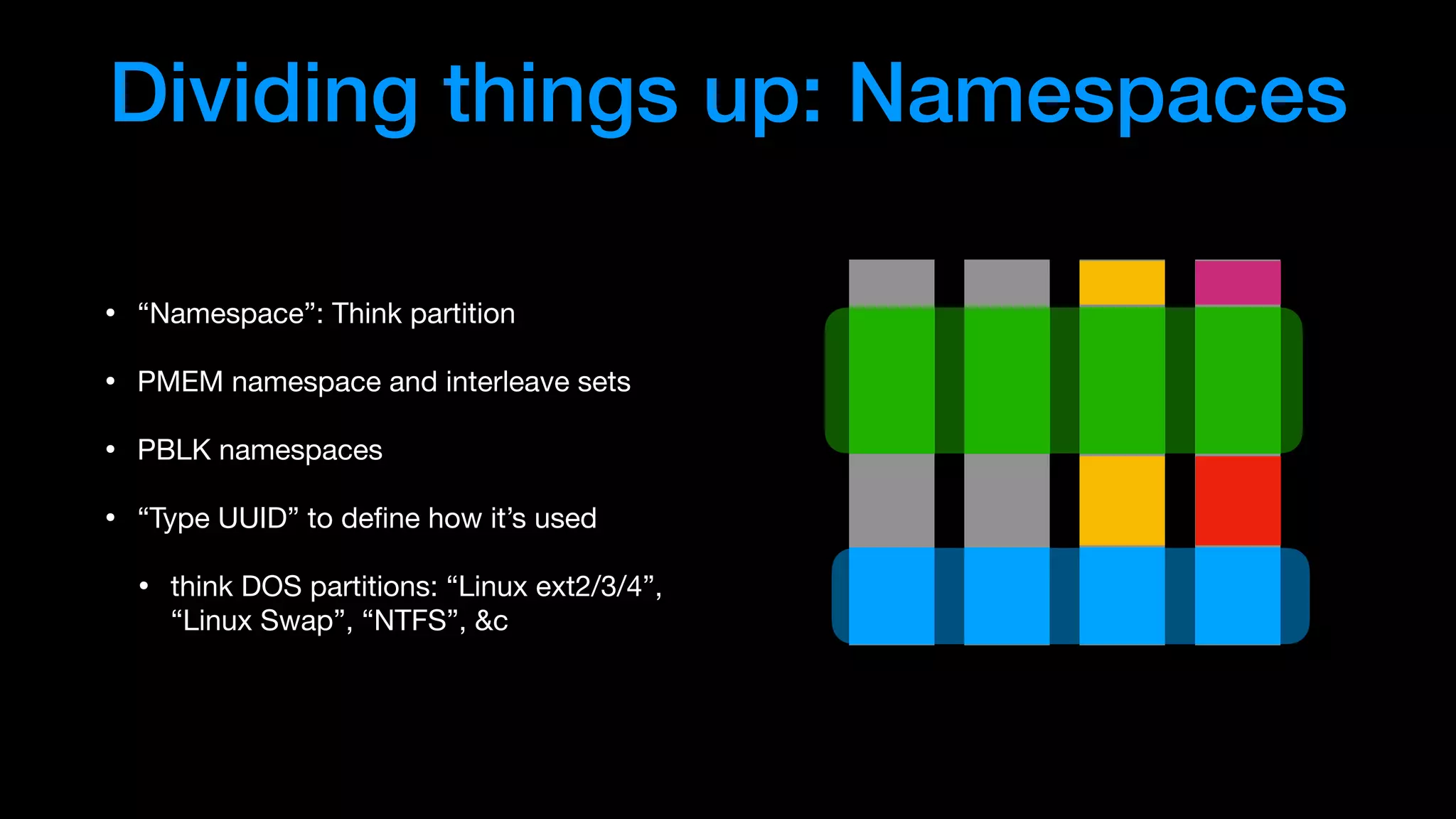

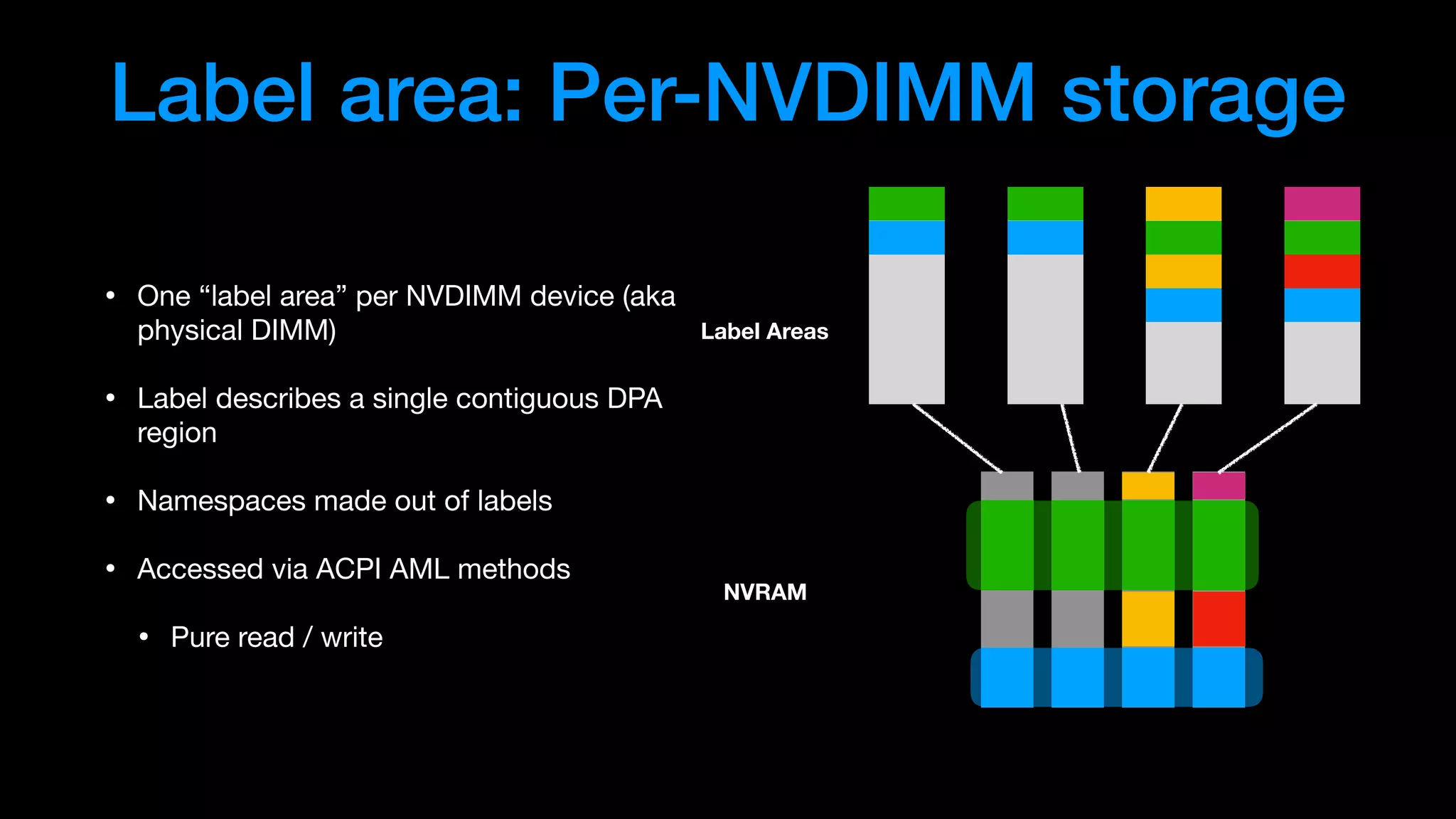

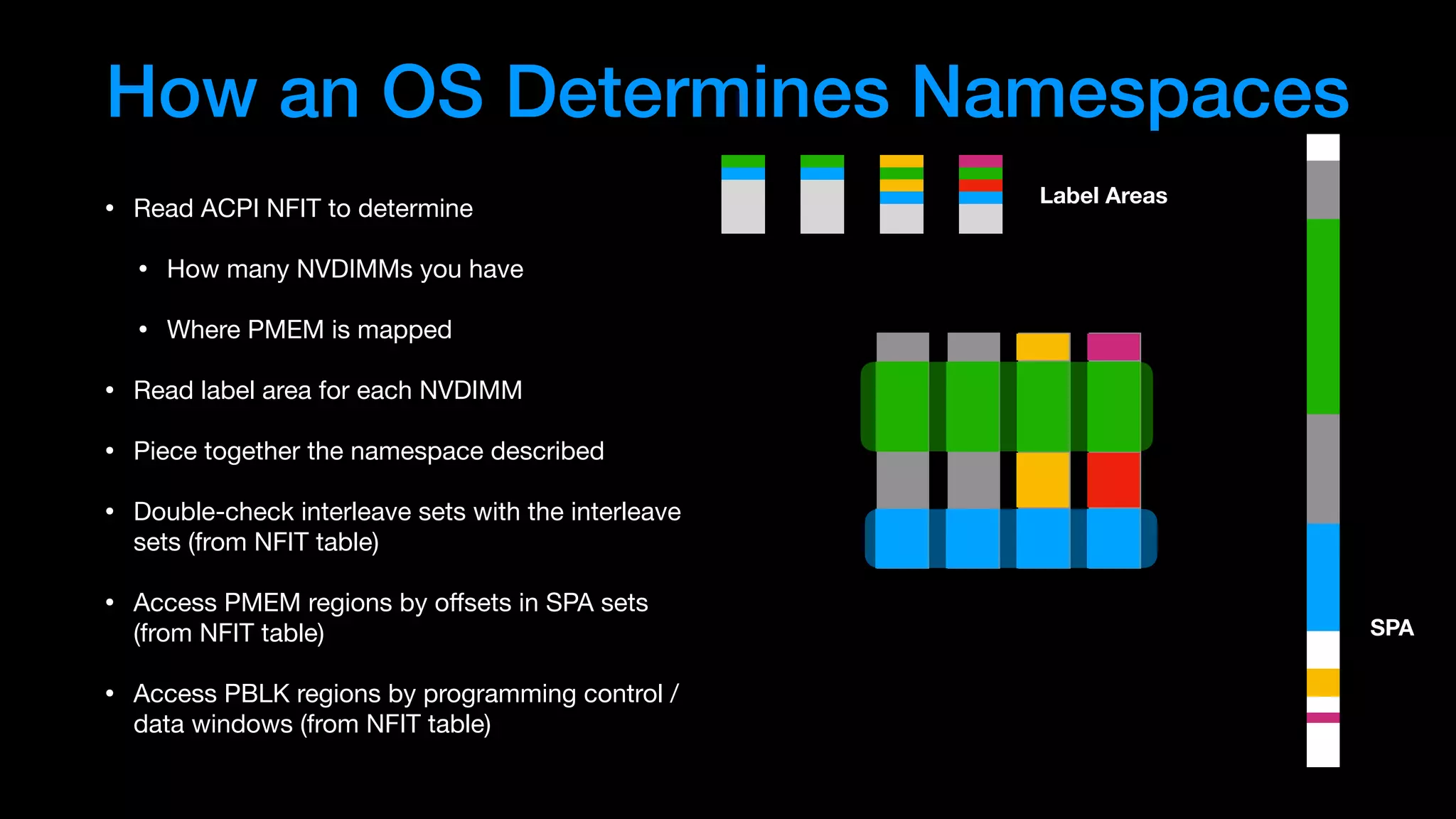

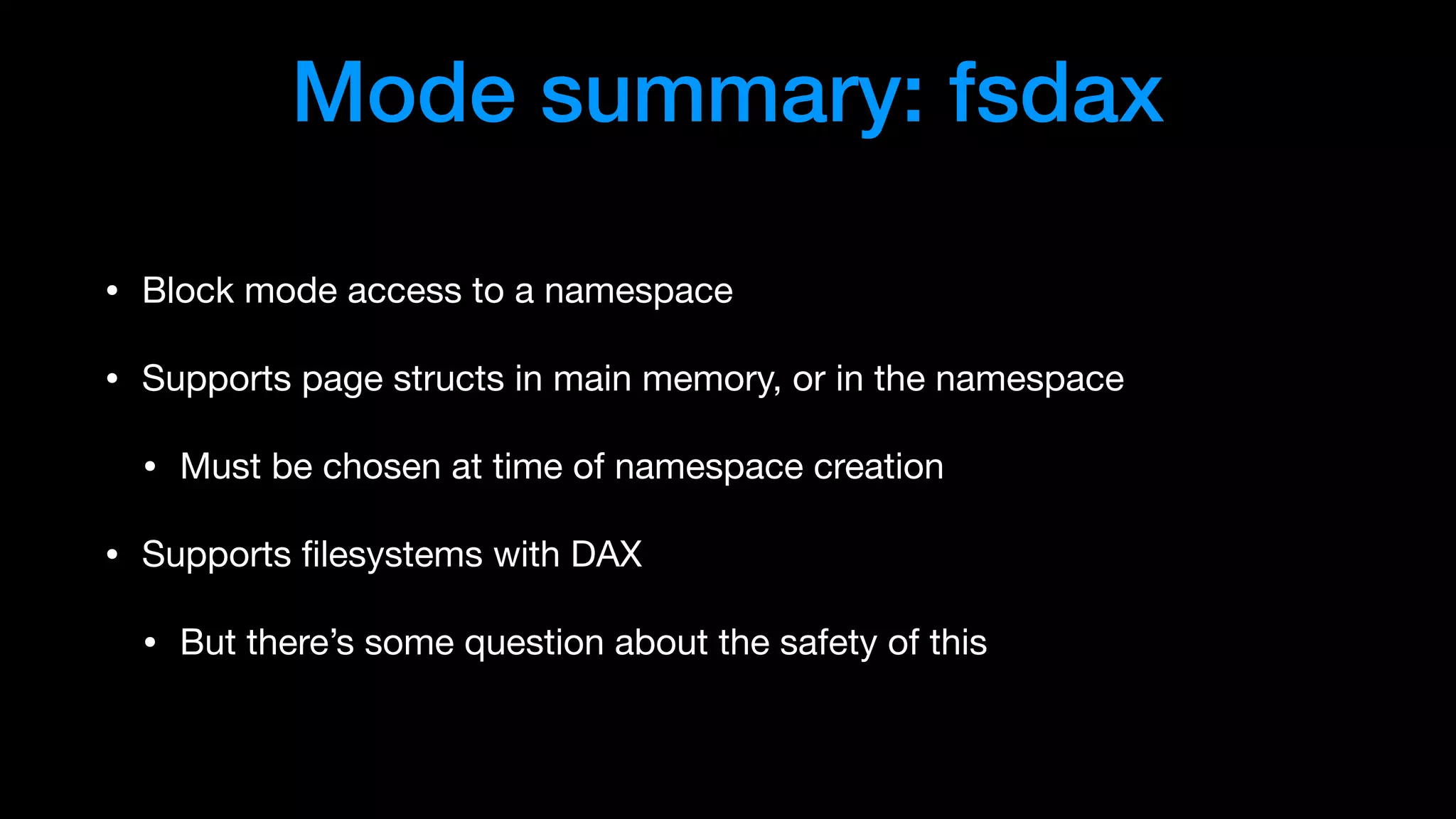

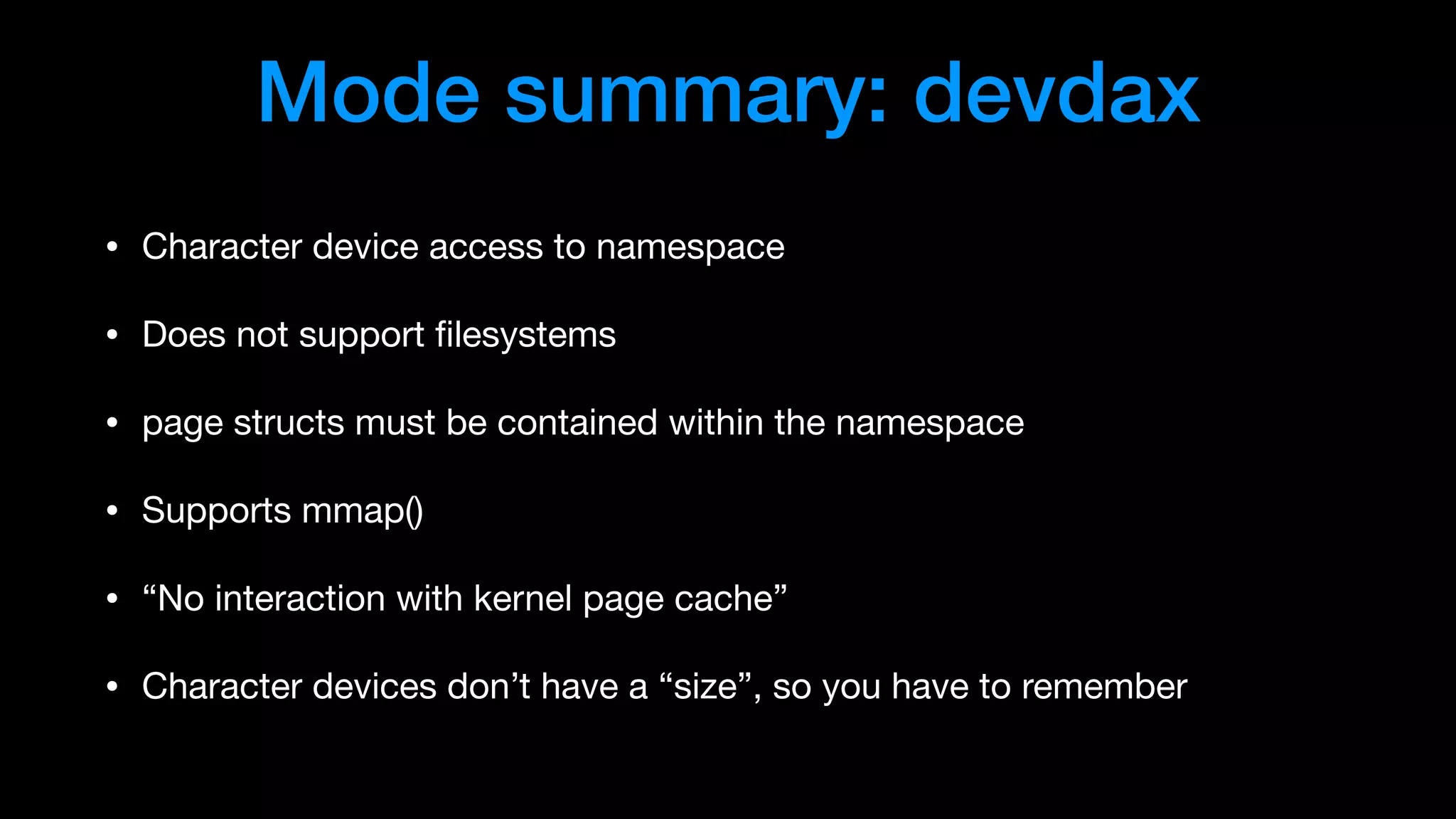

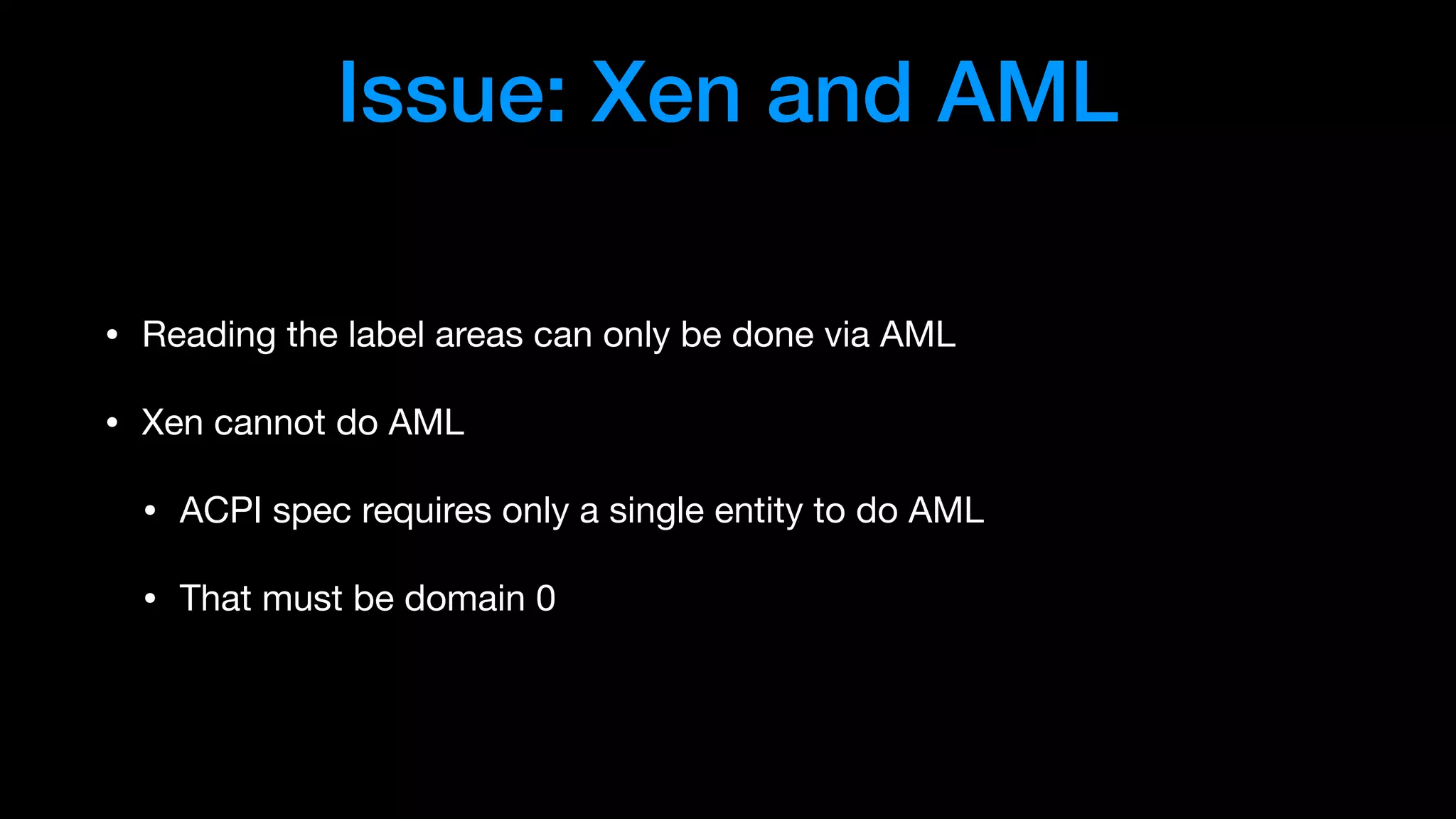

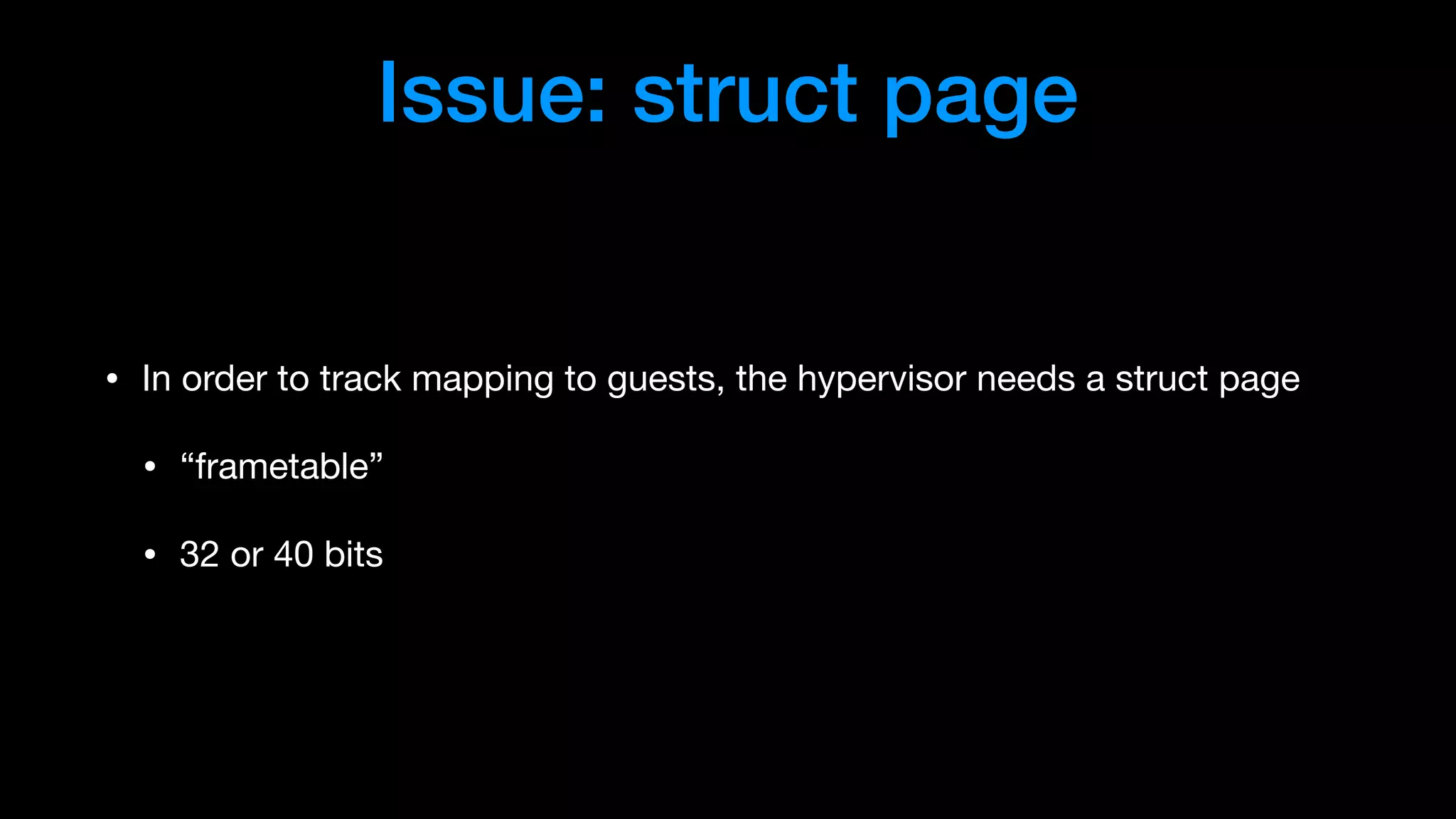

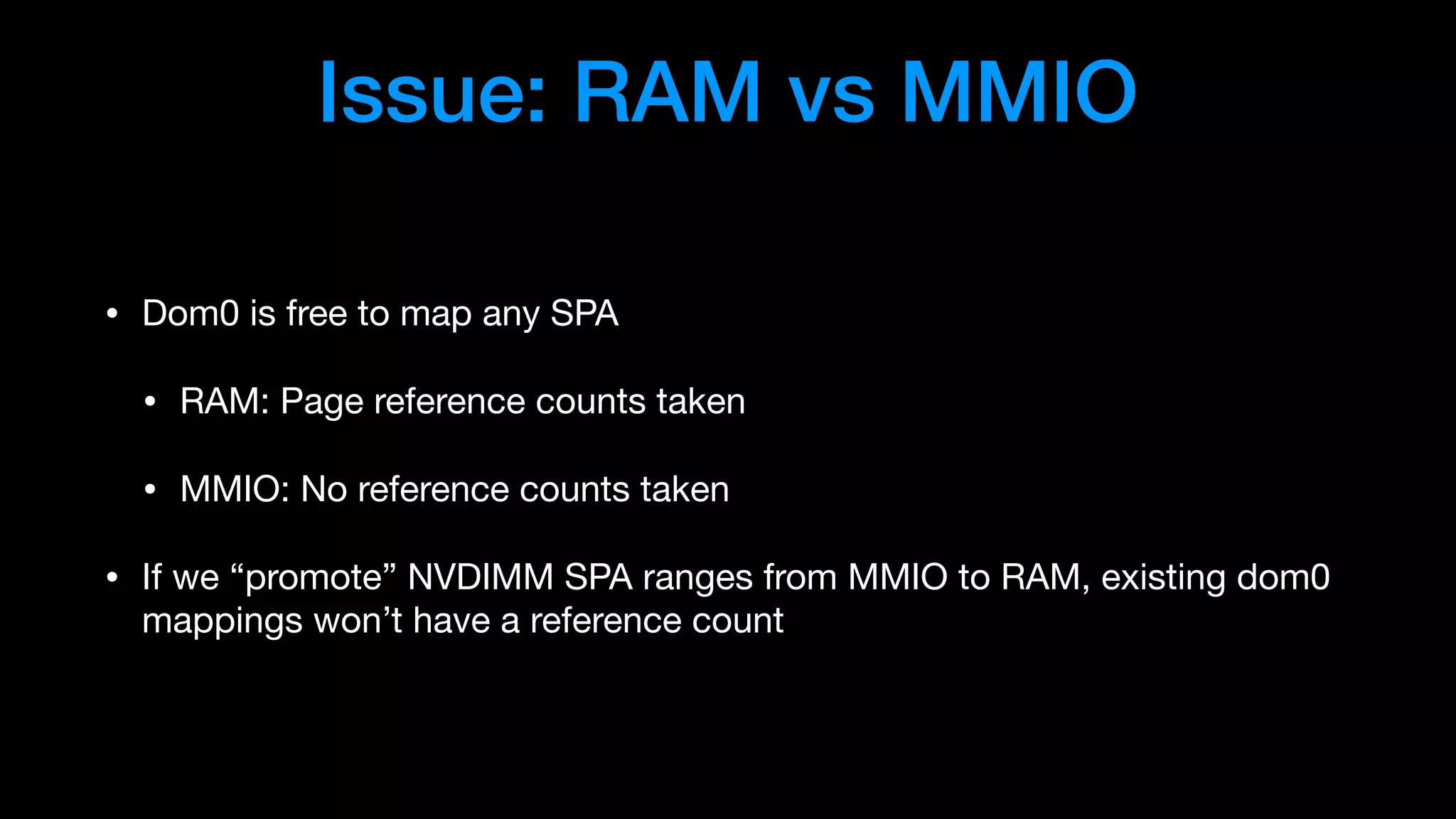

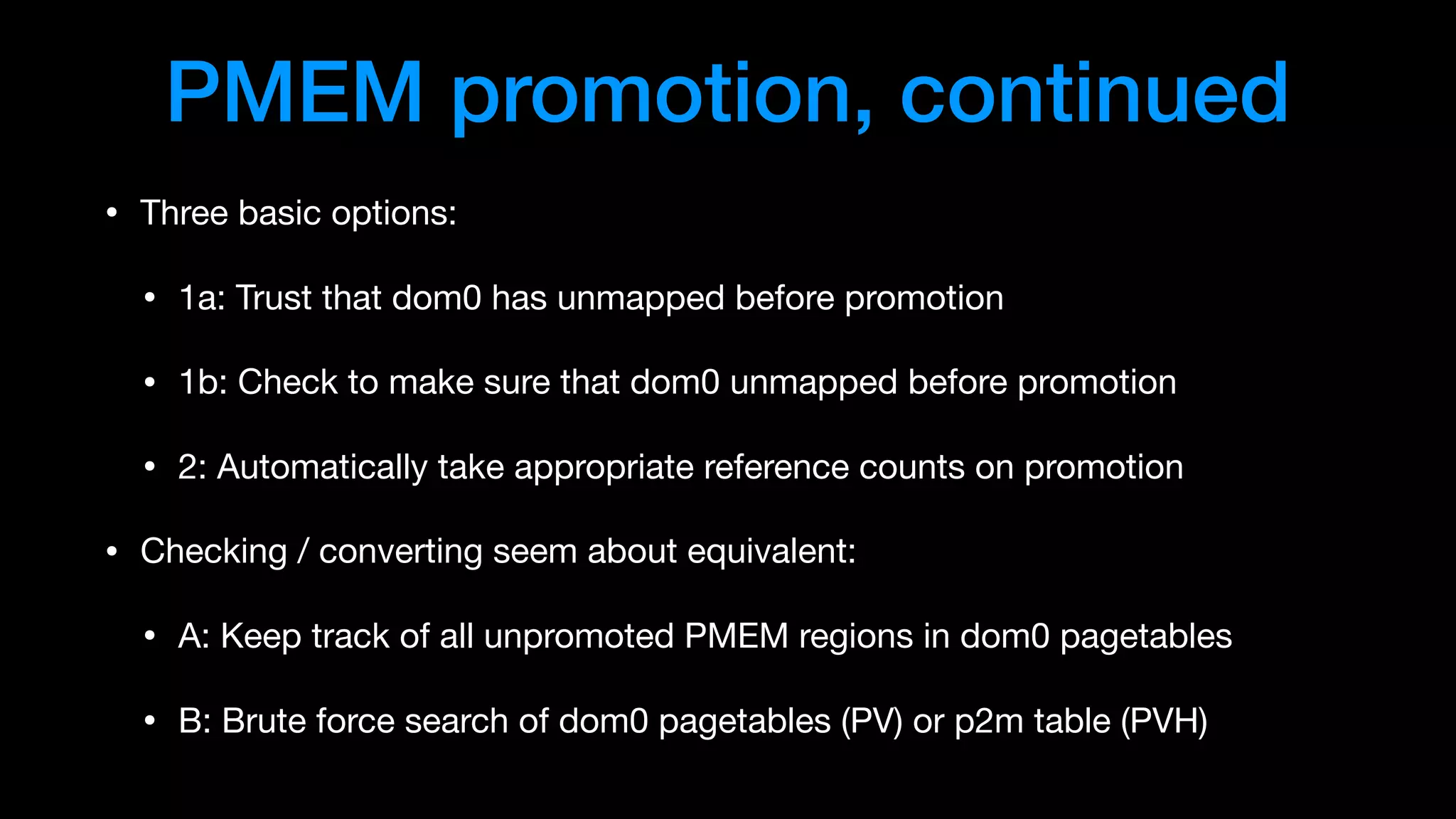

The document provides an overview of NVDIMMs, outlining their architecture and potential benefits for operating systems, particularly in Linux and Xen environments. It describes the current technology, issues with namespace management, and the complexities of accessing NVDIMM memory regions. It also discusses approaches to the challenges of integrating NVDIMMs into virtualized environments, along with proposals for improving NVDIMM mappings in Xen.