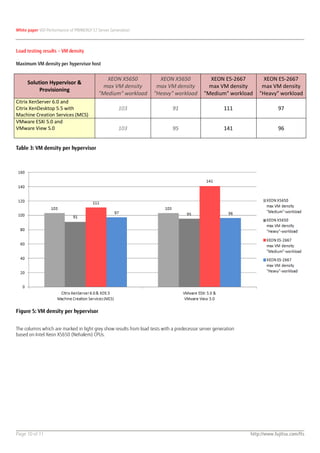

The white paper evaluates the performance of Fujitsu's Primergy S7 servers in a virtual desktop infrastructure (VDI), focusing on CPU and RAM requirements under different workloads. Its key finding is that the local storage and RAM configuration significantly affect VM density, that is, the number of virtual desktops a single server can host. The paper recommends sizing resources so that VMs do not incur wait states caused by slower disk I/O. The results provide a basis for sizing VDI environments built on Citrix XenDesktop and VMware View.
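The sketch below shows one way to think about VM density: a host supports only as many desktops as its scarcest resource allows. It is not the paper's sizing method. All names (`HostConfig`, `DesktopProfile`, `vm_density`) and parameters are hypothetical, and per-core and per-VM figures such as `vms_per_core` and `ram_per_vm_gb` are workload-dependent assumptions that would have to come from measurements, not from this example. The RAM limit is computed so that every desktop fits in physical memory, which matches the summary's recommendation to avoid wait states caused by slower disk I/O.

```python
from dataclasses import dataclass


@dataclass
class HostConfig:
    cores: int                # physical CPU cores in the host (hypothetical input)
    ram_gb: float             # installed RAM in GB
    hypervisor_ram_gb: float  # RAM assumed to be reserved for the hypervisor


@dataclass
class DesktopProfile:
    vms_per_core: float       # desktops one core can sustain for a workload (assumed)
    ram_per_vm_gb: float      # RAM assigned to each desktop VM


def vm_density(host: HostConfig, desktop: DesktopProfile) -> dict:
    """Return CPU-bound, RAM-bound and overall desktop counts for one host.

    The RAM limit only counts desktops that fit entirely in physical memory,
    so no desktop memory has to be paged out to slower disk.
    """
    cpu_limit = int(host.cores * desktop.vms_per_core)
    usable_ram = max(host.ram_gb - host.hypervisor_ram_gb, 0.0)
    ram_limit = int(usable_ram // desktop.ram_per_vm_gb)

    if cpu_limit < ram_limit:
        bottleneck = "CPU"
    elif ram_limit < cpu_limit:
        bottleneck = "RAM"
    else:
        bottleneck = "balanced"

    return {
        "cpu_limit": cpu_limit,
        "ram_limit": ram_limit,
        "density": min(cpu_limit, ram_limit),
        "bottleneck": bottleneck,
    }


if __name__ == "__main__":
    # Illustrative numbers only, not results from the white paper.
    host = HostConfig(cores=16, ram_gb=128, hypervisor_ram_gb=4)
    desktop = DesktopProfile(vms_per_core=6, ram_per_vm_gb=1.5)
    print(vm_density(host, desktop))
```

With these made-up inputs the CPU would allow 96 desktops but RAM only 82, so RAM is the limiting factor. Adding memory would raise density until the CPU limit is reached.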