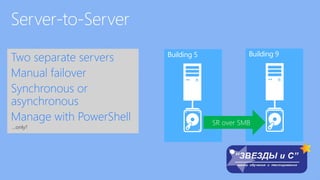

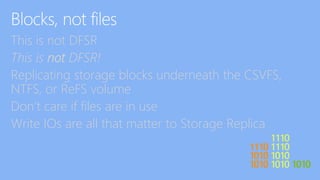

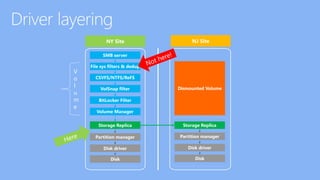

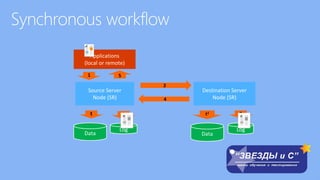

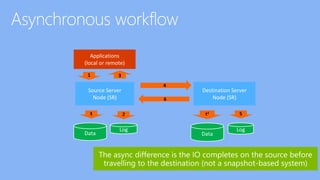

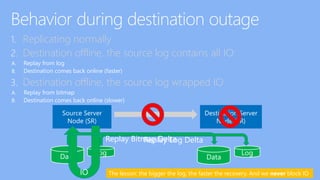

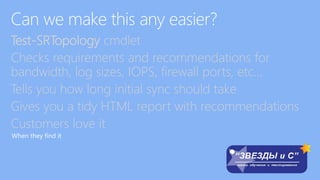

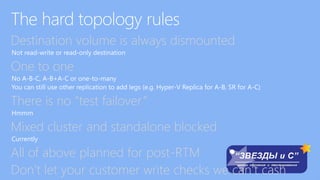

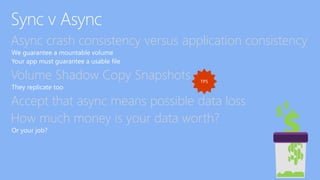

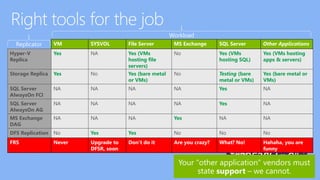

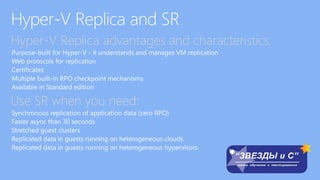

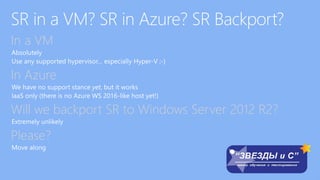

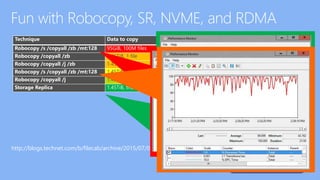

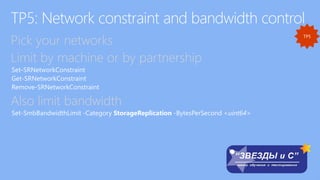

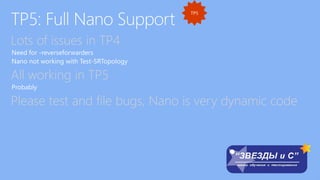

This document describes Storage Replica, including its support for synchronous and asynchronous replication over SMB 3.1.1. It covers use cases, management tools, performance metrics, and how Storage Replica differs from other replication technologies. It also stresses system requirements, log management, and optimizations for replicating data effectively across clusters.