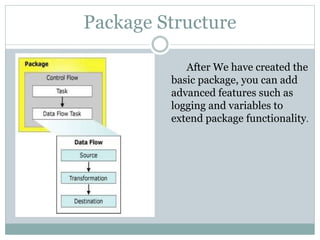

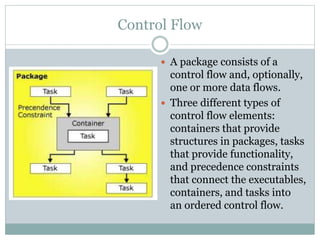

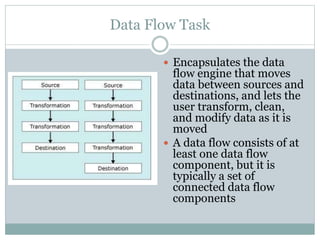

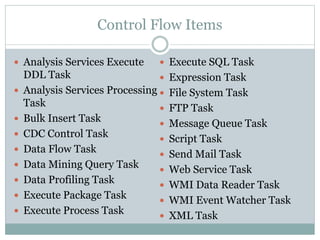

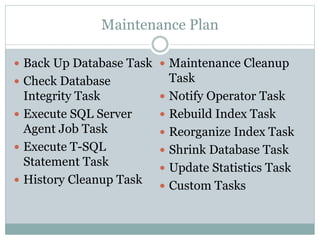

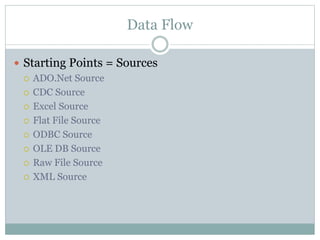

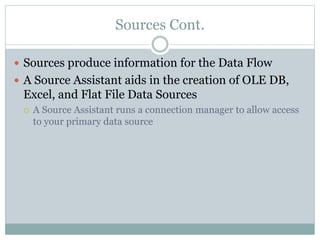

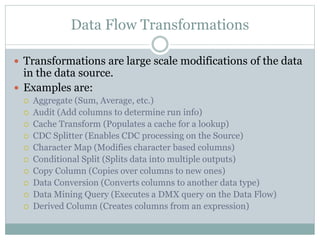

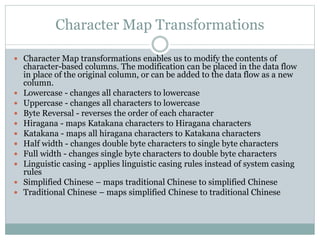

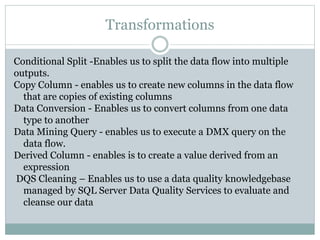

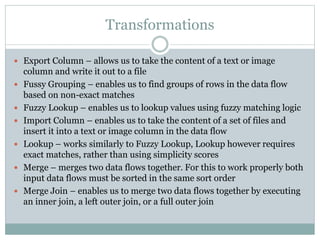

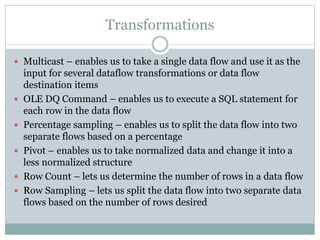

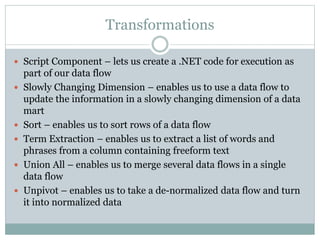

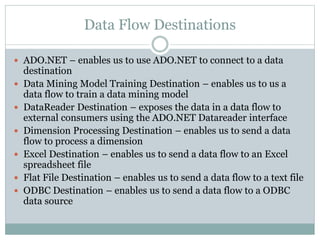

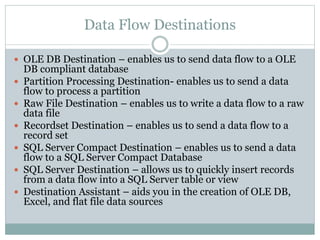

The document discusses Microsoft SQL Server Integration Services (SSIS), a platform for building data integration and data transformation solutions. It describes the structure of an SSIS package, which contains connections, tasks, data flows, and other elements. It explains the control flow, which sequences tasks and containers, and the data flow, which moves data from sources to destinations and transforms it along the way. It also lists the tasks, transformations, and destinations available in SSIS packages for integrating, cleansing, and loading data.
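The split between control flow and data flow can be illustrated with a small conceptual model. This is a sketch under stated assumptions, not SSIS code. SSIS packages are authored in designer tools and stored as `.dtsx` files. The classes `Package` and `DataFlow`, and the helper functions `trim_names` and `keep_positive`, are hypothetical names chosen only to show the idea. In this model the control flow runs tasks in order and stops at the first failure, which loosely resembles an on-success precedence constraint. The data flow streams rows from a source, through a chain of transformations, into a destination.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, Iterator

Row = dict  # one record flowing through the (hypothetical) pipeline


@dataclass
class DataFlow:
    """Hypothetical model of a data flow: source -> transformations -> destination."""
    source: Callable[[], Iterable[Row]]
    transforms: list[Callable[[Iterator[Row]], Iterator[Row]]]
    destination: list[Row]

    def run(self) -> bool:
        # Chain the transformations lazily so rows stream through one at a time.
        rows: Iterator[Row] = iter(self.source())
        for transform in self.transforms:
            rows = transform(rows)
        self.destination.extend(rows)  # "load" step
        return True


@dataclass
class Package:
    """Hypothetical model of a package whose control flow runs tasks in sequence."""
    tasks: list[Callable[[], bool]] = field(default_factory=list)

    def execute(self) -> bool:
        # Run each task in order and stop at the first failure, loosely
        # mimicking an on-success precedence constraint between tasks.
        for task in self.tasks:
            if not task():
                return False
        return True


def trim_names(rows: Iterator[Row]) -> Iterator[Row]:
    # Cleansing step, similar in spirit to a Derived Column transformation.
    for r in rows:
        yield {**r, "name": r["name"].strip()}


def keep_positive(rows: Iterator[Row]) -> Iterator[Row]:
    # Filtering step, similar in spirit to keeping one Conditional Split output.
    for r in rows:
        if r["amount"] > 0:
            yield r


target: list[Row] = []
flow = DataFlow(
    source=lambda: [{"name": " Ann ", "amount": 10}, {"name": "Bob", "amount": 0}],
    transforms=[trim_names, keep_positive],
    destination=target,
)
# Control flow: a placeholder task that succeeds, then the data flow task.
pkg = Package(tasks=[lambda: True, flow.run])
ok = pkg.execute()
print(ok, target)  # True [{'name': 'Ann', 'amount': 10}]
```

This model keeps the two layers separate on purpose. `Package.execute` only decides *whether and in what order* work runs. `DataFlow.run` decides *how rows are moved and reshaped*. That mirrors the division of responsibilities the summary describes.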