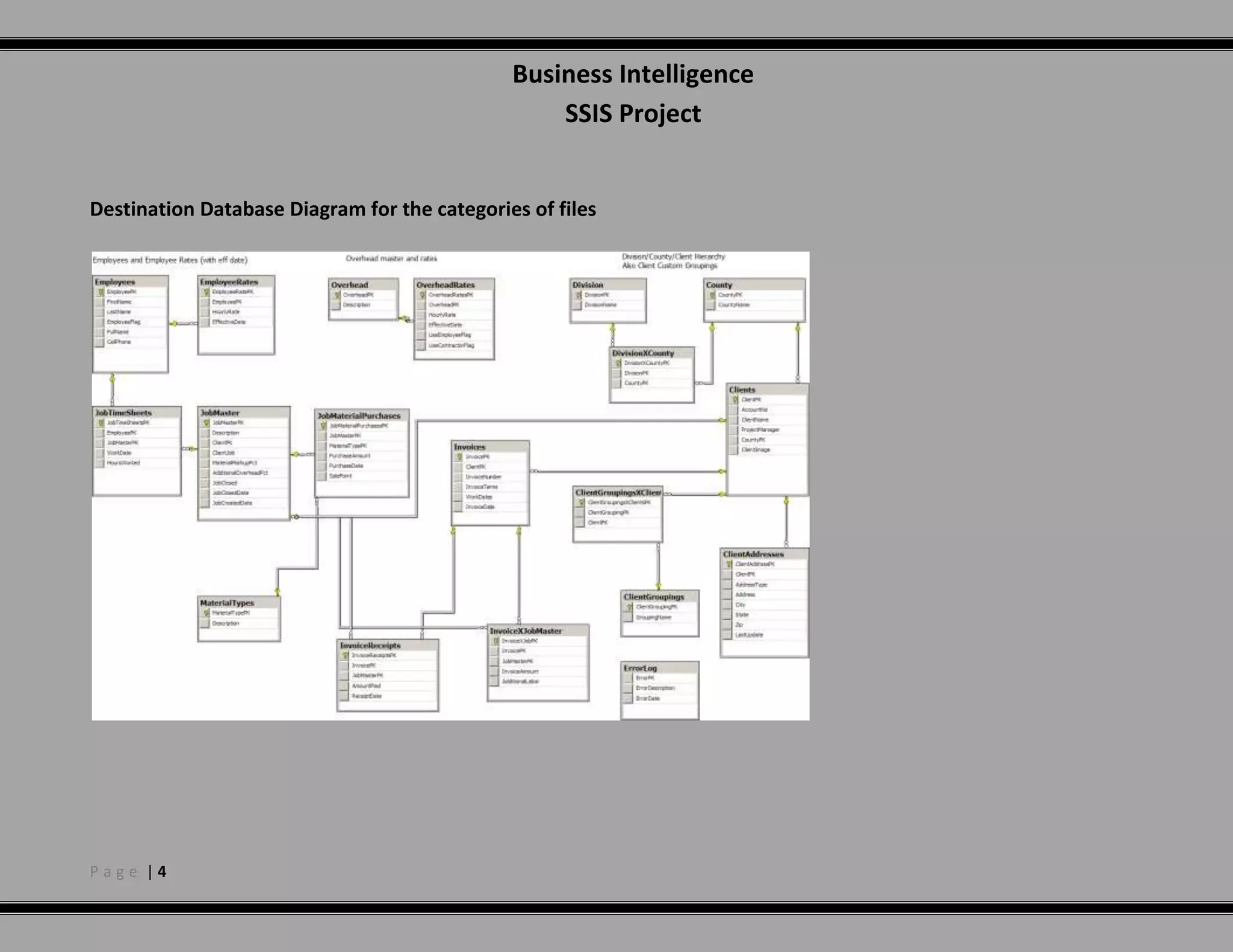

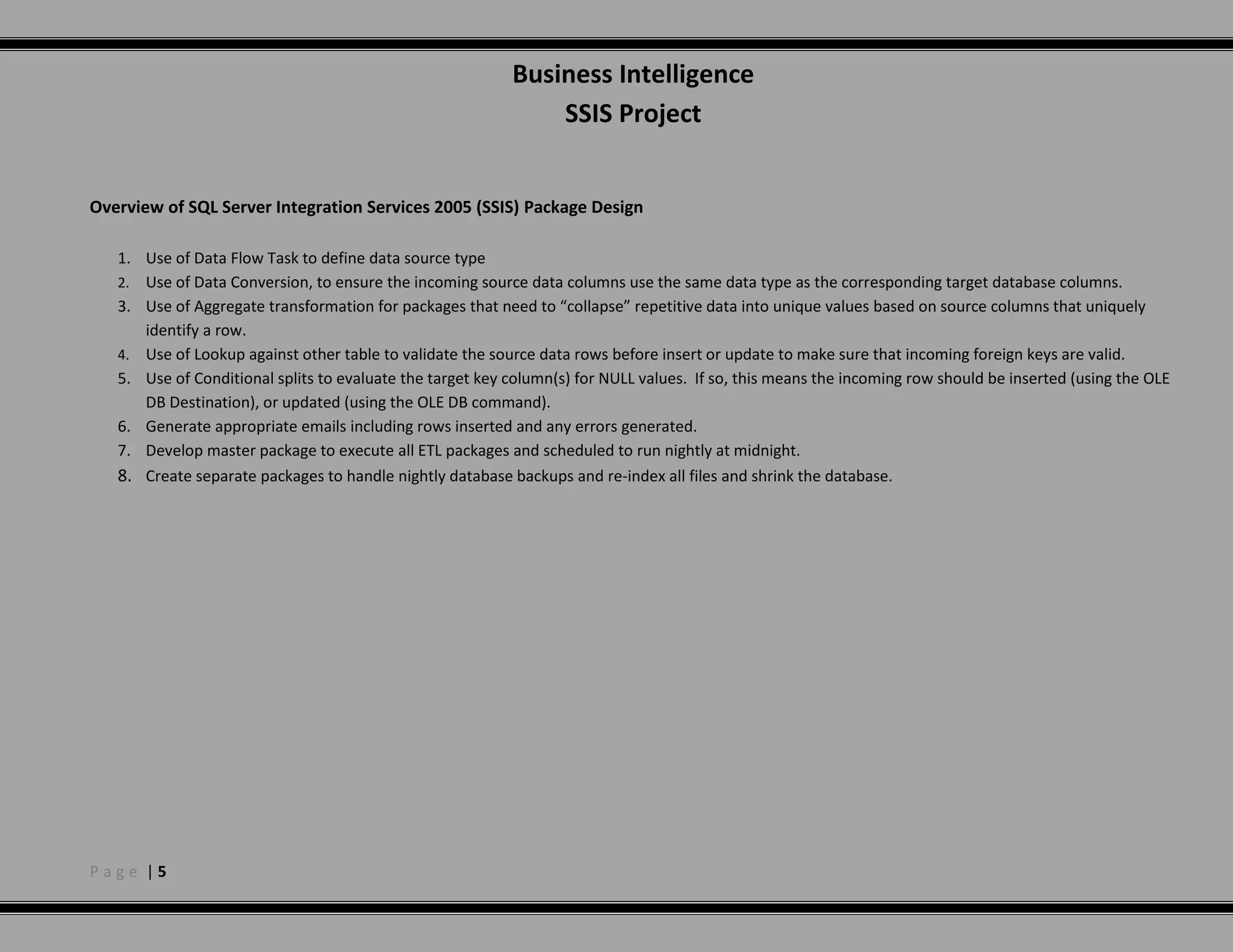

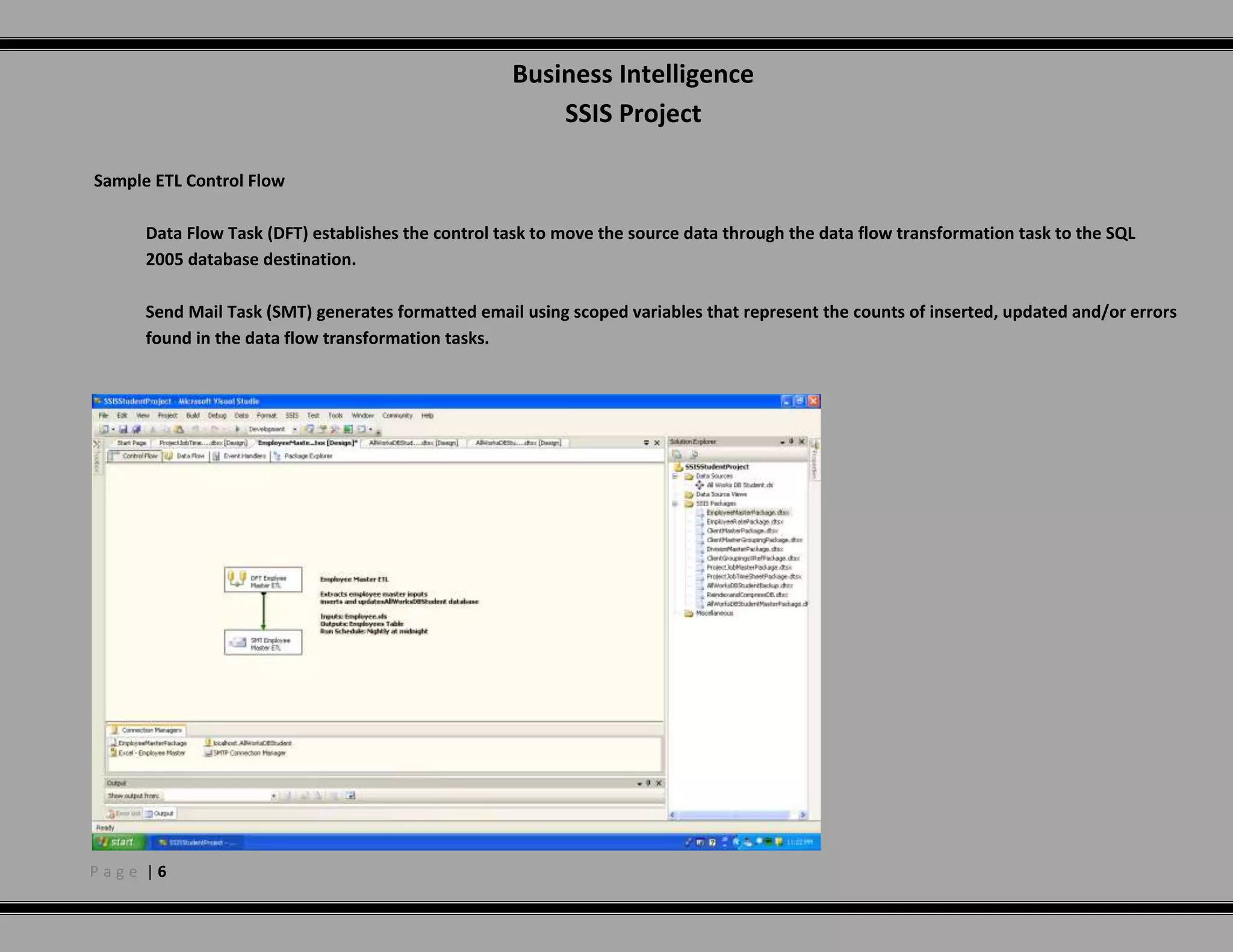

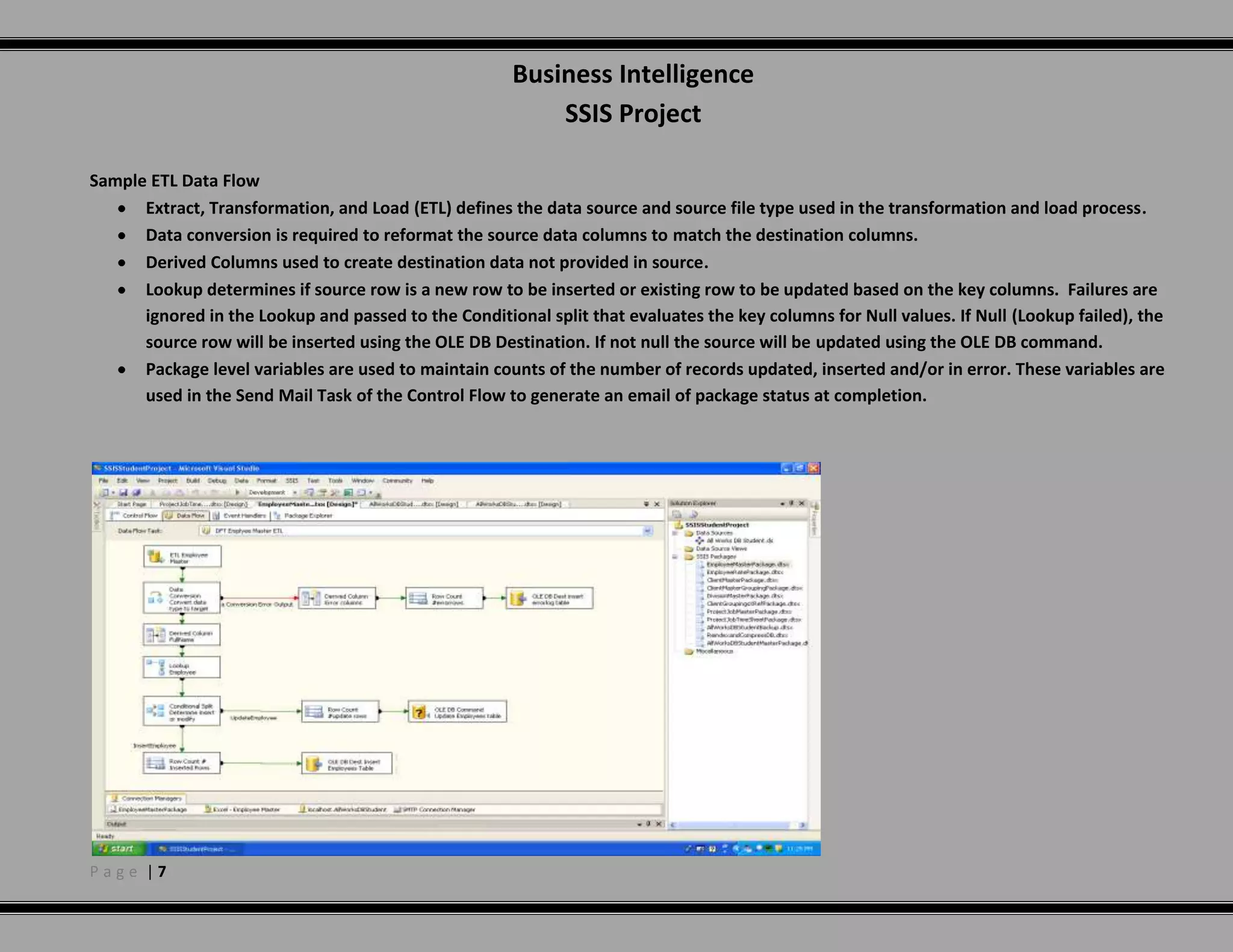

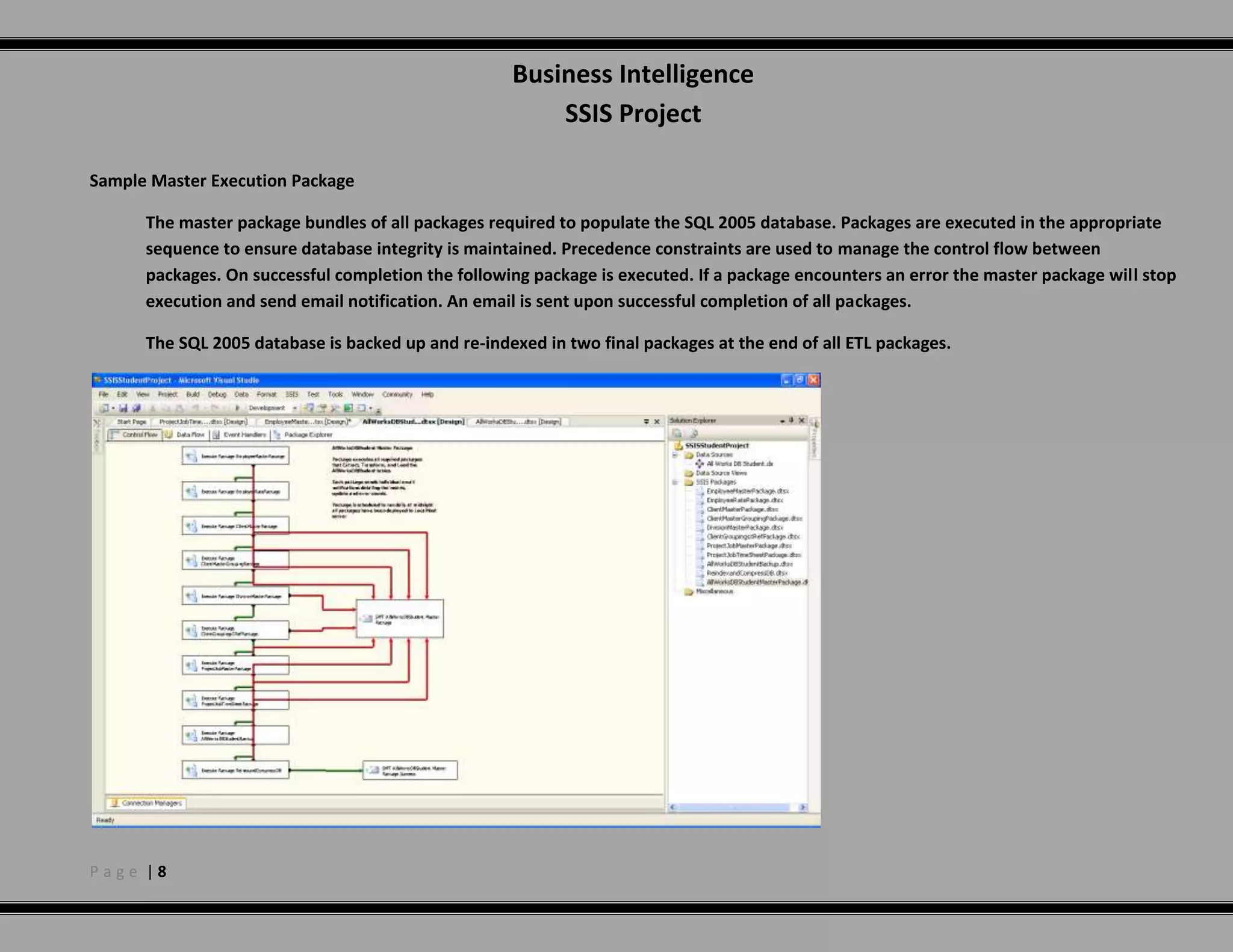

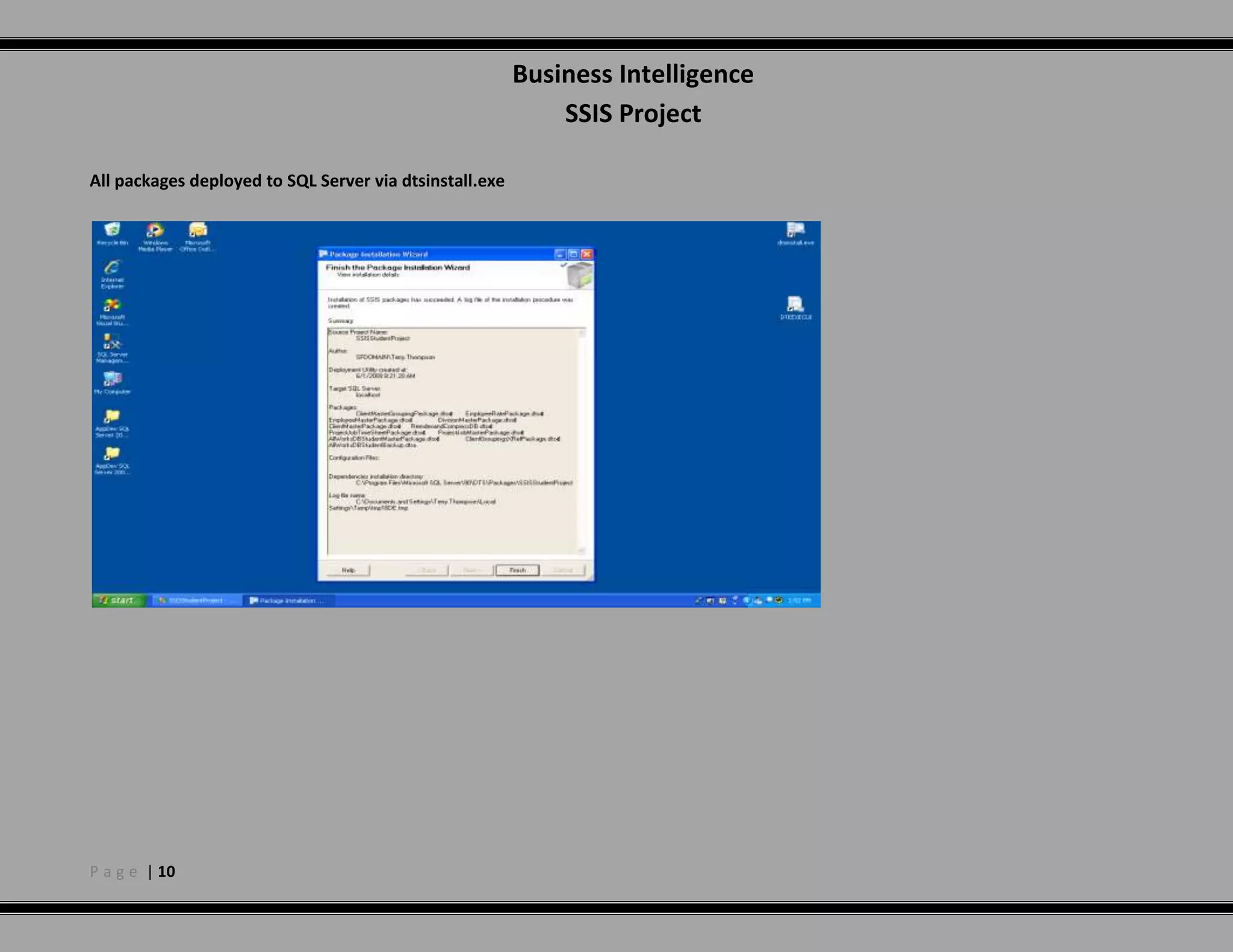

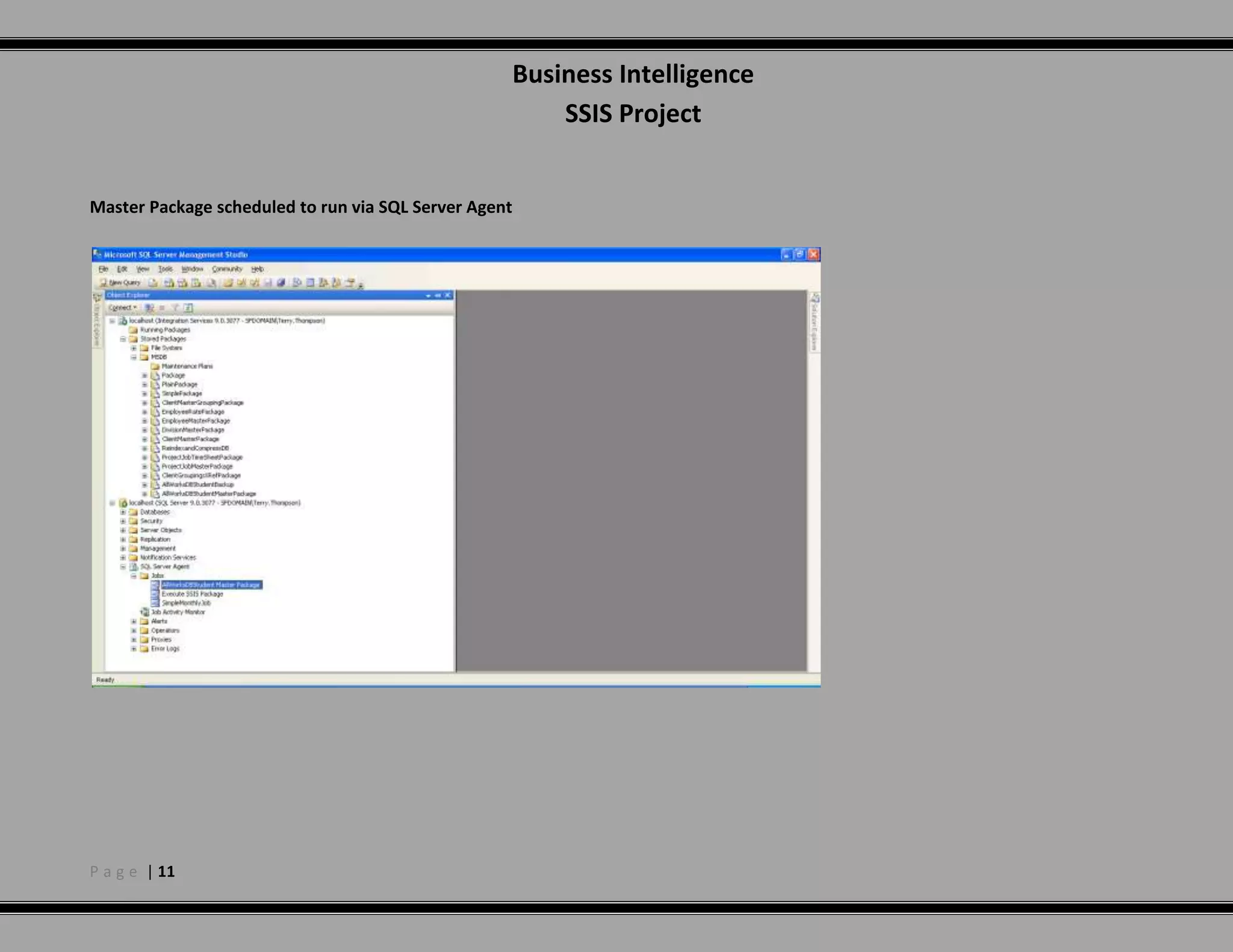

This document describes a project that uses SQL Server Integration Services (SSIS) to load data from multiple Excel spreadsheets and CSV files into a SQL Server 2005 database. The SSIS packages extract, transform, and load the source data into the destination database tables, performing data validation and error handling along the way. A master package runs the ETL packages in sequence and sends completion emails.
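
The control flow described above can be illustrated with a small sketch. This is not SSIS itself: Python stands in for the packages, and every name here (`run_etl_package`, `run_master`, `PackageResult`, the `notify` callback) is hypothetical. The sketch assumes CSV input held in memory, treats "validation" as a required-column check, replaces the database load with an in-memory list, and replaces the completion email with a callback. Stopping at the first failed package is also an assumption, since the summary does not say how the master package reacts to failures.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class PackageResult:
    """Outcome of one ETL package run (hypothetical structure)."""
    name: str
    loaded: list = field(default_factory=list)    # rows that passed validation
    rejected: list = field(default_factory=list)  # (row, reason) pairs


def run_etl_package(name, csv_text, required_columns):
    """Extract rows from CSV text, trim values, and split valid rows from invalid ones."""
    result = PackageResult(name)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Transform: strip whitespace; short rows yield None values, treated as empty.
        cleaned = {k: (v or "").strip() for k, v in row.items() if k is not None}
        # Validate: every required column must be non-empty (assumed rule).
        missing = [c for c in required_columns if not cleaned.get(c)]
        if missing:
            result.rejected.append((cleaned, "missing: " + ", ".join(missing)))
        else:
            result.loaded.append(cleaned)  # stands in for the database insert
    return result


def run_master(packages, notify):
    """Run each package in order and report the outcome through notify()."""
    results = []
    for name, csv_text, required_columns in packages:
        try:
            result = run_etl_package(name, csv_text, required_columns)
        except Exception as exc:
            notify(f"{name} failed: {exc}")
            break  # assumption: later packages do not run after a failure
        results.append(result)
        notify(f"{name} completed: {len(result.loaded)} loaded, "
               f"{len(result.rejected)} rejected")
    return results
```

Passing `print` as `notify` is enough to try the sketch. Keeping rejected rows alongside a reason, rather than discarding them, loosely mirrors how an ETL flow can redirect error rows for later review.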