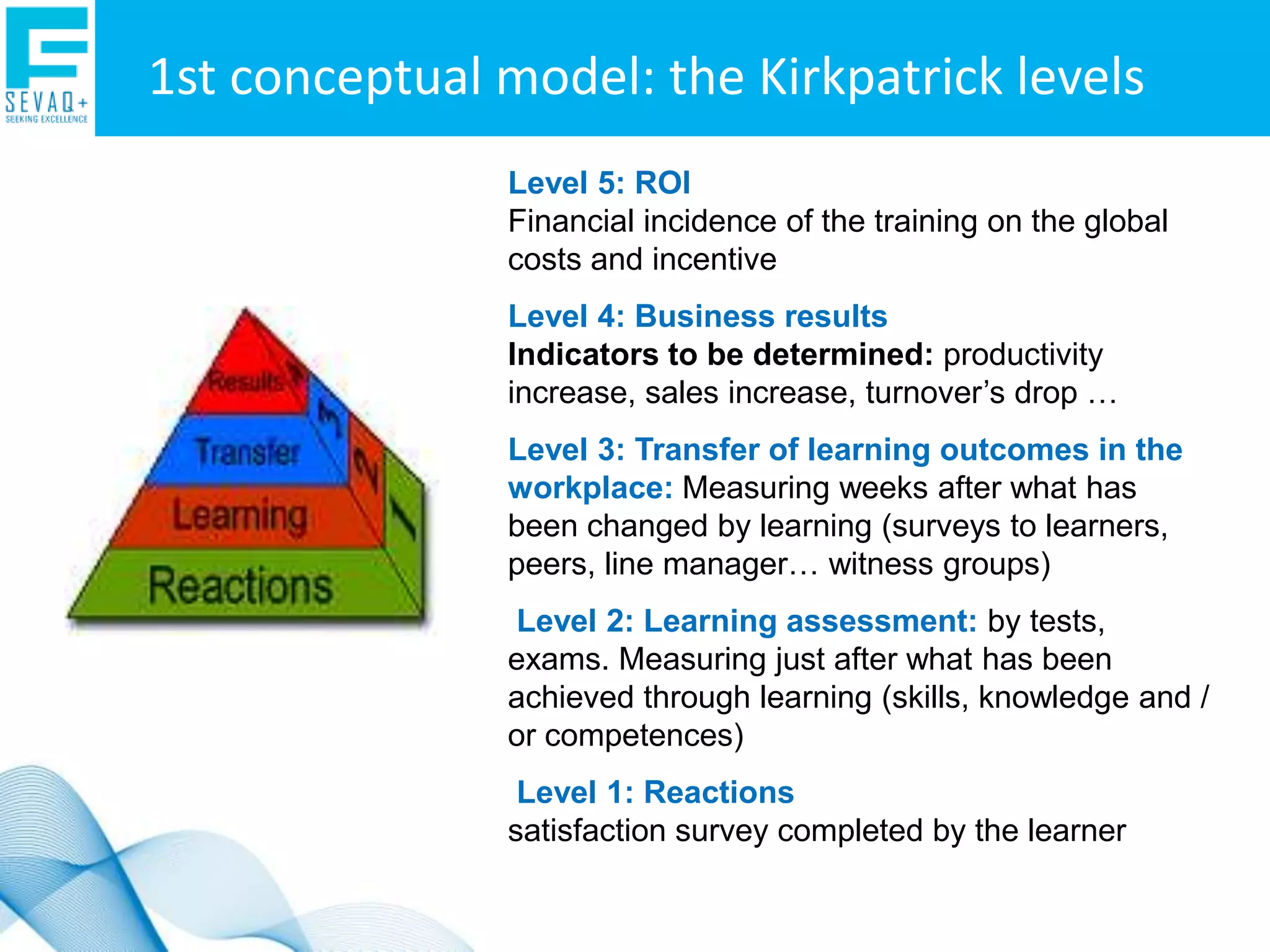

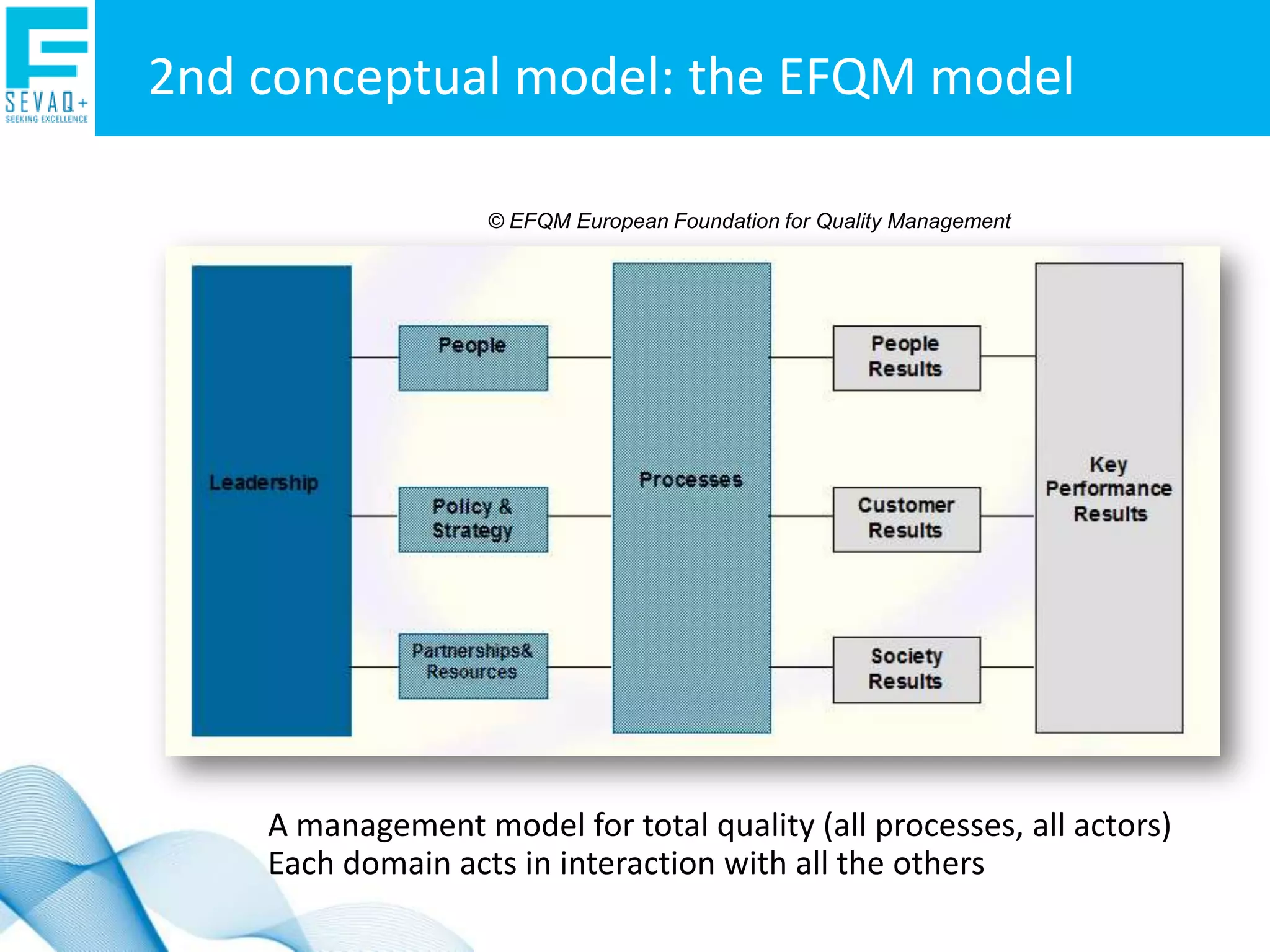

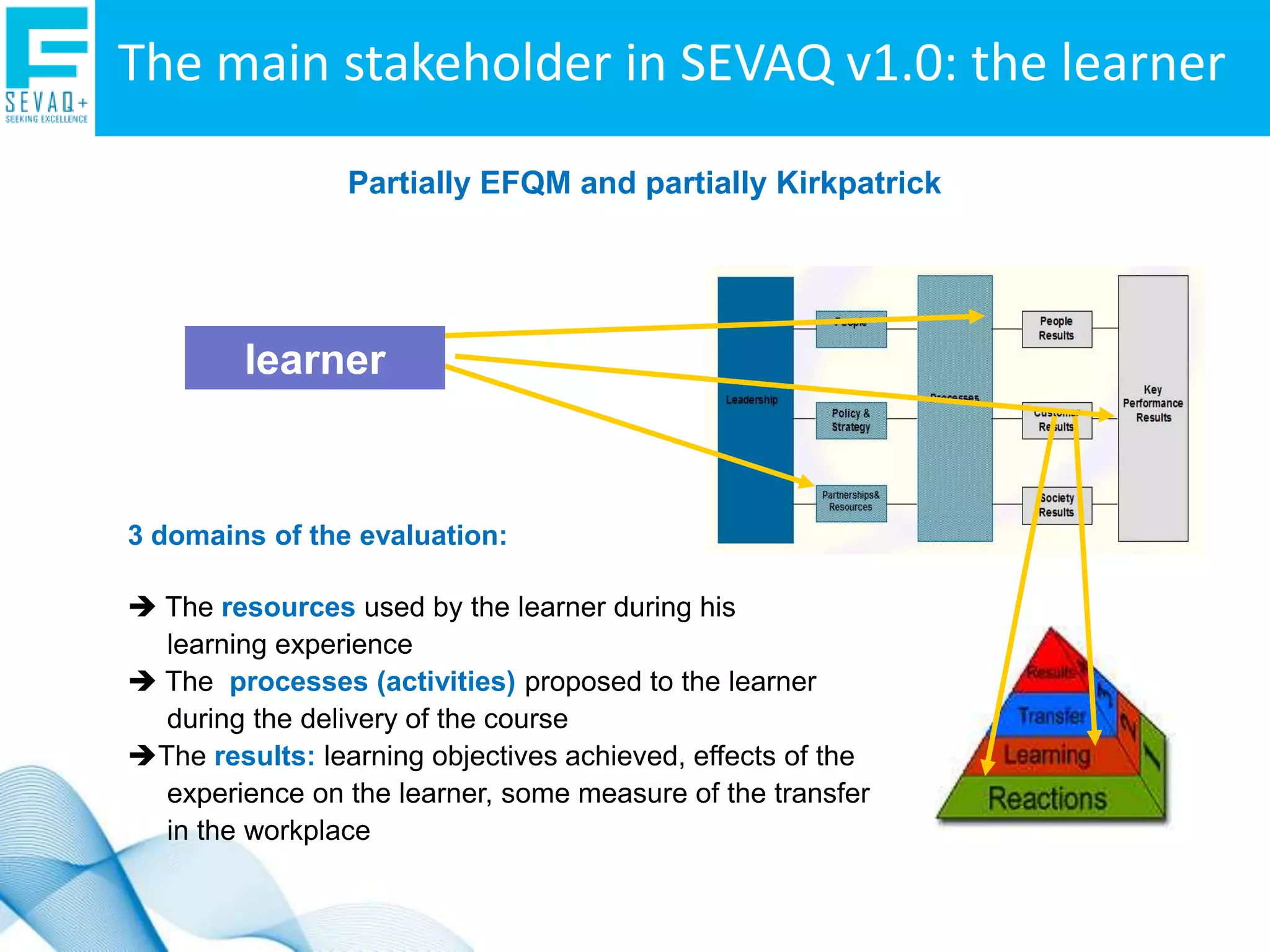

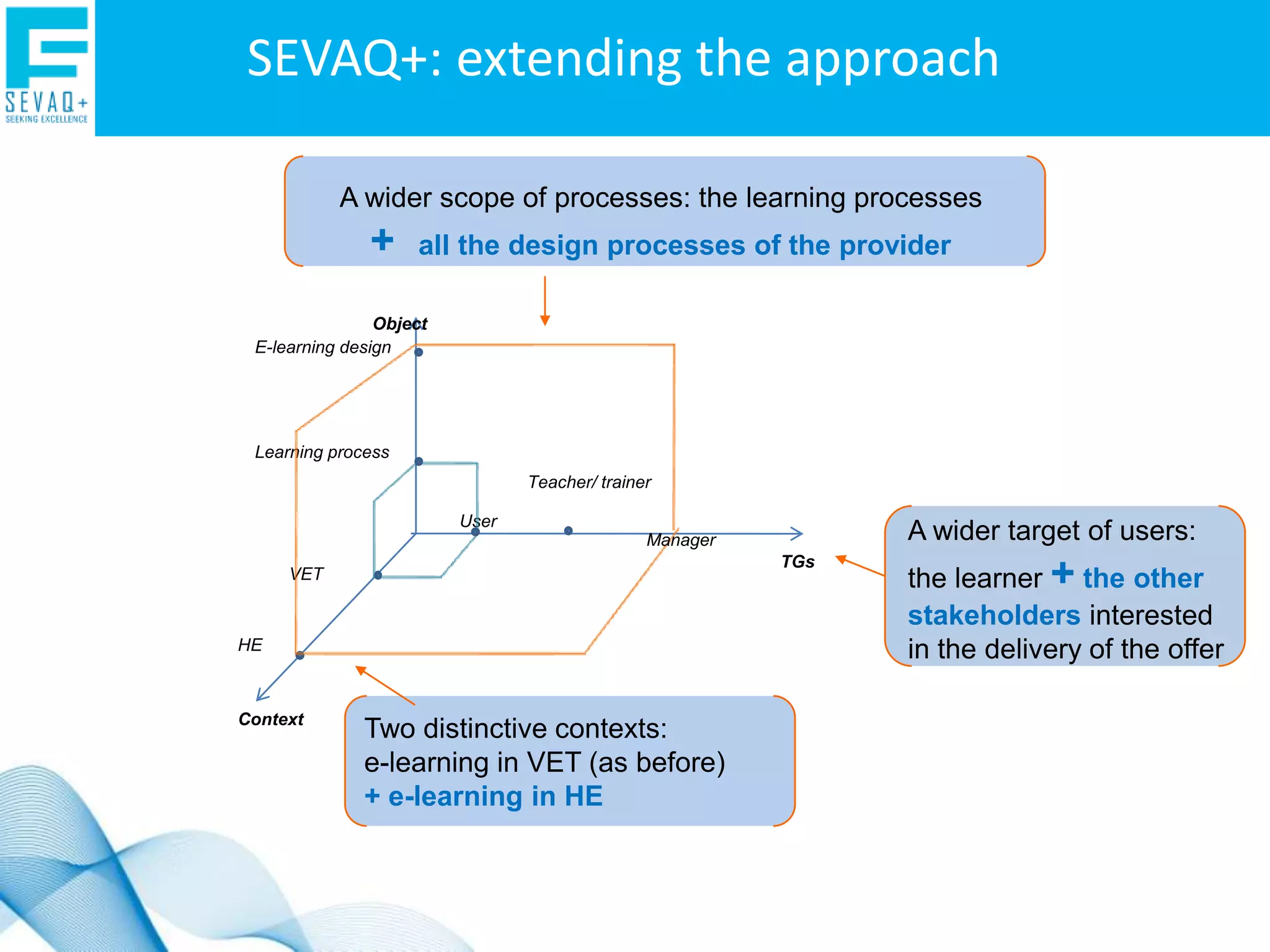

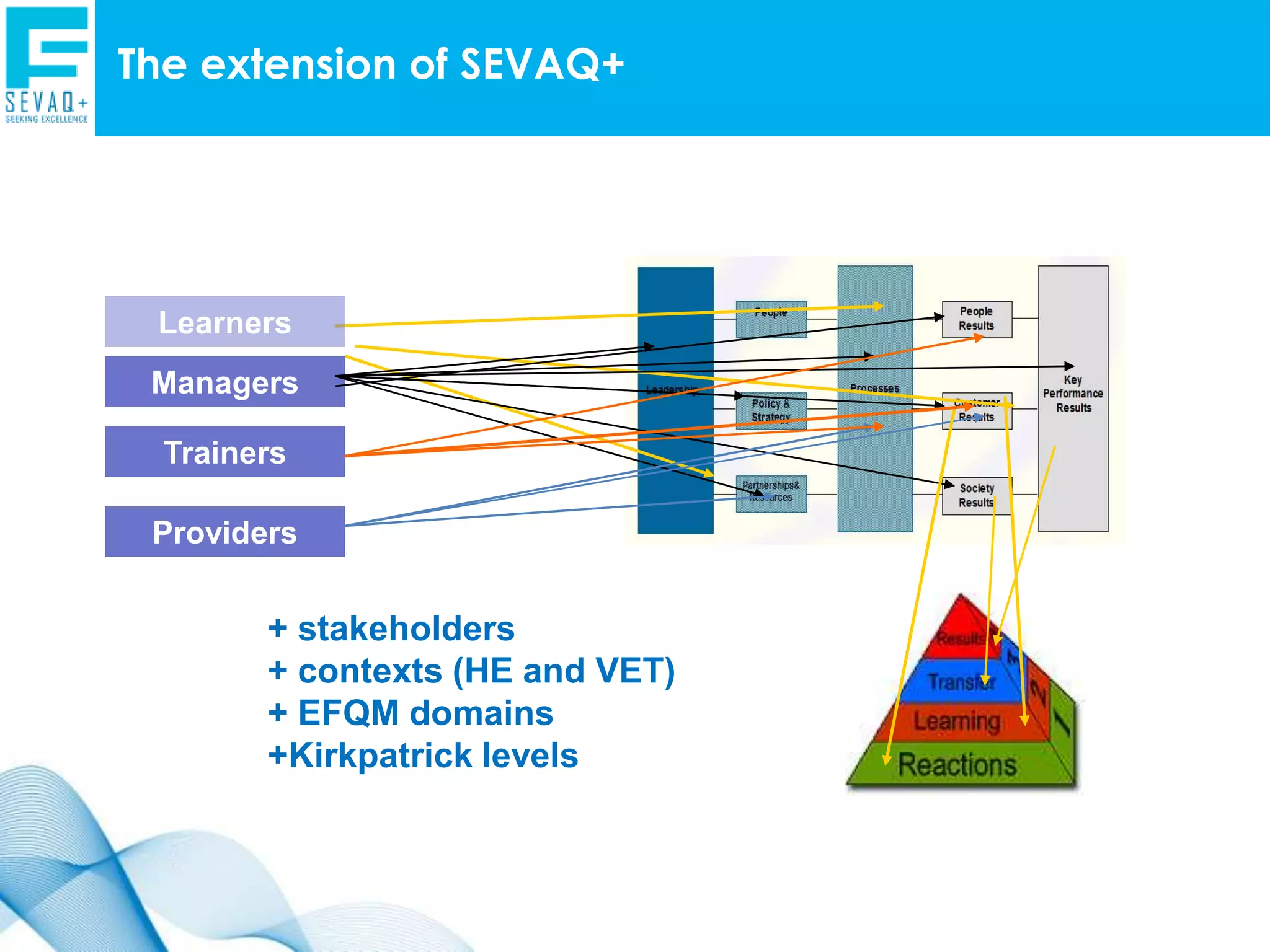

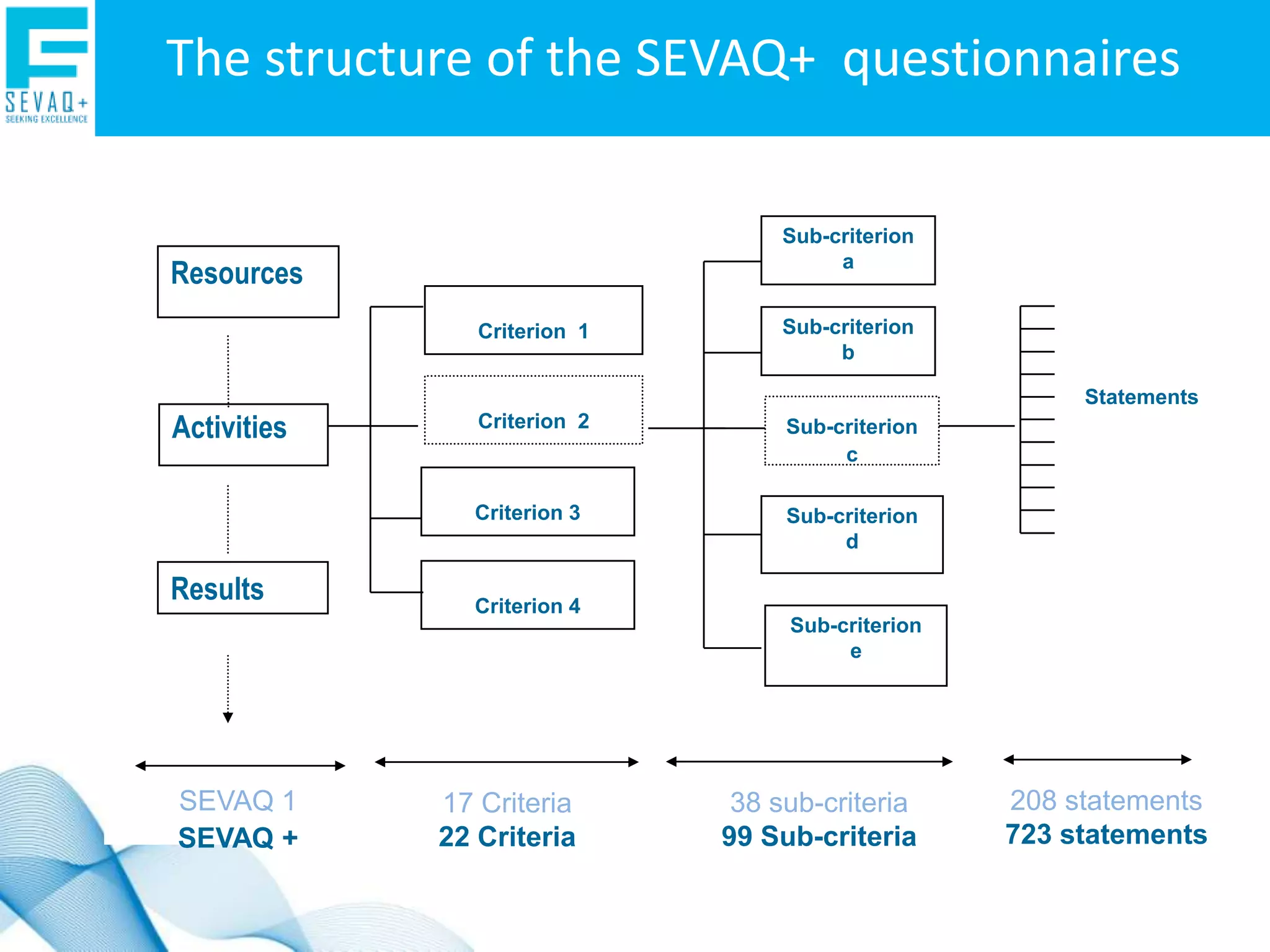

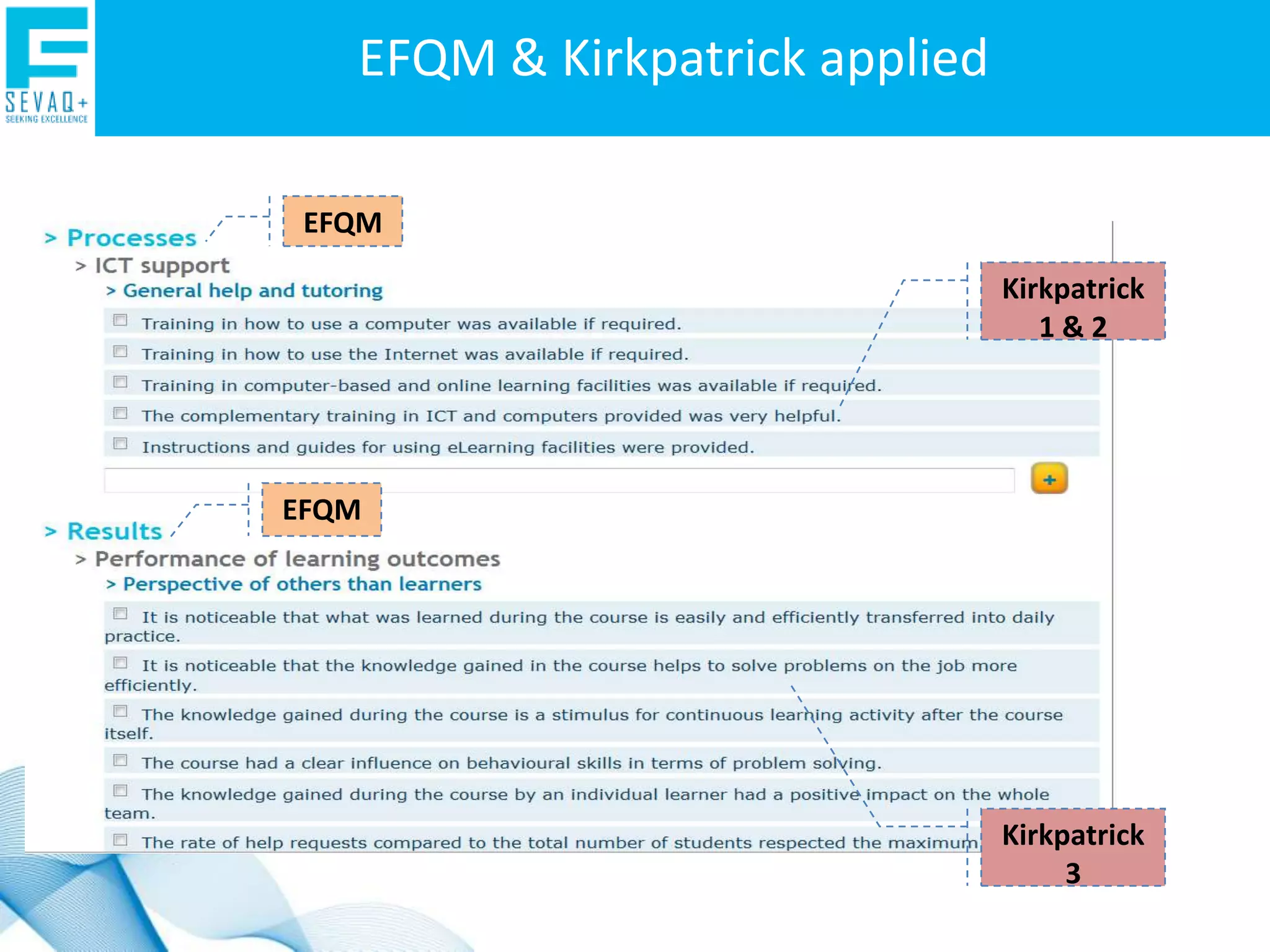

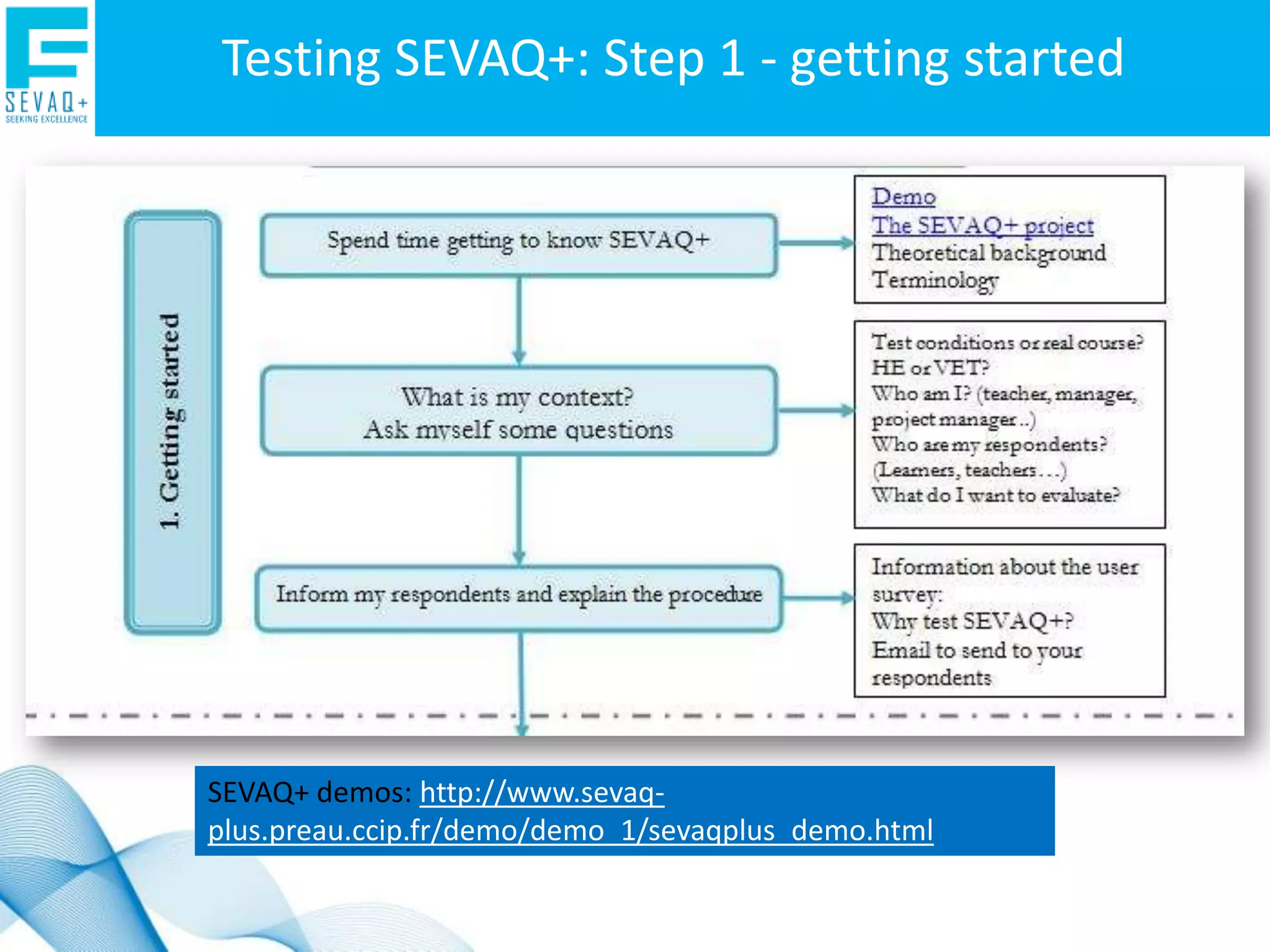

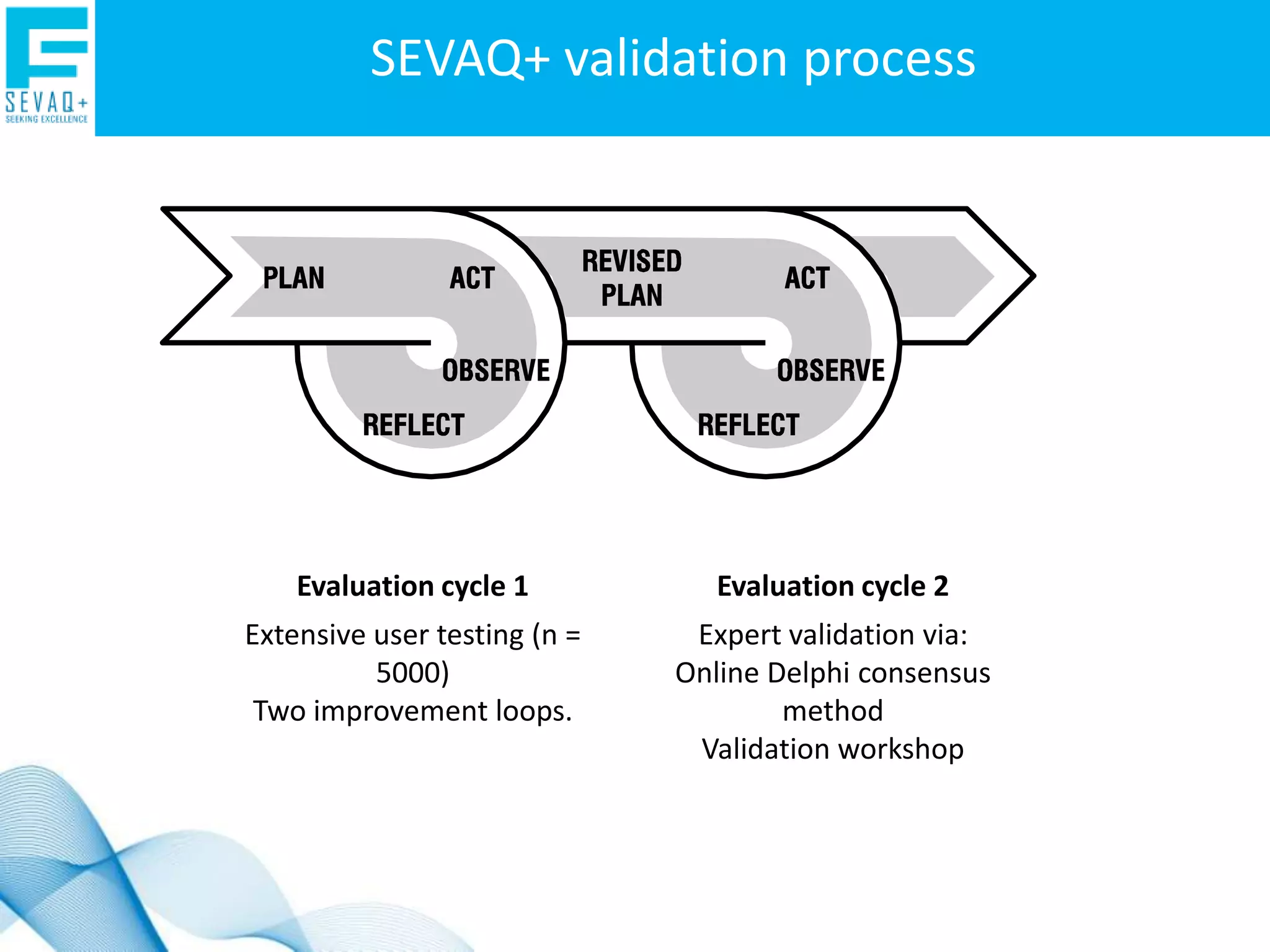

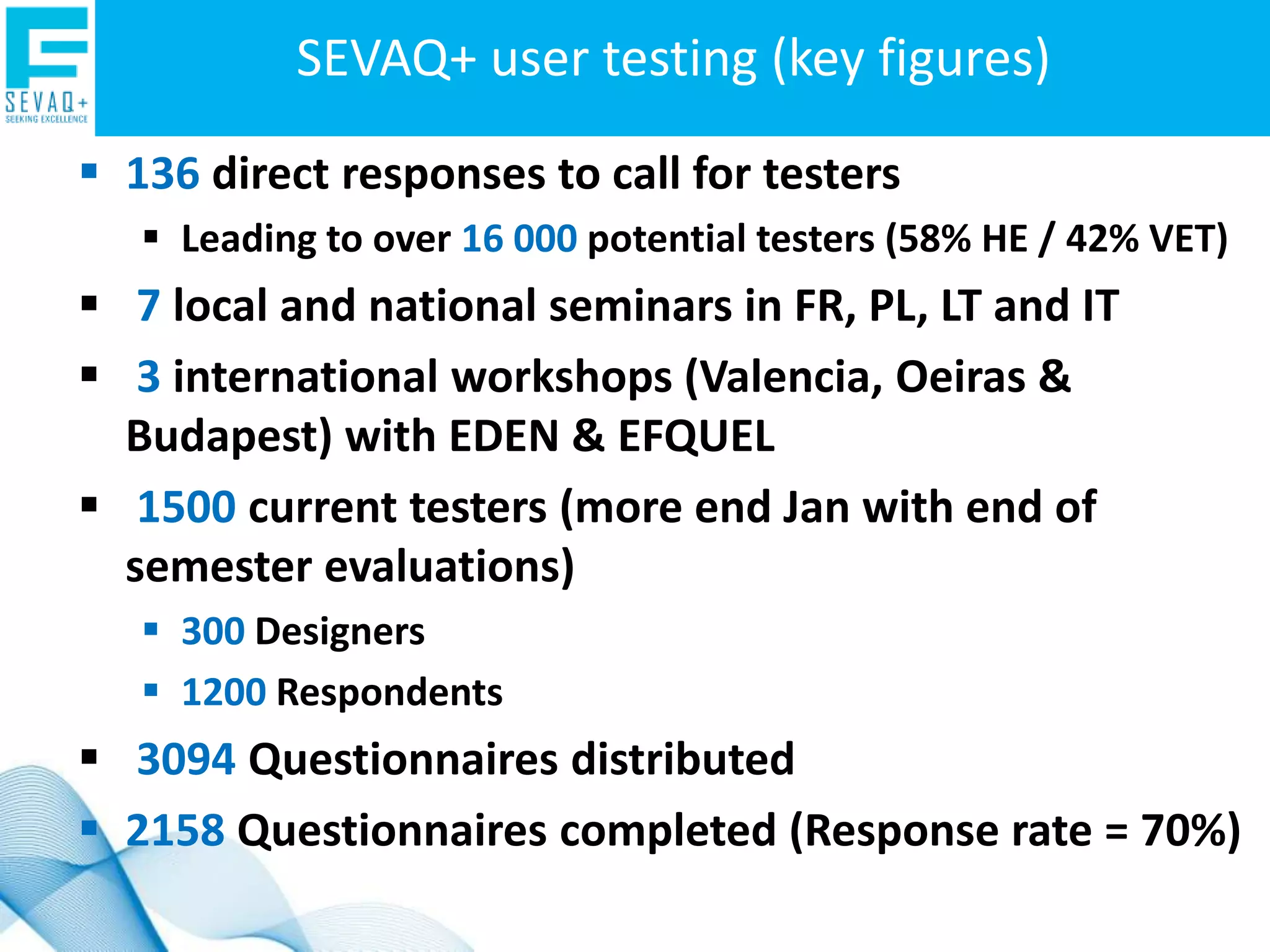

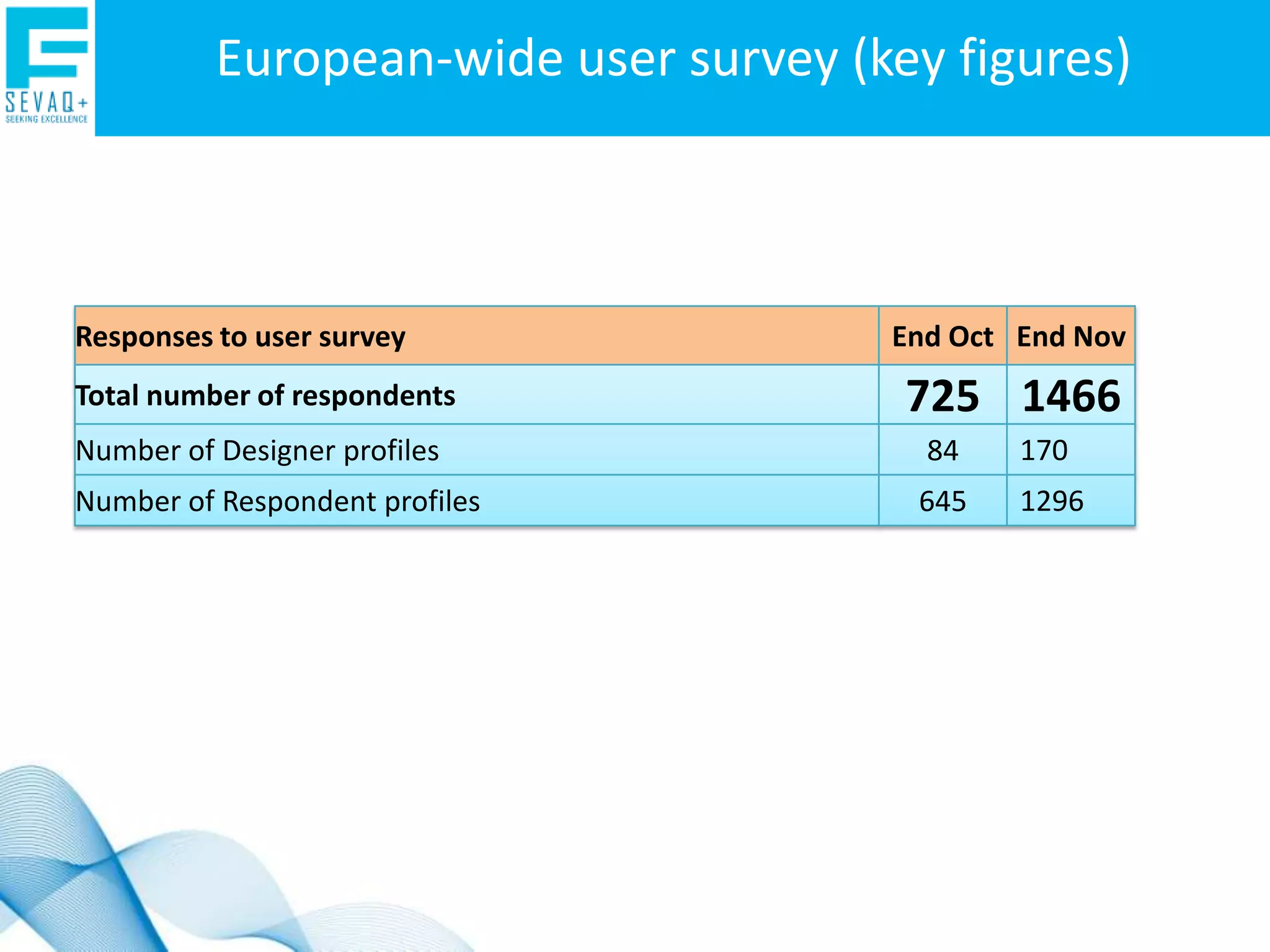

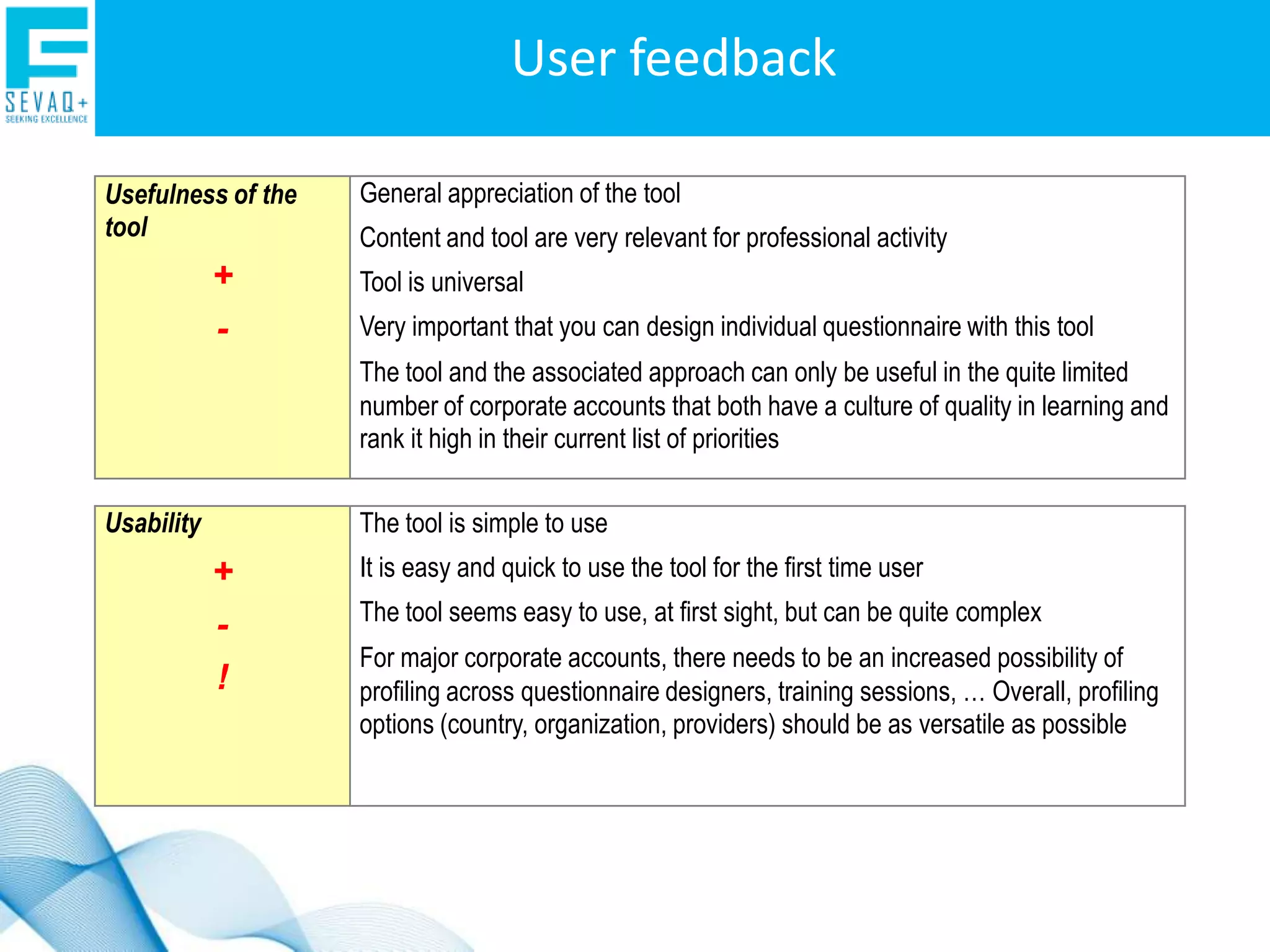

SEVAQ+ is a tool and an approach for self-evaluating the quality of technology-enhanced learning. It lets users create questionnaires based on recognized quality frameworks, analyze the results from different stakeholders, and use those results to improve courses. The project aims to support the quality and attractiveness of vocational and higher education. SEVAQ+ extends an earlier version to cover additional stakeholders such as managers and trainers, additional contexts such as vocational and higher education, and additional frameworks such as the EFQM and Kirkpatrick models. The tool has been tested by more than 1,500 users across Europe as part of its validation.