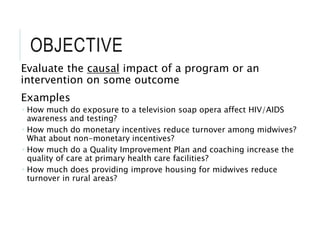

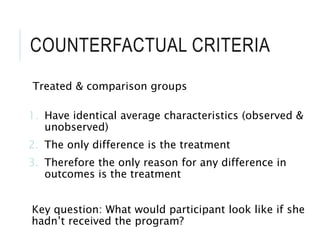

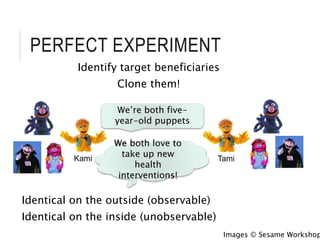

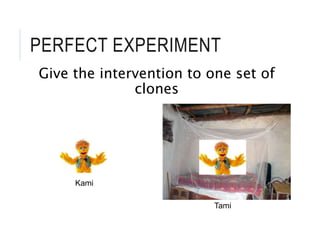

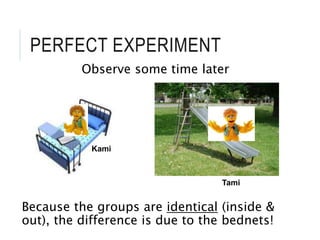

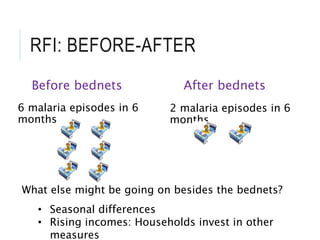

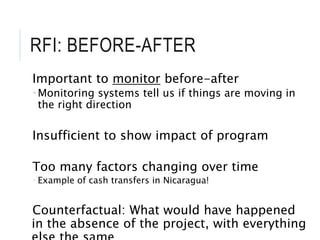

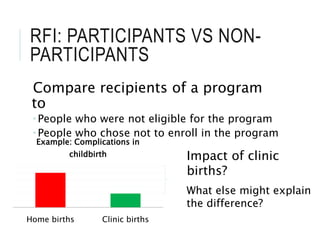

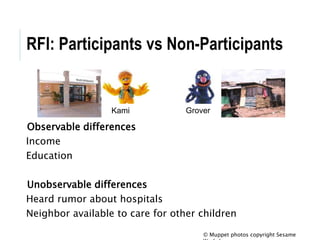

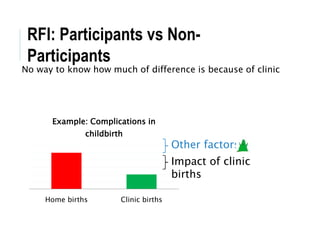

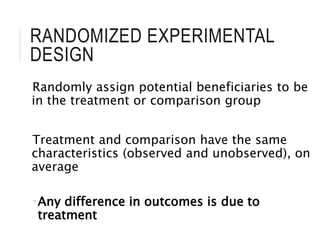

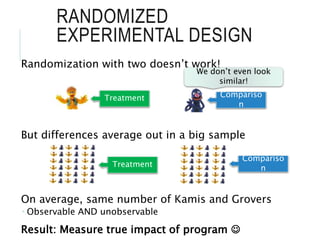

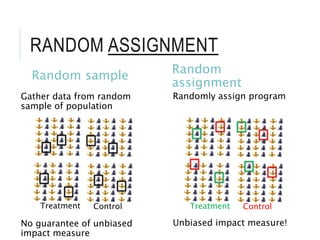

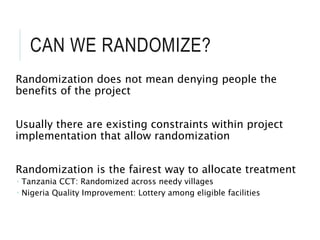

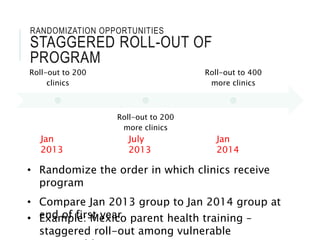

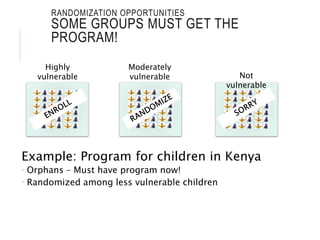

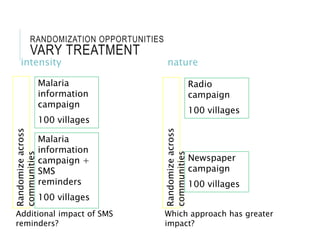

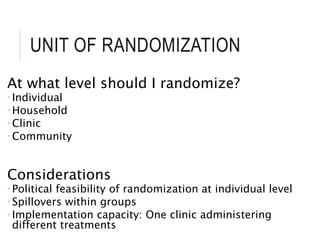

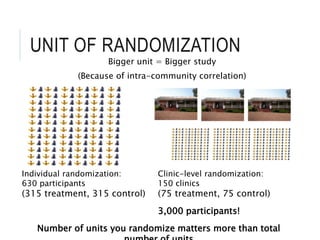

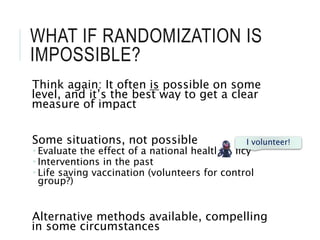

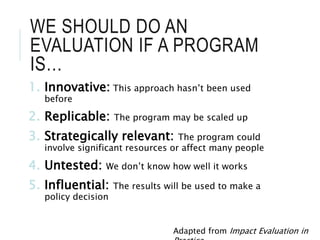

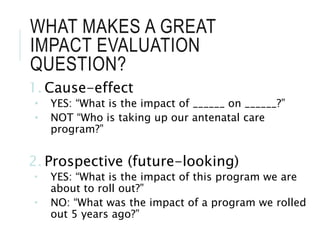

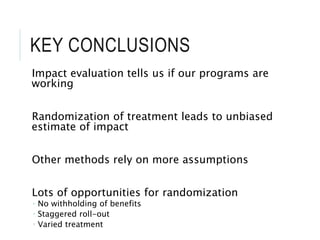

The document explains why impact evaluation matters for assessing how programs affect well-being in Nigeria, and stresses that a counterfactual (an estimate of what would have happened without the program) is needed to identify a program's true impact. It presents randomized experimental design as a method for unbiased assessment and gives examples of interventions aimed at improving health. Its key conclusions are that evaluation is necessary to determine whether a program is effective, and that opportunities for randomization arise in a variety of contexts.
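The role of randomization in supplying a counterfactual can be illustrated with a small simulation. This is a minimal sketch and not taken from the document: the function name `simulate_randomized_evaluation`, the population size, the baseline outcome distribution, and the assumed constant program effect are all hypothetical and chosen only for illustration. Because units are assigned to the program at random, the control group's average outcome stands in for what the treated group would have experienced without the program, so the difference in group means approximates the assumed effect.

```python
import random
from statistics import mean


def simulate_randomized_evaluation(n=10_000, true_effect=2.0, seed=0):
    """Estimate a program's impact from a simulated randomized design.

    All values are hypothetical: outcomes are simulated, not real data.
    """
    rng = random.Random(seed)

    # Outcome each unit would have WITHOUT the program (the counterfactual).
    baseline = [rng.gauss(10.0, 1.0) for _ in range(n)]

    # Random assignment: shuffle unit ids and treat the first half.
    ids = list(range(n))
    rng.shuffle(ids)
    treated = set(ids[: n // 2])

    # Treated units receive the assumed constant effect; controls do not.
    treated_outcomes = [baseline[i] + true_effect for i in range(n) if i in treated]
    control_outcomes = [baseline[i] for i in range(n) if i not in treated]

    # Difference in means is the impact estimate under randomization.
    return mean(treated_outcomes) - mean(control_outcomes)


if __name__ == "__main__":
    estimate = simulate_randomized_evaluation()
    print(f"Estimated impact: {estimate:.2f} (assumed true effect: 2.00)")
```

With a large simulated sample the estimate should land close to the assumed effect. If assignment were instead driven by the baseline outcome (for example, enrolling only the better-off units), the same difference in means would mix the program's effect with pre-existing differences between the groups.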