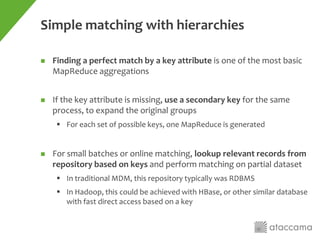

This document discusses using Hadoop as a platform for master data management. It begins by explaining what master data management is and its key components. It then discusses how MDM relates to big data and some of the challenges of implementing MDM on Hadoop. The document provides a simplified example of traditional MDM and how it could work on Hadoop. It outlines some common approaches to matching and merging data on Hadoop. Finally, it discusses a sample MDM tool that could implement matching in Hadoop through MapReduce jobs and provide online MDM services through an accessible database.

![Step 1 | Bulk matching

Matching Engine

[MapReduce]

MDM Repository

[HDFS file]

Source 1

[Full Extract]

Source 2

[Full Extract]](https://image.slidesharecdn.com/th1420-1500glazenslidedecknew1120a-140424203519-phpapp02/85/Using-Hadoop-as-a-platform-for-Master-Data-Management-23-320.jpg)

![Source Increment Extract

[HDFS file]

Step 2 | Incremental bulk matching

Matching Engine

[MapReduce]

New MDM Repository

[HDFS file]

Old MDM Repository

[HDFS file]](https://image.slidesharecdn.com/th1420-1500glazenslidedecknew1120a-140424203519-phpapp02/85/Using-Hadoop-as-a-platform-for-Master-Data-Management-24-320.jpg)

![Step 3 | Online MDM Services

Matching Engine

[Non-Parallel Execution]

MDM Repository

[Online Accessible DB]

Online or Microbatch

[Increment]

1. Online request comes through designated interface

2. Matching engine asks MDM repository for all related records,

based on defined matching keys

3. Repository returns all relevant records that were previously

stored

4. Matching engine computes the matching on the available dataset

and stores new results (changes) back into the repository

1

2

3

4](https://image.slidesharecdn.com/th1420-1500glazenslidedecknew1120a-140424203519-phpapp02/85/Using-Hadoop-as-a-platform-for-Master-Data-Management-25-320.jpg)

![Step 4 | Complex Scenario

MDM Repository

[Online Accessible DB]

Online or Microbatch

[Increment]

Matching Engine

SMALL DATASET

[Non-Parallel Execution]

LARGE DATASET

[MapReduce]Size?

Source 1

[Full Extract]

Update

Repository

Full scan

Get](https://image.slidesharecdn.com/th1420-1500glazenslidedecknew1120a-140424203519-phpapp02/85/Using-Hadoop-as-a-platform-for-Master-Data-Management-26-320.jpg)

![Step 4 | Complex Scenario

MDM Repository

[Online Accessible DB]

Online or Microbatch

[Increment]

Matching Engine

SMALL DATASET

[Non-Parallel Execution]

LARGE DATASET

[MapReduce]Size?

Source 1

[Full Extract]

Full scan

Get

Update

Repository

Delta Detection

[MapReduce]](https://image.slidesharecdn.com/th1420-1500glazenslidedecknew1120a-140424203519-phpapp02/85/Using-Hadoop-as-a-platform-for-Master-Data-Management-27-320.jpg)