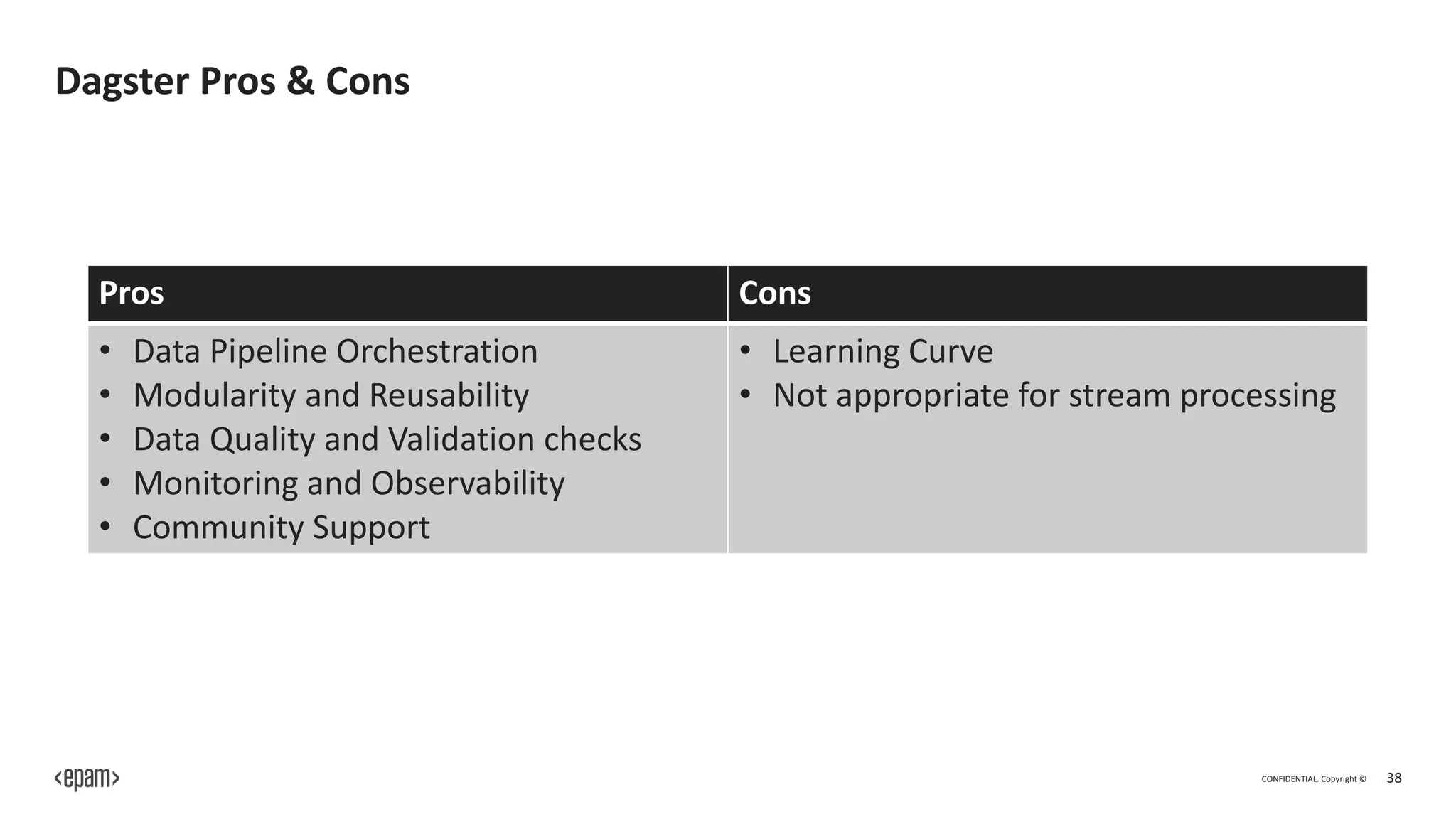

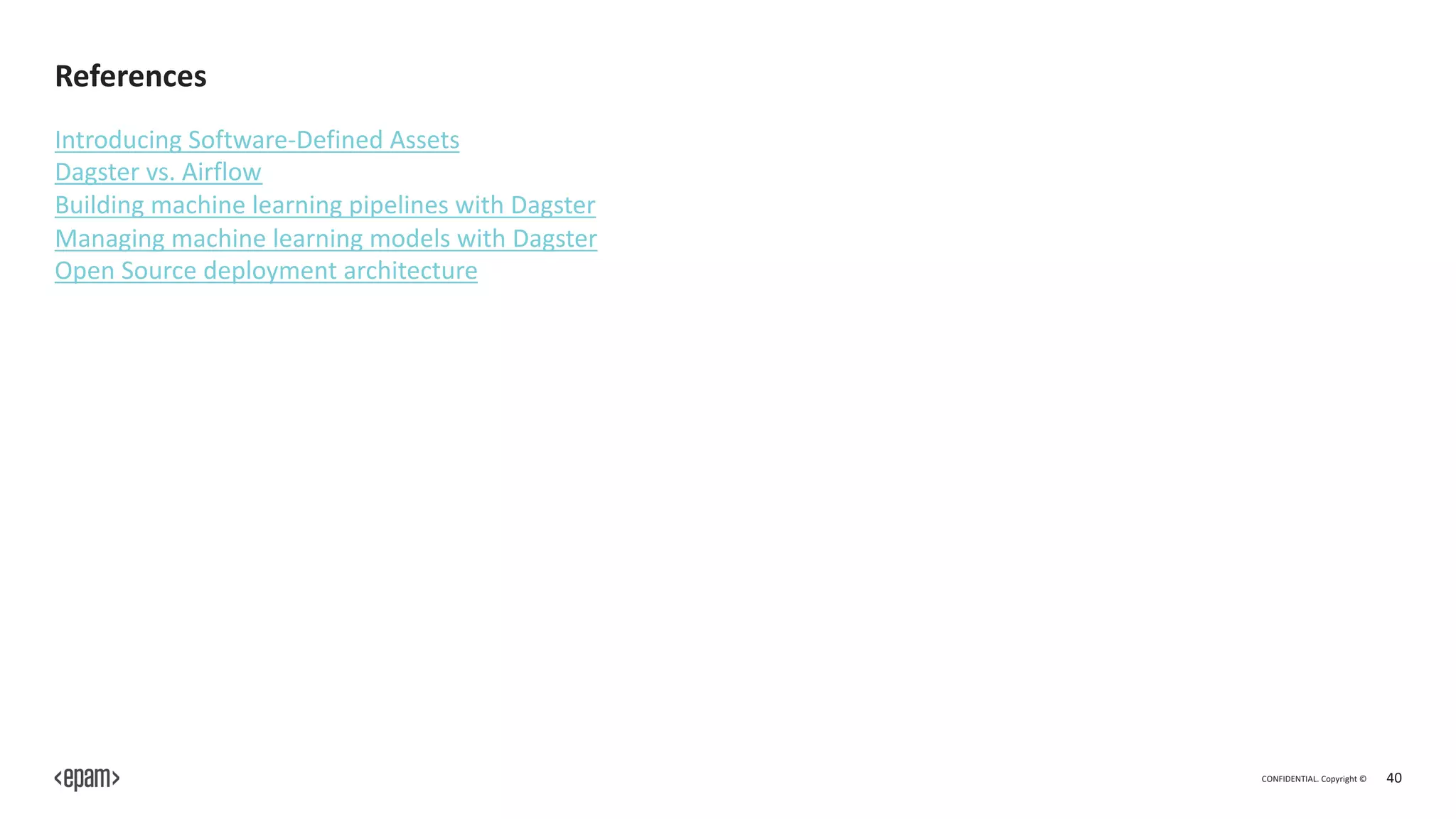

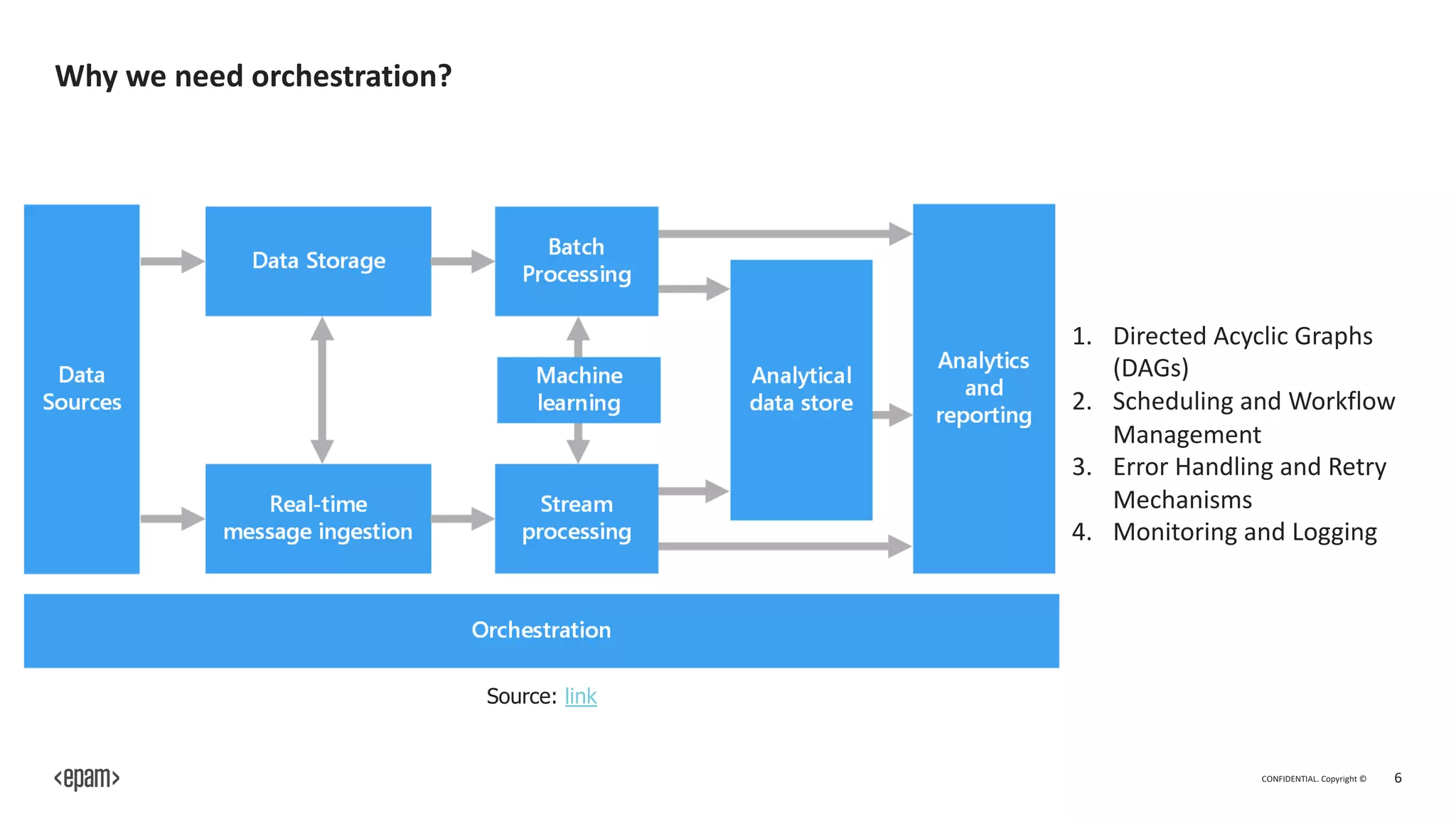

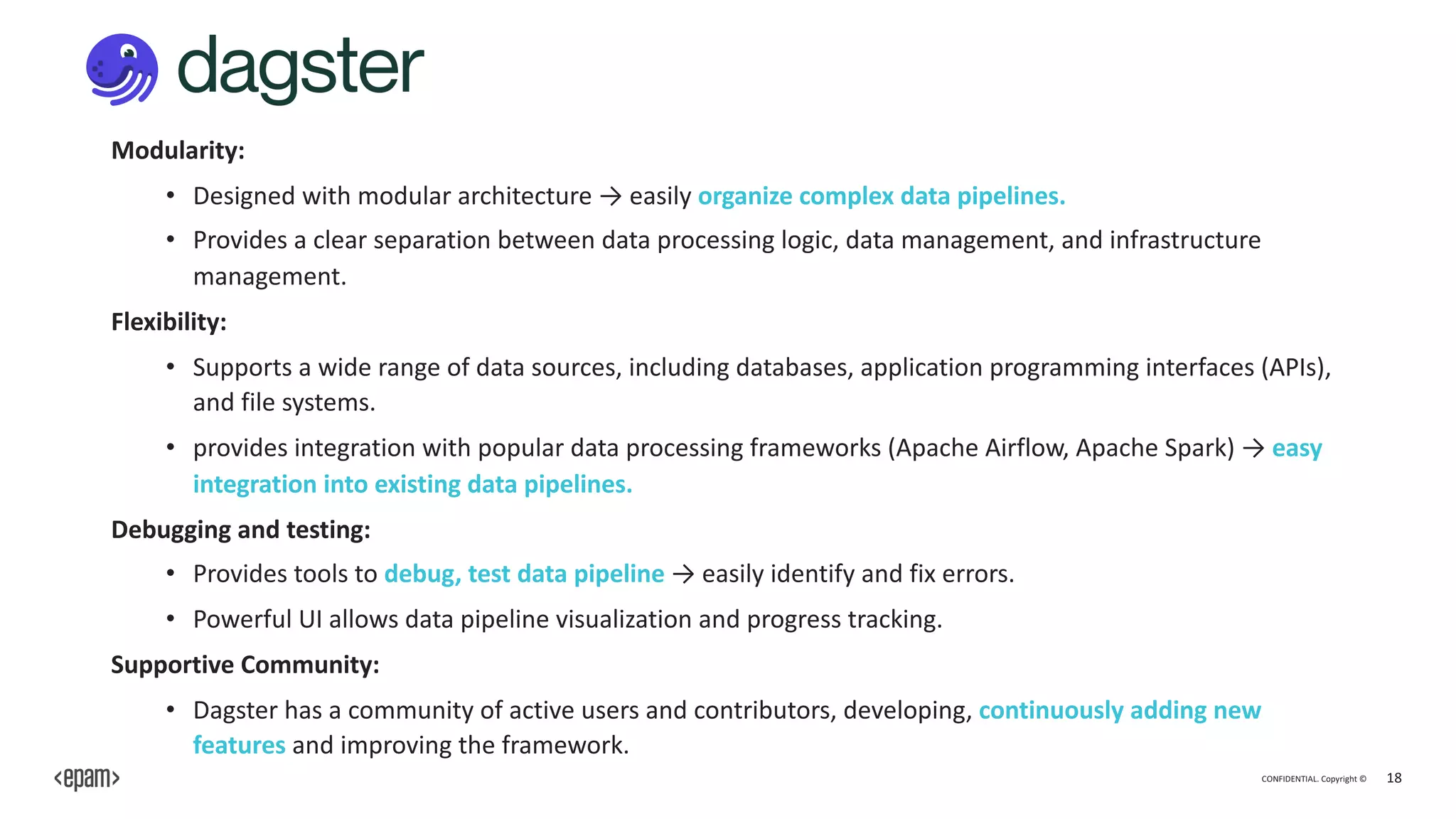

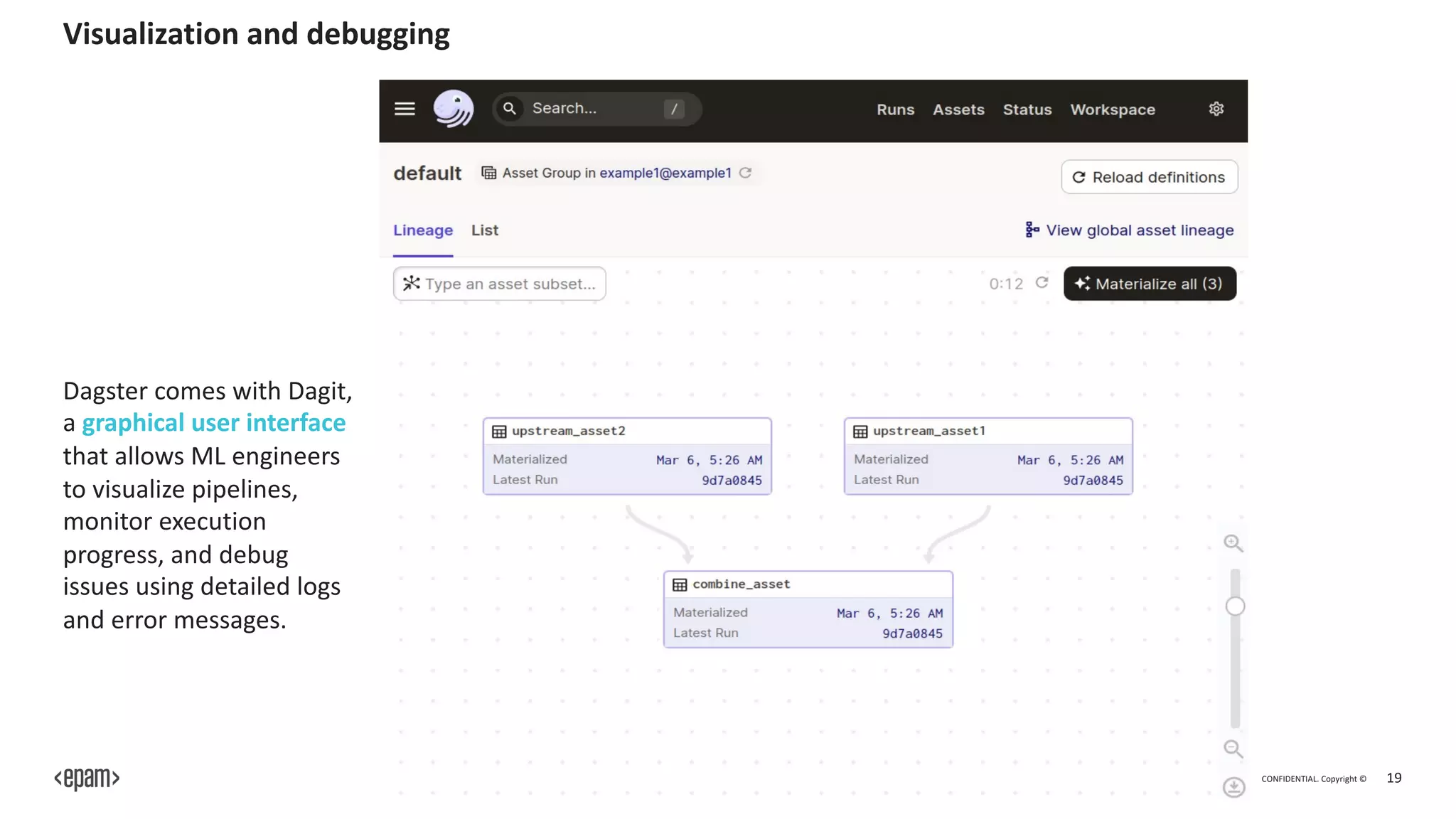

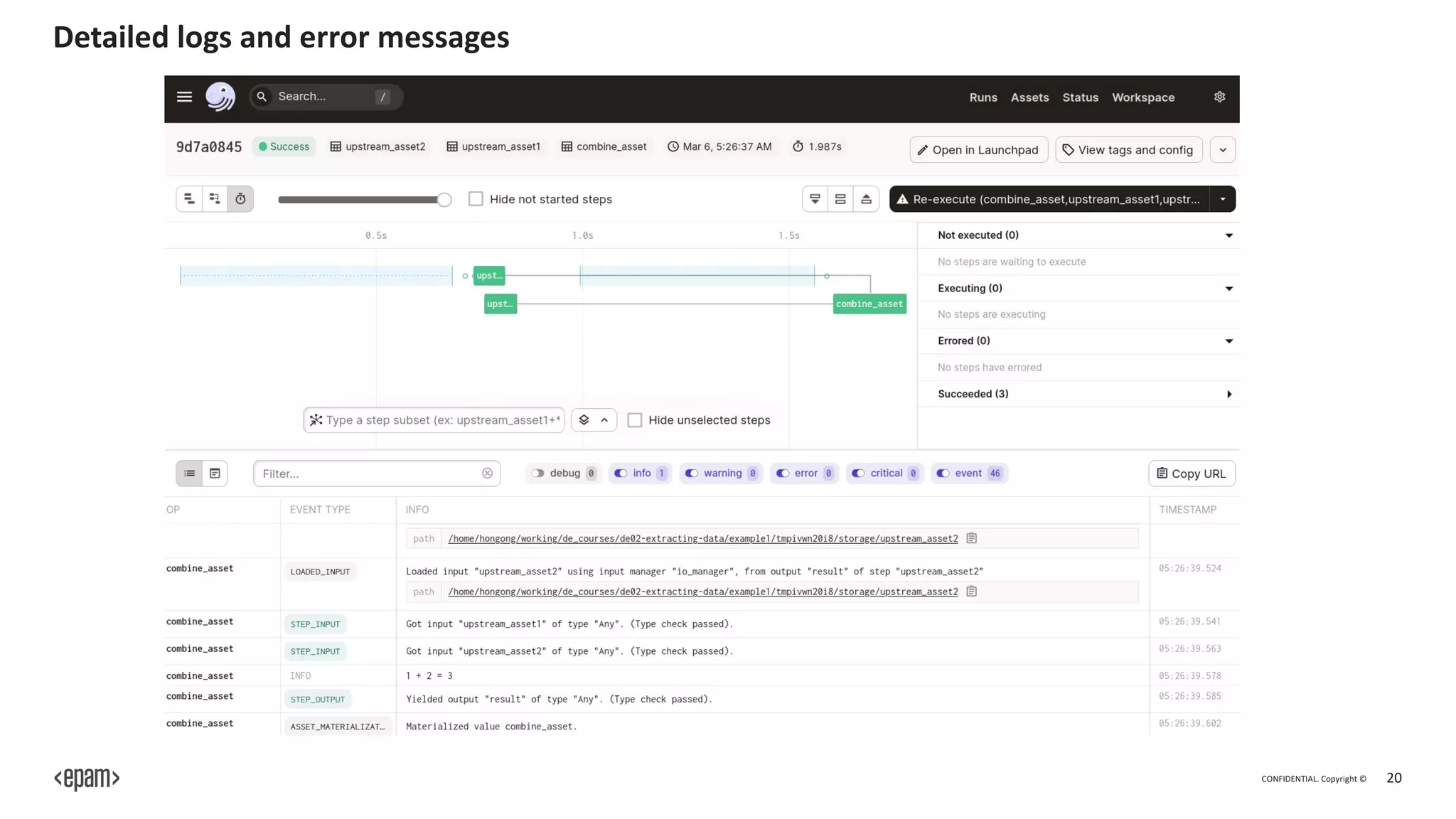

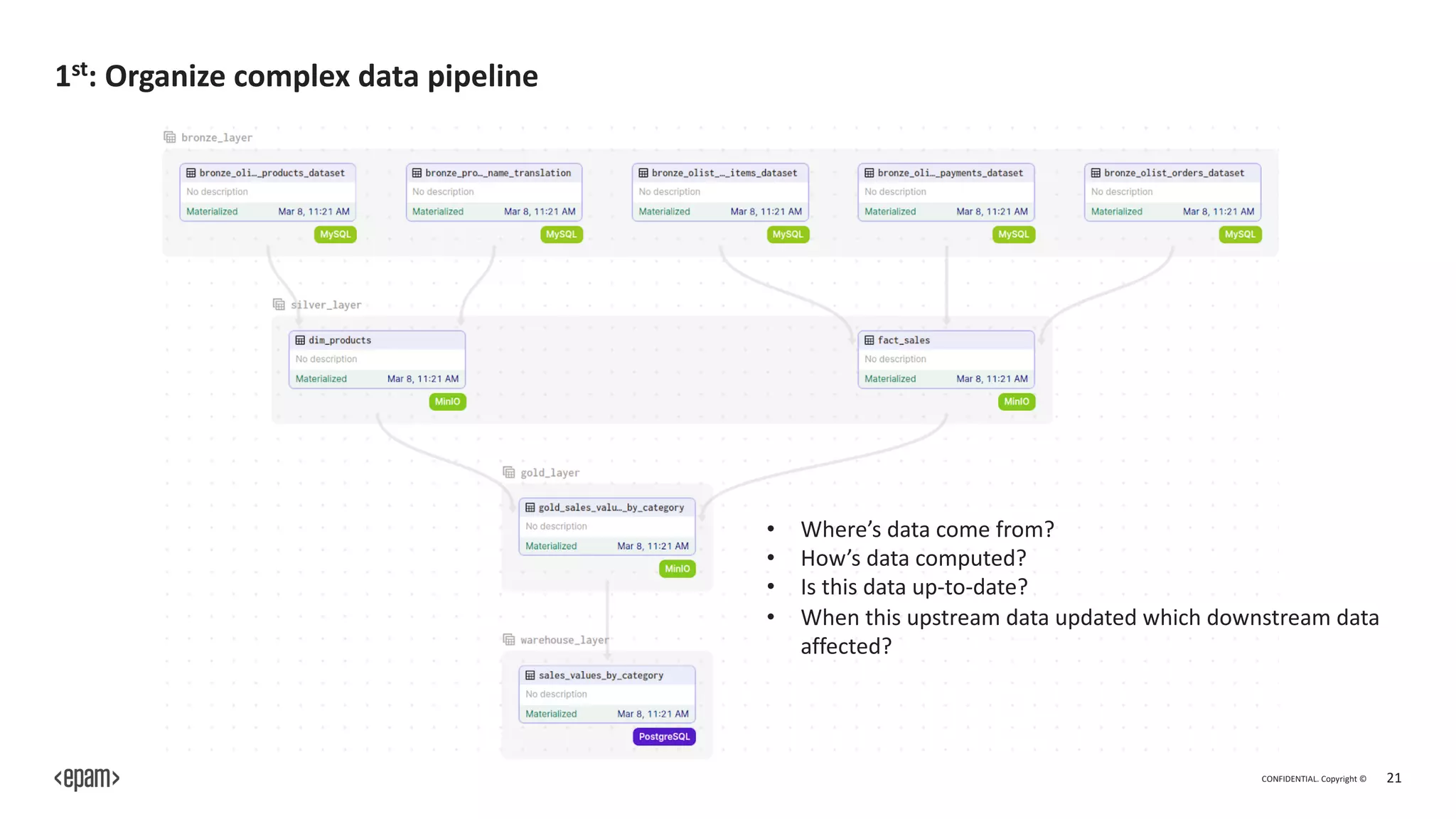

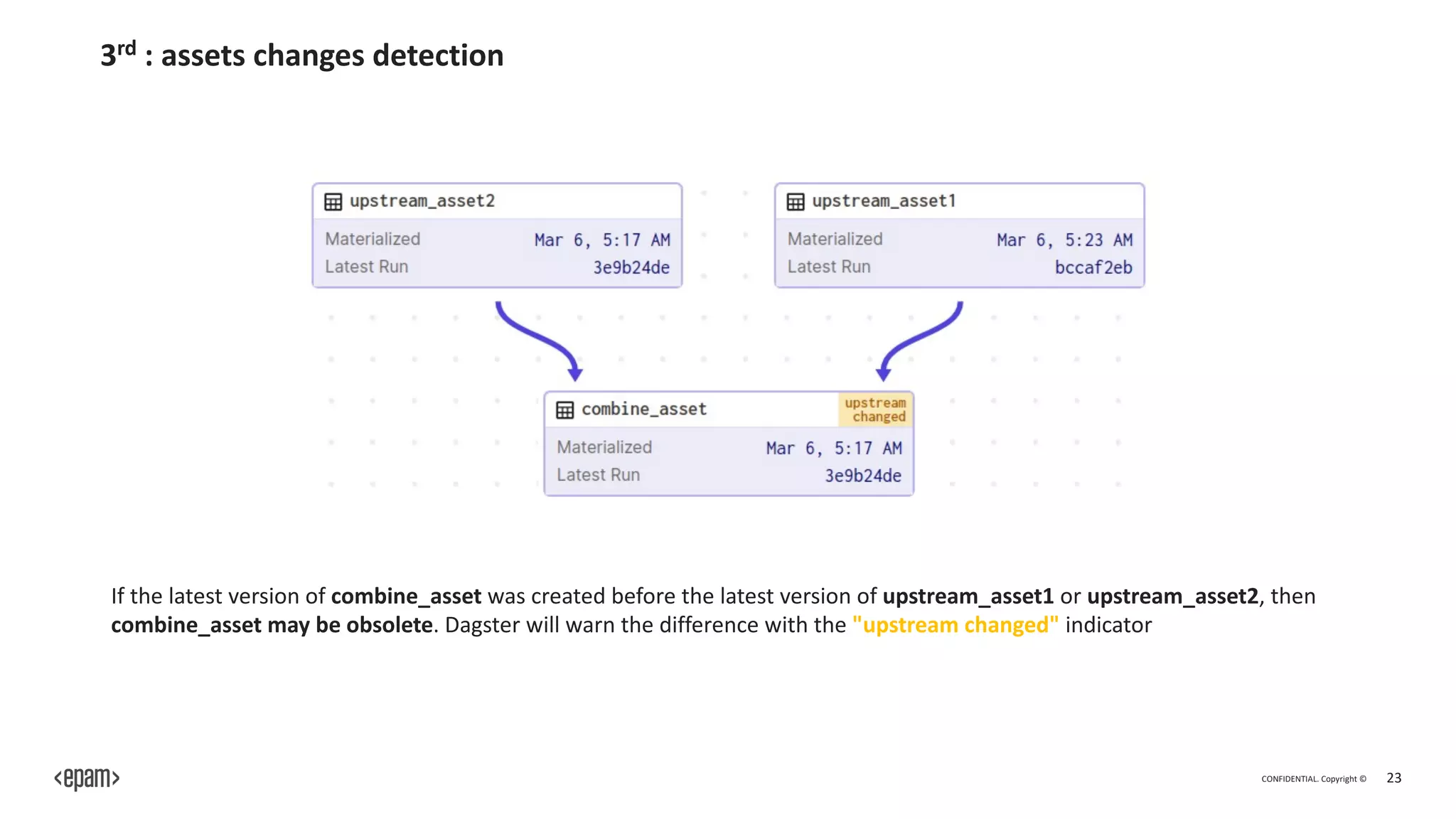

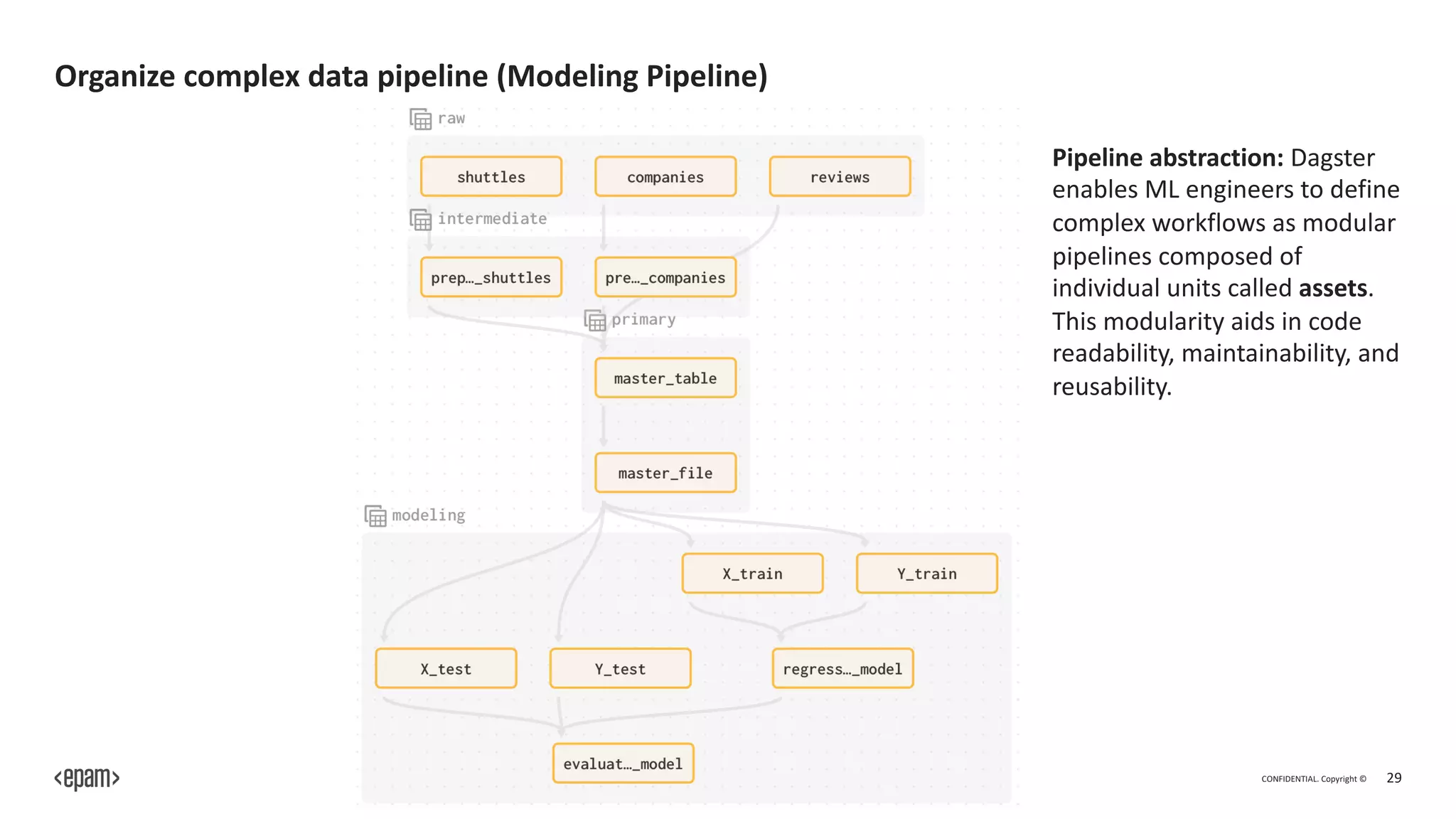

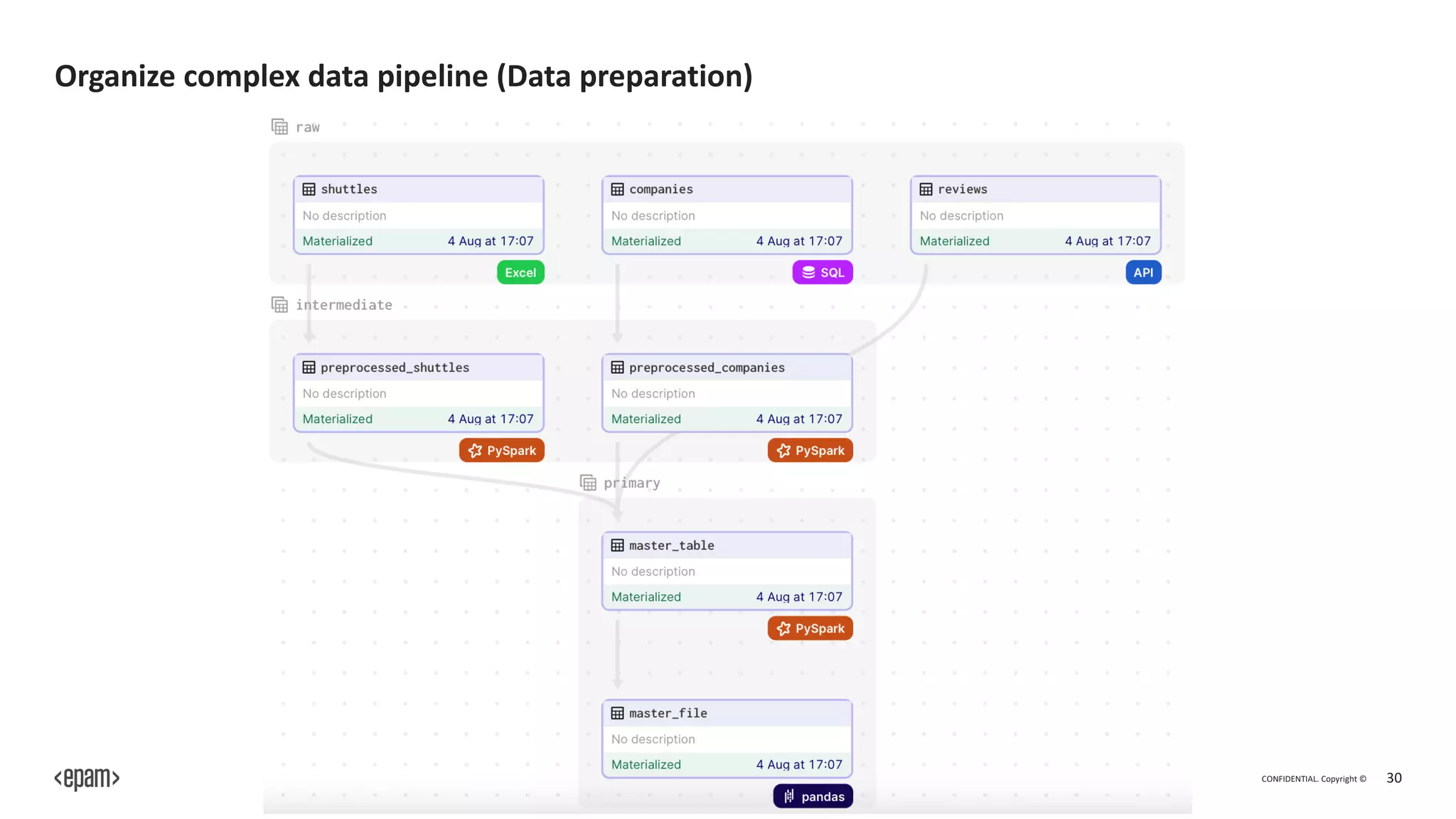

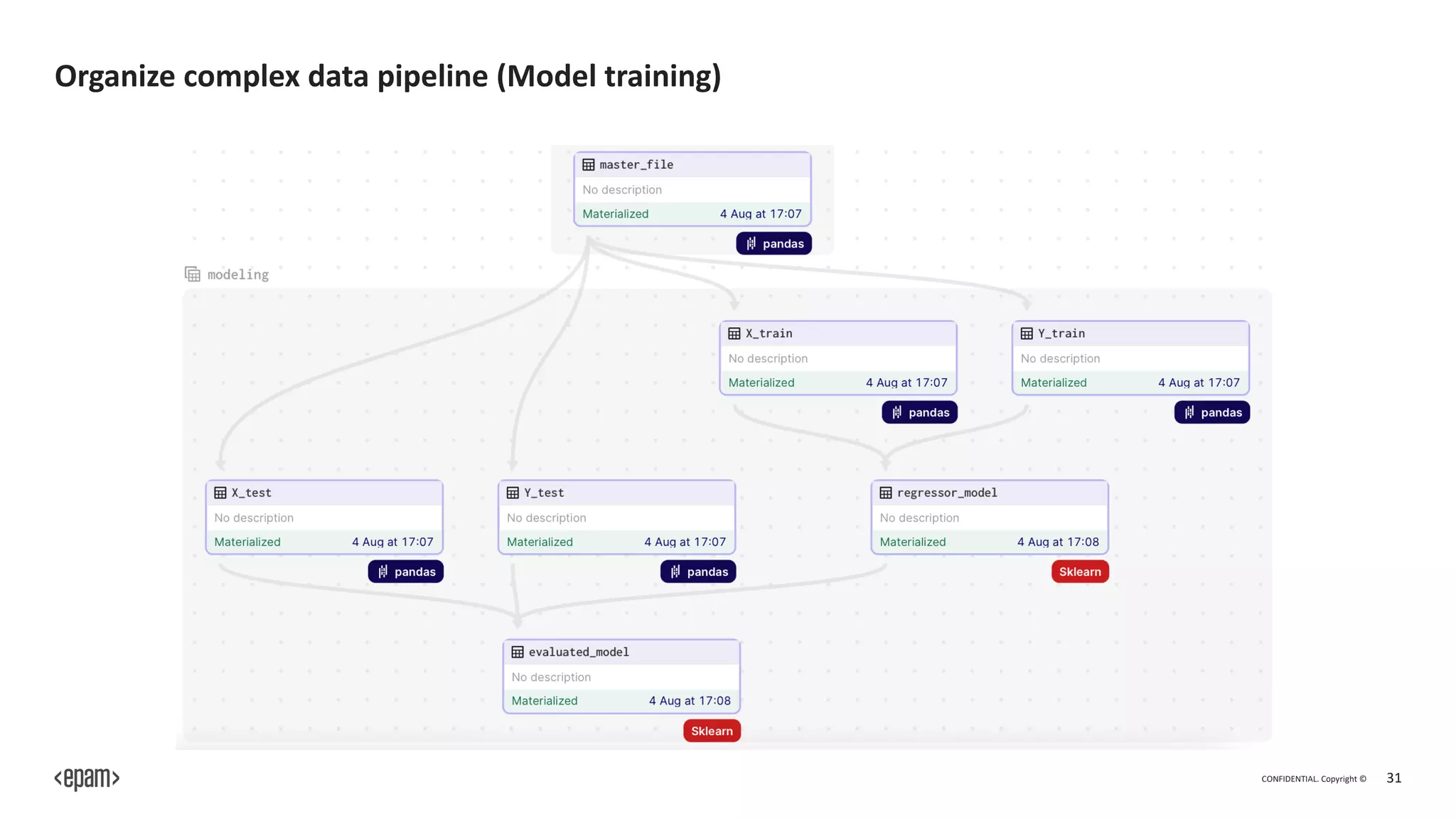

The document outlines Dagster, an open-source library for building ETL and machine learning systems, emphasizing its modular architecture, integration capabilities, and benefits for managing complex data pipelines. It presents features such as orchestration, monitoring, and error handling for executing data workflows, as well as debugging and testing tools for machine learning engineers. It also covers Dagster's community support and extensibility, along with its advantages and disadvantages.

![CONFIDENTIAL. Copyright © 22

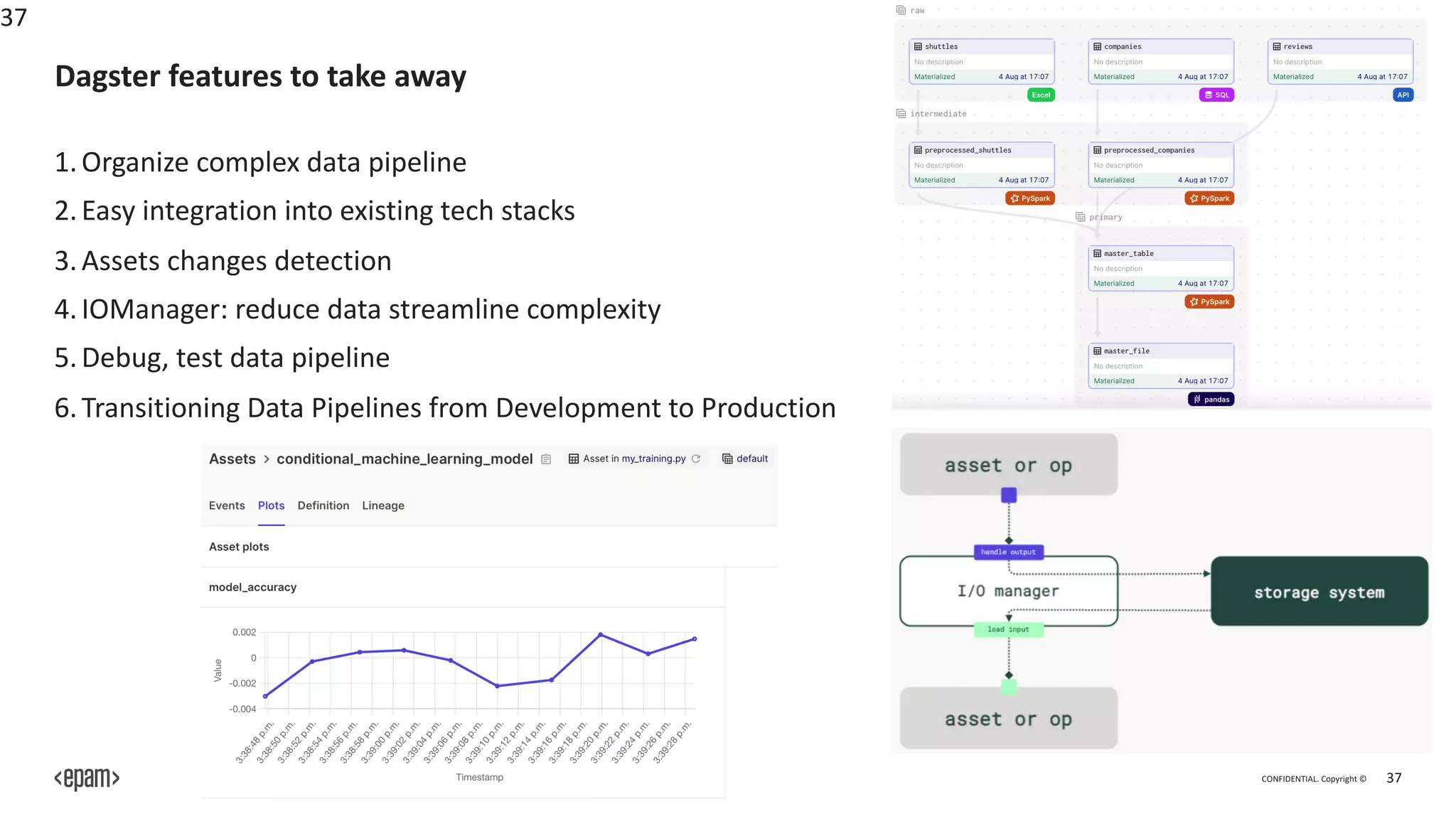

2nd : Easy integration into existing tech stacks

from dagster import materialize

if __name__ == "__main__":

result = materialize(assets=[my_first_asset])

pip install dagster dagit

Just install

And materialize your assets

Extensibility and integration: Dagster has a rich ecosystem

of libraries and plugins that support various tools and

platforms related to machine learning, data processing,

and infrastructure. This extensibility allows ML engineers

to integrate Dagster with existing tools and systems.](https://image.slidesharecdn.com/dagster-dataopsandmlopsformachinelearningengineers-230814084609-92abfc05/75/Dagster-DataOps-and-MLOps-for-Machine-Learning-Engineers-pdf-22-2048.jpg)

![Slide 32](https://image.slidesharecdn.com/dagster-dataopsandmlopsformachinelearningengineers-230814084609-92abfc05/75/Dagster-DataOps-and-MLOps-for-Machine-Learning-Engineers-pdf-32-2048.jpg)

5th: Debug and test data pipelines

my_assets.py

```python
from dagster import asset

@asset
def my_first_asset(context):
    context.log.info("This is my first asset")
    return 1
```

test_my_assets.py

```python
from dagster import materialize, build_op_context

from my_assets import my_first_asset

def test_my_first_asset():
    # Materialize the asset in-process and check that the run succeeded
    result = materialize(assets=[my_first_asset])
    assert result.success

    # Invoke the asset directly, like a plain function, with a test context
    context = build_op_context()
    assert my_first_asset(context) == 1
```

Testing and development: Dagster supports local development and testing by letting you execute individual assets or entire pipelines independently of the production environment, which enables faster iteration and experimentation.