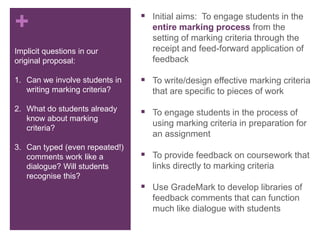

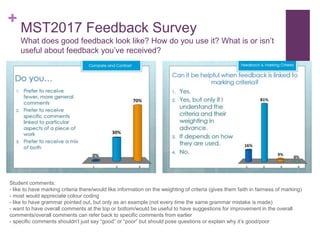

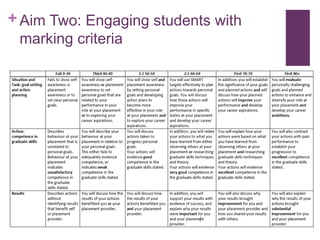

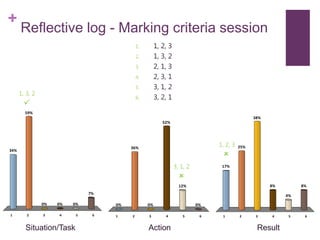

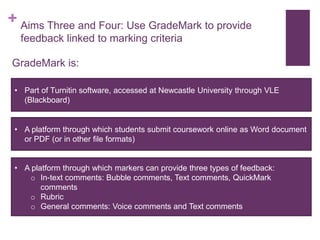

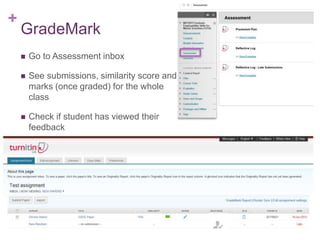

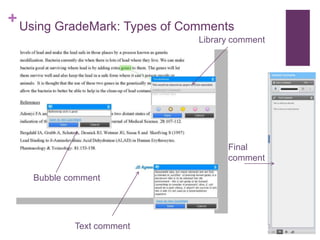

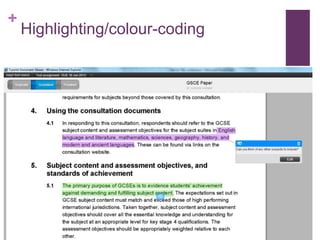

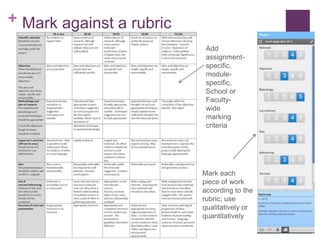

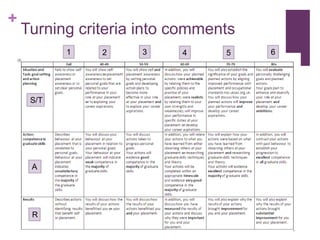

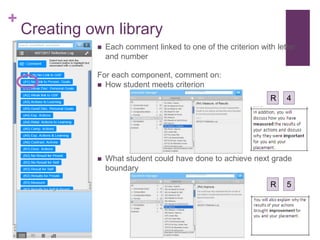

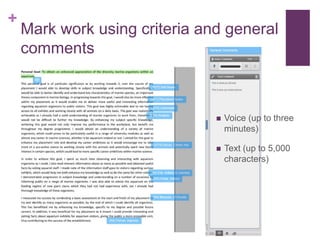

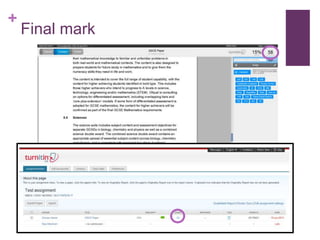

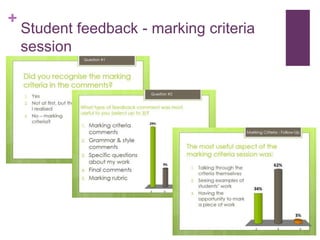

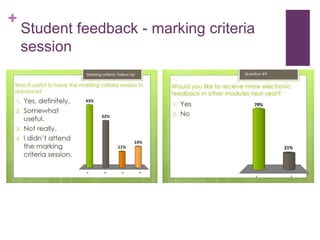

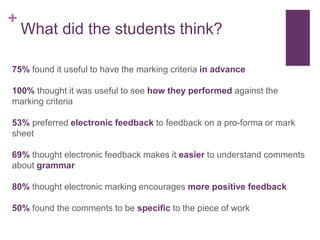

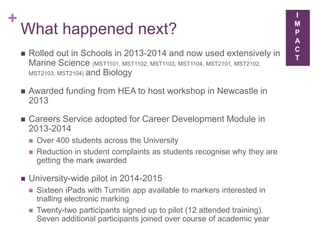

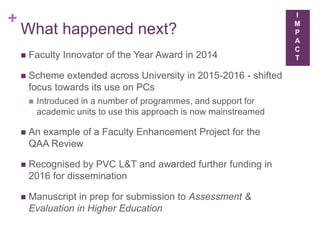

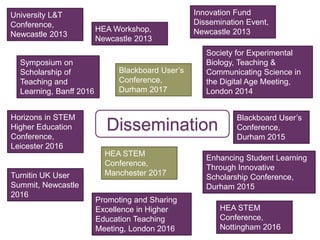

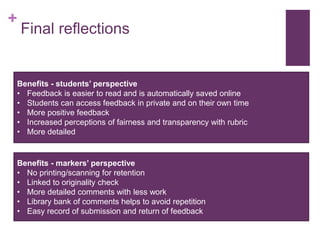

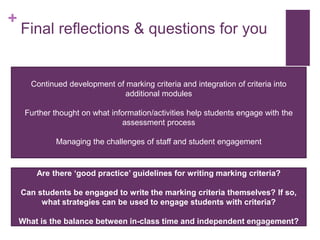

The document describes a project at Newcastle University that used GradeMark to improve feedback and involve students in the marking process. The project had three aims: 1) to involve students in writing the marking criteria, 2) to have students engage with the criteria before submitting their assignments, and 3) to provide feedback linked directly to the criteria through GradeMark. Students reported that the electronic feedback was easier to understand and more specific. The approach was then expanded across the university and disseminated more widely. Reported benefits included more detailed feedback for both students and markers. Open questions remain about how to engage students further and how to manage the challenges of staff and student adoption.