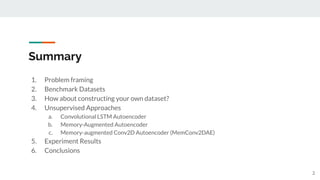

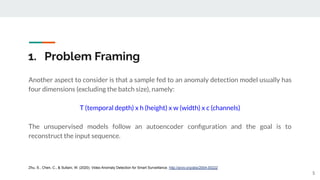

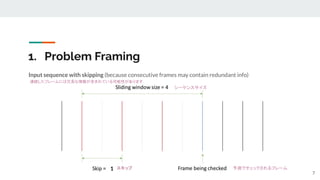

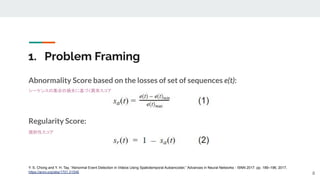

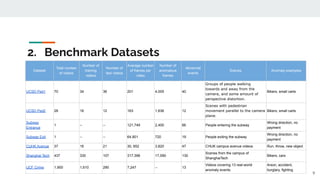

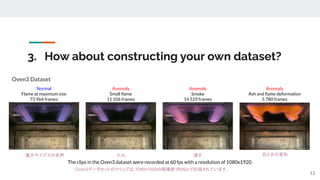

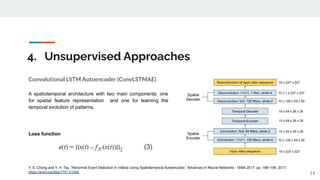

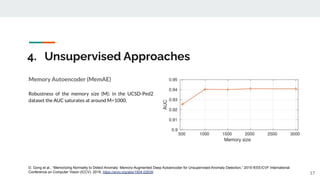

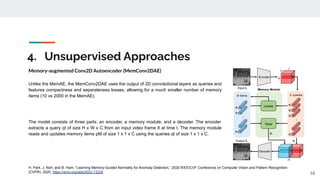

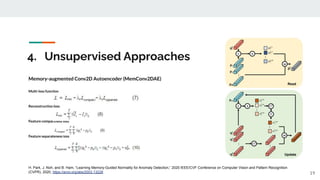

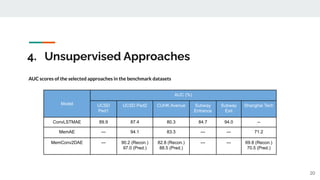

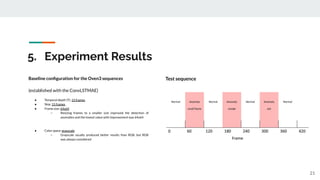

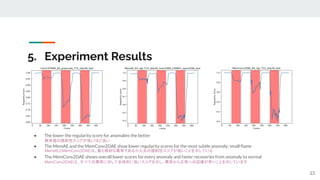

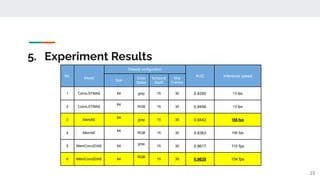

The presentation gives an overview of unsupervised video anomaly detection. It covers benchmark datasets and approaches such as convolutional LSTM autoencoders, memory-augmented autoencoders, and memory-augmented convolutional autoencoders. These approaches were evaluated on standard datasets and on a new oven dataset. The memory-augmented convolutional autoencoder performed best: it was more sensitive to anomalies while keeping inference fast. The presentation concludes with recommendations on the discussed techniques.
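To make the memory-augmented idea concrete, here is a minimal sketch of one common formulation, which is an assumption here since the summary does not spell out the mechanism used in the slides. An encoding is rebuilt as an attention-weighted sum of memory slots that stand for normal patterns, so inputs unlike anything in memory tend to reconstruct poorly. The names `memory_read`, `anomaly_score`, and the `shrink` threshold are hypothetical, and the score is computed on the encoding for brevity rather than on decoded frames.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)


def memory_read(z, memory, shrink=0.0):
    """Rebuild encoding z as a weighted sum of memory slots.

    Assumed formulation: softmax over cosine similarities, then an
    optional hard threshold that drops small weights and renormalises.
    """
    sims = [cosine(z, slot) for slot in memory]
    peak = max(sims)
    exps = [math.exp(s - peak) for s in sims]  # numerically stable softmax
    total = sum(exps)
    weights = [e / total for e in exps]

    if shrink > 0:
        kept = [w if w > shrink else 0.0 for w in weights]
        norm = sum(kept)
        if norm > 0:  # keep the dense weights if everything was dropped
            weights = [w / norm for w in kept]

    z_hat = [sum(w * slot[i] for w, slot in zip(weights, memory))
             for i in range(len(z))]
    return z_hat, weights


def anomaly_score(z, memory, shrink=0.0):
    """Mean squared error between z and its memory-based rebuild."""
    z_hat, _ = memory_read(z, memory, shrink)
    return sum((a - b) ** 2 for a, b in zip(z, z_hat)) / len(z)


if __name__ == "__main__":
    memory = [[1.0, 0.0], [0.0, 1.0]]   # toy slots for "normal" patterns
    normal = [1.0, 0.0]                 # close to a stored pattern
    unusual = [-1.0, -1.0]              # unlike any stored pattern
    print(anomaly_score(normal, memory), anomaly_score(unusual, memory))
```

In this toy setup the encoding that matches a memory slot gets a lower score than the one that matches none. In a full model the memory slots would be learned together with the encoder and decoder, and the score would usually come from the reconstruction error of the decoded frames.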