The document discusses user interface (UI) development and compatibility testing for virtual buttons in applications built with Unity and the Vuforia Engine. It covers the functionality, properties, and implementation of virtual buttons, along with guidelines for designing and arranging them for optimal user interaction. Additionally, it compares Unity's UI systems and describes their layout, rendering, and interaction components to support UI design.

4. Select the function you wish to be called.

5. You can add more than one callback for the event.
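Callbacks can also be attached from code. The sketch below uses a hypothetical component (`DoorTrigger`, `PlaySound` and `ShowMessage` are illustrative names, not part of any API) that registers two listeners on a single `UnityEvent` with `AddListener`. Listeners added this way are runtime listeners: they are not serialized and do not appear in the Inspector, but they are called together with any callbacks configured there.

```csharp
using UnityEngine;
using UnityEngine.Events;

// Hypothetical component used only to illustrate multiple callbacks on one event.
public class DoorTrigger : MonoBehaviour
{
    // Serialized field: persistent callbacks can still be added in the Inspector.
    public UnityEvent onOpened = new UnityEvent();

    void OnEnable()
    {
        // Runtime (non-persistent) listeners: not serialized, not shown in the Inspector.
        onOpened.AddListener(PlaySound);
        onOpened.AddListener(ShowMessage);
    }

    void OnDisable()
    {
        // Remove the same listeners so they are not registered twice after re-enabling.
        onOpened.RemoveListener(PlaySound);
        onOpened.RemoveListener(ShowMessage);
    }

    // Invoking the event calls every registered callback, persistent and runtime.
    public void Open()
    {
        onOpened.Invoke();
    }

    void PlaySound() { Debug.Log("Playing door sound"); }
    void ShowMessage() { Debug.Log("Door opened"); }
}
```

Pairing `AddListener` in `OnEnable` with `RemoveListener` in `OnDisable` is one common choice; it keeps the listener count stable when the component is toggled.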

When configuring a UnityEvent in the Inspector, two types of function calls are supported:

Static. Static calls are preconfigured calls whose argument values are set in the Inspector UI. When the callback is invoked, the target function is called with the argument that was entered in the UI.

Dynamic. Dynamic calls are invoked with an argument that is sent from code, and this argument is bound to the type of UnityEvent being invoked. The UI filters the callbacks and shows only the dynamic calls that are valid for the UnityEvent.
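To make the static/dynamic distinction concrete, here is a minimal sketch assuming a hypothetical `VolumeControl` component and a `FloatEvent` type defined just for this example. A listener bound in the Inspector as a dynamic `float` call receives whatever value `SetVolume` passes to `Invoke`; a listener bound as a static call ignores that value and uses the one typed into the Inspector.

```csharp
using System;
using UnityEngine;
using UnityEngine.Events;

// Hypothetical event type carrying one float argument.
[Serializable]
public class FloatEvent : UnityEvent<float> {}

// Hypothetical component: wire its event to other components in the Inspector.
public class VolumeControl : MonoBehaviour
{
    public FloatEvent onVolumeChanged = new FloatEvent();

    public void SetVolume(float volume)
    {
        volume = Mathf.Clamp01(volume);
        // This is the "argument sent from code": dynamic calls receive it,
        // static calls use their own Inspector-configured value instead.
        onVolumeChanged.Invoke(volume);
    }
}
```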

Generic UnityEvents

By default, a UnityEvent in a MonoBehaviour binds dynamically to a void function with no parameters. This does not have to be the case: dynamic invocation of UnityEvents supports binding to functions with up to four arguments. To do this, define a custom UnityEvent subclass that declares the argument types you need. This is straightforward:

```csharp
using System;
using UnityEngine.Events;

[Serializable]
public class StringEvent : UnityEvent<string> {}
```

Adding an instance of this class to your MonoBehaviour, instead of the base UnityEvent, allows the callback to bind dynamically to functions that take a string argument.

The event can then be invoked by calling Invoke() with a string argument.

UnityEvents can be defined with up to 4 arguments in their generic definition.
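A slightly fuller sketch, assuming a hypothetical `ScoreReporter` component: it repeats the `StringEvent` declaration so the block compiles on its own, adds a two-argument `ScoreEvent` (also hypothetical), and invokes both from code. Listeners bound dynamically to these events receive exactly the arguments passed to `Invoke`.

```csharp
using System;
using UnityEngine;
using UnityEngine.Events;

// Same declaration as above, repeated so this block is self-contained.
[Serializable]
public class StringEvent : UnityEvent<string> {}

// Hypothetical two-argument event: player name and score.
[Serializable]
public class ScoreEvent : UnityEvent<string, int> {}

public class ScoreReporter : MonoBehaviour
{
    // Both fields are serialized, so callbacks can be wired up in the Inspector.
    public StringEvent onMessage = new StringEvent();
    public ScoreEvent onScore = new ScoreEvent();

    public void Report(string player, int score)
    {
        // Dynamically bound listeners receive these exact arguments.
        onMessage.Invoke(player + " scored " + score);
        onScore.Invoke(player, score);
    }
}
```

The `[Serializable]` subclass is what lets the Inspector display these events on older Unity versions. Unity 2020.1 and later can serialize generic field types directly, so a field declared as `UnityEvent<string>` may work without a subclass there; the subclass pattern works in both cases.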

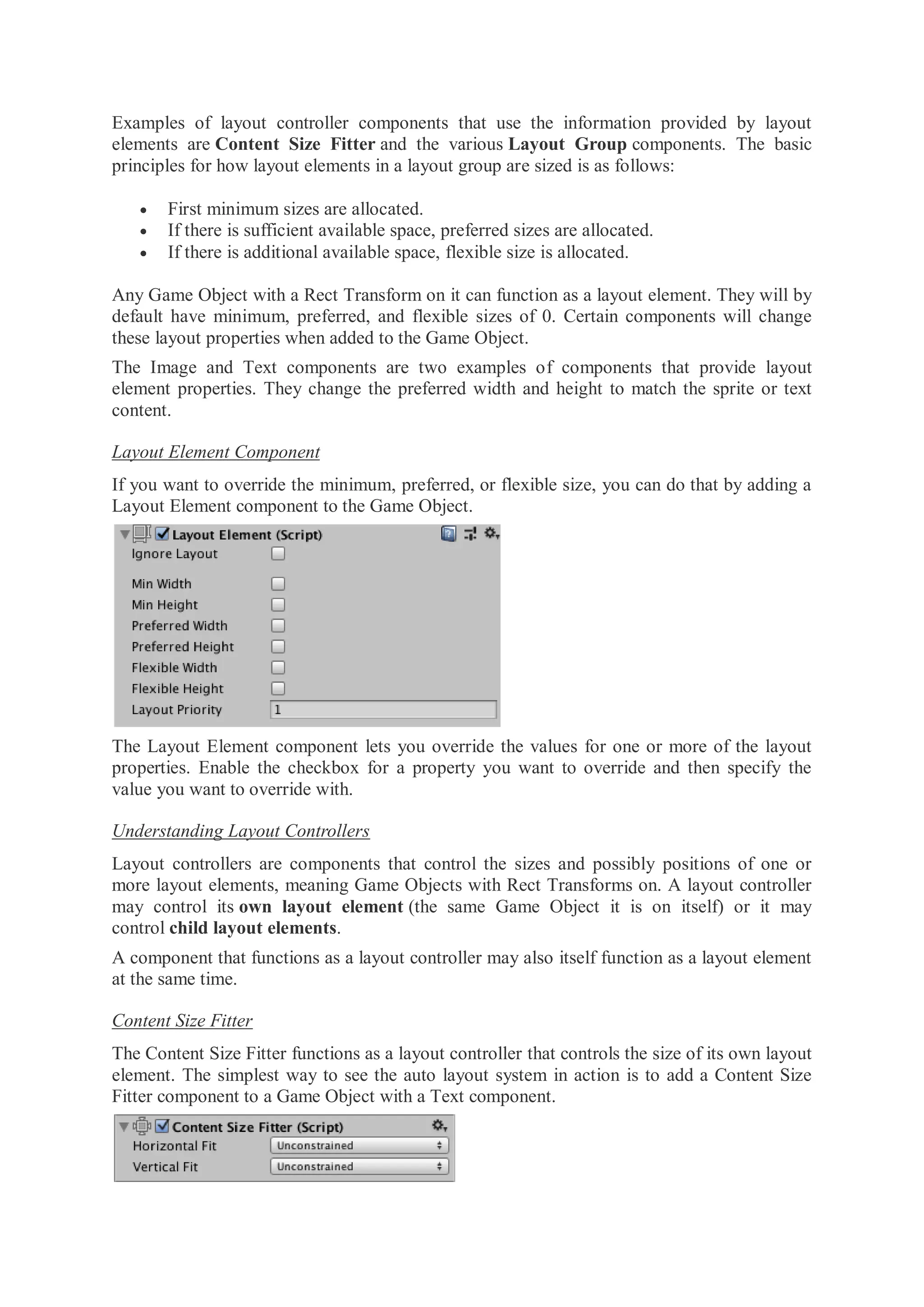

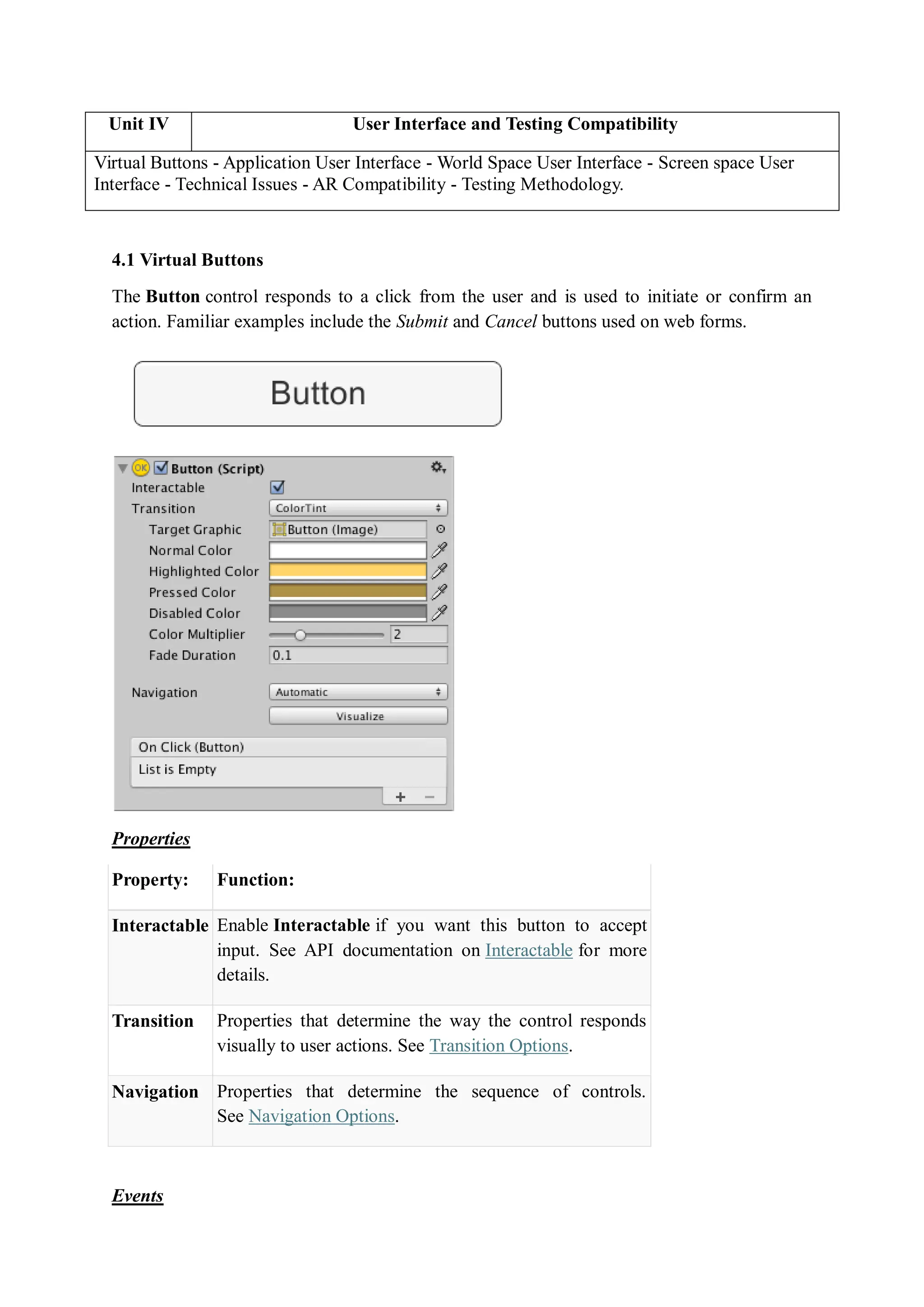

How To Implement Virtual Buttons

This page concerns the Vuforia Engine API version 9.8 and earlier. It has been deprecated and will no longer be actively updated.

Virtual Buttons add interactivity to your Vuforia targets by moving on-screen interactions into the real world. Use the Virtual Buttons sample to learn how to implement and configure Virtual Buttons and immerse your end users in your AR application.
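The following is a sketch of the event handling, assuming the Vuforia Engine 9.x Unity API, in which `VirtualButtonBehaviour` exposes `RegisterOnButtonPressed` and `RegisterOnButtonReleased` (callbacks that receive the button) and a `VirtualButtonName` property. These names are assumptions about that version and should be checked against the Vuforia package you have installed. `VirtualButtonHandler` is a hypothetical component attached to the Image Target that owns the buttons.

```csharp
using UnityEngine;
using Vuforia;

// Assumes Vuforia Engine 9.x: RegisterOnButtonPressed / RegisterOnButtonReleased
// and VirtualButtonName. Attach to the Image Target whose children are the buttons.
public class VirtualButtonHandler : MonoBehaviour
{
    VirtualButtonBehaviour[] virtualButtons;

    void Start()
    {
        // Collect every virtual button defined under this target.
        virtualButtons = GetComponentsInChildren<VirtualButtonBehaviour>();
        foreach (var vb in virtualButtons)
        {
            vb.RegisterOnButtonPressed(OnButtonPressed);
            vb.RegisterOnButtonReleased(OnButtonReleased);
        }
    }

    // Raised when the button area is occluded, e.g. covered by the user's hand.
    void OnButtonPressed(VirtualButtonBehaviour vb)
    {
        Debug.Log("Pressed: " + vb.VirtualButtonName);
    }

    // Raised when the button area becomes visible to the camera again.
    void OnButtonReleased(VirtualButtonBehaviour vb)
    {
        Debug.Log("Released: " + vb.VirtualButtonName);
    }
}
```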

Virtual buttons provide a useful mechanism for making image-based targets interactive. Handle the events with OnButtonPressed, which fires when the button is visually obstructed from the camera, and OnButtonReleased, which fires when it becomes visible again. When creating a Virtual Button, consider its size and placement carefully with respect to the user experience. Several factors affect the responsiveness and usability of Virtual Buttons:

The length and width of the button.

The area of the target that it covers.