This document summarizes key concepts from a PhD dissertation on uncertainty in deep learning:

1) There are two types of uncertainties - epistemic uncertainty from lack of knowledge that decreases with more data, and aleatoric uncertainty from inherent noise that cannot be reduced. Deep learning models need to estimate both to provide predictive uncertainty.

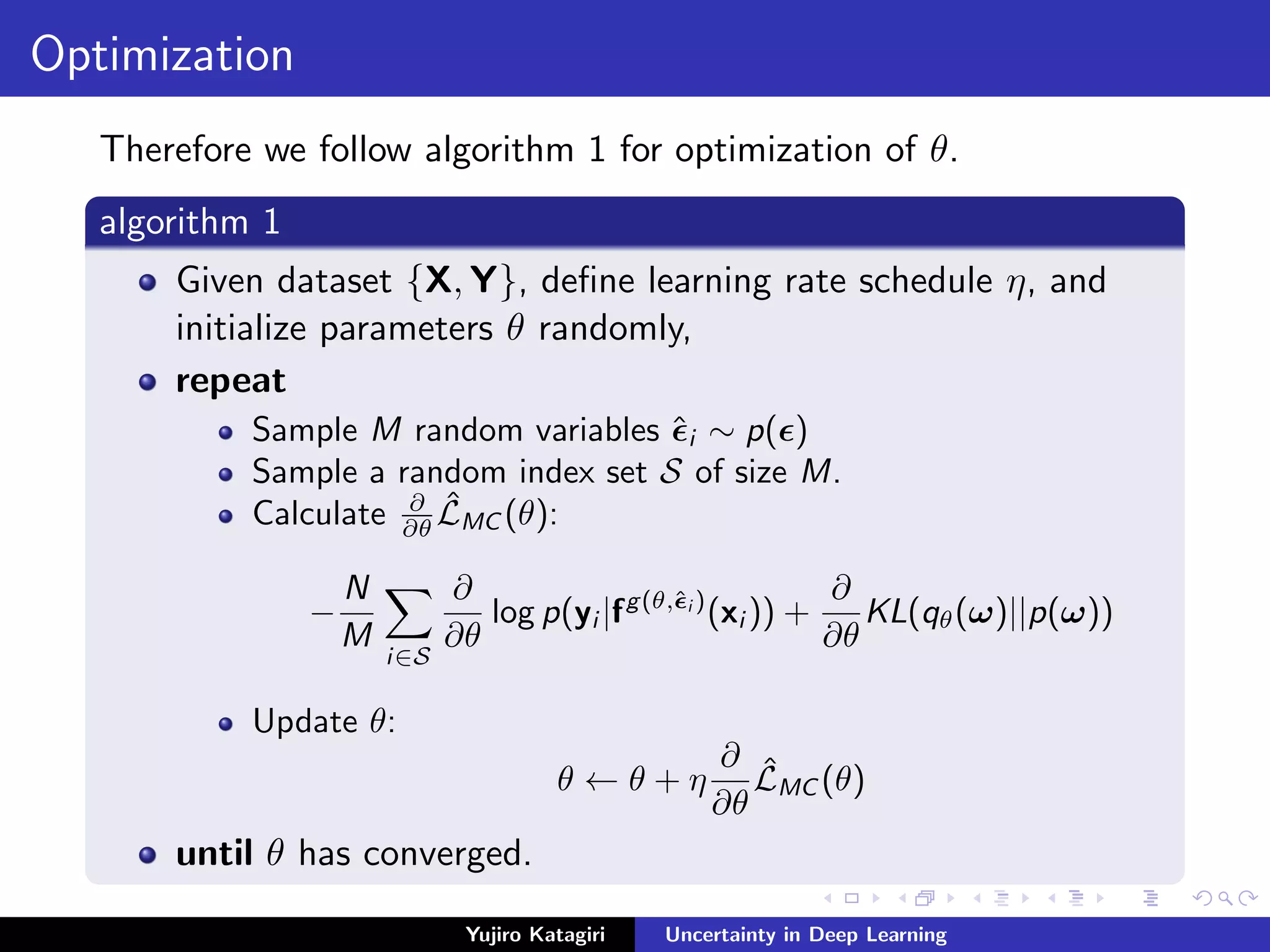

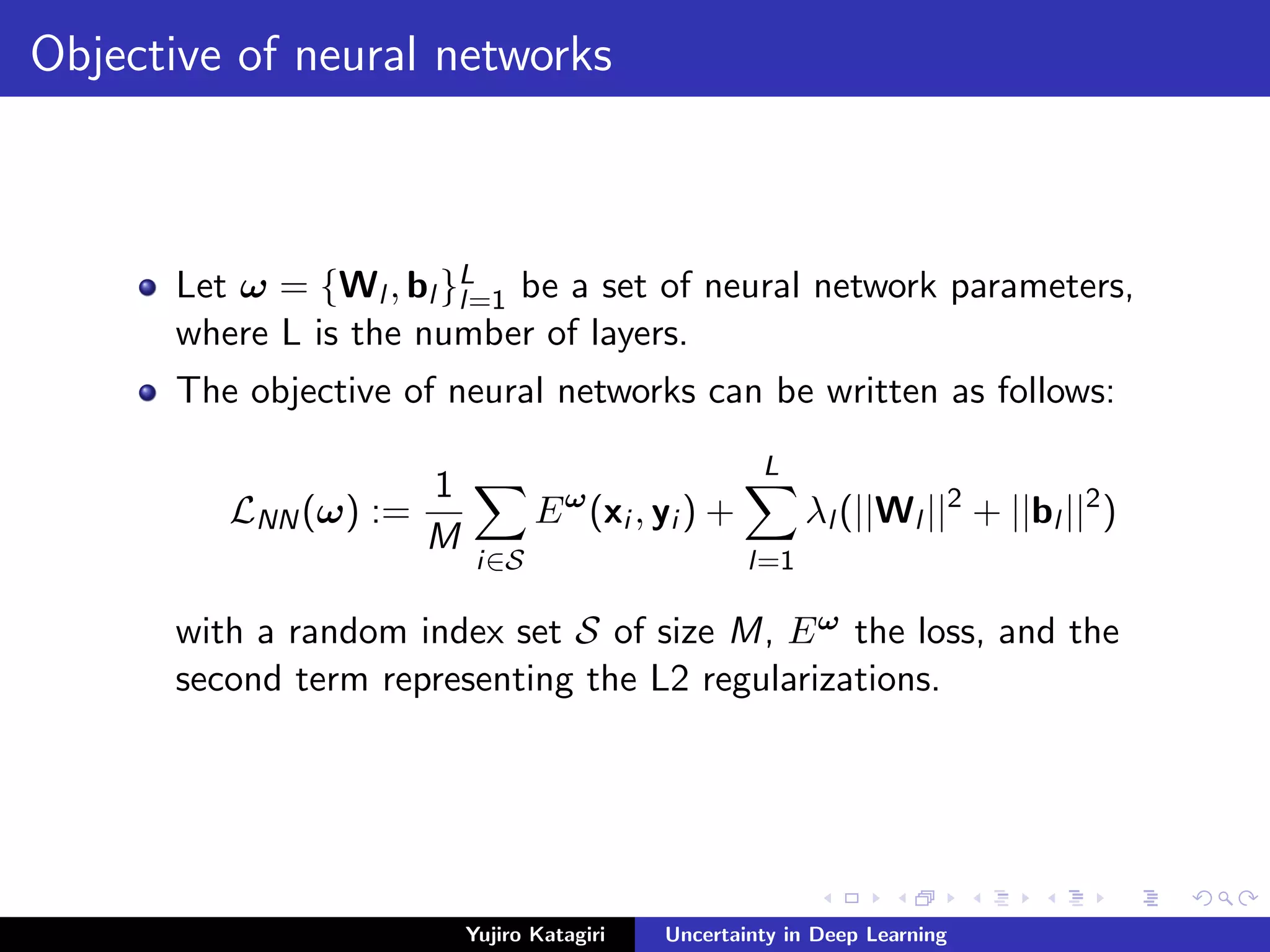

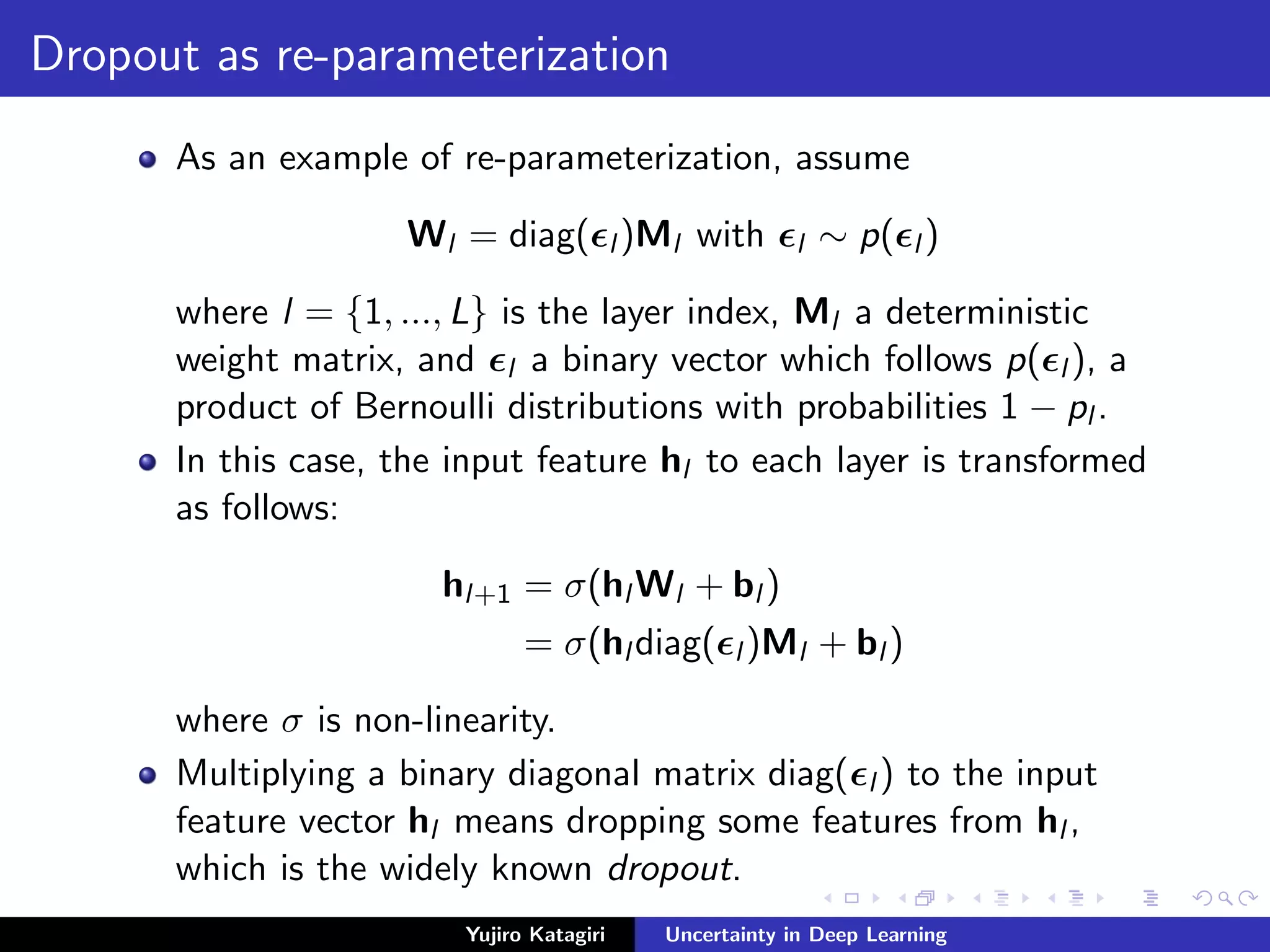

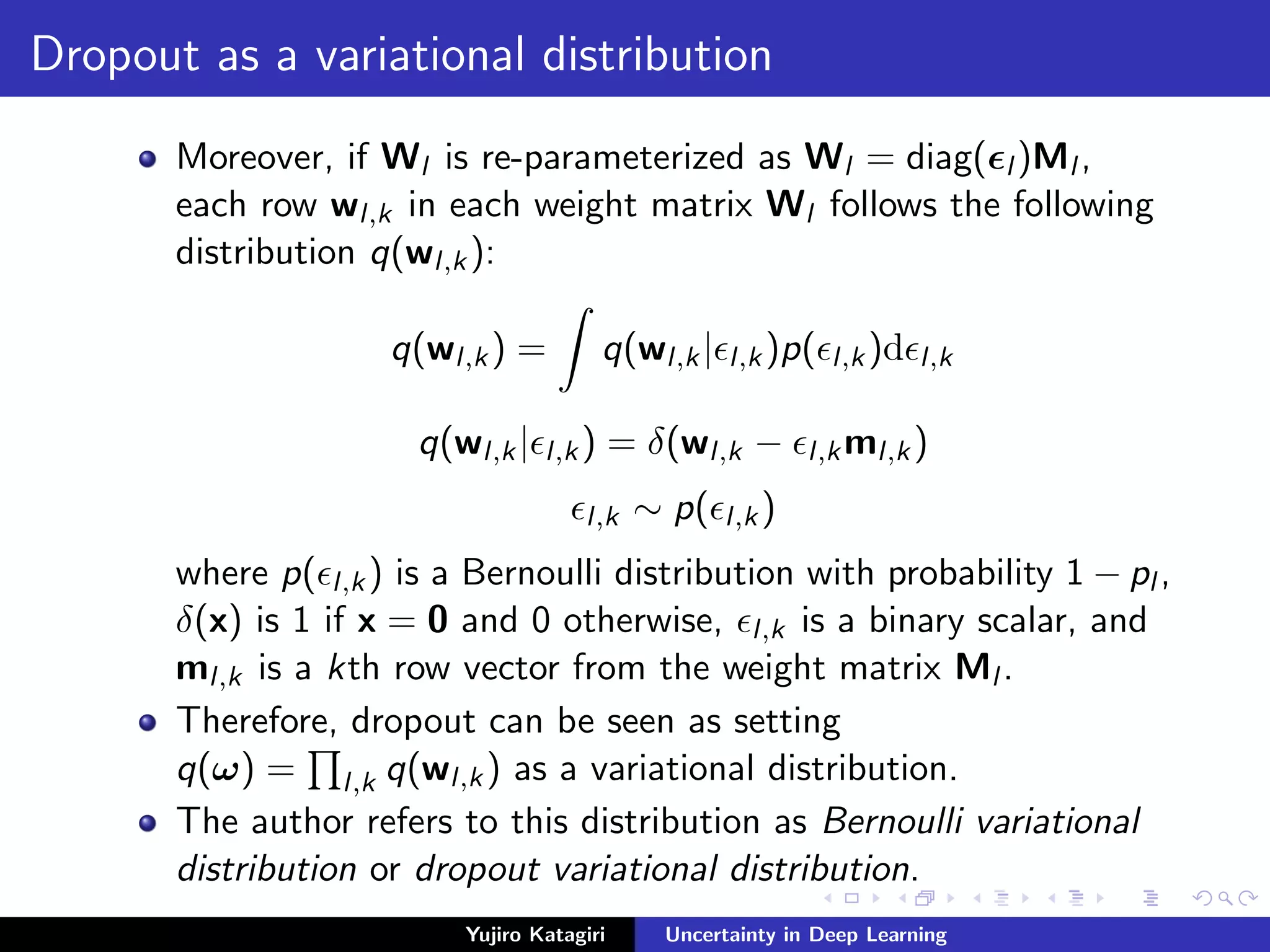

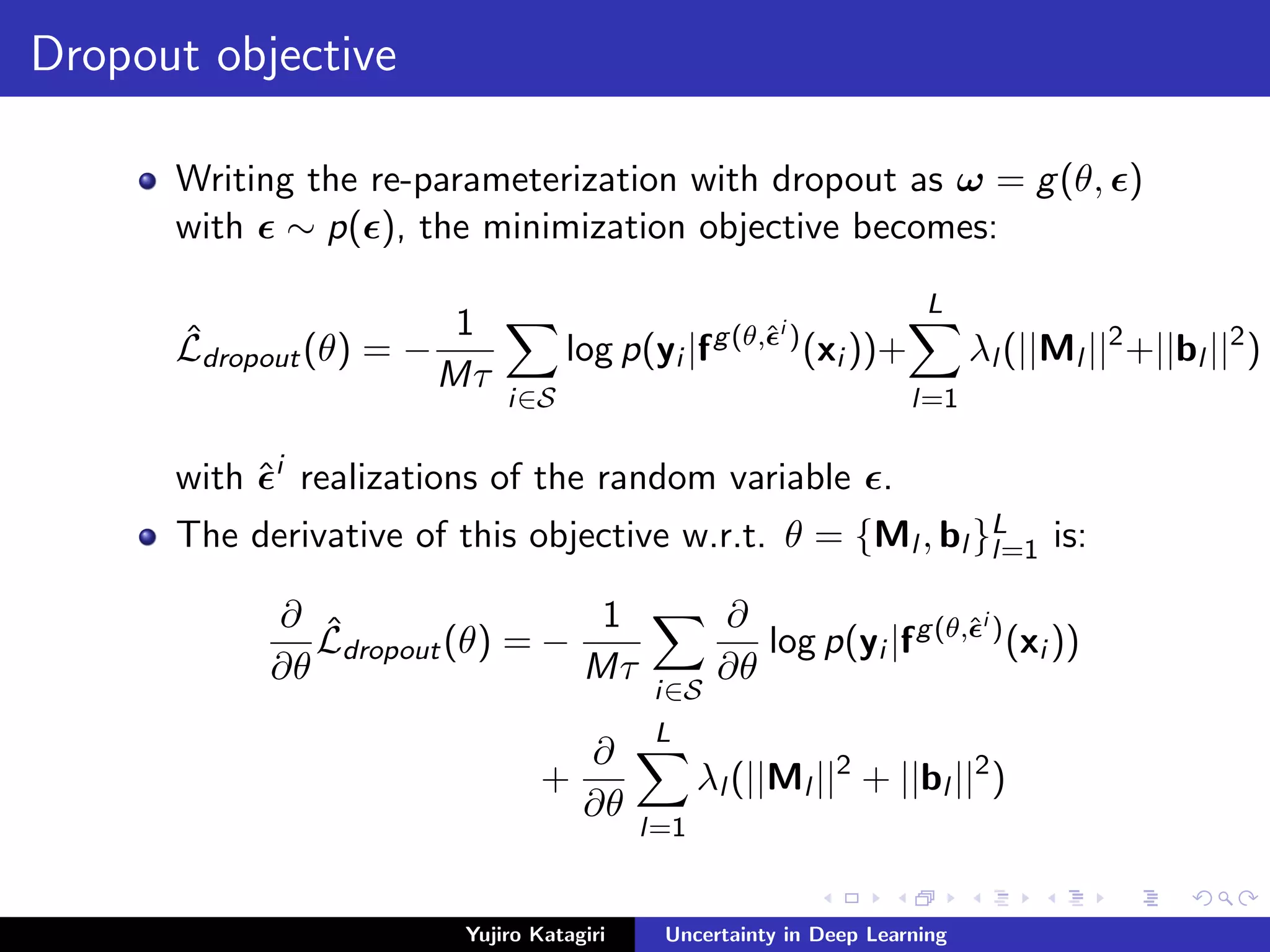

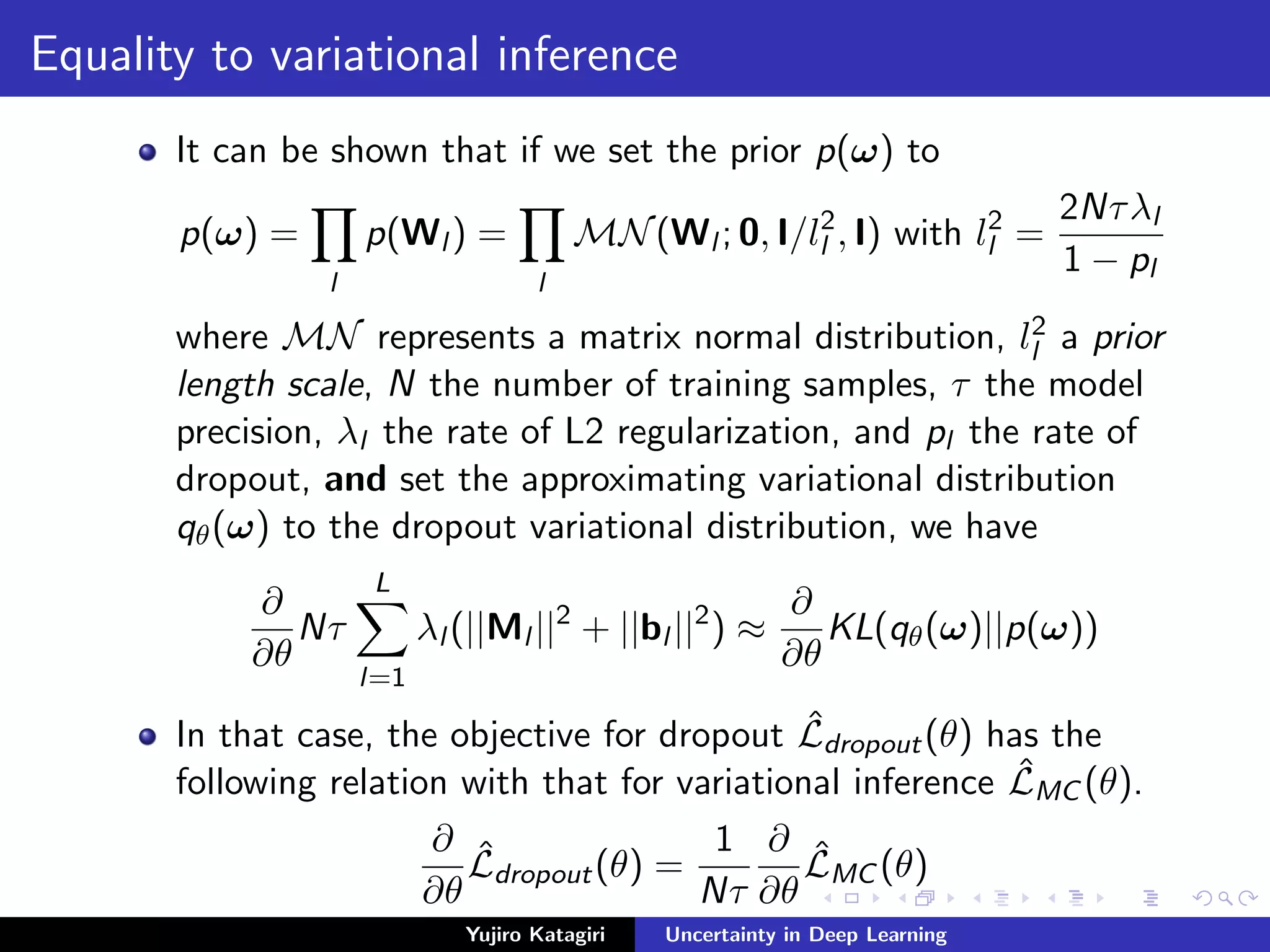

2) Variational inference approximates an intractable Bayesian posterior by minimizing the KL divergence between an approximating distribution and the true posterior. Dropout can be interpreted as such an approximation, in which the approximating distribution over the weights is induced by Bernoulli random masks applied to the weight matrices.
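For reference, the KL minimization in point 2 can be written out as a worked identity. This is a sketch in the notation the slides use ($q_\theta(\omega)$ for the approximating distribution, $f^{\omega}$ for the network with weights $\omega$); it assumes $N$ training pairs $(x_i, y_i)$ and a likelihood that factorizes over them. Because $\log p(Y \mid X)$ does not depend on $\theta$, minimizing the KL divergence is equivalent to minimizing $L_{\mathrm{VI}}(\theta)$.

```latex
\mathrm{KL}\big(q_\theta(\omega) \,\|\, p(\omega \mid X, Y)\big)
  = L_{\mathrm{VI}}(\theta) + \log p(Y \mid X),
\qquad
L_{\mathrm{VI}}(\theta)
  = -\sum_{i=1}^{N} \int q_\theta(\omega) \log p\big(y_i \mid f^{\omega}(x_i)\big)\, d\omega
    + \mathrm{KL}\big(q_\theta(\omega) \,\|\, p(\omega)\big)
```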

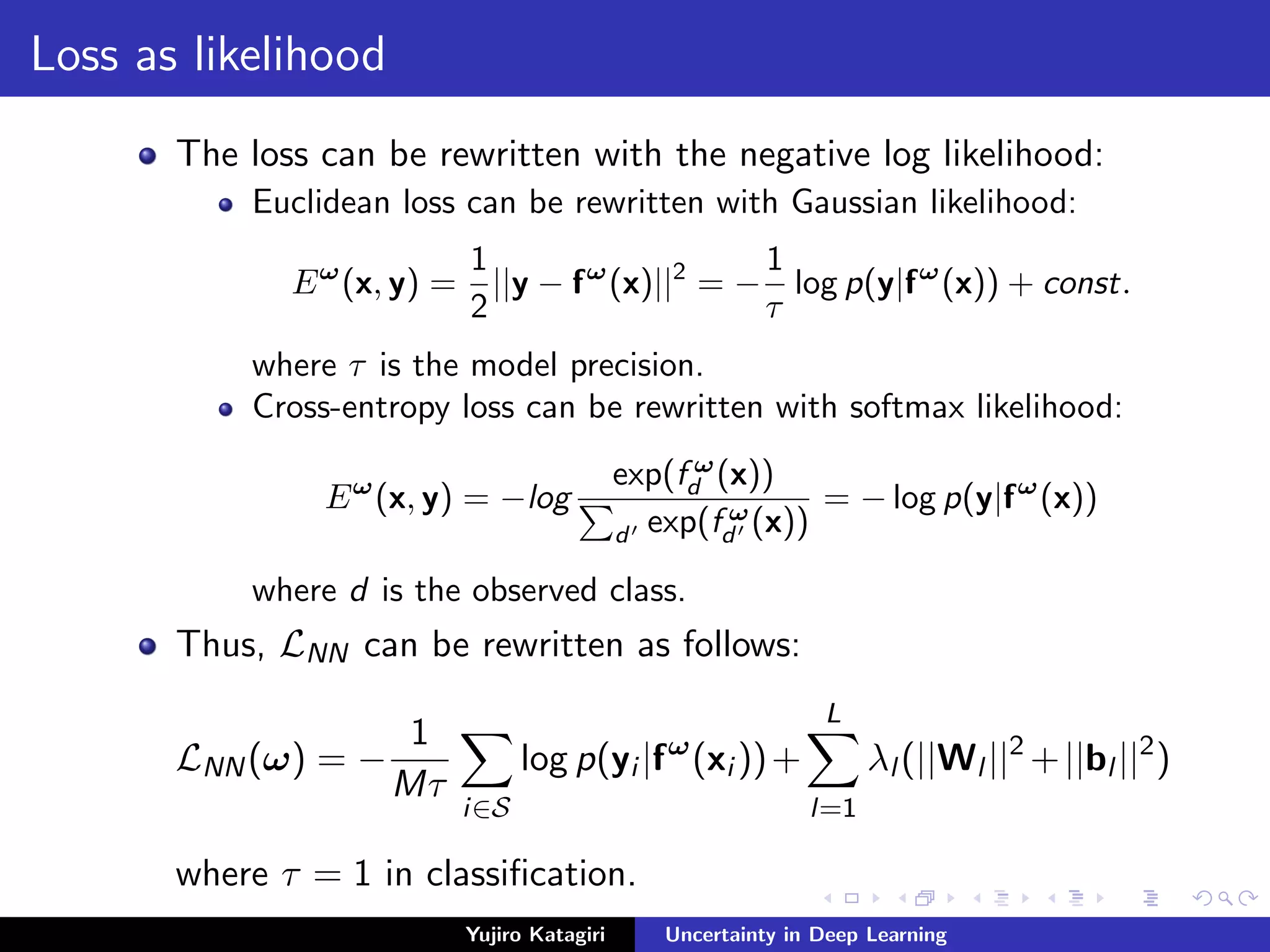

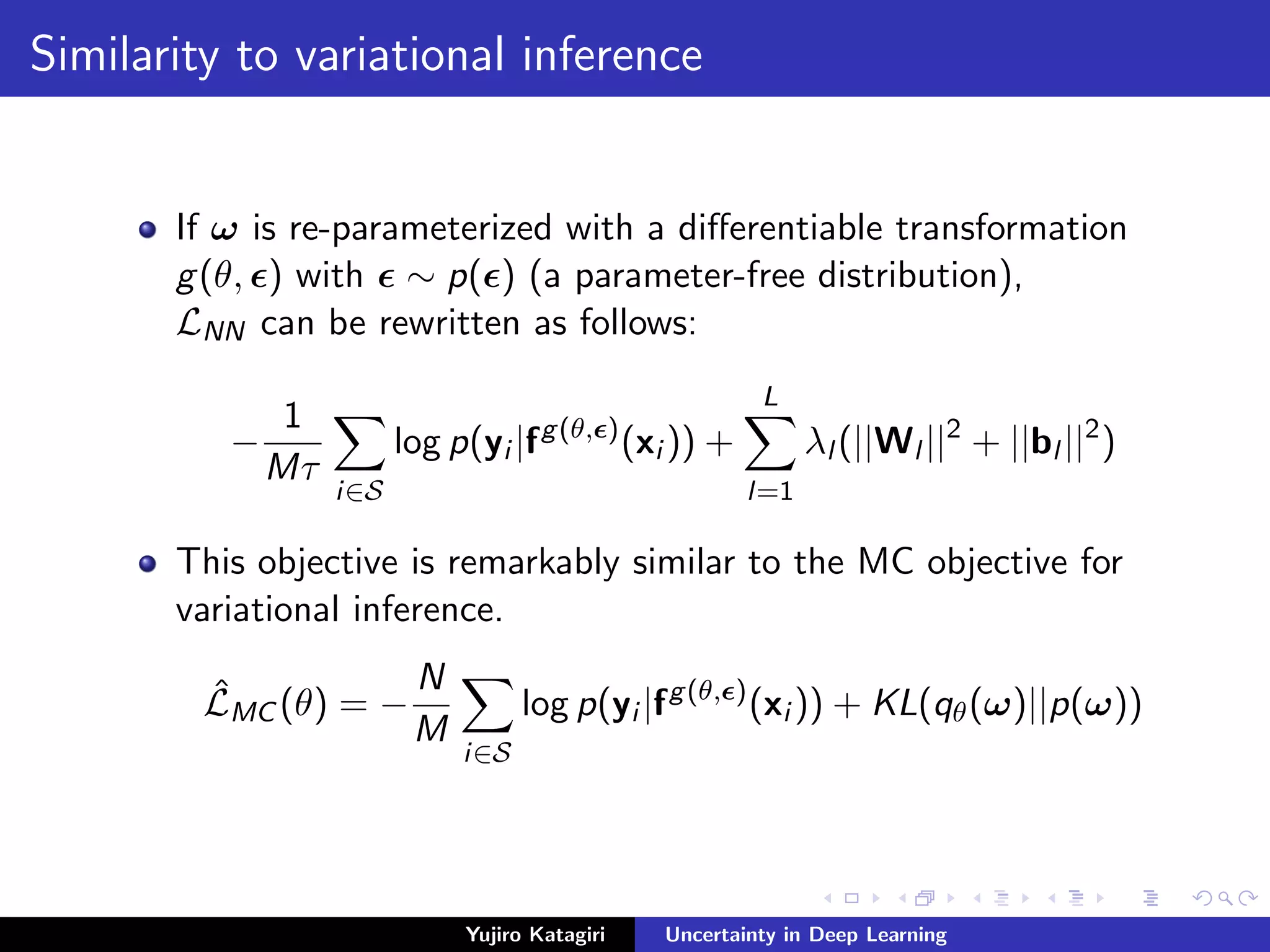

3) With dropout as the variational distribution, predictive uncertainty in regression is estimated from multiple stochastic forward passes, with aleatoric uncertainty from the noise term and epistemic uncertainty from the spread of the predictions across passes.

![Solution to the first problem

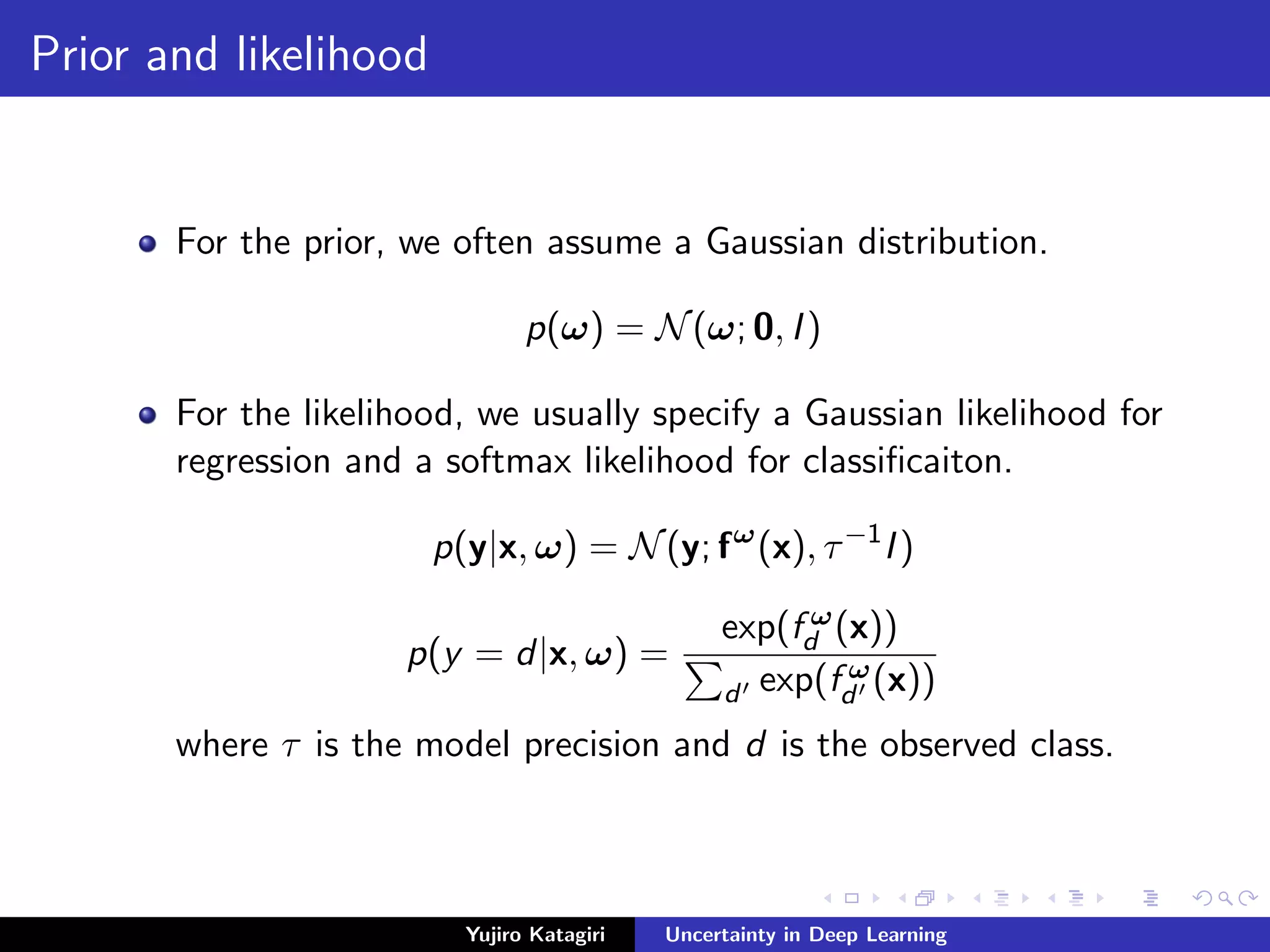

1st problem: The summation over the entire dataset is

computationally expensive.

Solution: Data sub-sampling (also referred to as mini-batch

optimization).

ˆLVI (θ) = −

N

M

i∈S

log p(yi |fω

(xi ))qθ(ω)dω+KL(qθ(ω)||p(ω))

with a random index set S of size M.

It forms an unbiased stochastic estimator to LVI (θ),

meaning that ES[ ˆLVI (θ)] = LVI (θ).

Yujiro Katagiri Uncertainty in Deep Learning](https://image.slidesharecdn.com/20180724uncertaintyindeeplearning-180823030413/75/Uncertainty-in-deep-learning-10-2048.jpg)
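The unbiasedness claim for the sub-sampled data term can be checked on a toy case. The sketch below assumes a made-up list of per-example losses (standing in for the negative expected log likelihoods) and enumerates every index set $S$ of size $M$; the names `losses` and `minibatch_estimate` are illustrative and not from the slides. The KL term is left out because it does not depend on $S$.

```python
from itertools import combinations


def minibatch_estimate(losses, index_set):
    """Rescaled mini-batch sum: (N / M) * sum of the losses indexed by S."""
    n_total = len(losses)
    m_batch = len(index_set)
    return (n_total / m_batch) * sum(losses[i] for i in index_set)


# Hypothetical per-example losses, one per training point (N = 5).
losses = [0.3, 1.2, 0.7, 2.5, 0.1]
M = 2

# Averaging over all equally likely index sets of size M gives E_S exactly.
estimates = [
    minibatch_estimate(losses, subset)
    for subset in combinations(range(len(losses)), M)
]
average_estimate = sum(estimates) / len(estimates)
full_sum = sum(losses)

print(average_estimate, full_sum)
```

Individual estimates differ from the full sum, but their average over all index sets matches it up to floating-point rounding.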

![Solution to the second problem

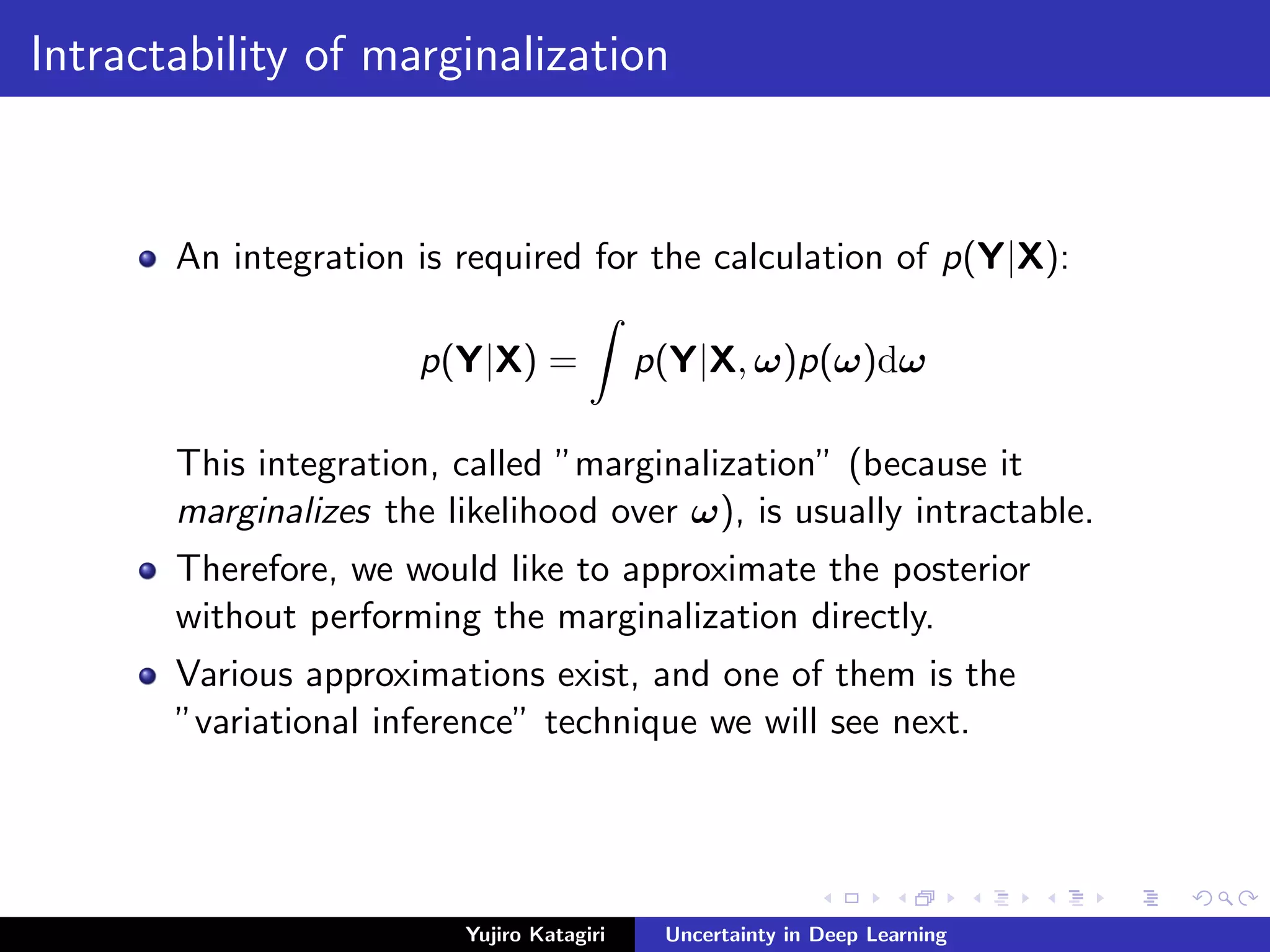

2nd problem: The expected log likelihood

log p(yi |fω(xi ))qθ(ω)dω is usually intractable.

Solution: MC estimation with re-parameterization trick.

If ω is re-parameterized with a differentiable transformation

g(θ, ) with ∼ p( ) (a parameter-free distribution),

ˆLVI (θ) = −

N

M

i∈S

log p(yi |fω

(xi ))qθ(ω)dω + KL(qθ(ω)||p(ω))

= −

N

M

i∈S

log p(yi |fg(θ, )

(xi ))p( )d + KL(qθ(ω)||p(ω))

ˆLVI (θ) can be estimated with a new MC estimator

ˆLMC (θ) = −

N

M

i∈S

log p(yi |fg(θ, )

(xi )) + KL(qθ(ω)||p(ω))

where ∼ p( ), meaning that E [ ˆLMC (θ)] = ˆLVI (θ).

Yujiro Katagiri Uncertainty in Deep Learning](https://image.slidesharecdn.com/20180724uncertaintyindeeplearning-180823030413/75/Uncertainty-in-deep-learning-11-2048.jpg)
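A small numerical check can make the re-parameterization step concrete. The sketch below is not the dropout case: it assumes a one-dimensional Gaussian $q_\theta(\omega) = \mathcal{N}(\mu, \sigma^2)$ with $g(\theta, \epsilon) = \mu + \sigma\epsilon$, a toy model $f^{\omega}(x) = \omega x$, and a unit-variance Gaussian likelihood, chosen because the expected log likelihood then has a closed form to compare against. All function names are illustrative.

```python
import math
import random


def log_likelihood(y, x, w):
    """log N(y; w * x, 1) for the toy linear model f^w(x) = w * x."""
    return -0.5 * math.log(2.0 * math.pi) - 0.5 * (y - w * x) ** 2


def reparameterize(mu, sigma, eps):
    """g(theta, eps) with theta = (mu, sigma) and eps ~ N(0, 1)."""
    return mu + sigma * eps


def mc_expected_log_lik(y, x, mu, sigma, n_samples, rng):
    """Average the log likelihood over draws of the parameter-free noise eps."""
    total = 0.0
    for _ in range(n_samples):
        eps = rng.gauss(0.0, 1.0)
        total += log_likelihood(y, x, reparameterize(mu, sigma, eps))
    return total / n_samples


def exact_expected_log_lik(y, x, mu, sigma):
    """Closed form of E_q[log N(y; w * x, 1)] under w ~ N(mu, sigma^2)."""
    return -0.5 * math.log(2.0 * math.pi) - 0.5 * ((y - mu * x) ** 2 + (sigma * x) ** 2)


rng = random.Random(0)
estimate = mc_expected_log_lik(1.0, 2.0, 0.3, 0.5, 20000, rng)
exact = exact_expected_log_lik(1.0, 2.0, 0.3, 0.5)
print(estimate, exact)
```

The estimator on the slide uses a single draw of $\epsilon$ per step; the average over many draws is used here only to show that the draws centre on the exact integral.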

![Estimation of predictive mean

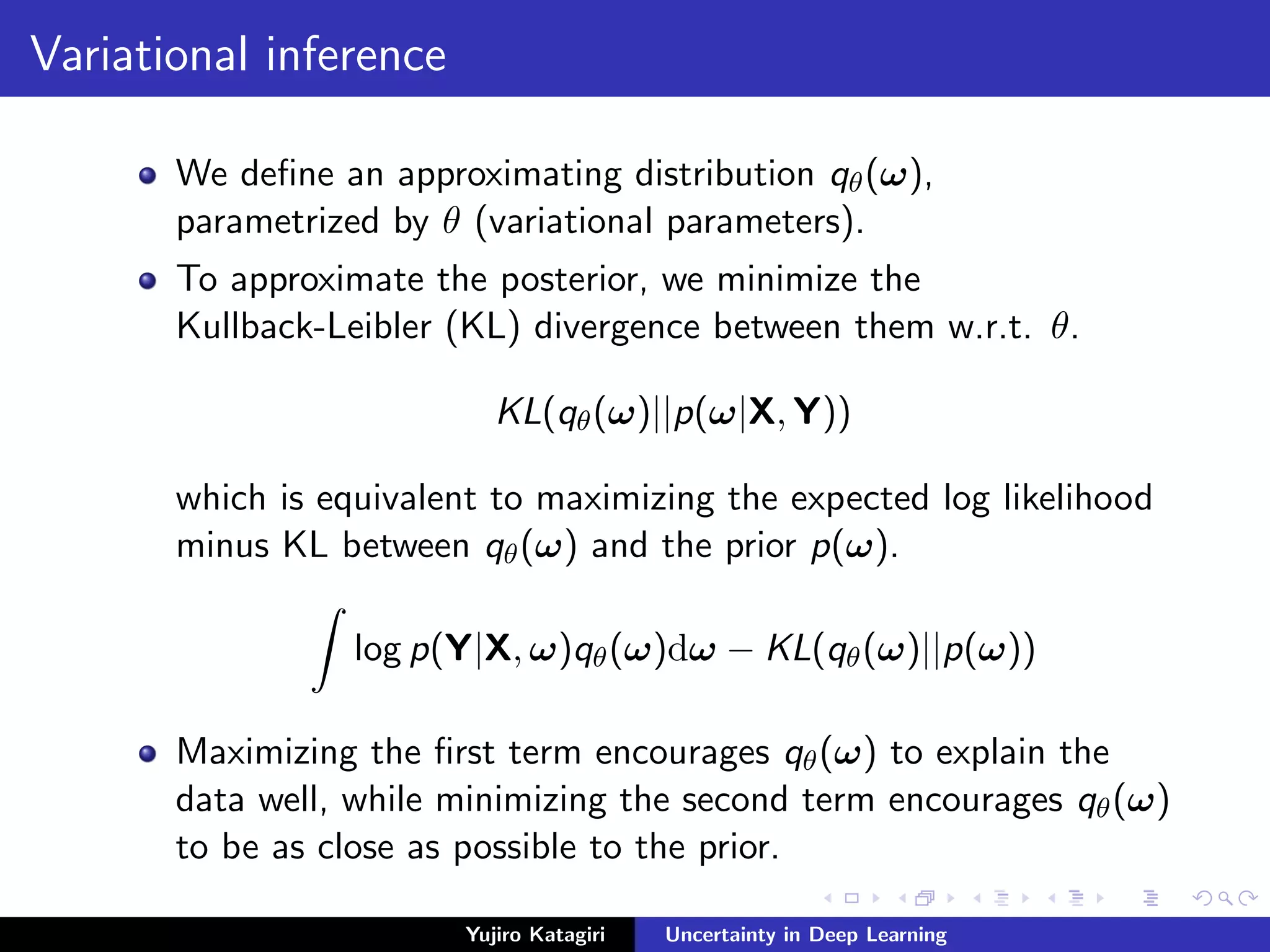

Given our approximate posterior q∗

θ (ω) we can infer the

distribution of an output y∗ for a new input point x∗:

p(y∗

|x∗

, X, Y) := p(y∗

|x∗

, ω)p(ω|X, Y)dω

≈ p(y∗

|x∗

, ω)q∗

θ (ω)dω := q∗

θ (y∗

|x∗

)

The predictive mean is estimated by performing T stochastic

forward passes through the network and averaging the results.

regression

Eq∗

θ (y∗|x∗)[y∗

] ≈

1

T

T

t=1

f ˆωt

(x∗

)

classification

Eq∗

θ (y∗|x∗)[y∗

= d] ≈

1

T

T

t=1

exp(f ˆωt

d (x∗

))

d exp(f ˆωt

d (x∗))

Yujiro Katagiri Uncertainty in Deep Learning](https://image.slidesharecdn.com/20180724uncertaintyindeeplearning-180823030413/75/Uncertainty-in-deep-learning-21-2048.jpg)
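The averaging over $T$ stochastic passes can be sketched with a toy network. Everything specific here is an assumption for illustration: the weights `w1` and `w2`, the one-hidden-layer ReLU architecture, the dropout probability of 0.5, and the choice to leave surviving units unscaled. Dropping a hidden unit is equivalent to zeroing the matching column of `w2`, which is how a Bernoulli mask turns into a sample of the weights.

```python
import math
import random


def dropout_forward(x, w1, w2, p_drop, rng):
    """One stochastic pass: ReLU hidden layer, Bernoulli mask, linear output."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w1]
    # Each hidden unit is dropped independently with probability p_drop.
    masked = [h if rng.random() >= p_drop else 0.0 for h in hidden]
    return [sum(w * h for w, h in zip(row, masked)) for row in w2]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    shift = max(logits)
    exps = [math.exp(v - shift) for v in logits]
    norm = sum(exps)
    return [e / norm for e in exps]


def mc_mean(vectors):
    """Element-wise average of T equally long vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


rng = random.Random(0)
w1 = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]]  # hypothetical: 3 hidden units, 2 inputs
w2 = [[1.0, -1.0, 0.5], [0.2, 0.3, -0.7]]    # hypothetical: 2 outputs
x_star = [1.0, 2.0]
T = 200

outputs = [dropout_forward(x_star, w1, w2, 0.5, rng) for _ in range(T)]
regression_mean = mc_mean(outputs)                    # outputs read as regression targets
class_probs = mc_mean([softmax(o) for o in outputs])  # outputs read as class logits
print(regression_mean, class_probs)
```

For classification the softmax is applied inside the average, pass by pass, which matches the formula above and generally differs from taking the softmax of the averaged logits.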

![Estimation of uncertainty in regression

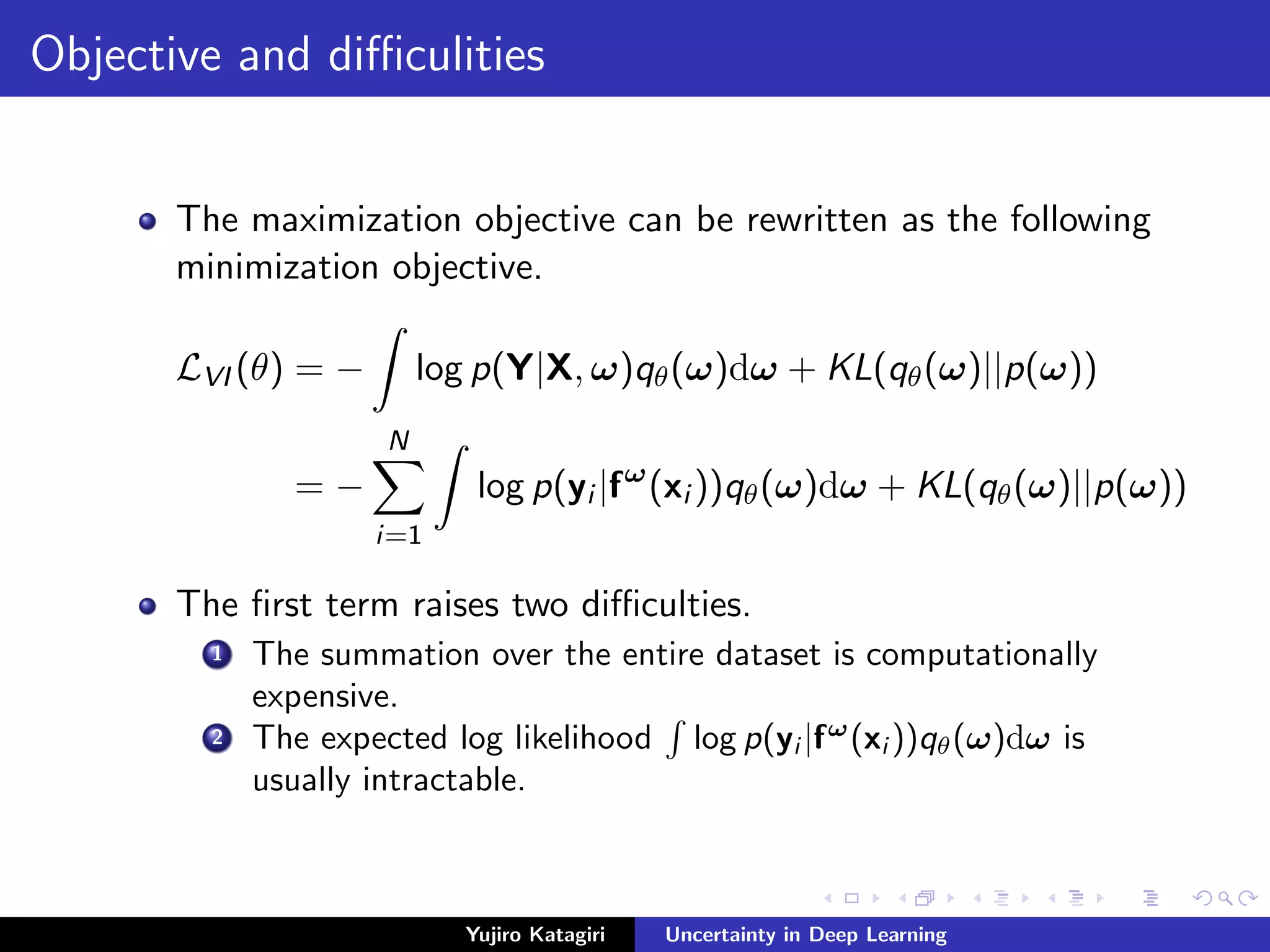

The predictive variance is estimated in regression as

Varq∗

θ (y∗|x∗)[y∗

] ≈ τ−1

I

+

1

T

T

t=1

f ˆωt

(x∗

)T

f ˆωt

(x∗

) − (

1

T

T

t=1

f ˆωt

(x∗

))T

(

1

T

T

t=1

f ˆωt

(x∗

))

where y∗ and f ˆωt (x∗) are row vectors and τ =

(1−pl )l2

l

2Nλl

is

found with grid-search over the hyper parameters (λl , ll , and

pl ) to minimize validation error.

The first term τ−1 captures the aleatoric uncertainty and the

following terms capture the epistemic uncertainty.

Note that the factor of 2 is removed (i.e., τ =

(1−pl )l2

l

Nλl

) when

we use mean-squared-error loss instead of Euclidean loss.

Yujiro Katagiri Uncertainty in Deep Learning](https://image.slidesharecdn.com/20180724uncertaintyindeeplearning-180823030413/75/Uncertainty-in-deep-learning-22-2048.jpg)
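The variance estimate can be computed directly from the $T$ sampled outputs. The sketch below assumes the outputs are already collected as a list of row vectors and that $p_l$ is the dropout probability; `predictive_covariance` and `model_precision` are illustrative names, and the numbers are made up.

```python
def model_precision(p_drop, length_scale, n_train, weight_decay):
    """tau = (1 - p) * l^2 / (2 * N * lambda), the Euclidean-loss form."""
    return (1.0 - p_drop) * length_scale ** 2 / (2.0 * n_train * weight_decay)


def predictive_covariance(samples, tau):
    """tau^{-1} I plus the sample second moment minus the outer product of the mean."""
    n_passes = len(samples)
    dim = len(samples[0])
    mean = [sum(col) / n_passes for col in zip(*samples)]
    cov = [[0.0] * dim for _ in range(dim)]
    for i in range(dim):
        for j in range(dim):
            second_moment = sum(s[i] * s[j] for s in samples) / n_passes
            epistemic = second_moment - mean[i] * mean[j]   # spread across passes
            aleatoric = 1.0 / tau if i == j else 0.0        # noise term on the diagonal
            cov[i][j] = aleatoric + epistemic
    return cov


# Two hypothetical forward passes with a two-dimensional output.
samples = [[1.0, 2.0], [3.0, 2.0]]
cov = predictive_covariance(samples, tau=2.0)
tau_example = model_precision(p_drop=0.5, length_scale=1.0, n_train=100, weight_decay=0.01)
print(cov, tau_example)
```

In this toy case the second output is identical in both passes, so its variance reduces to the noise term $\tau^{-1}$ alone, while the first output also picks up the spread between the passes.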

![Estimation of uncertainty in classification](https://image.slidesharecdn.com/20180724uncertaintyindeeplearning-180823030413/75/Uncertainty-in-deep-learning-23-2048.jpg)

The mutual information is estimated in classification as

$$
\begin{aligned}
I[y^*, \omega \mid x^*, X, Y] &= H[y^* \mid x^*, X, Y] - \mathbb{E}_{p(\omega \mid X, Y)}\big[H[y^* \mid x^*, \omega]\big] \\
  &\approx -\sum_{d} \Big(\frac{1}{T} \sum_{t} p_d^{\hat{\omega}_t}\Big) \log\Big(\frac{1}{T} \sum_{t} p_d^{\hat{\omega}_t}\Big) - \frac{1}{T} \sum_{t} \Big(-\sum_{d} p_d^{\hat{\omega}_t} \log p_d^{\hat{\omega}_t}\Big)
\end{aligned}
$$

where

$$
p_d^{\hat{\omega}_t} = \frac{\exp\big(f_d^{\hat{\omega}_t}(x^*)\big)}{\sum_{d'} \exp\big(f_{d'}^{\hat{\omega}_t}(x^*)\big)}.
$$

This captures the epistemic uncertainty.

In the context of active learning, this quantity is called BALD (Bayesian active learning by disagreement).
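The mutual information estimate is the entropy of the averaged class probabilities minus the average entropy of the individual passes. The sketch below assumes the per-pass softmax probabilities are already available as a list of lists; the function names and the two toy inputs are illustrative.

```python
import math


def entropy(probs):
    """Shannon entropy in nats, treating 0 * log(0) as 0."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def mutual_information(prob_samples):
    """Entropy of the mean prediction minus the mean entropy of each pass."""
    n_passes = len(prob_samples)
    mean_probs = [sum(col) / n_passes for col in zip(*prob_samples)]
    predictive_entropy = entropy(mean_probs)
    expected_entropy = sum(entropy(p) for p in prob_samples) / n_passes
    return predictive_entropy - expected_entropy


# Passes that agree on an uncertain prediction: no disagreement.
agreeing = [[0.5, 0.5], [0.5, 0.5]]
# Passes that are each confident but contradict one another: maximal disagreement.
disagreeing = [[1.0, 0.0], [0.0, 1.0]]

mi_agree = mutual_information(agreeing)
mi_disagree = mutual_information(disagreeing)
print(mi_agree, mi_disagree)
```

Both toy inputs have the same averaged prediction, so the predictive entropy alone cannot tell them apart. Only the second case has nonzero mutual information, which is why the quantity is read as a measure of disagreement between sampled models.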