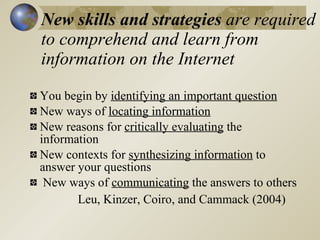

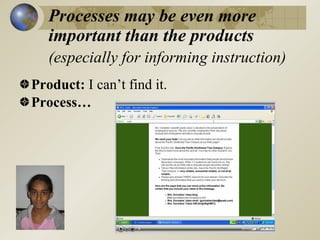

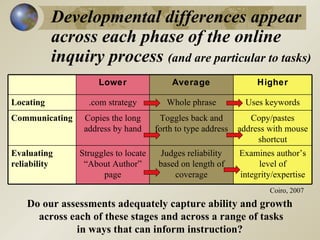

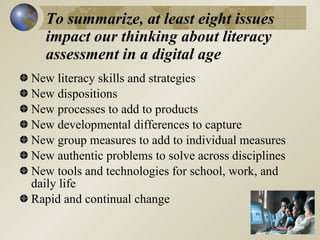

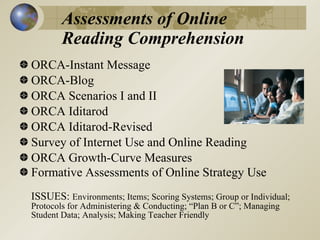

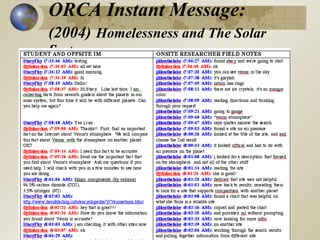

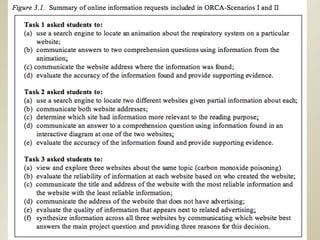

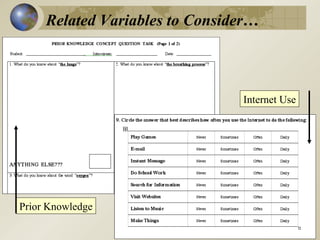

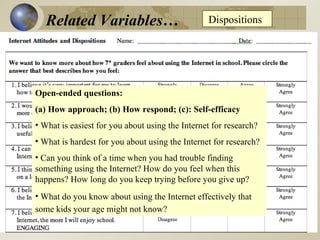

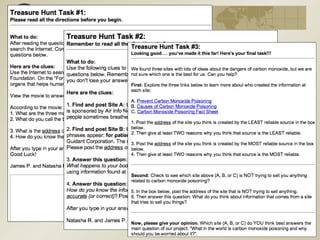

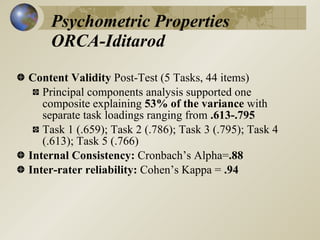

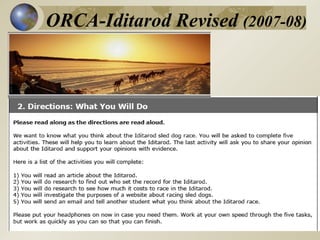

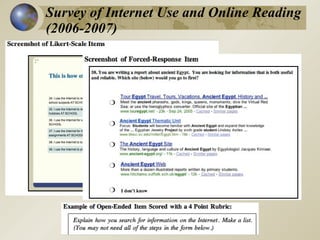

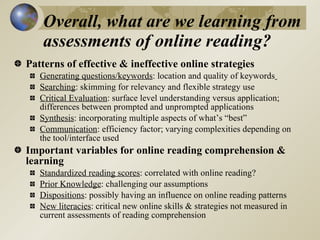

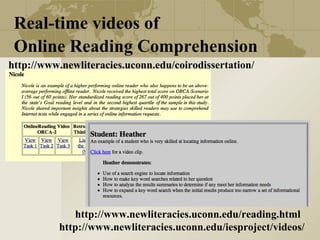

The document discusses how literacy assessment is changing in the context of the internet, emphasizing that online reading comprehension requires new skills and dispositions. It identifies several challenges in assessing online literacies, including the need for group measures, interdisciplinary problem-solving, and the integration of real-world tools. The author argues that assessment methods must be adapted continuously to keep pace with rapid changes in digital environments and technologies.