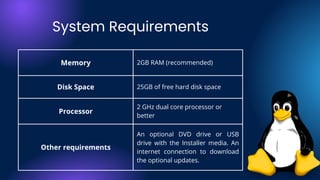

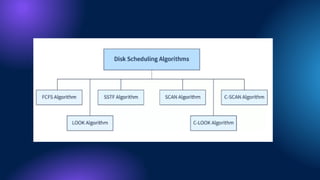

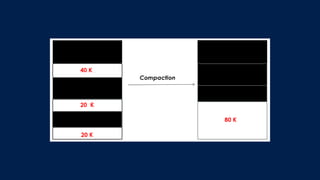

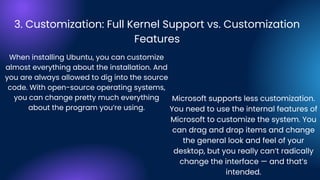

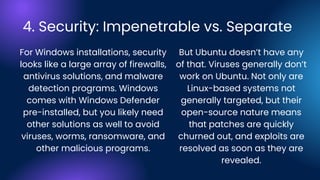

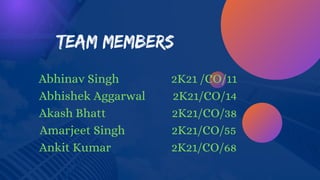

This document contains a list of team members who worked on a project about the Ubuntu operating system. It includes an acknowledgement section thanking their professor for assistance. The document then outlines the topics that will be covered, including an introduction to operating systems, the history of Ubuntu, CPU and disk scheduling, security features, memory management, and system requirements.

![Ubuntu 18.04 LTS (Bionic Beaver)

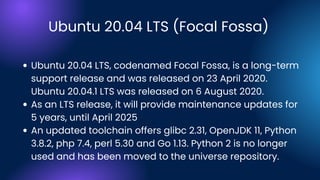

Ubuntu 18.04 LTS Bionic Beaver[296] is a long-term

support version that was released on 26 April 2018.

Ubuntu 18.04 LTS introduced new features, such as colour

emoticons a new To-Do application preinstalled in the

default installation, and added the option of a "Minimal

Install" to the Ubuntu 18.04 LTS installer, which only installs

a web browser and system tools

This release employed Linux kernel version 4.15, which

incorporated a CPU controller for the cgroup v2 interface](https://image.slidesharecdn.com/ubuntu12-230302085334-97470d92/85/UBUNTU-1-2-pdf-17-320.jpg)