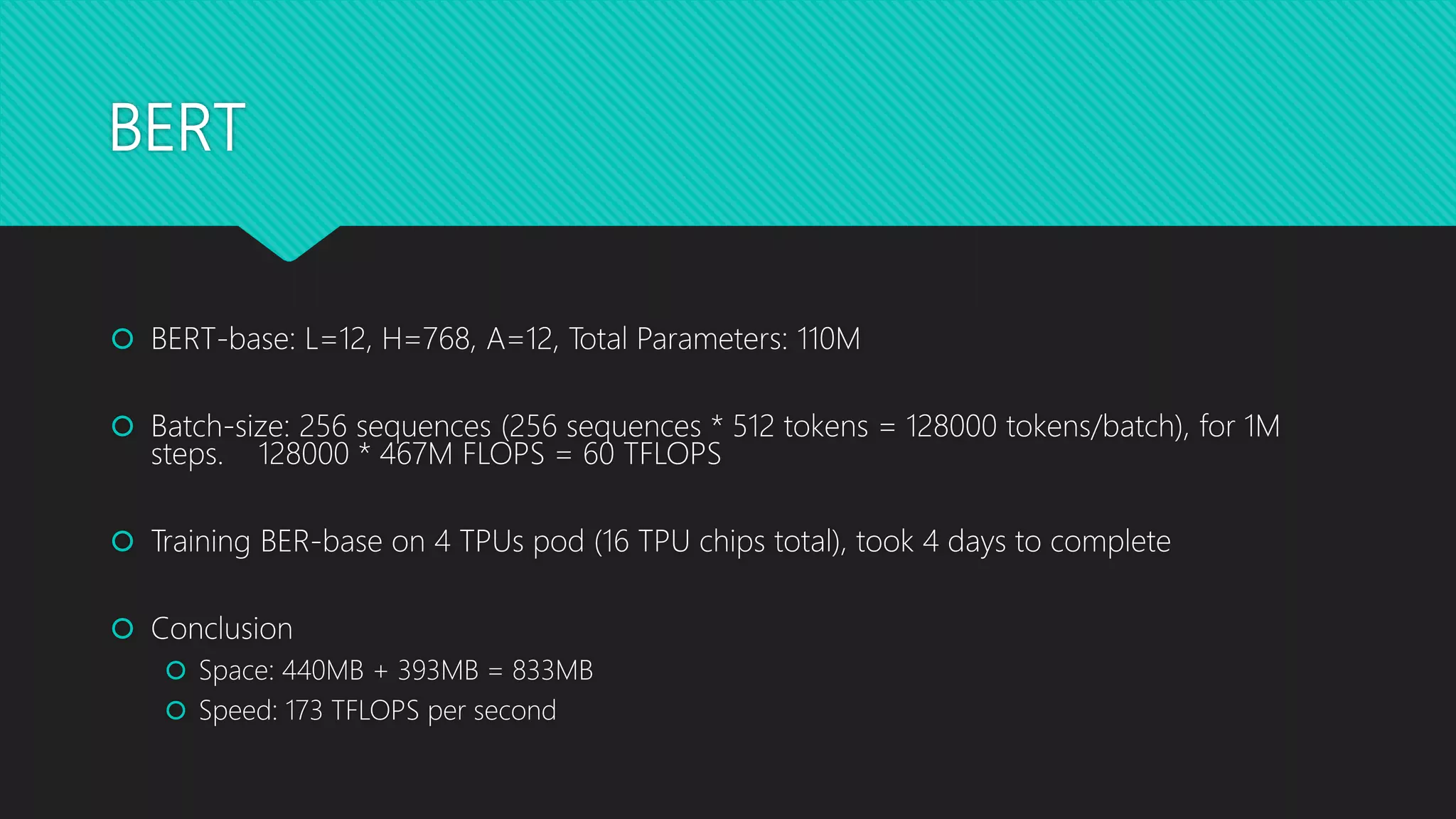

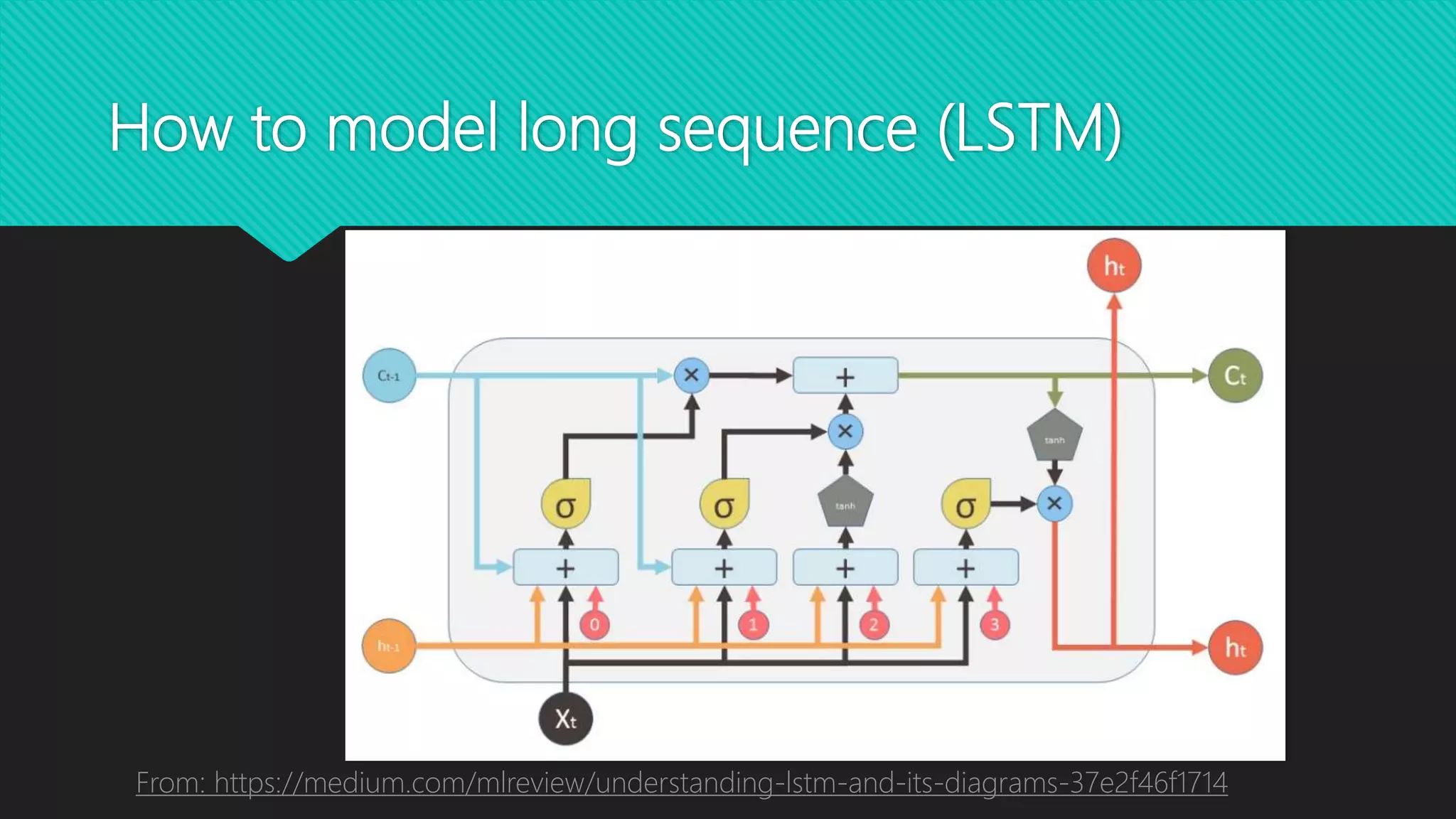

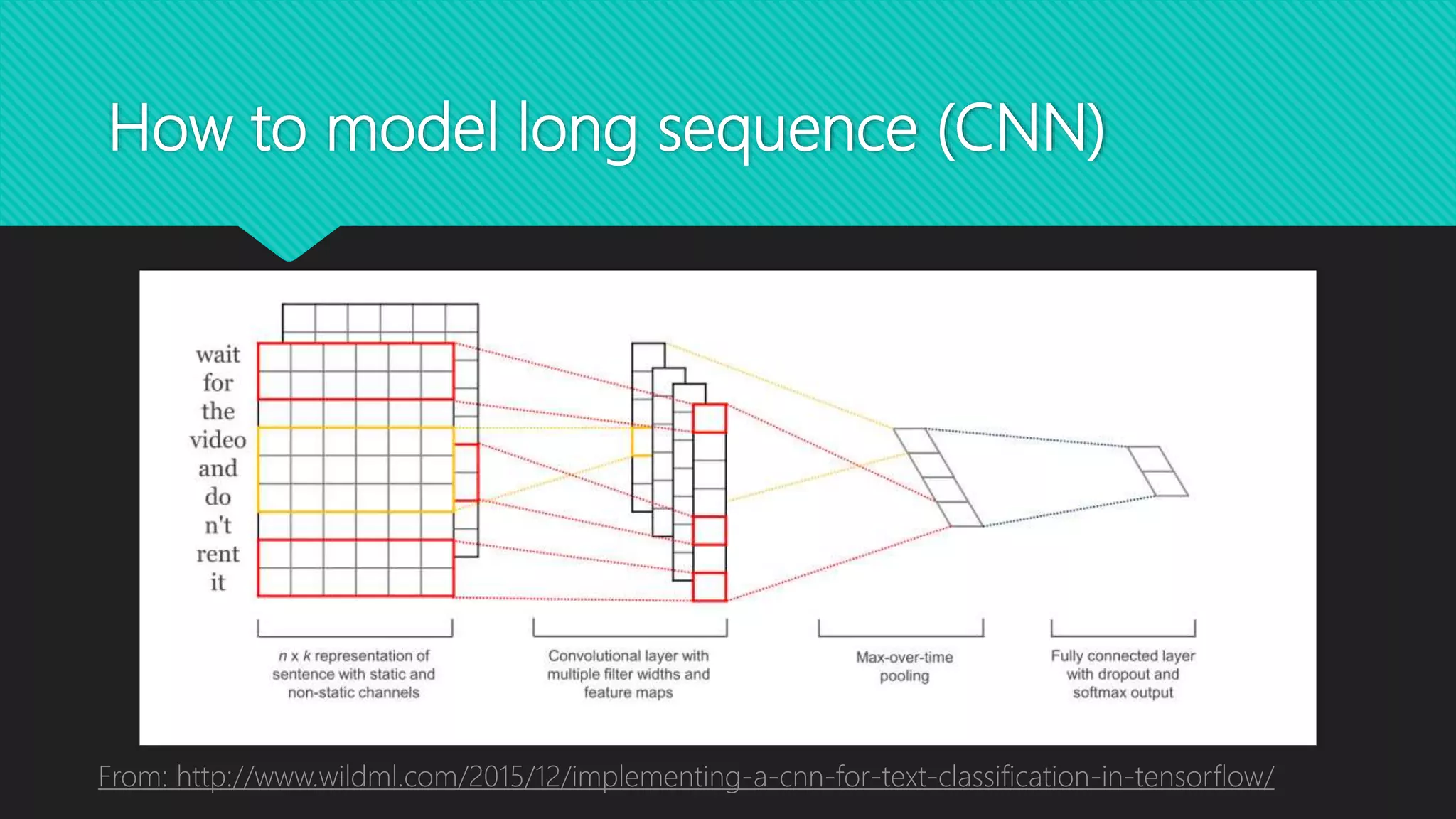

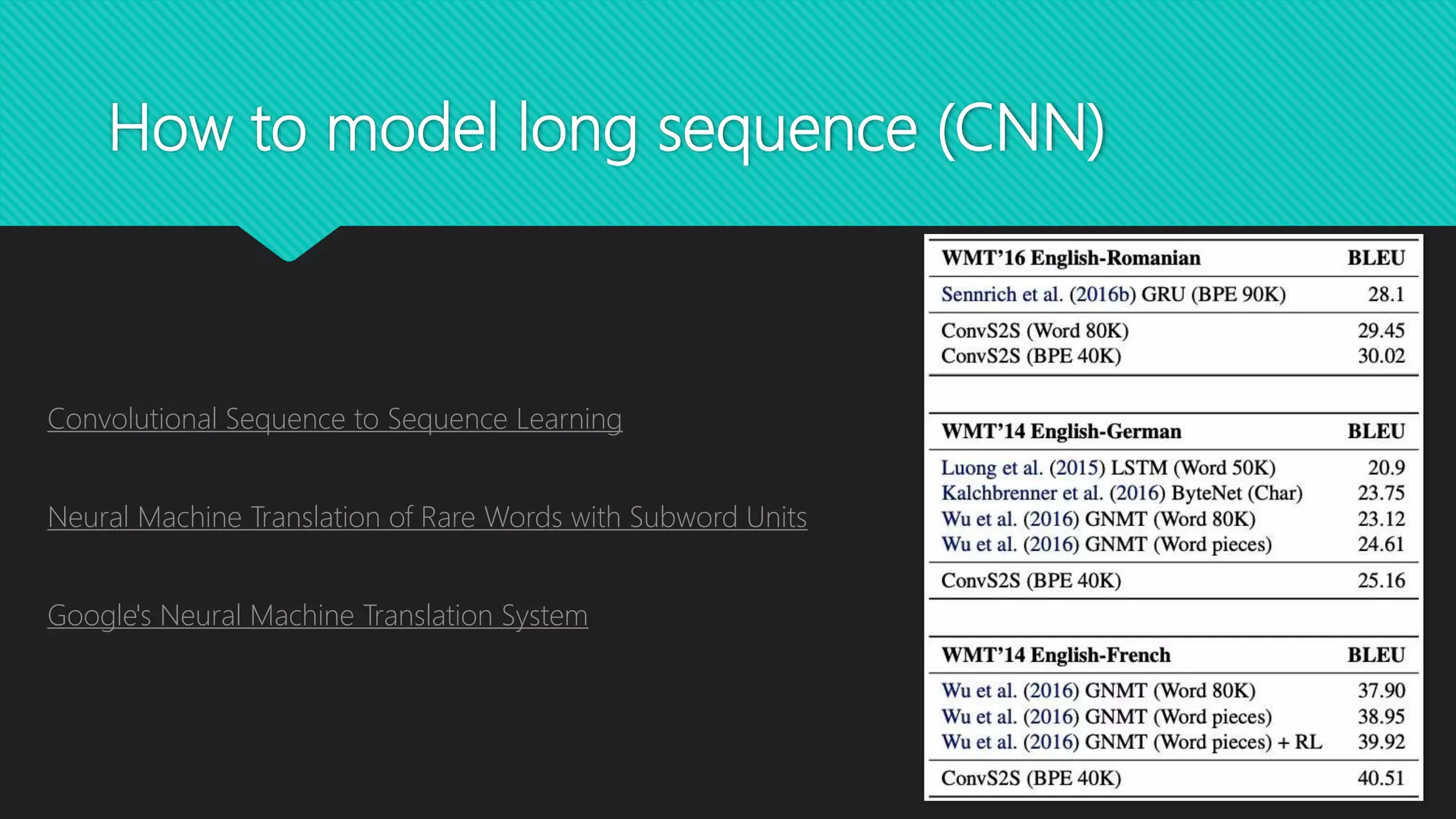

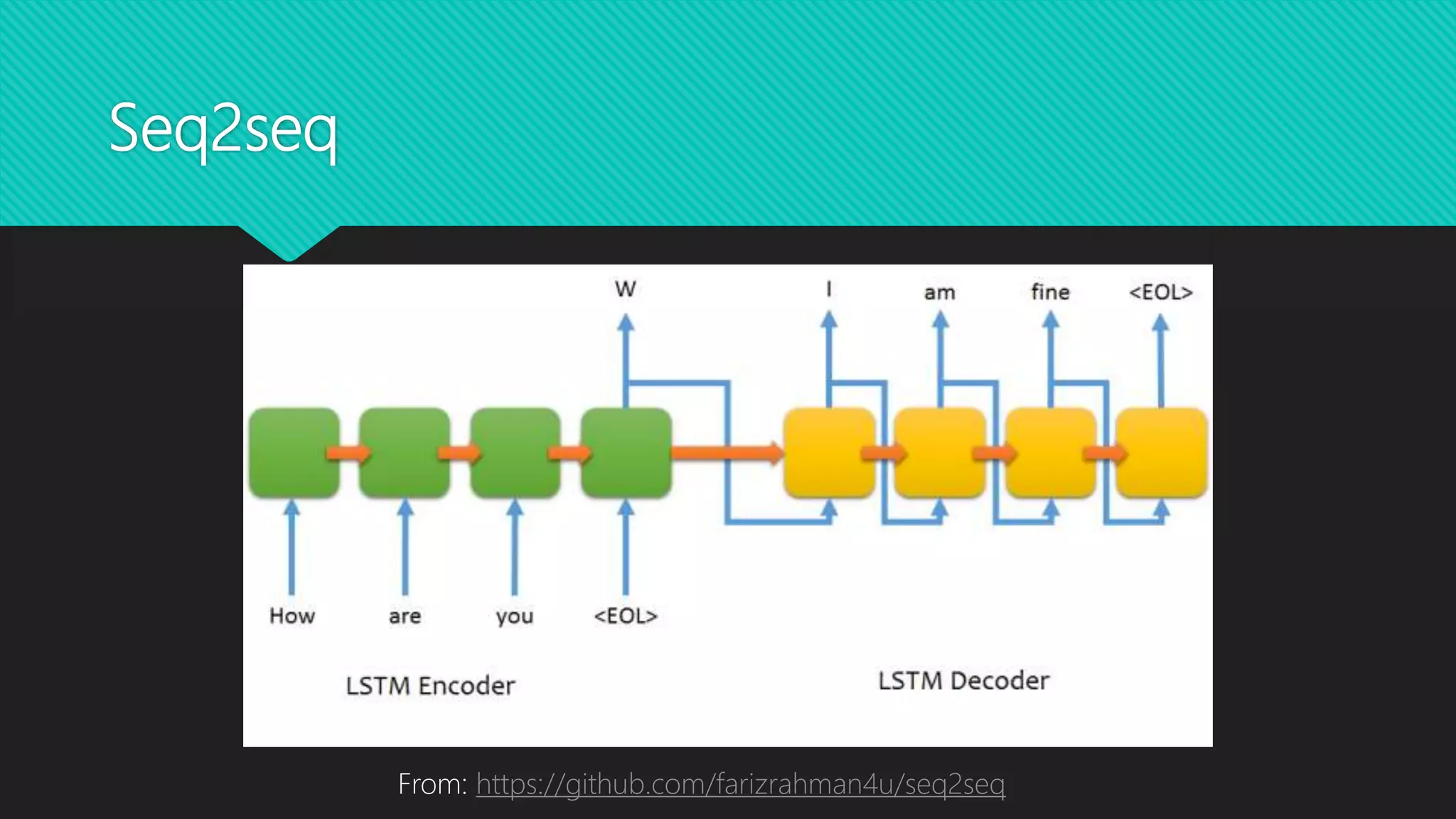

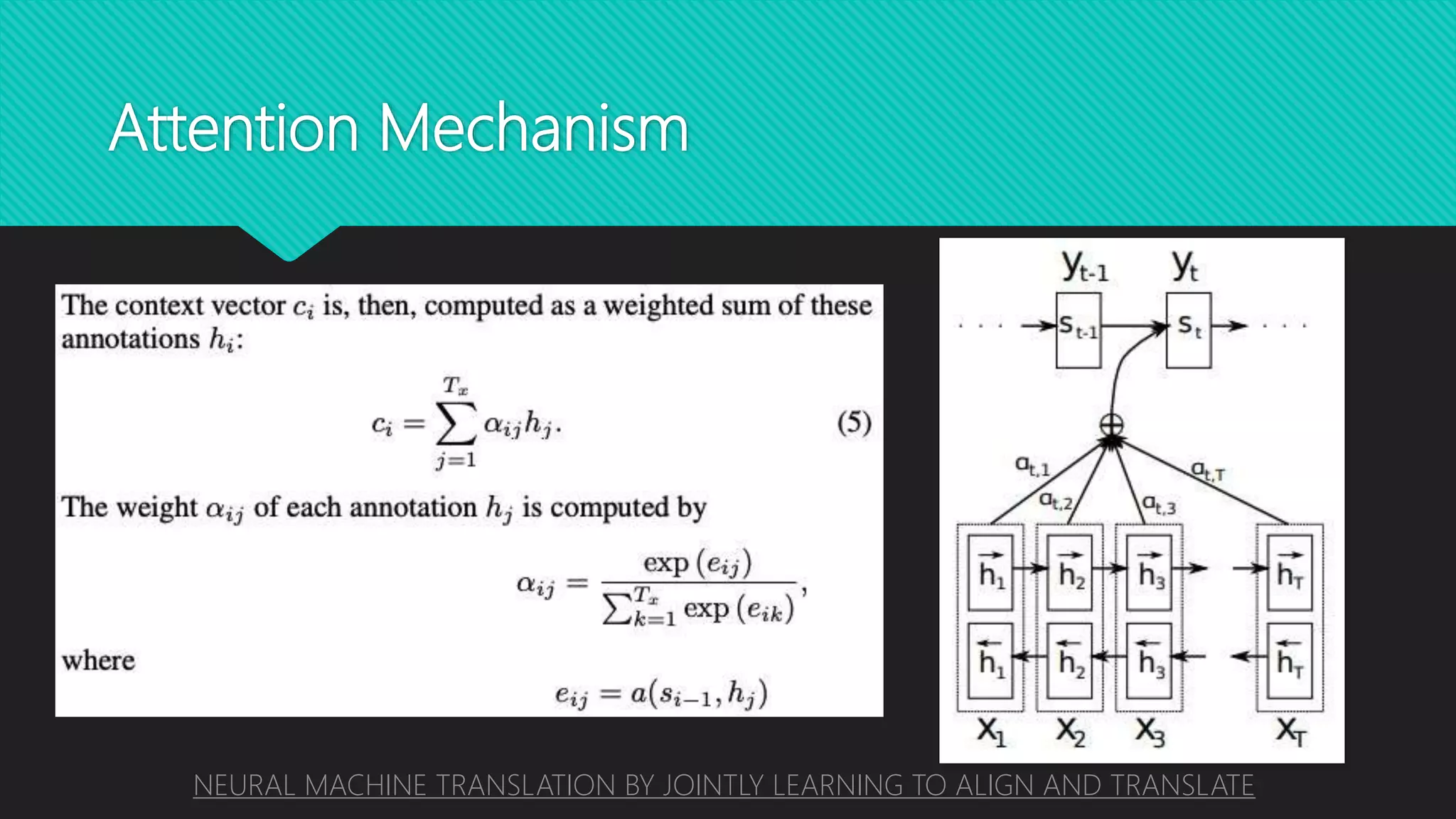

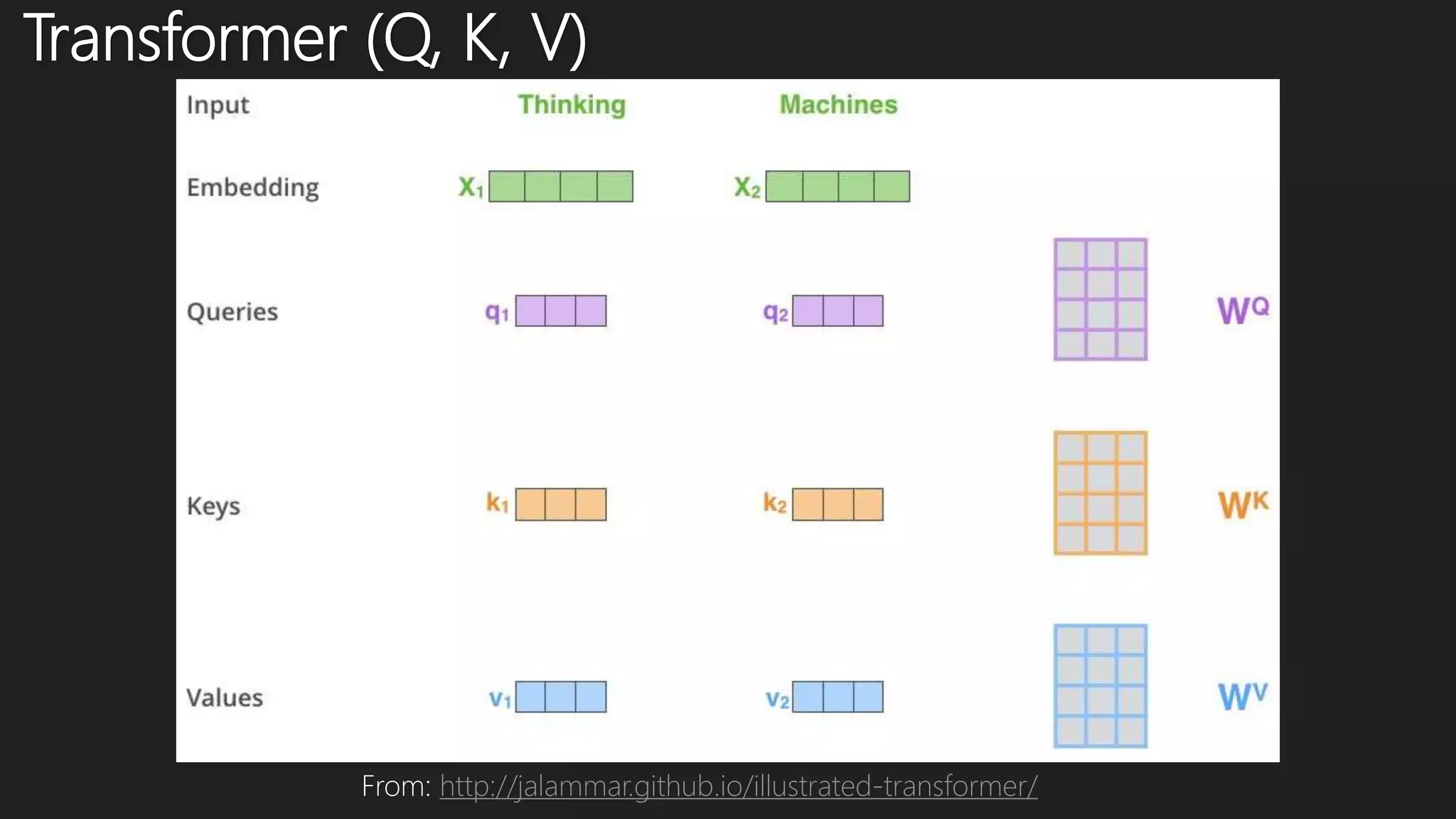

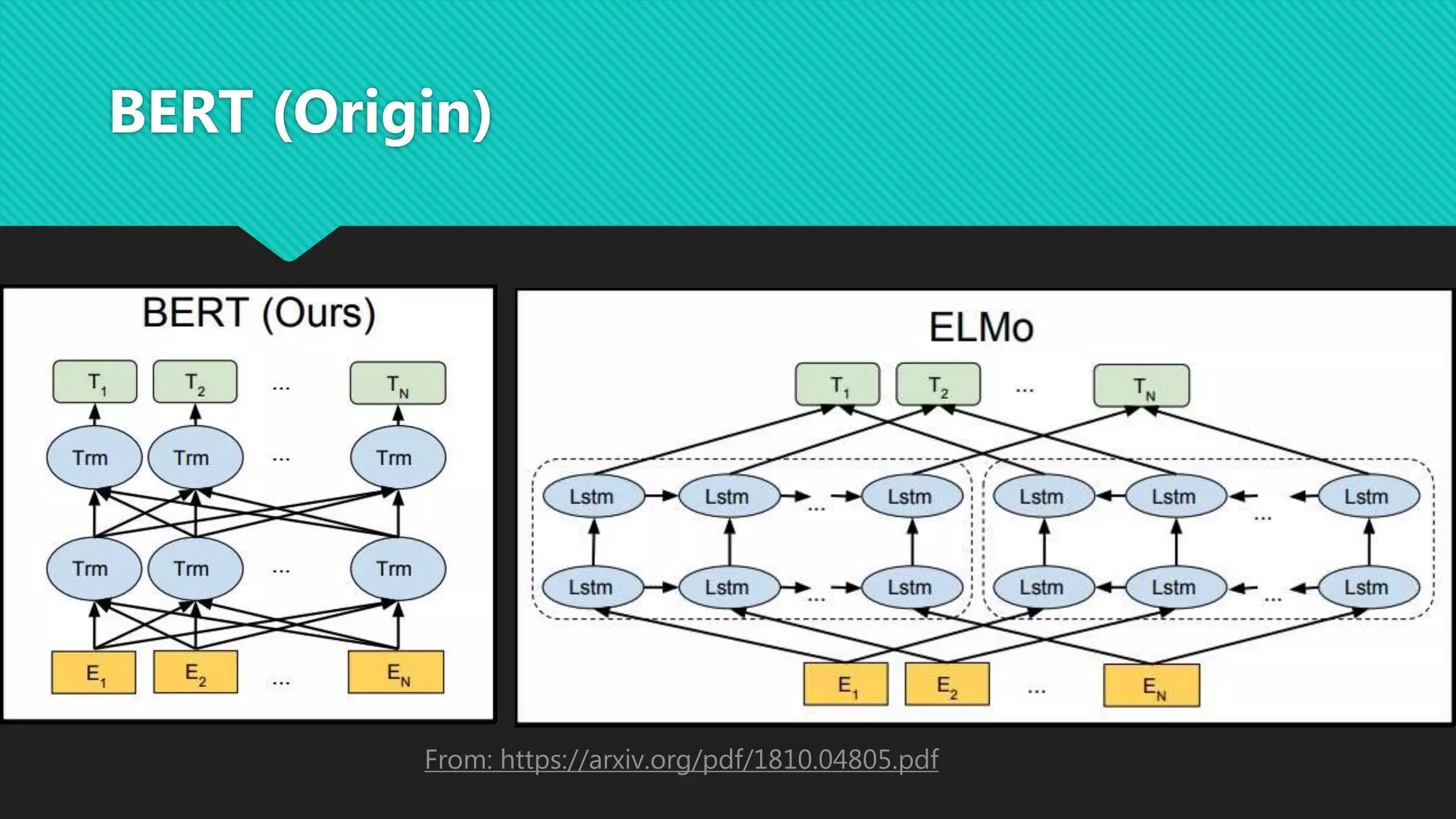

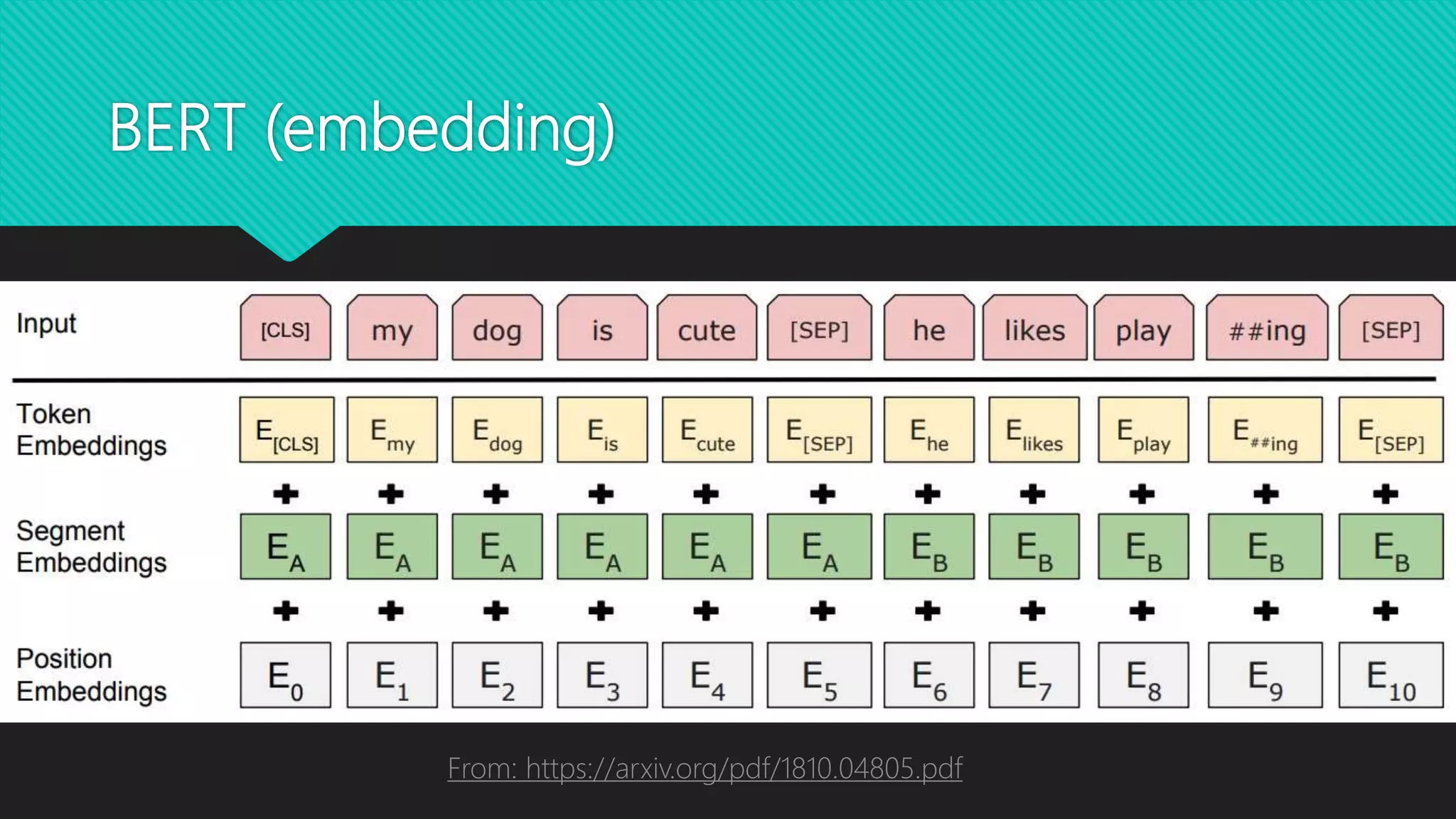

This document surveys methods for modeling long sequences: LSTMs, CNNs, attention mechanisms, and Transformers. It covers the Transformer architecture, including multi-head attention, feed-forward layers, and computational complexity. BERT is introduced as a Transformer-based model for natural language understanding tasks, along with its training methodology and the resources required to train BERT-base.

![BERT (training tasks): Masked Language Model (masked word replaced with the [MASK] token); Next Sentence Prediction](https://image.slidesharecdn.com/ibtd1zwnqggum1eu86h0-signature-75525836ffdd7653e07cc98c4424e2a1474784c088484fc6c26d5e97181bd851-poli-200806110847/75/Transformer-and-BERT-16-2048.jpg)
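
The Masked Language Model task named on this slide can be illustrated with a minimal sketch. The slide only states that a masked word is replaced with the `[MASK]` token; the function name `mask_tokens`, the whitespace tokenization, the default `mask_prob=0.15`, and the `seed` parameter are assumptions made for illustration, not details taken from the slides.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Replace randomly chosen tokens with [MASK].

    Returns (masked_tokens, labels). labels[i] holds the original token
    at every masked position and None elsewhere, so a model is trained
    to predict only the hidden words.
    """
    rng = random.Random(seed)  # seeded for reproducible examples (assumption)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)  # hide the word from the model
            labels.append(tok)         # keep it as the prediction target
        else:
            masked.append(tok)
            labels.append(None)        # position is not scored
    return masked, labels


if __name__ == "__main__":
    sentence = "the quick brown fox jumps over the lazy dog".split()
    masked, labels = mask_tokens(sentence, mask_prob=0.3, seed=1)
    print(masked)
    print(labels)
```

This sketch always substitutes `[MASK]` for a selected token. The published BERT recipe also leaves some selected tokens unchanged or swaps them for random tokens, which is omitted here for brevity.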