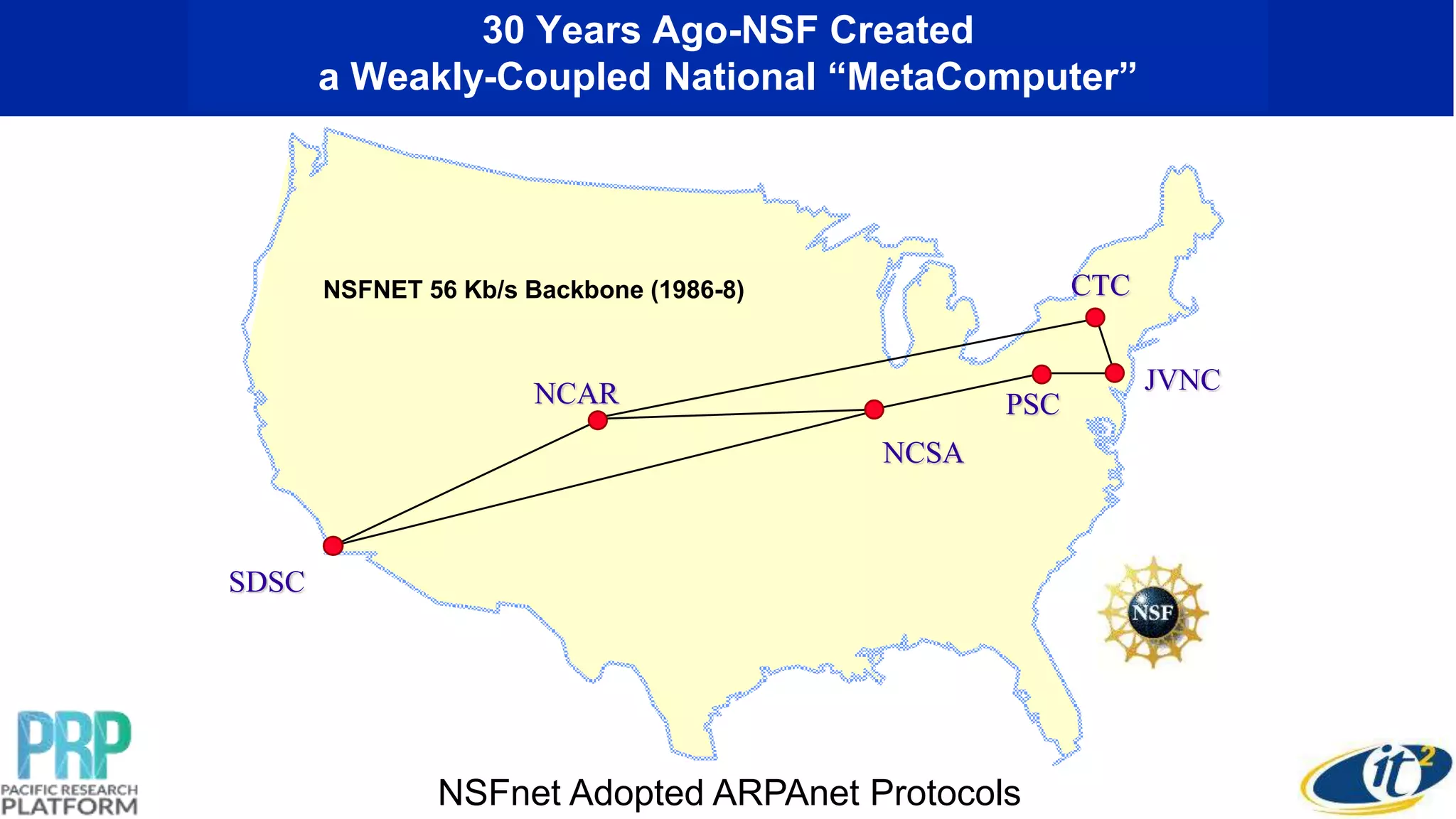

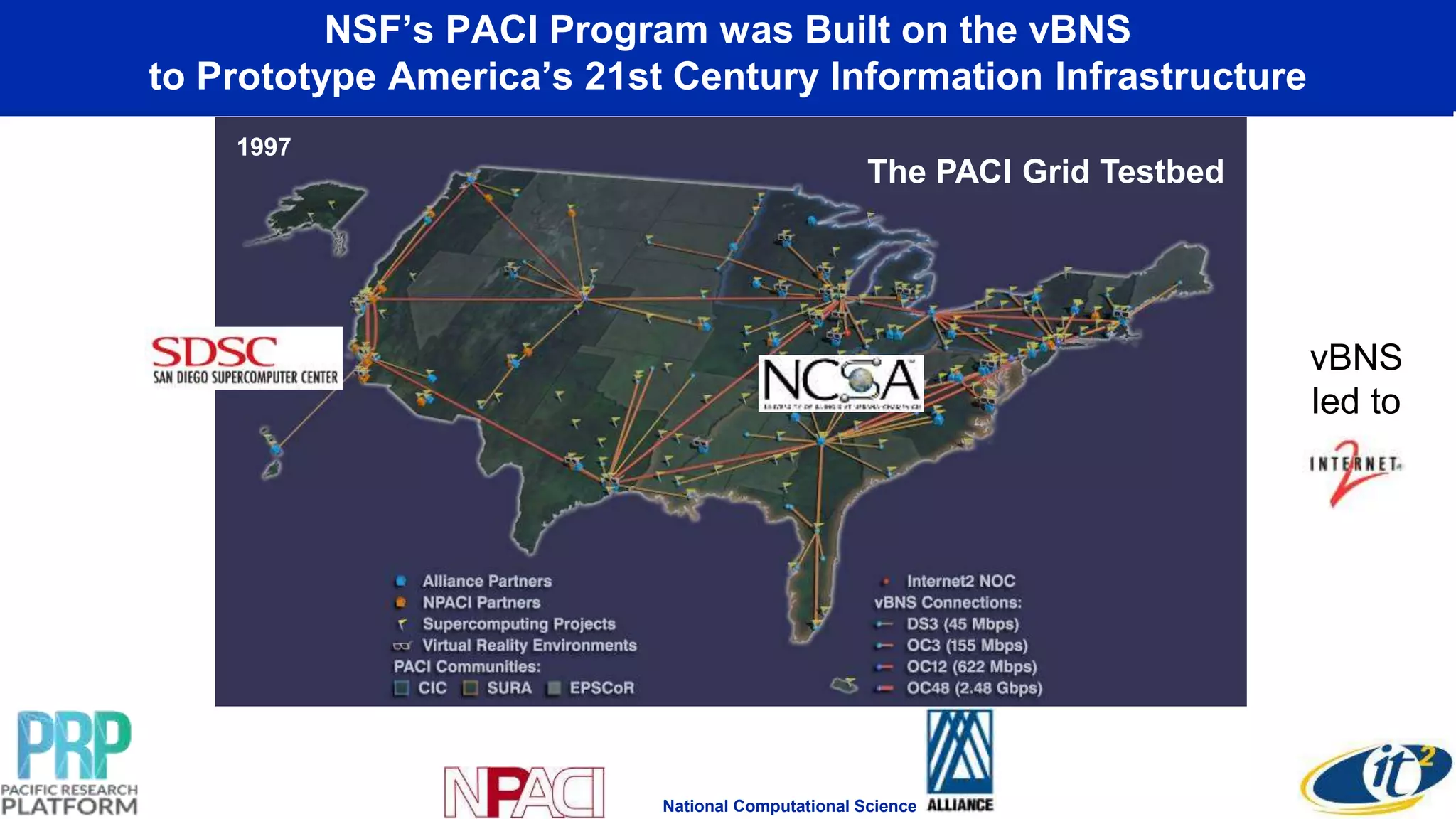

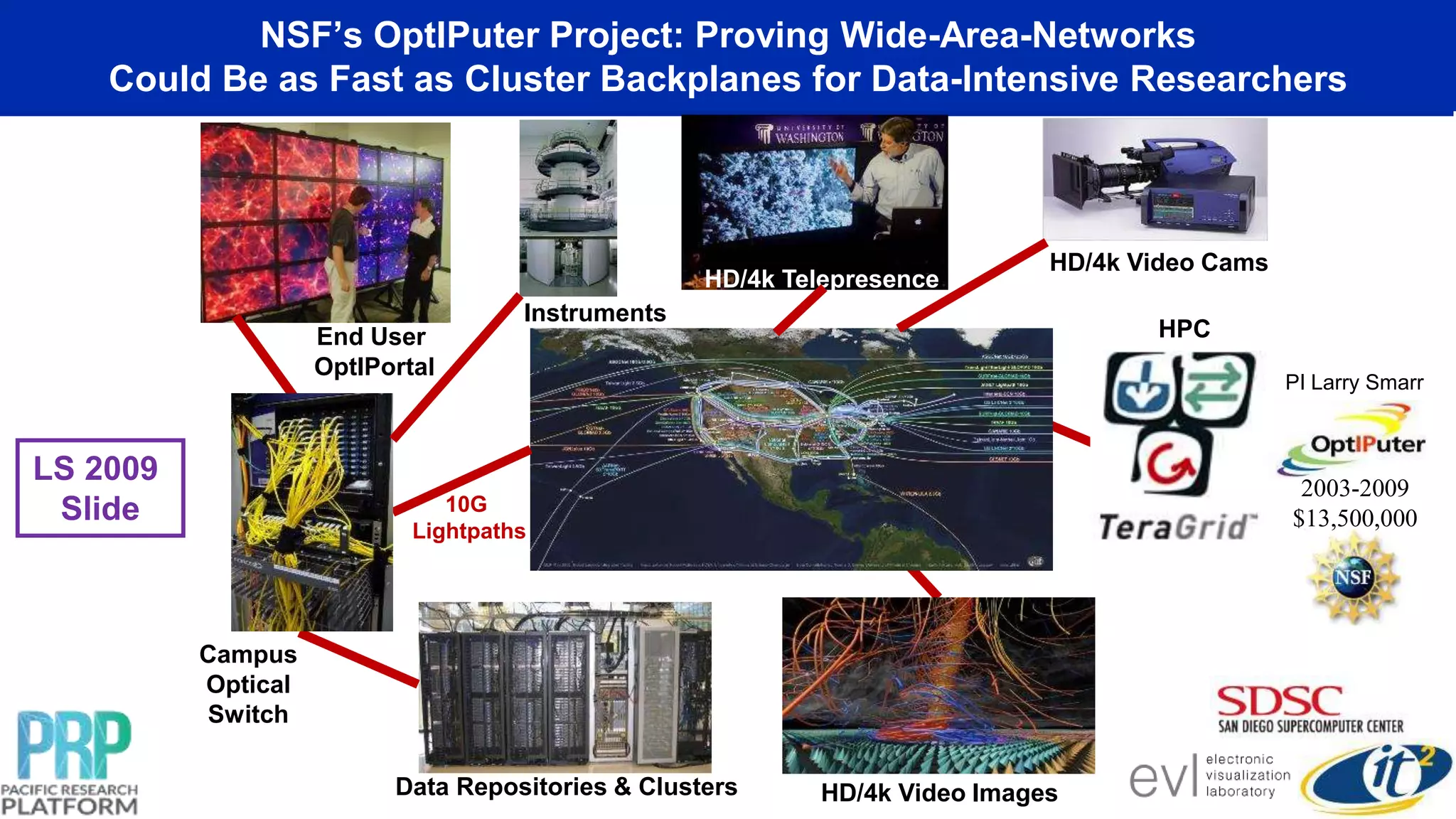

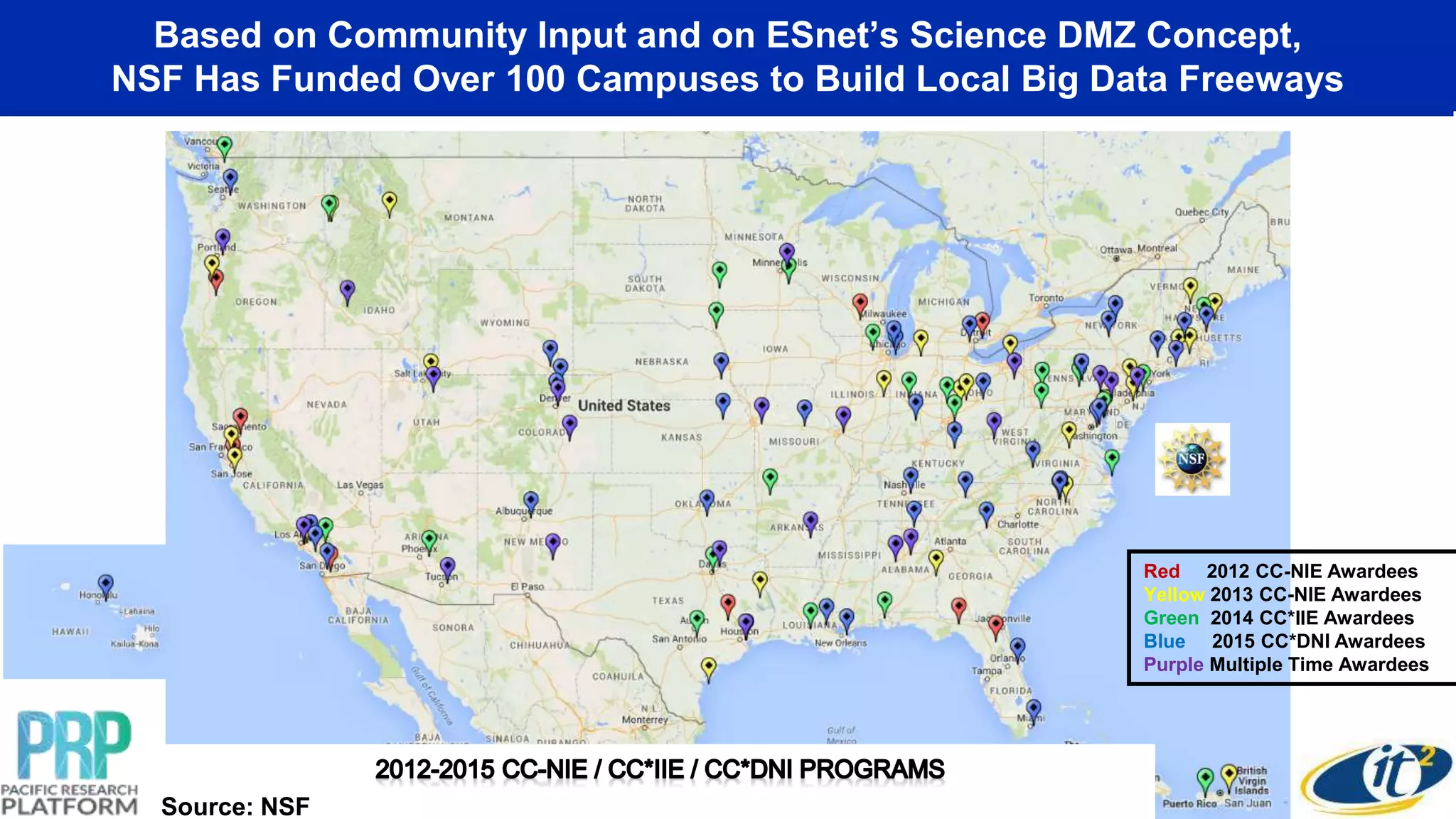

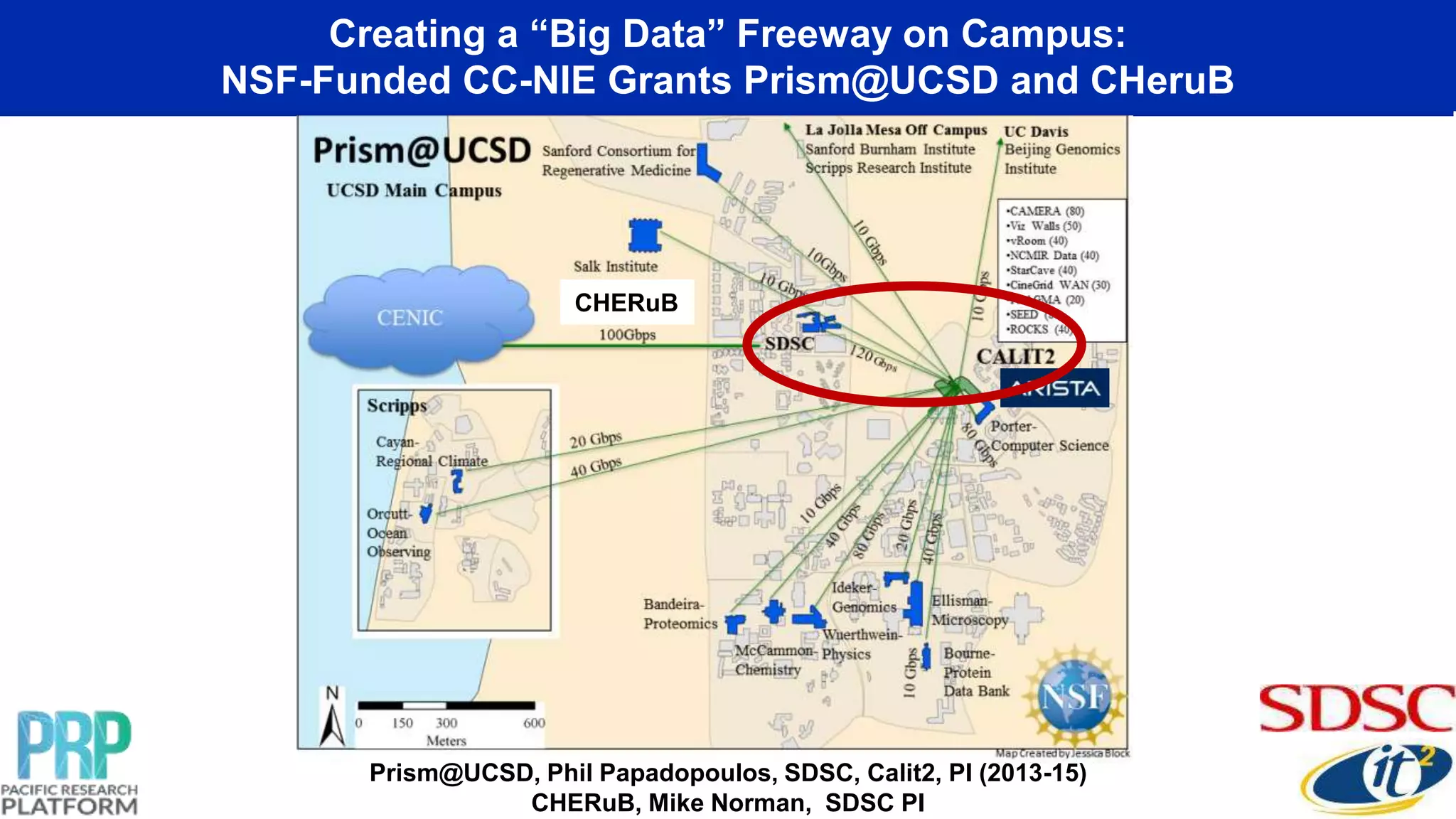

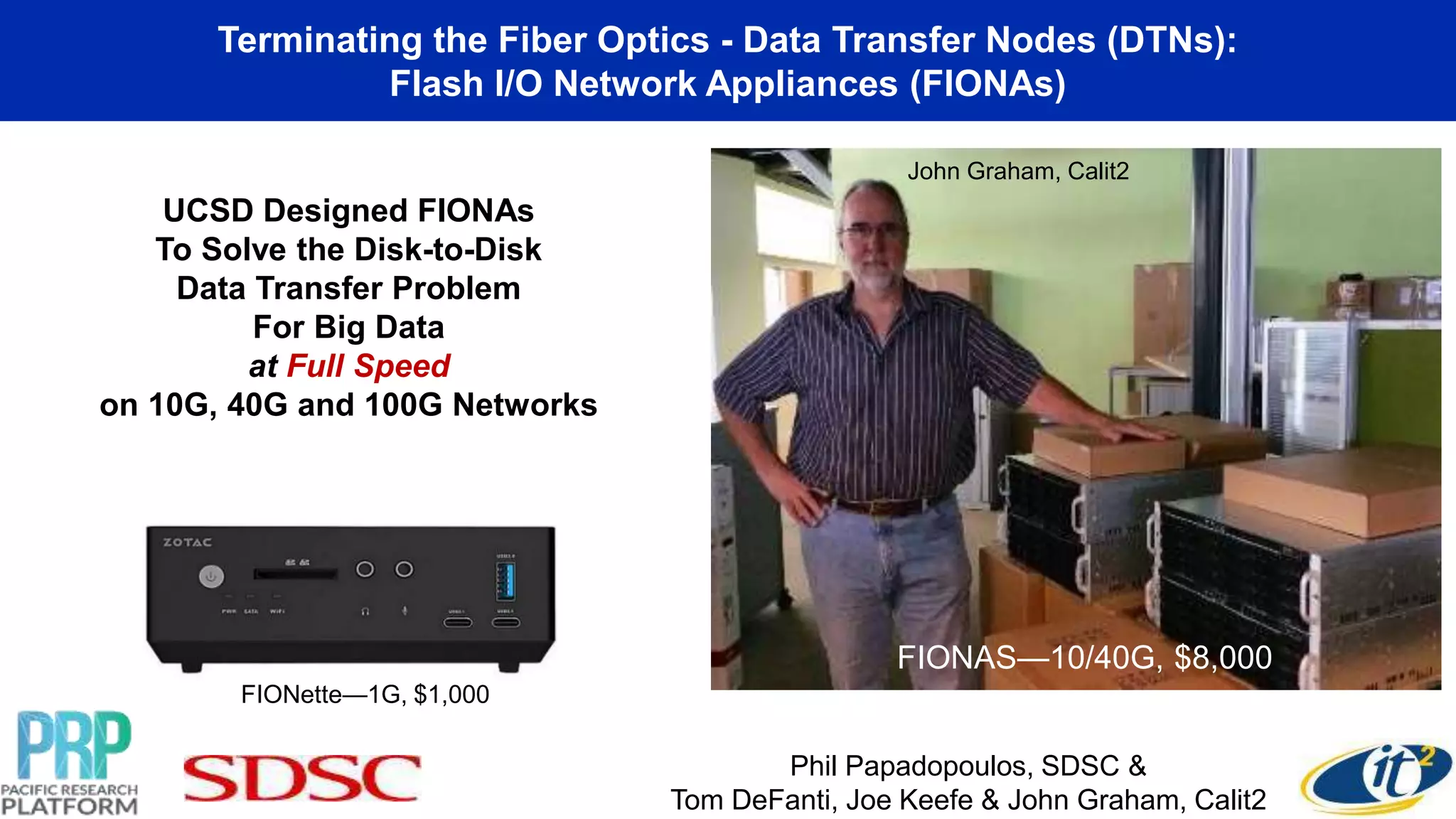

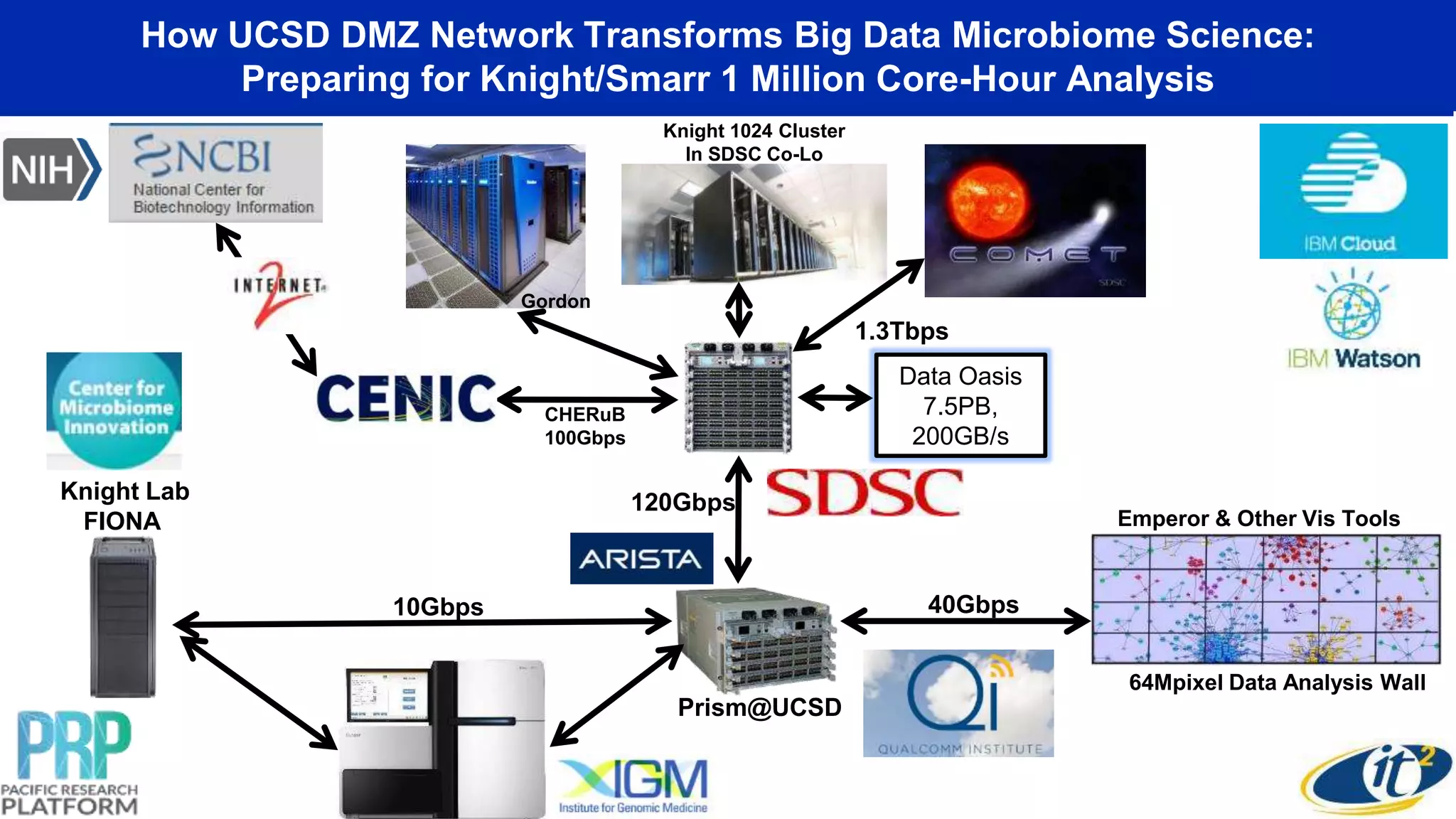

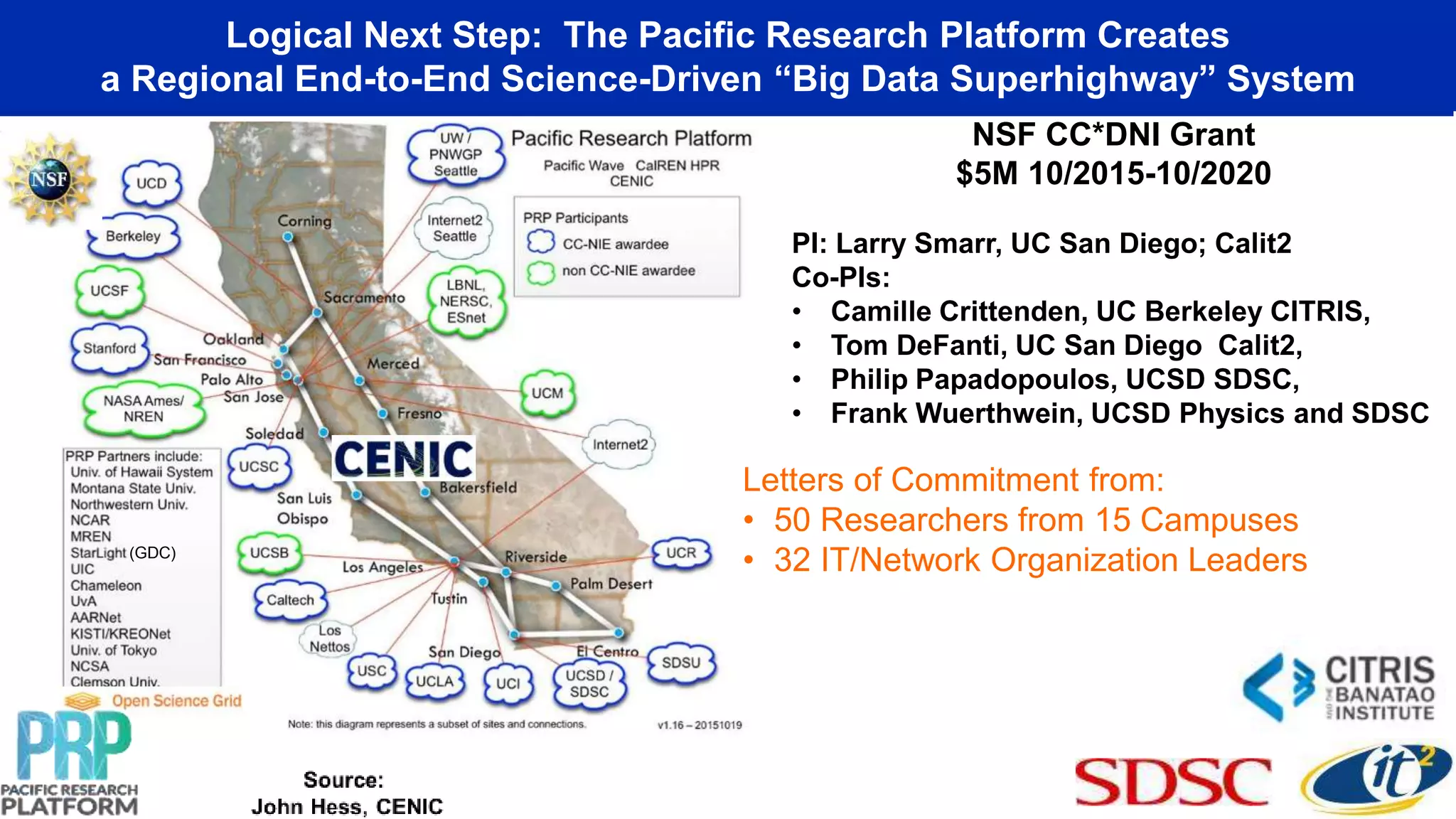

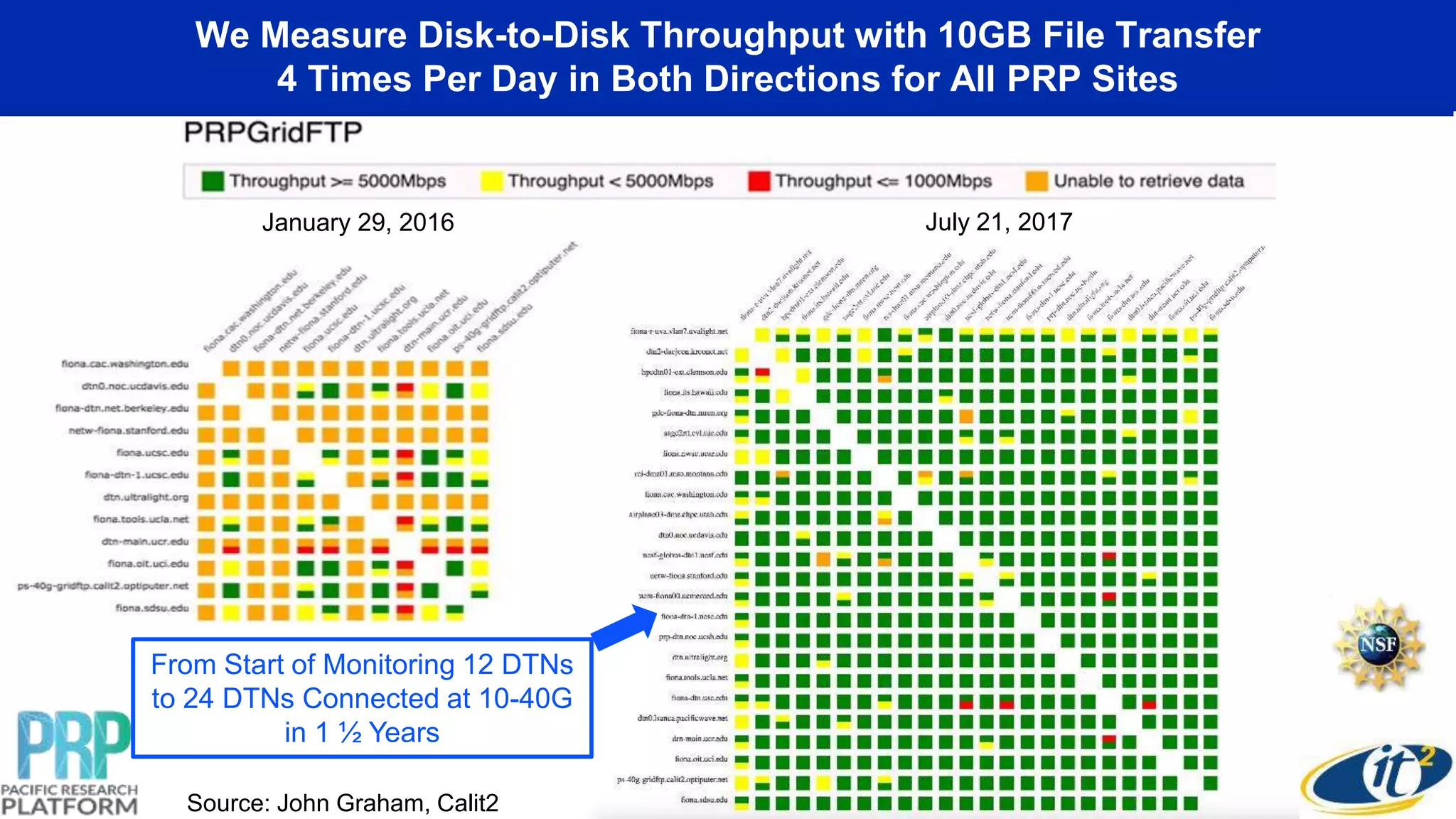

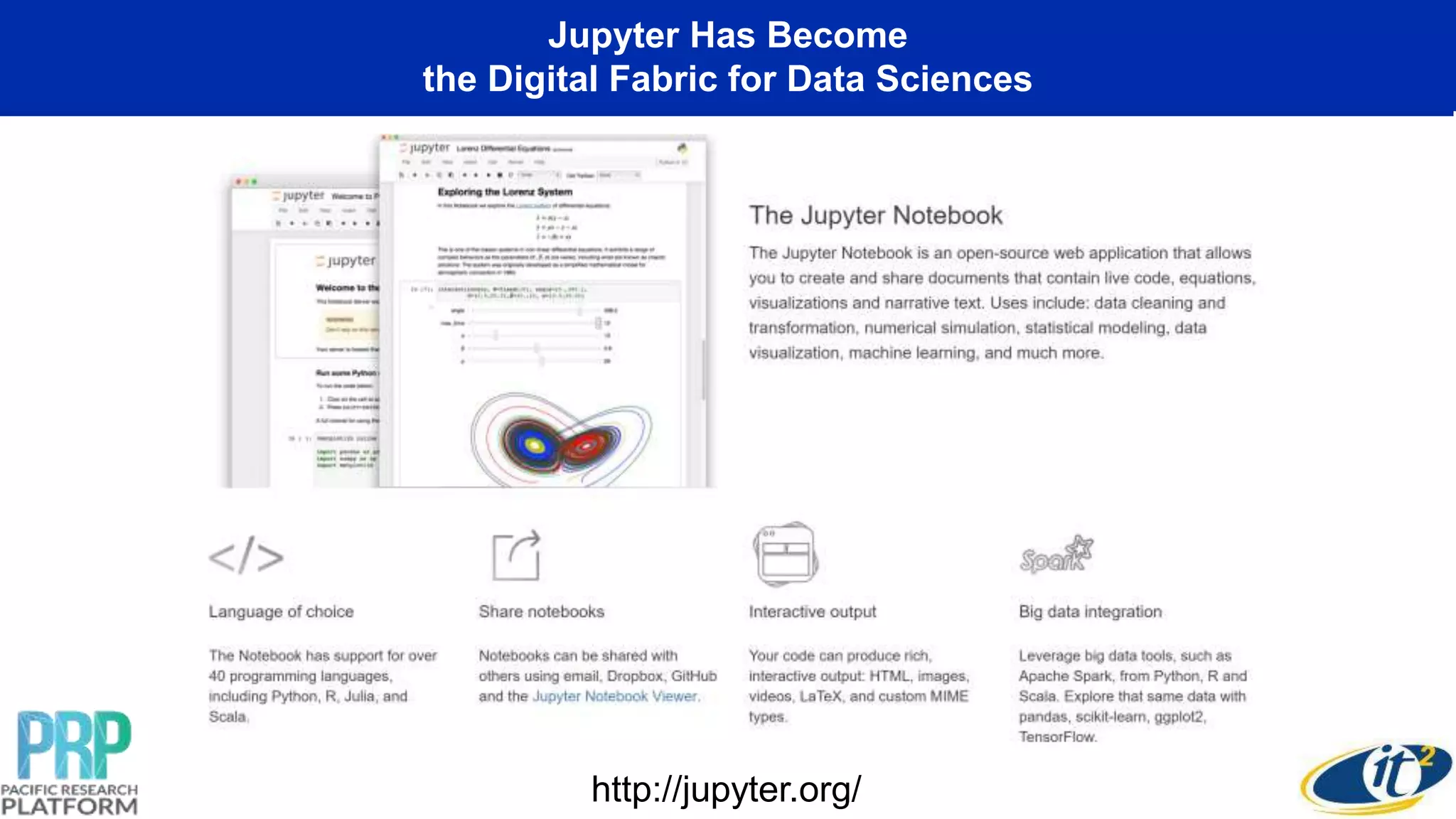

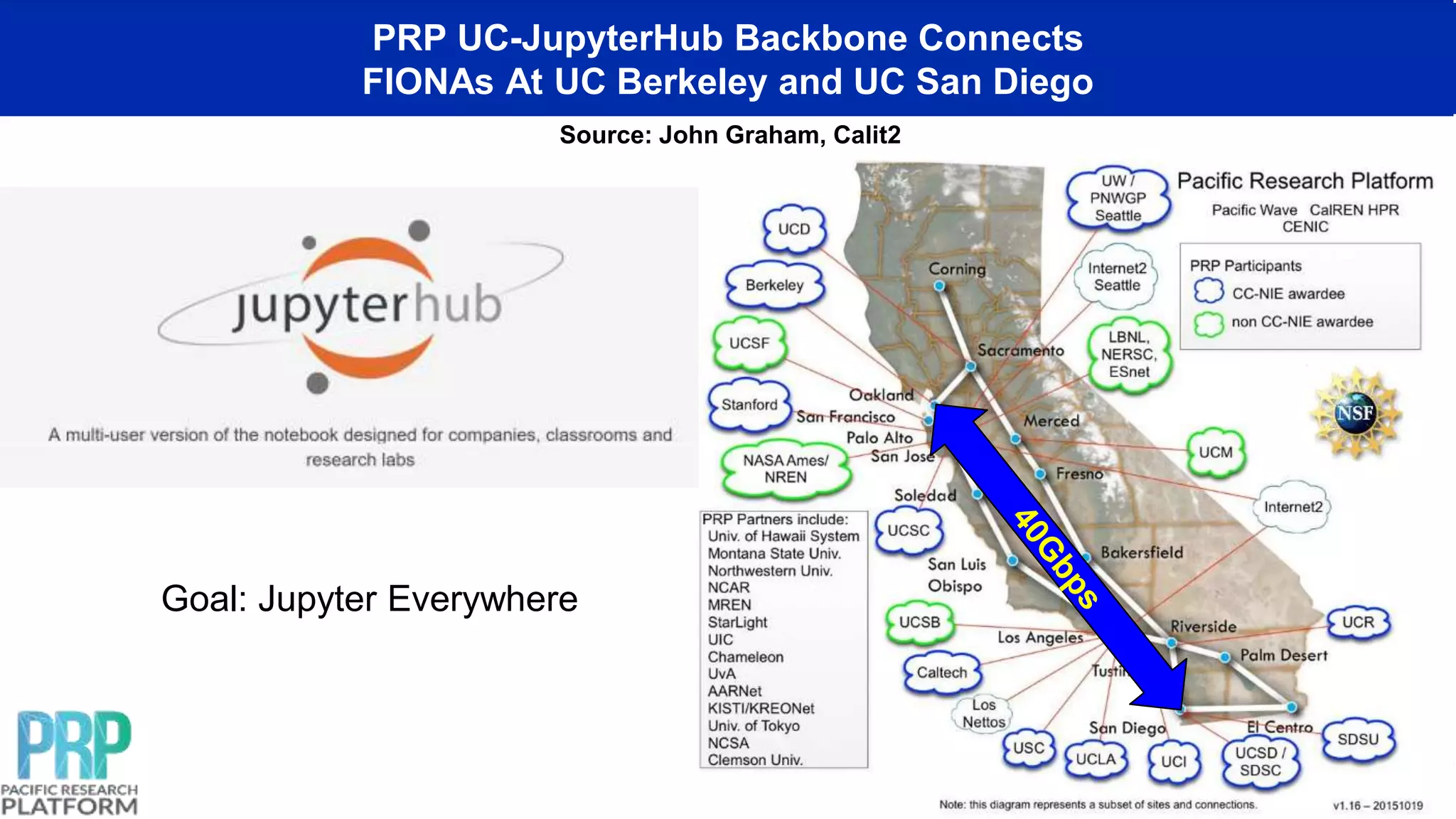

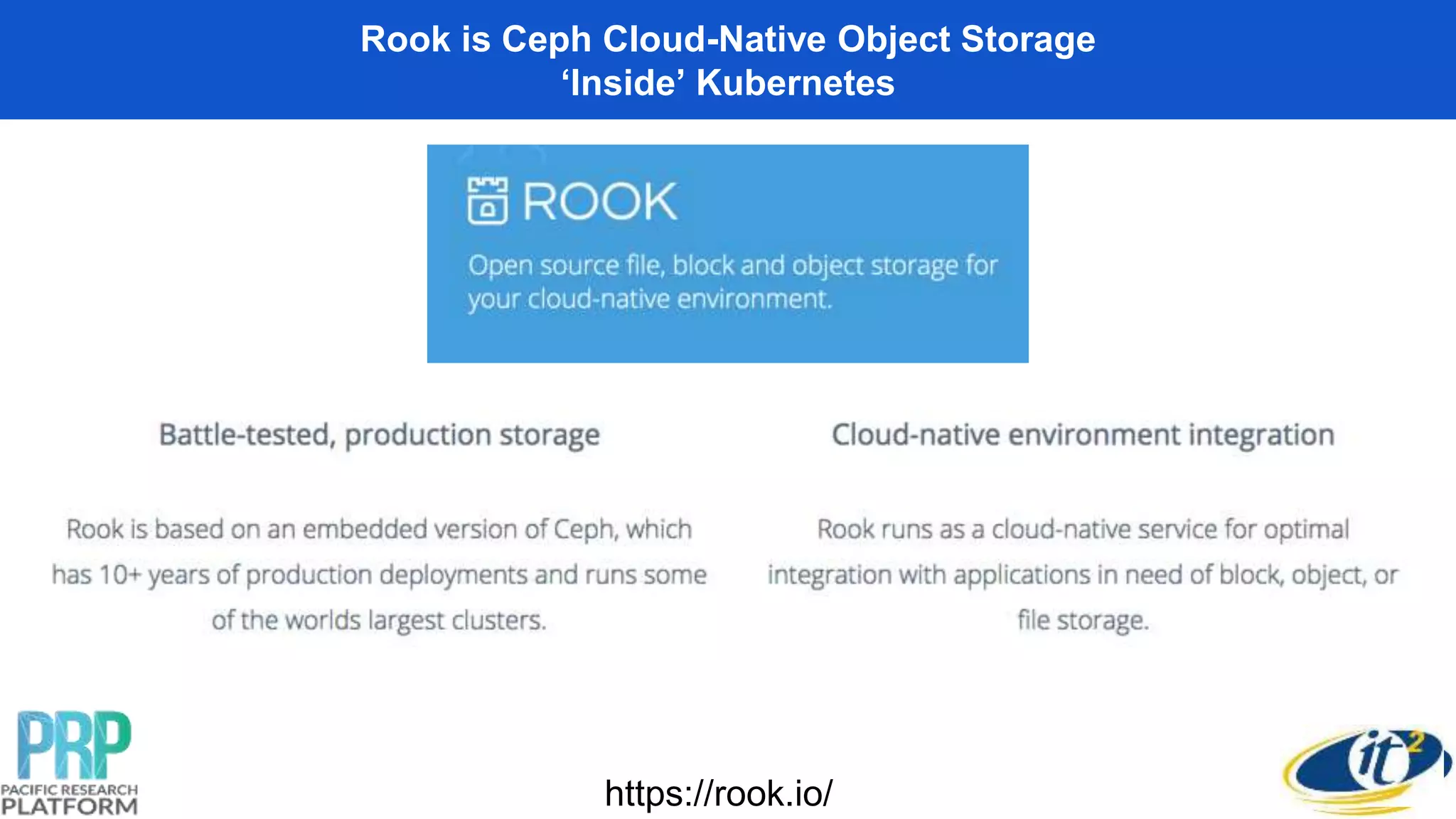

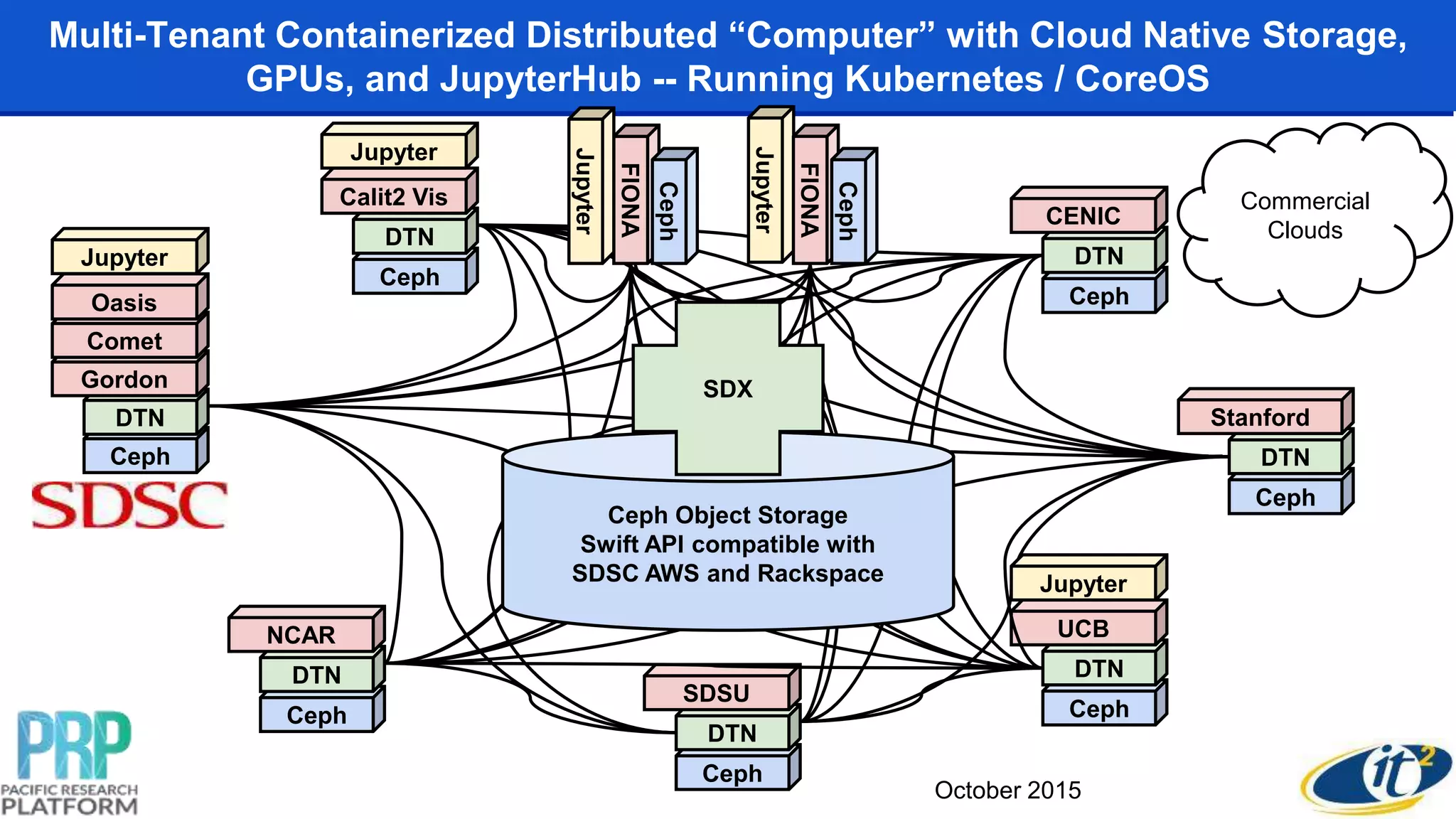

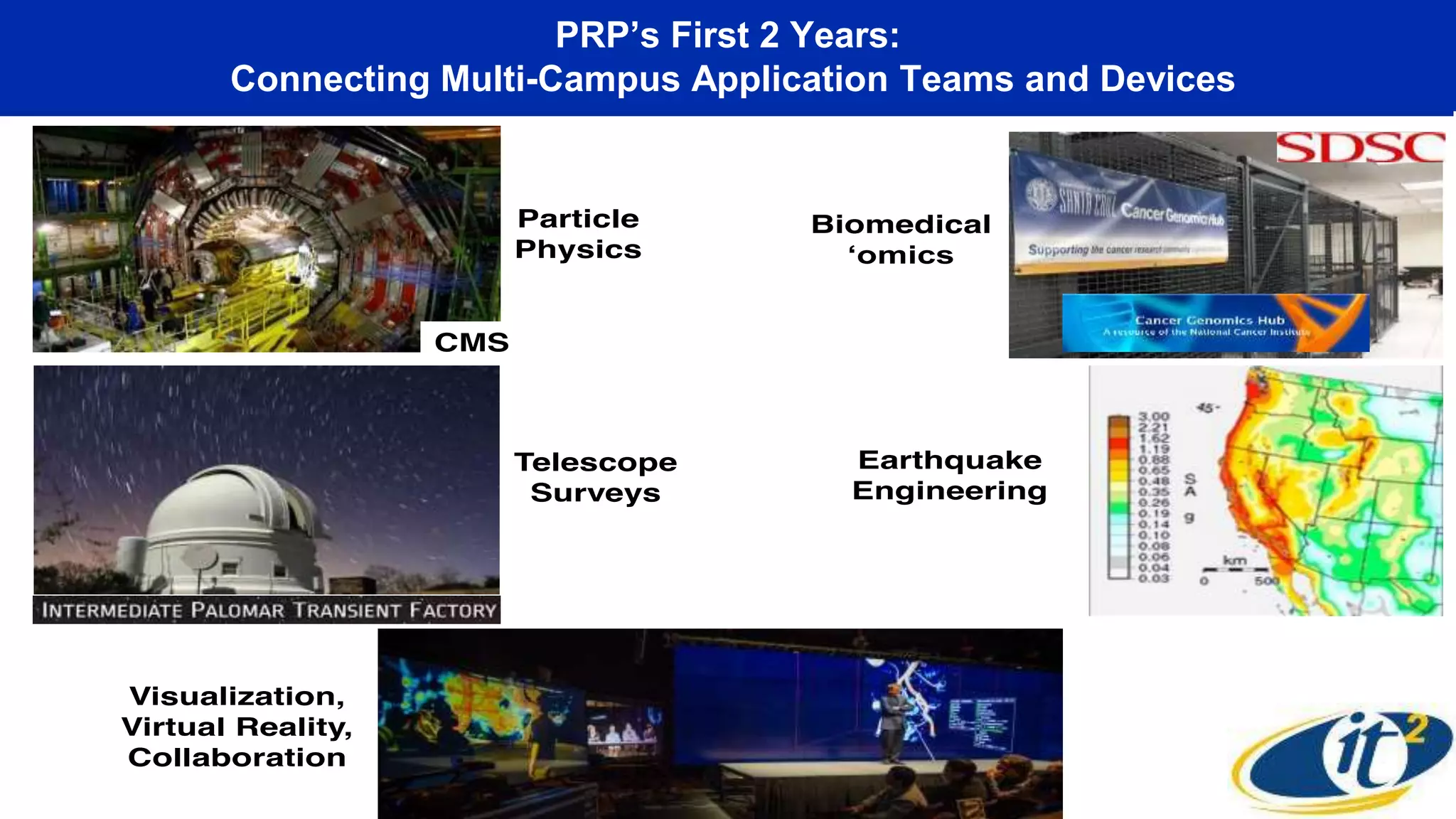

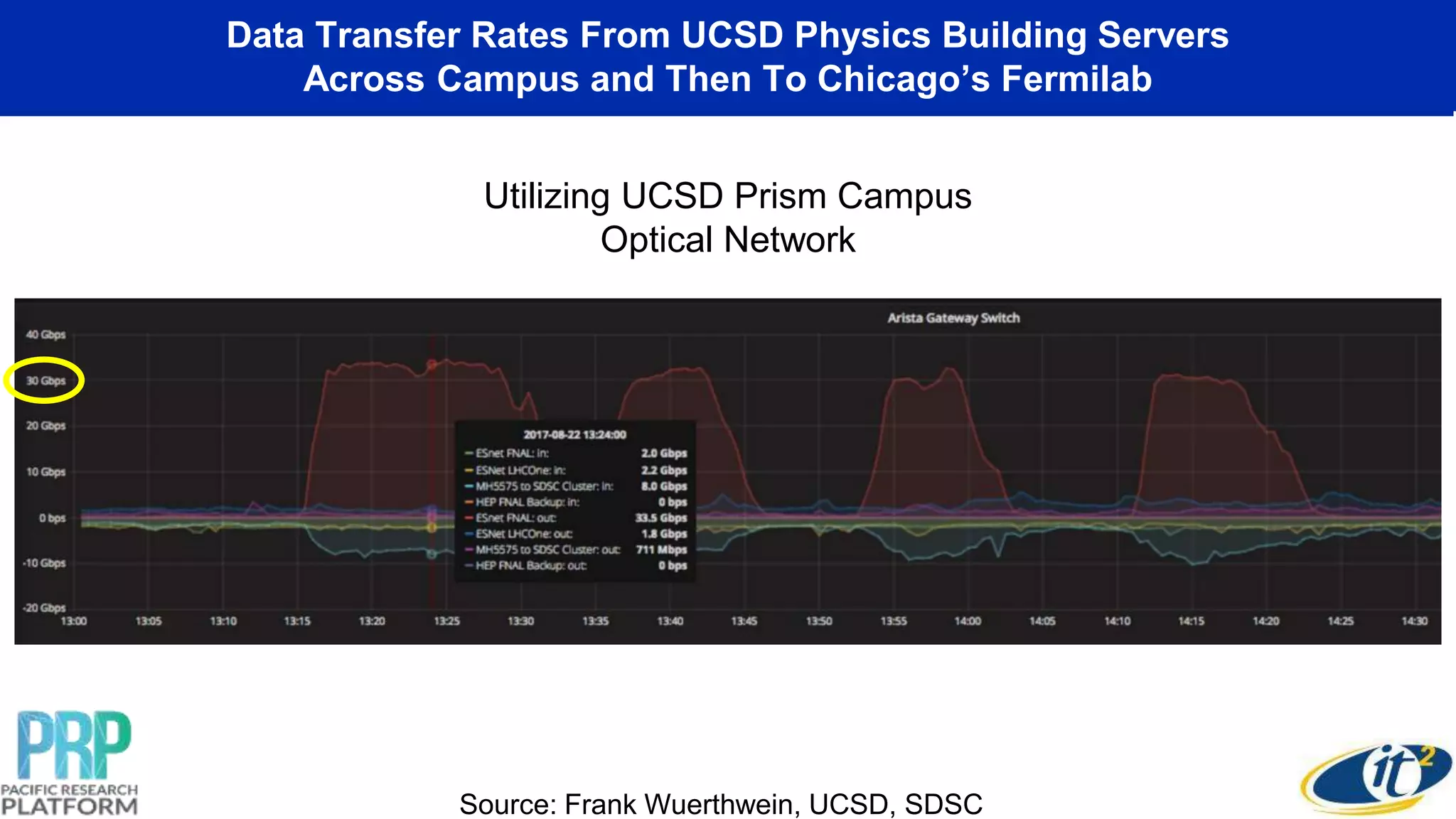

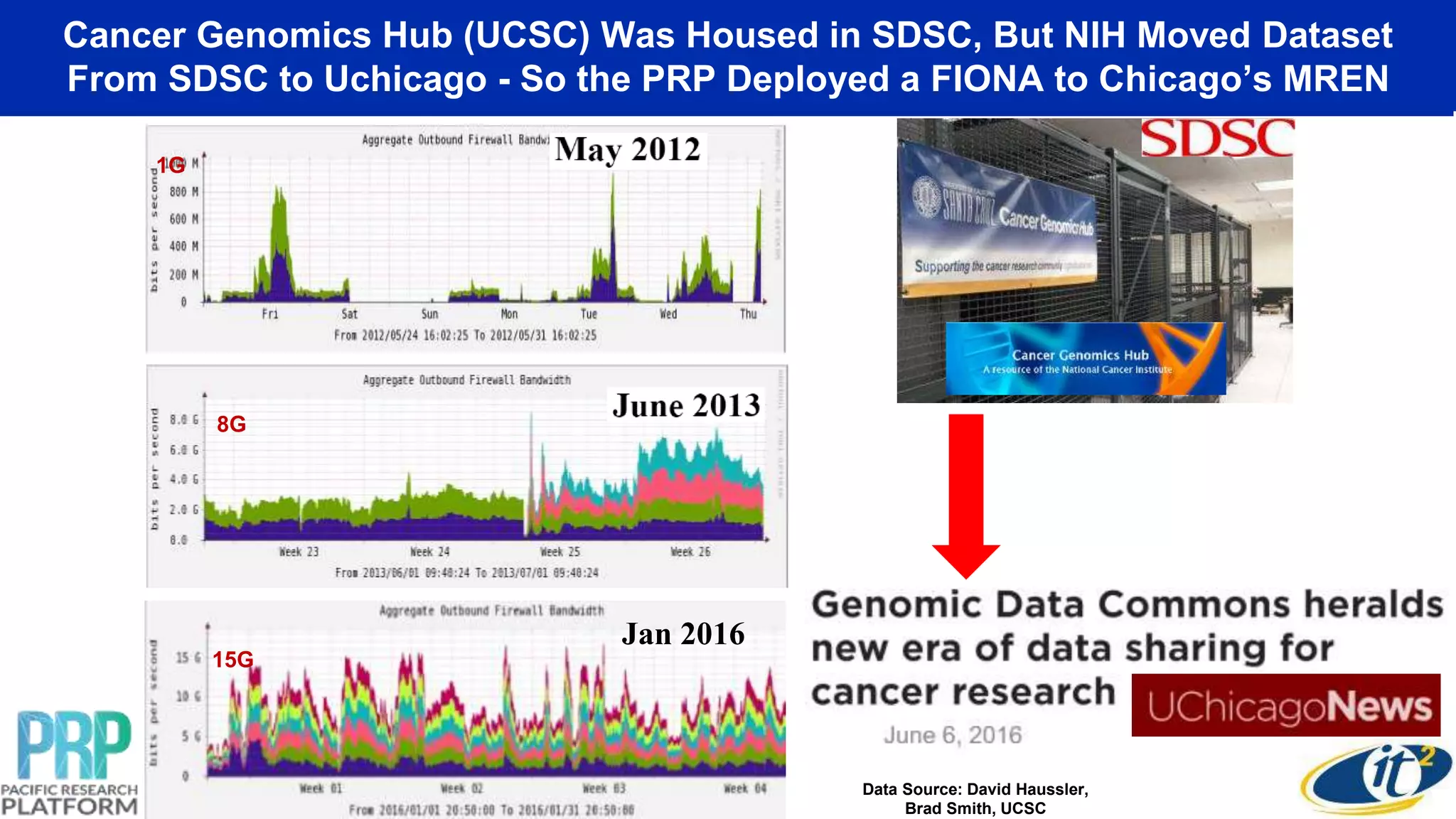

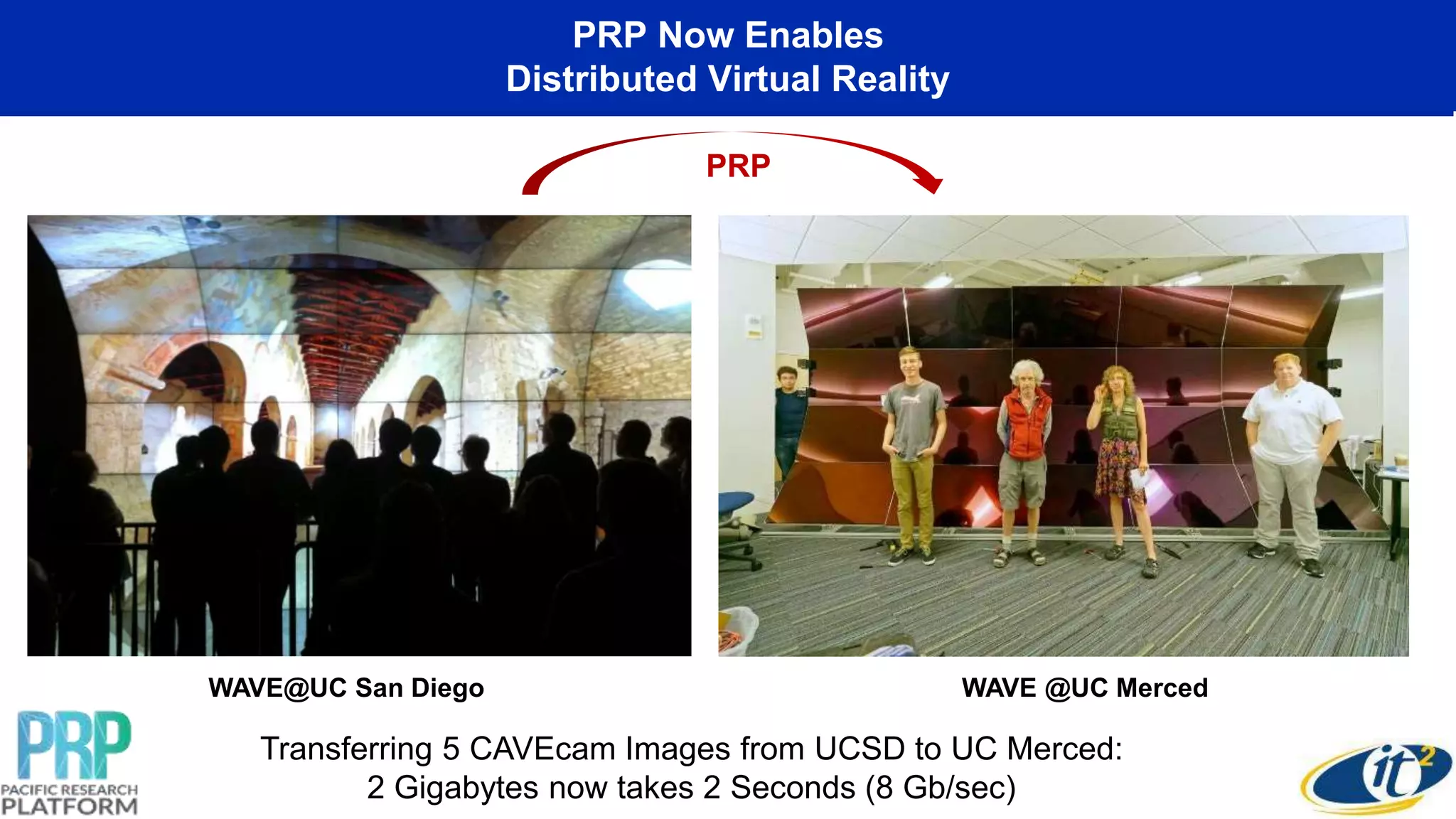

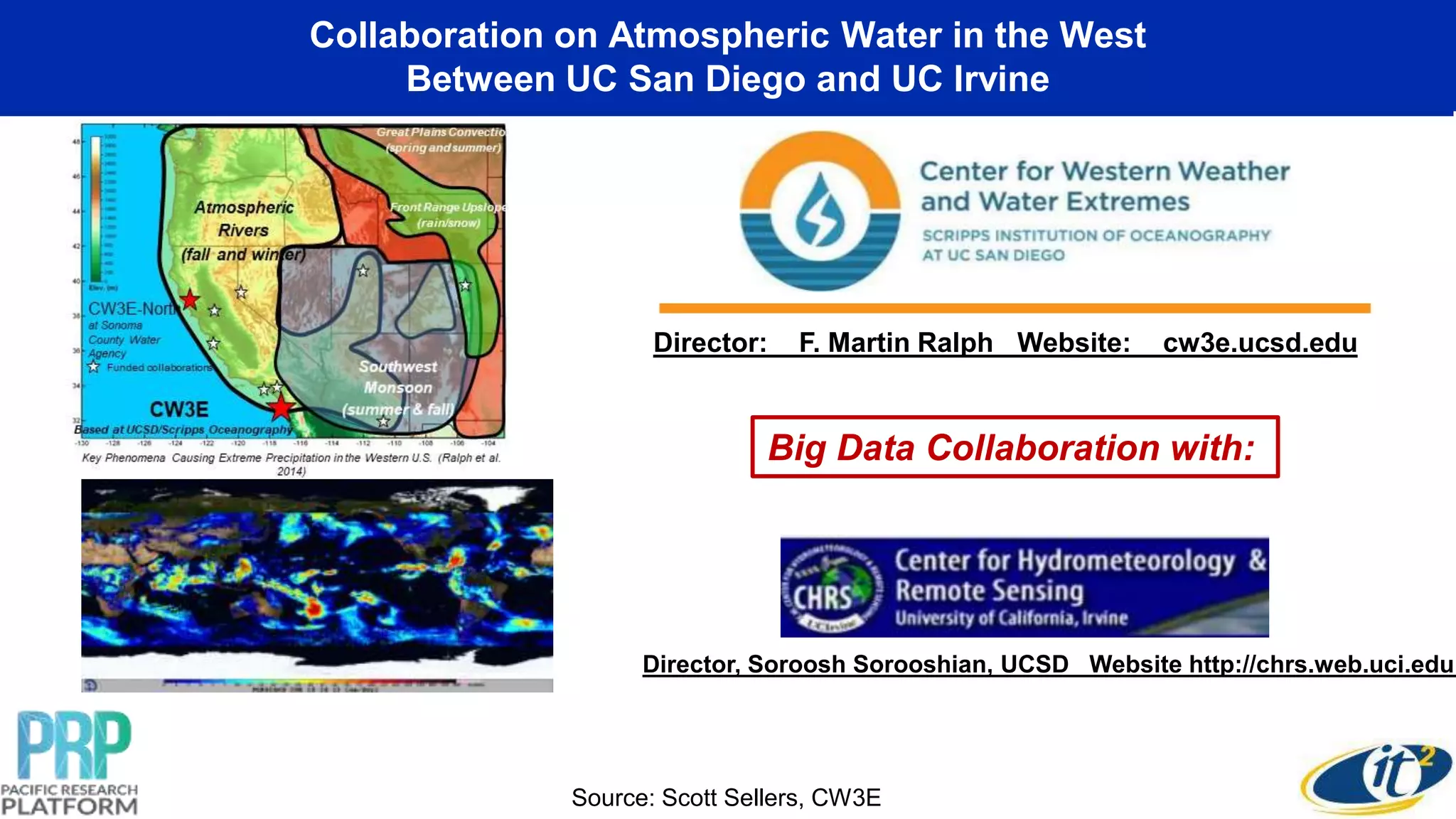

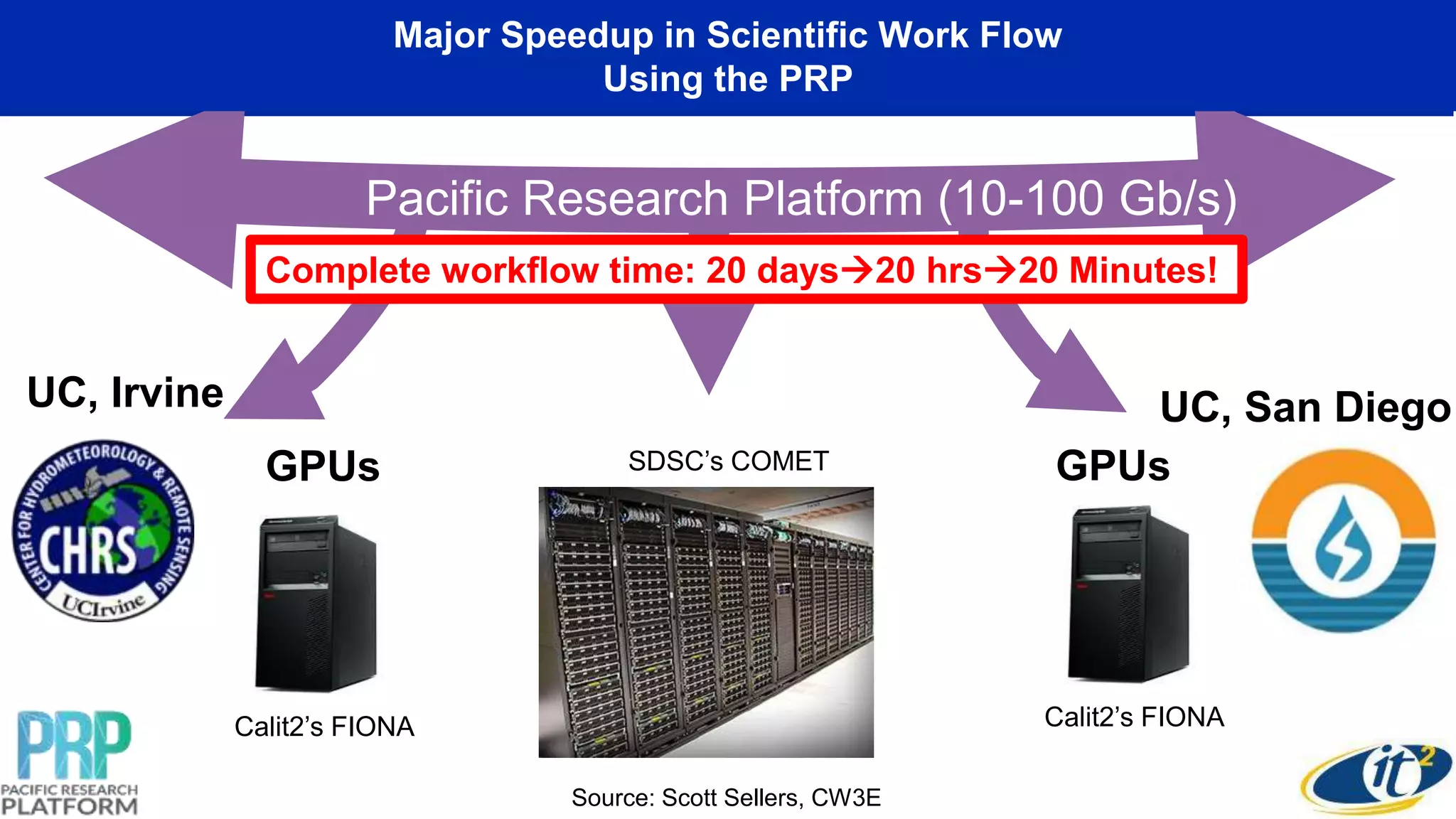

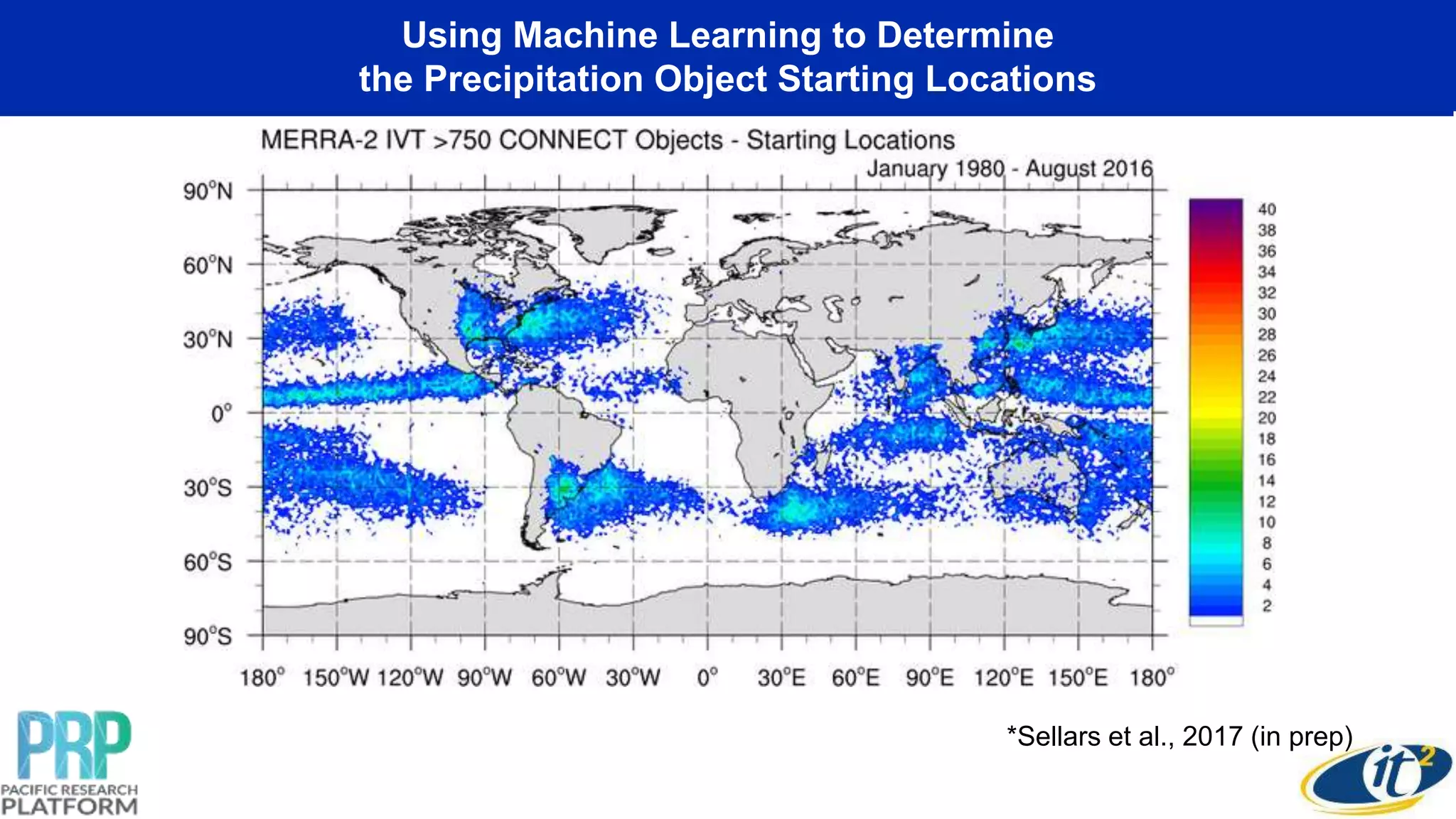

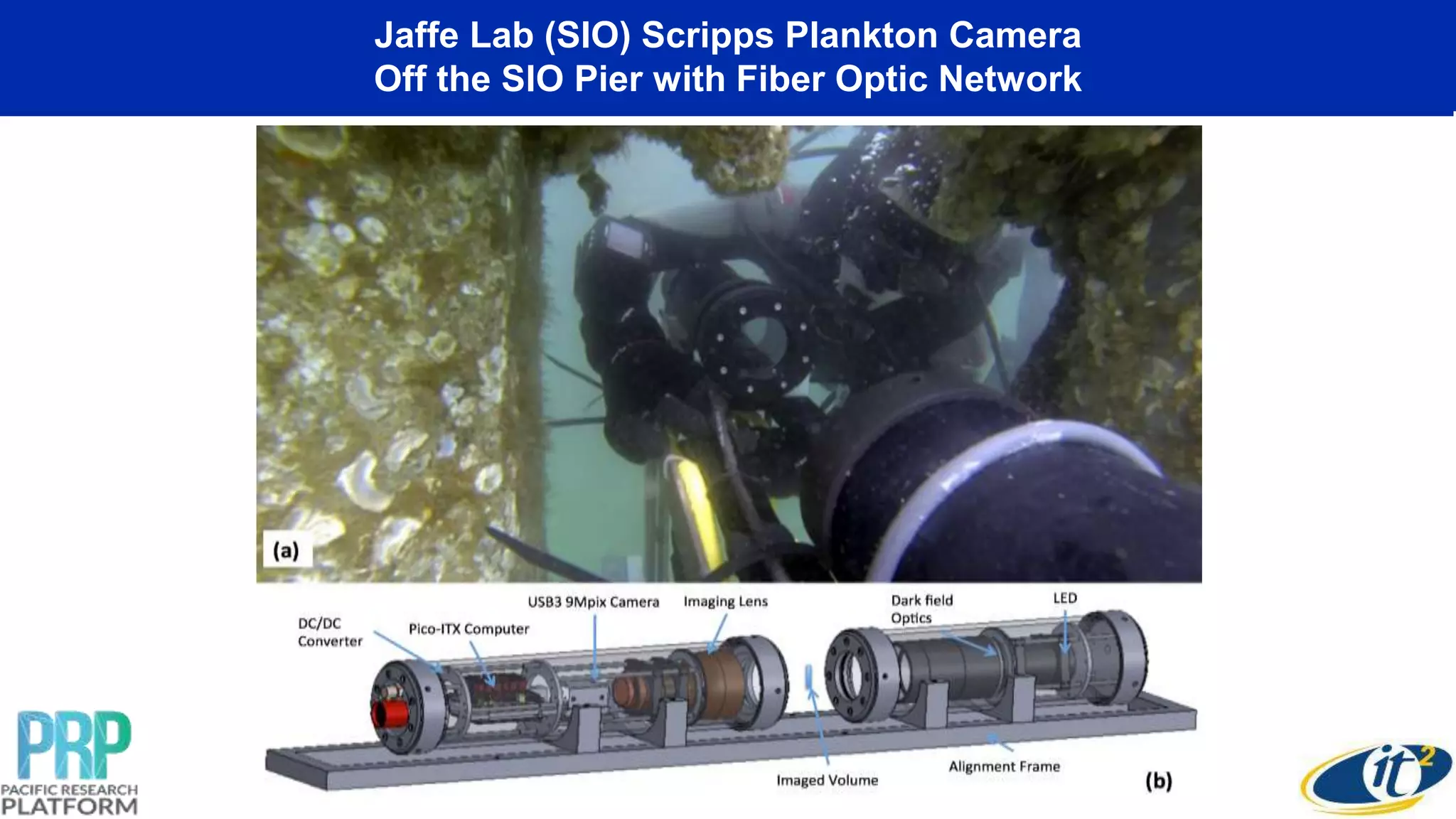

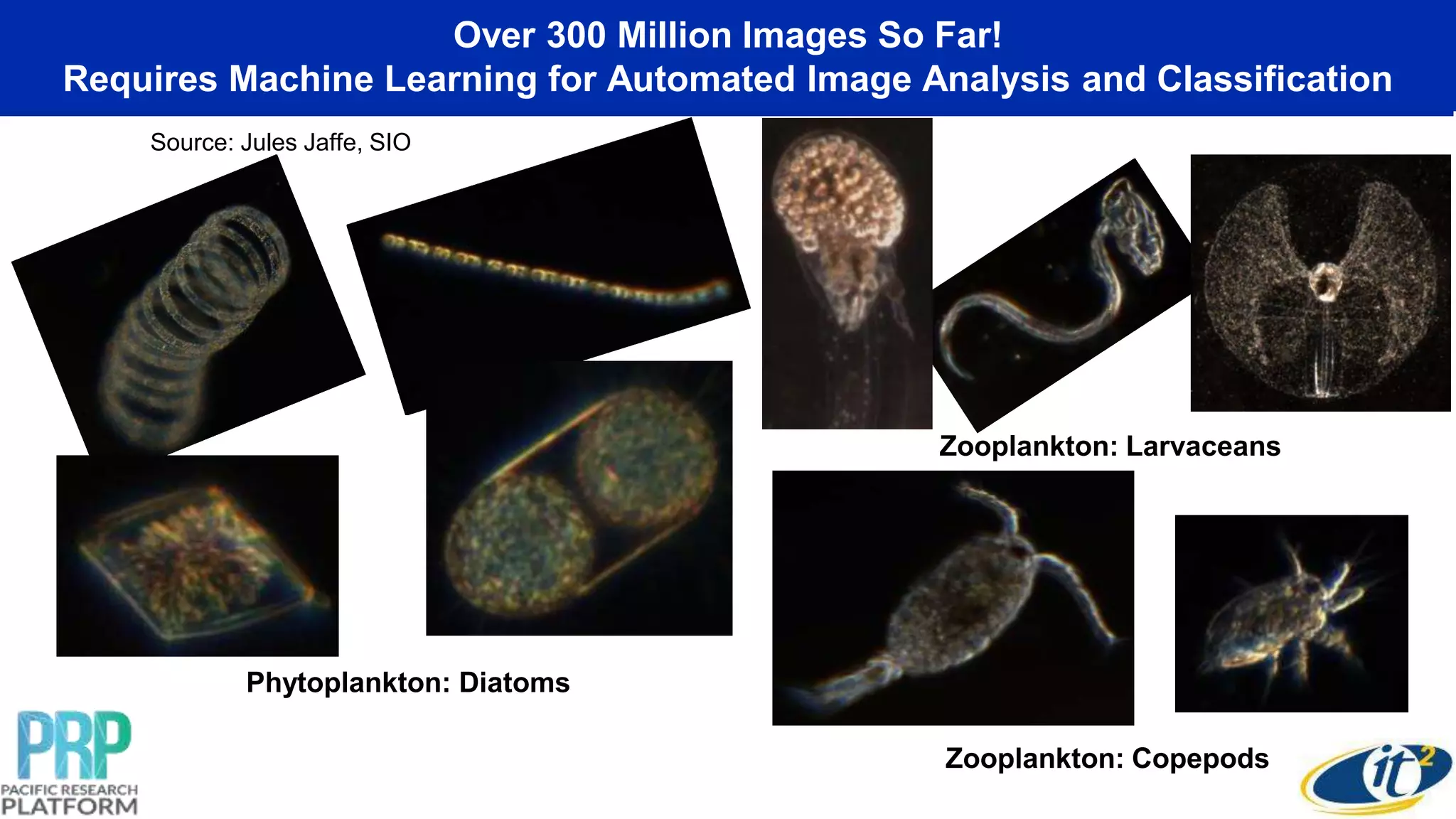

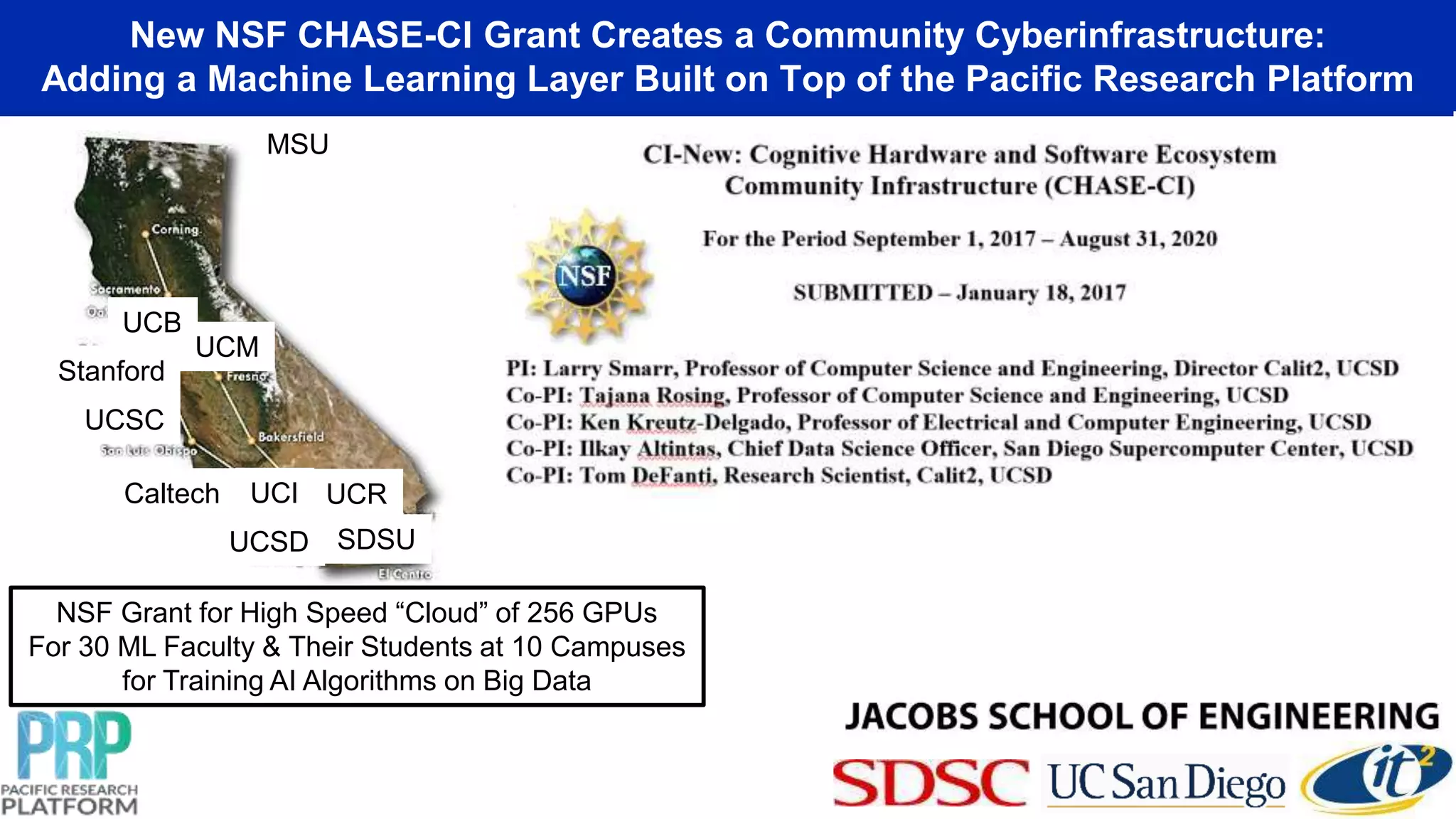

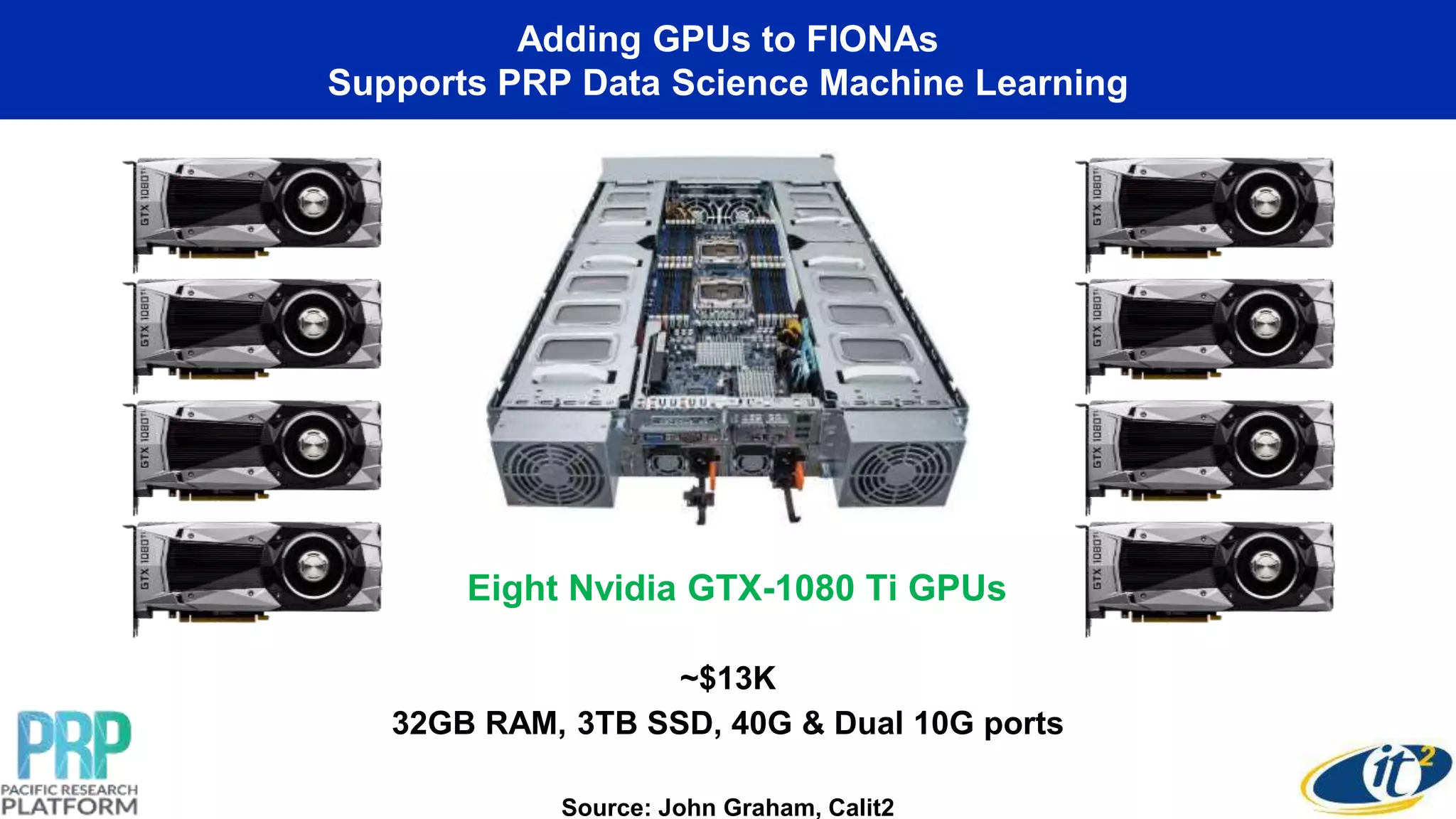

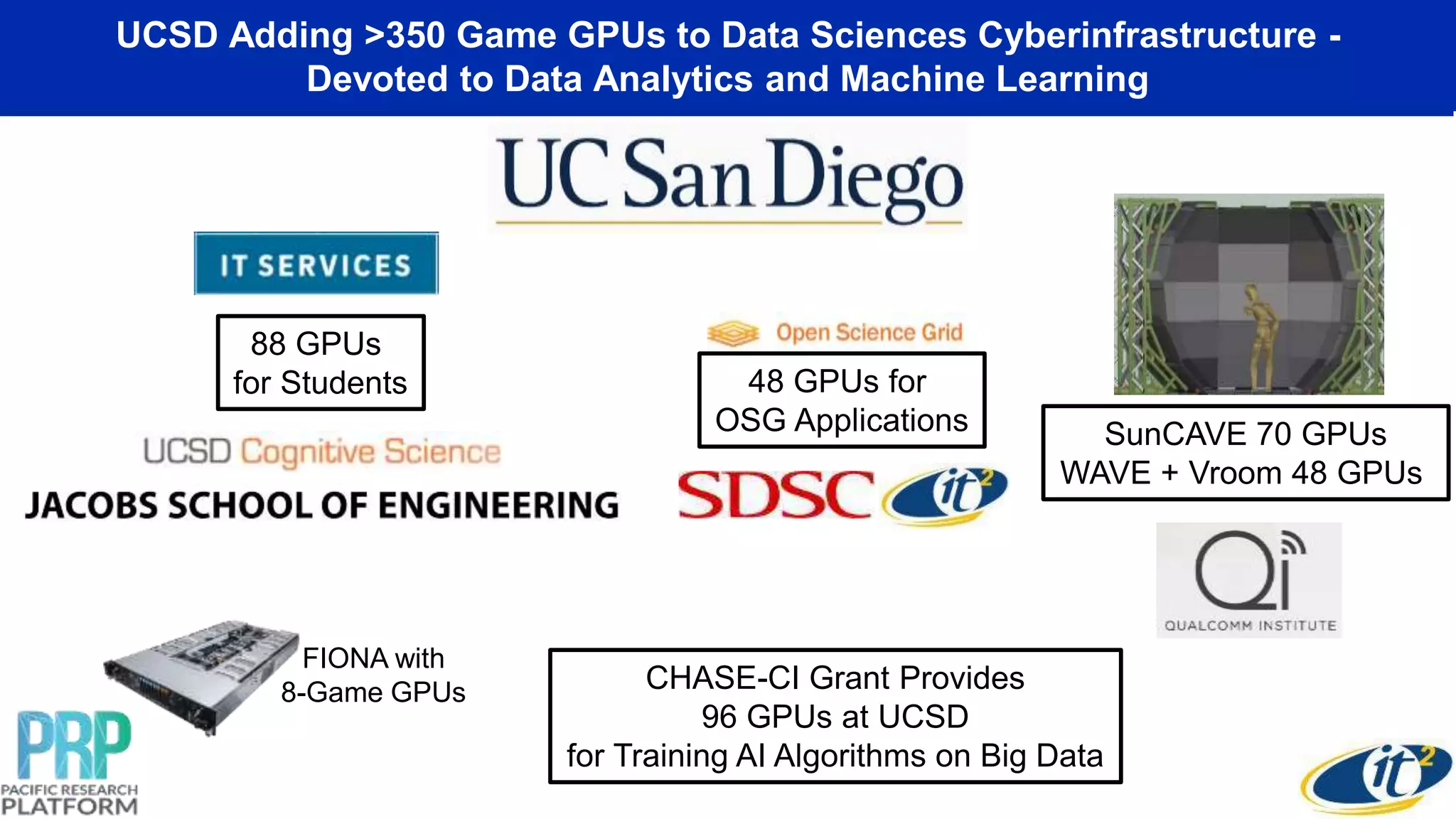

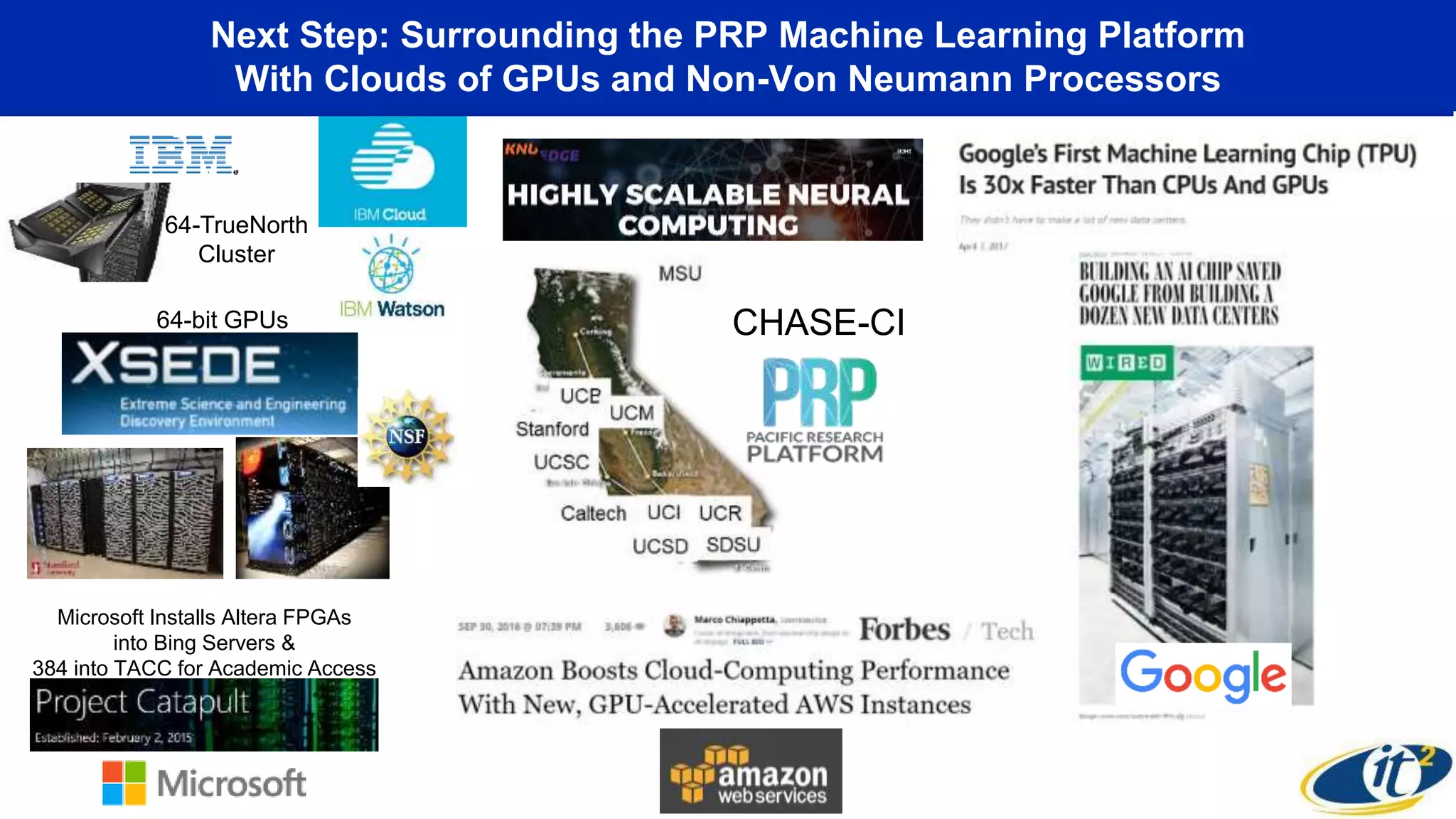

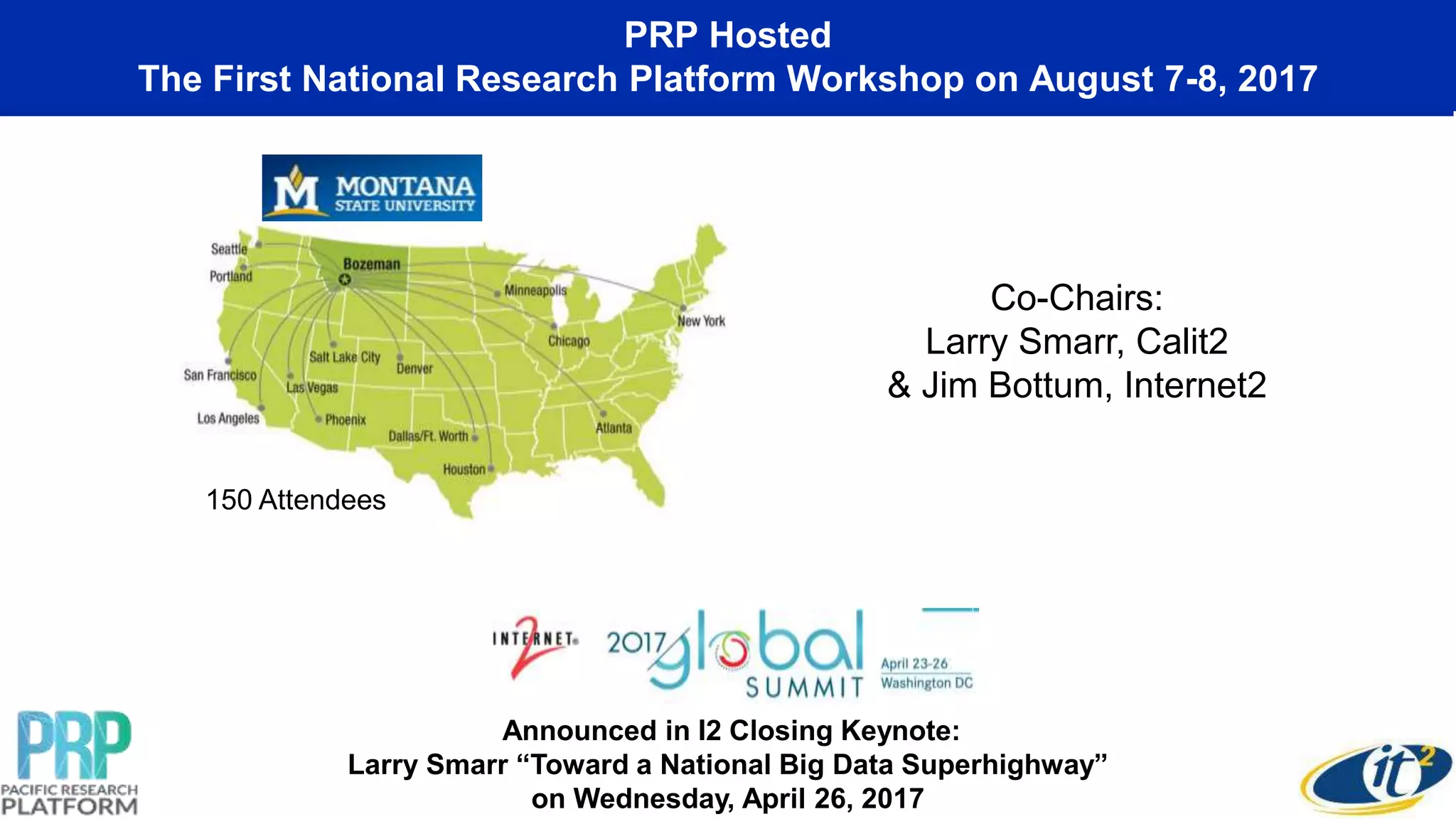

The keynote presentation discusses the exponential growth of big data and the resulting need for enhanced cyberinfrastructure. It highlights the Pacific Research Platform (PRP), funded by the National Science Foundation (NSF), which aims to connect research institutions over high-bandwidth networks. The presentation outlines the development of a community infrastructure that supports distributed big data analytics and machine learning. It also addresses existing challenges, such as the limited network capabilities of individual campuses. Throughout, it emphasizes the vision of a national and global network for big data research, advanced through collaborations and technological innovations.