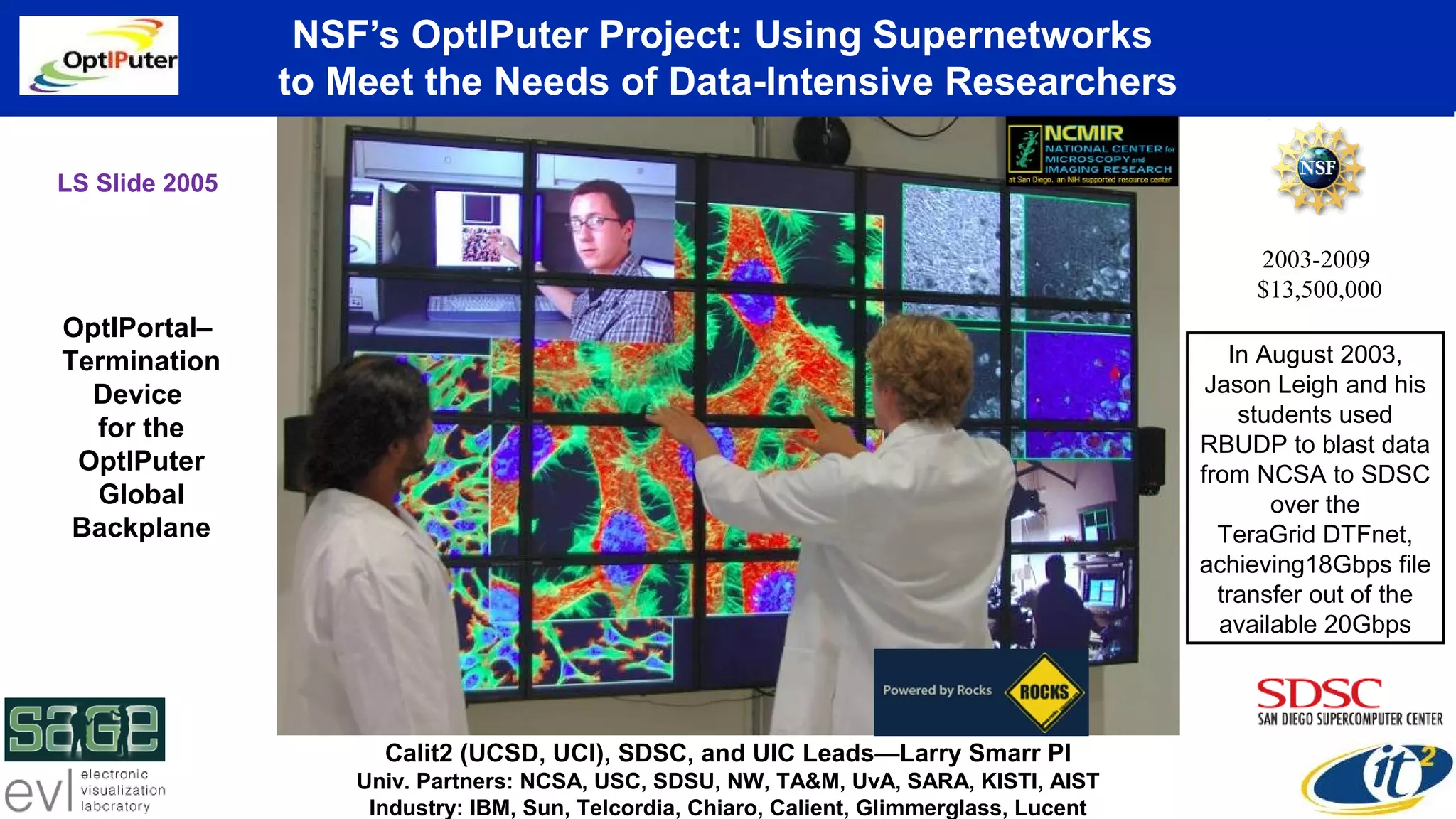

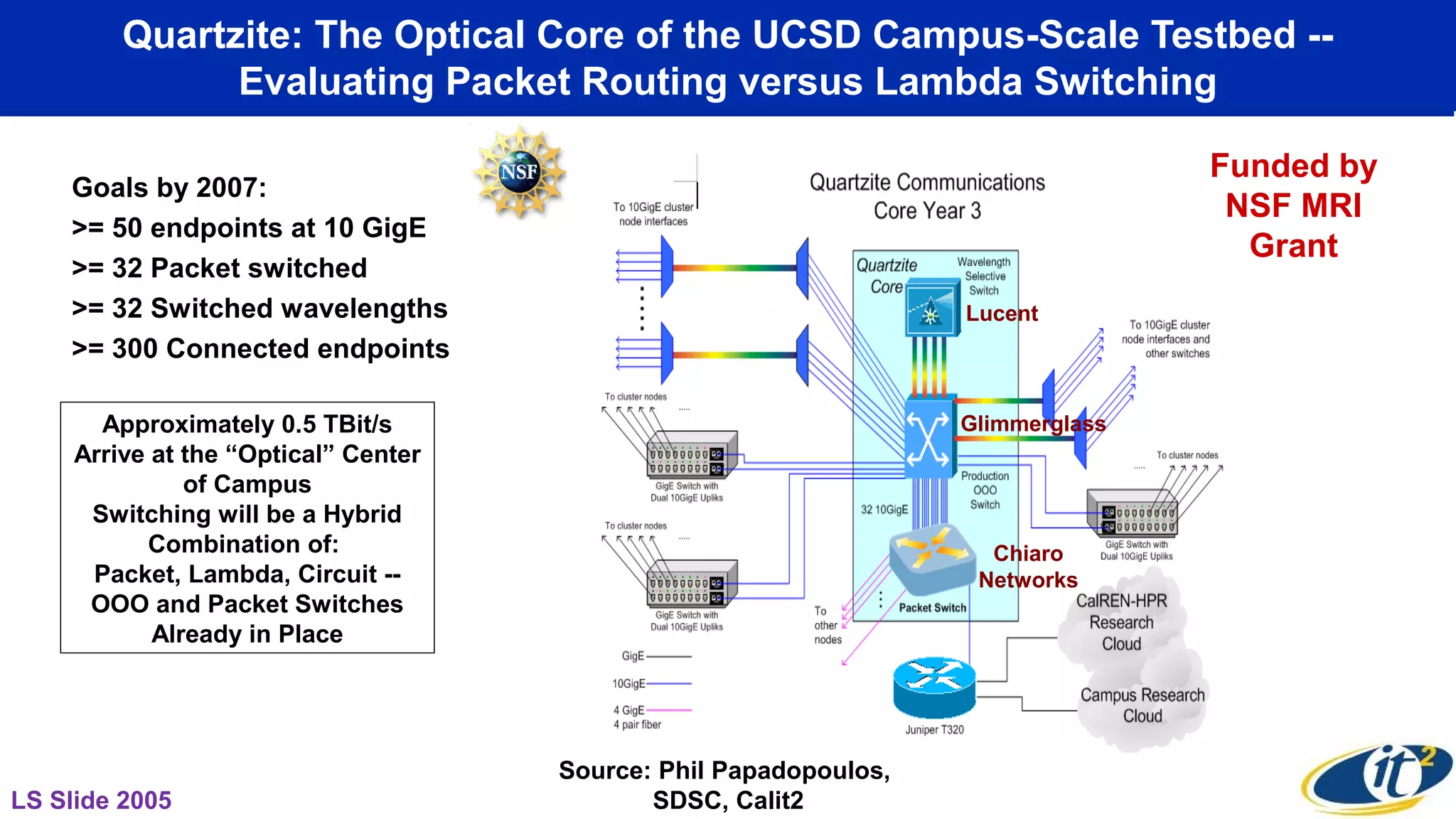

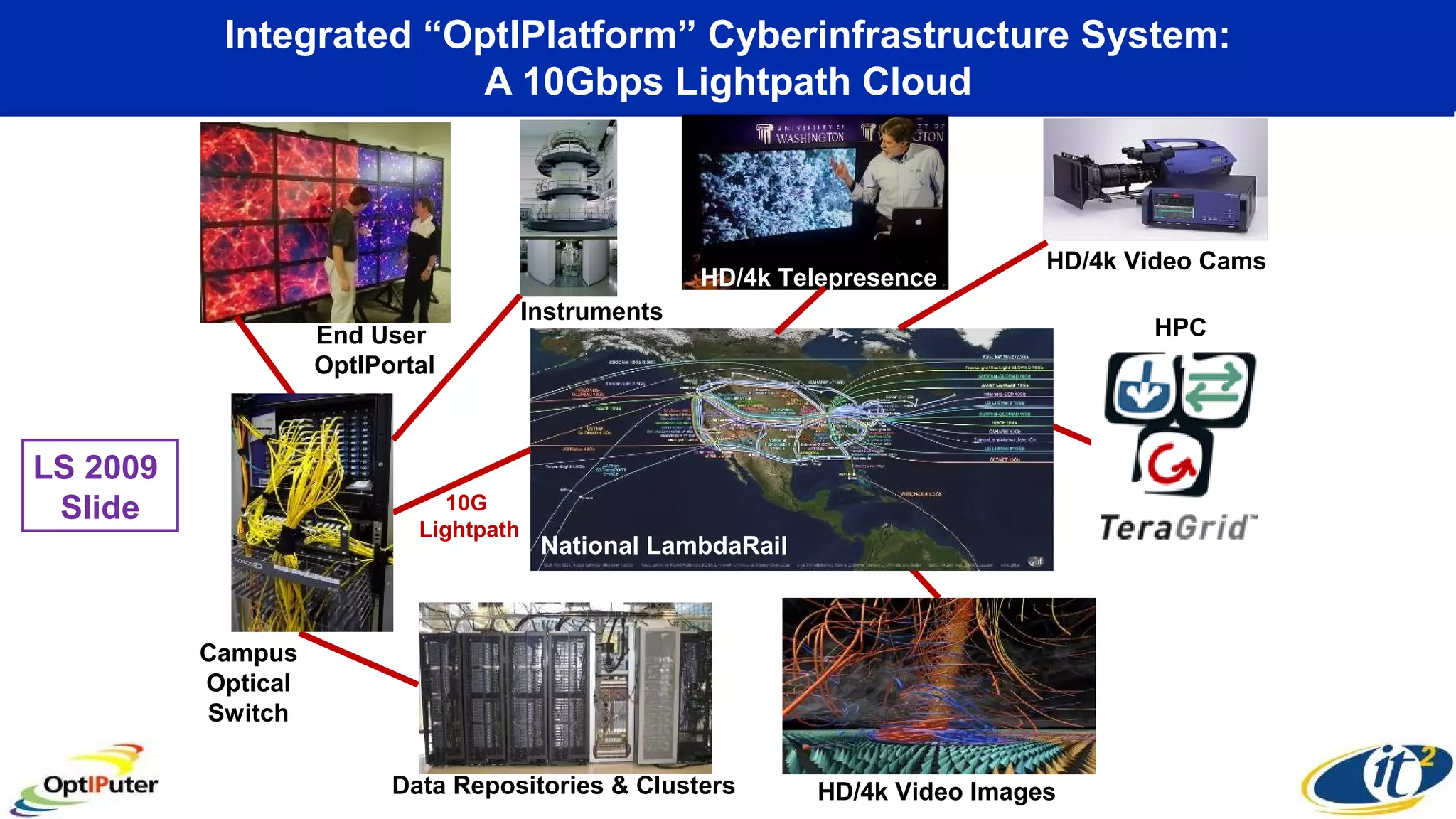

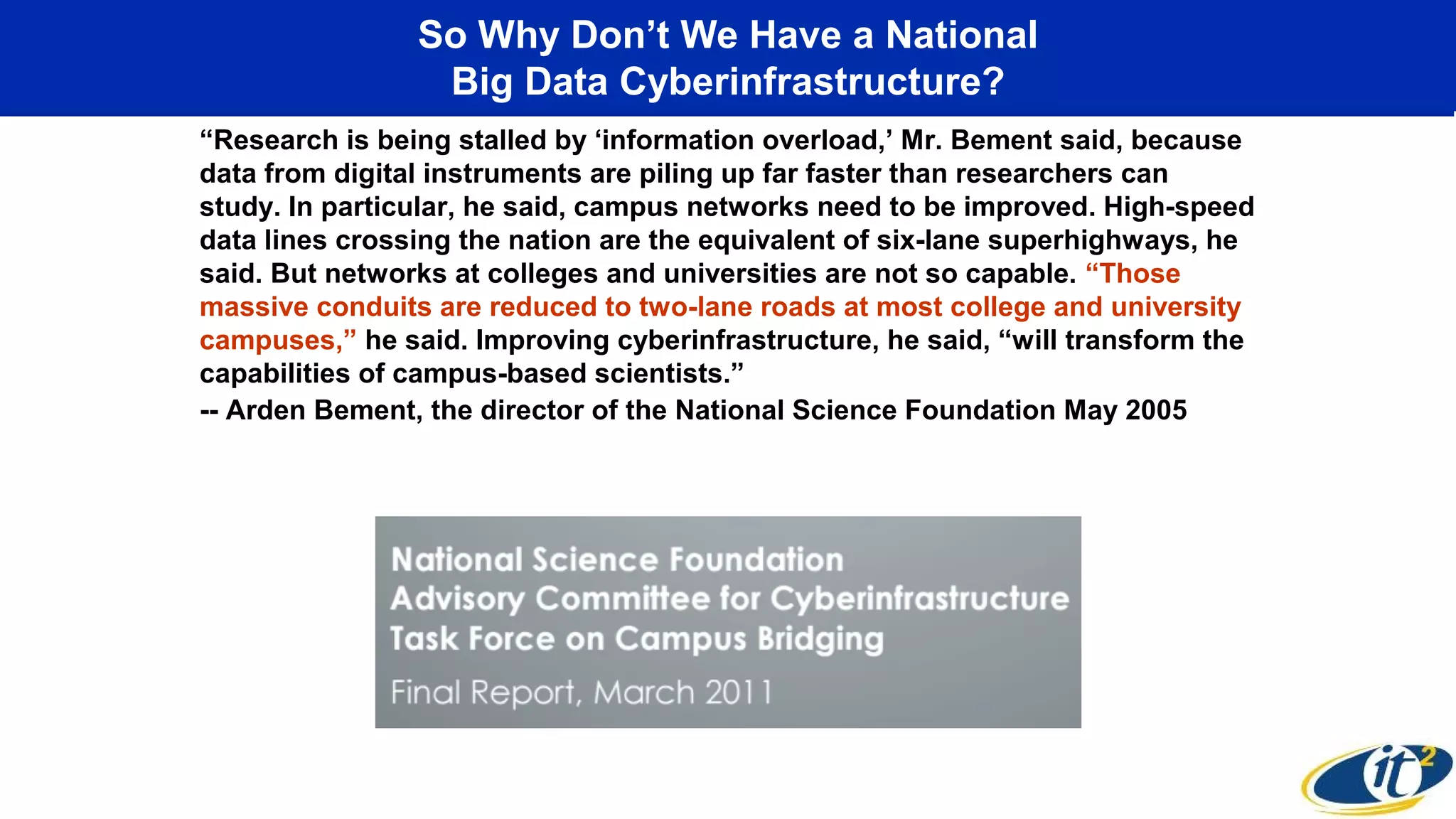

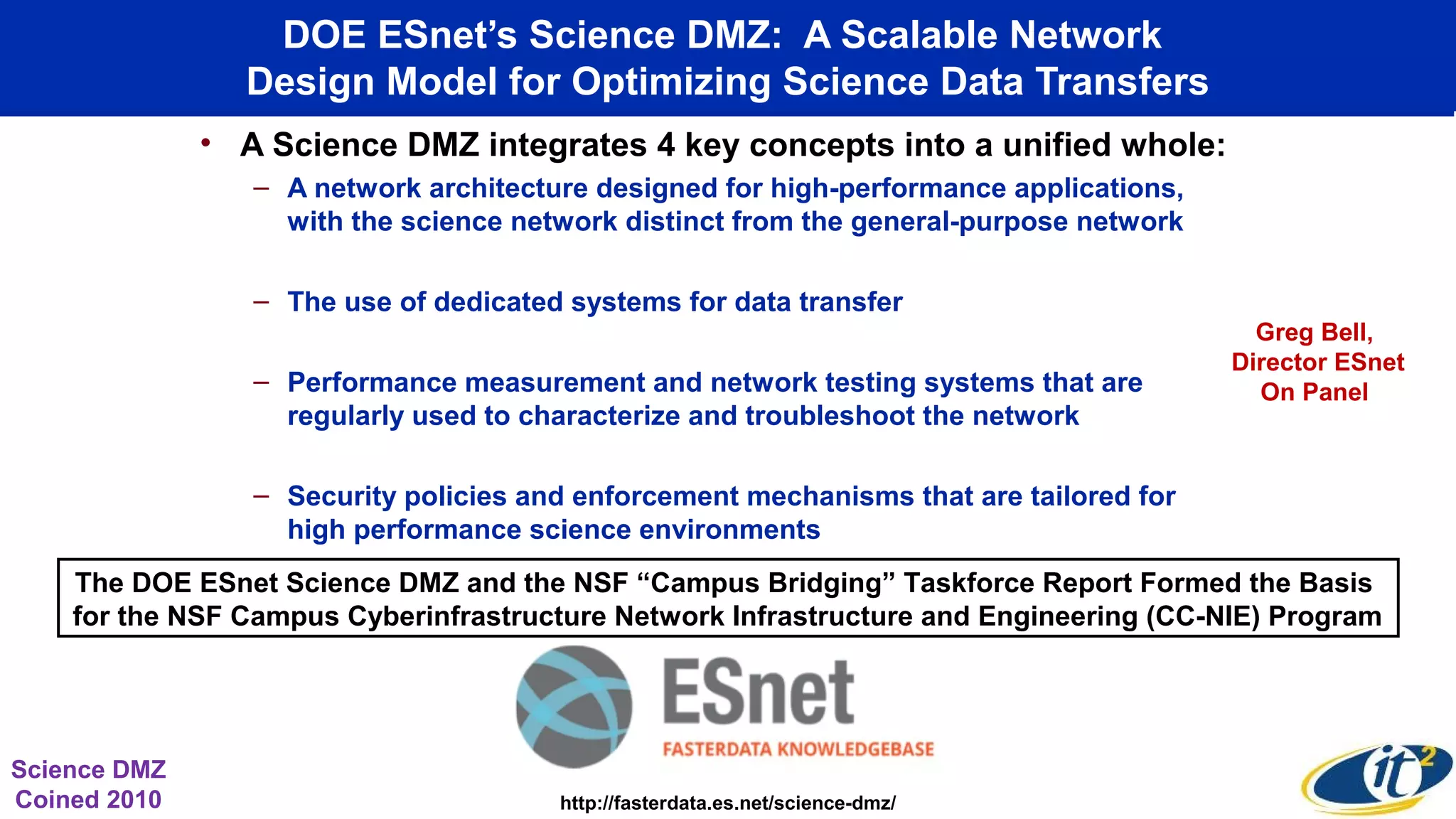

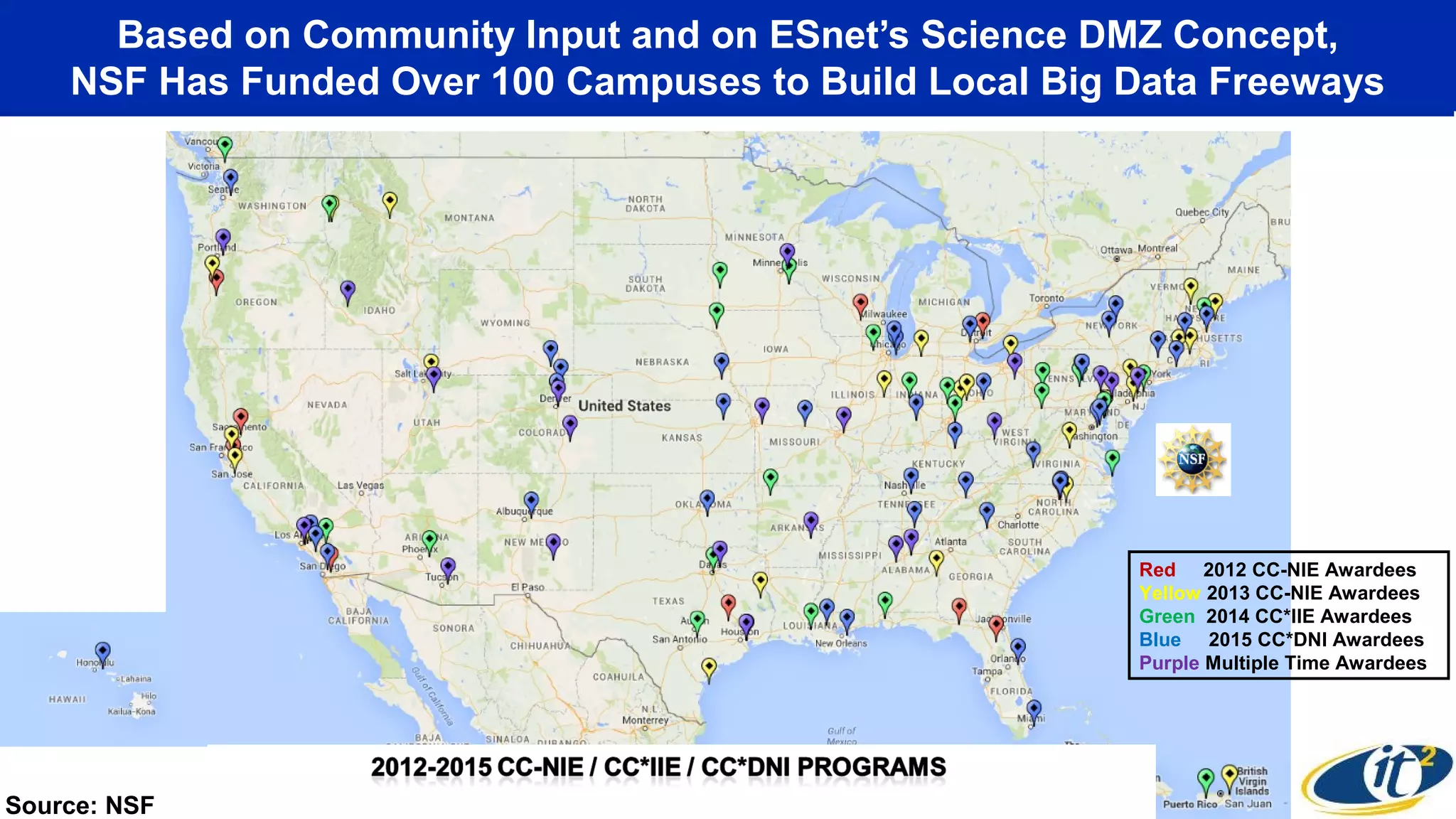

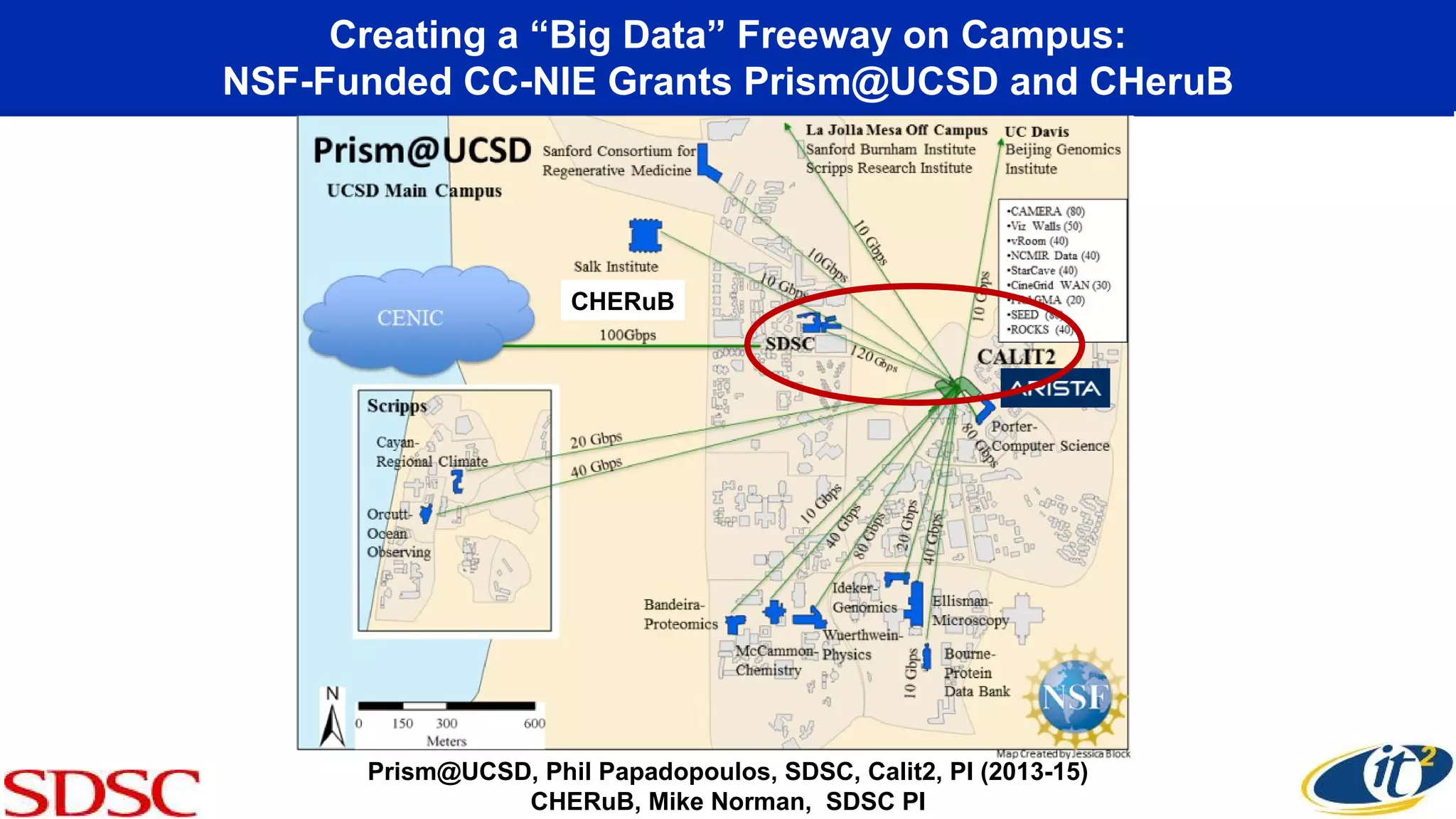

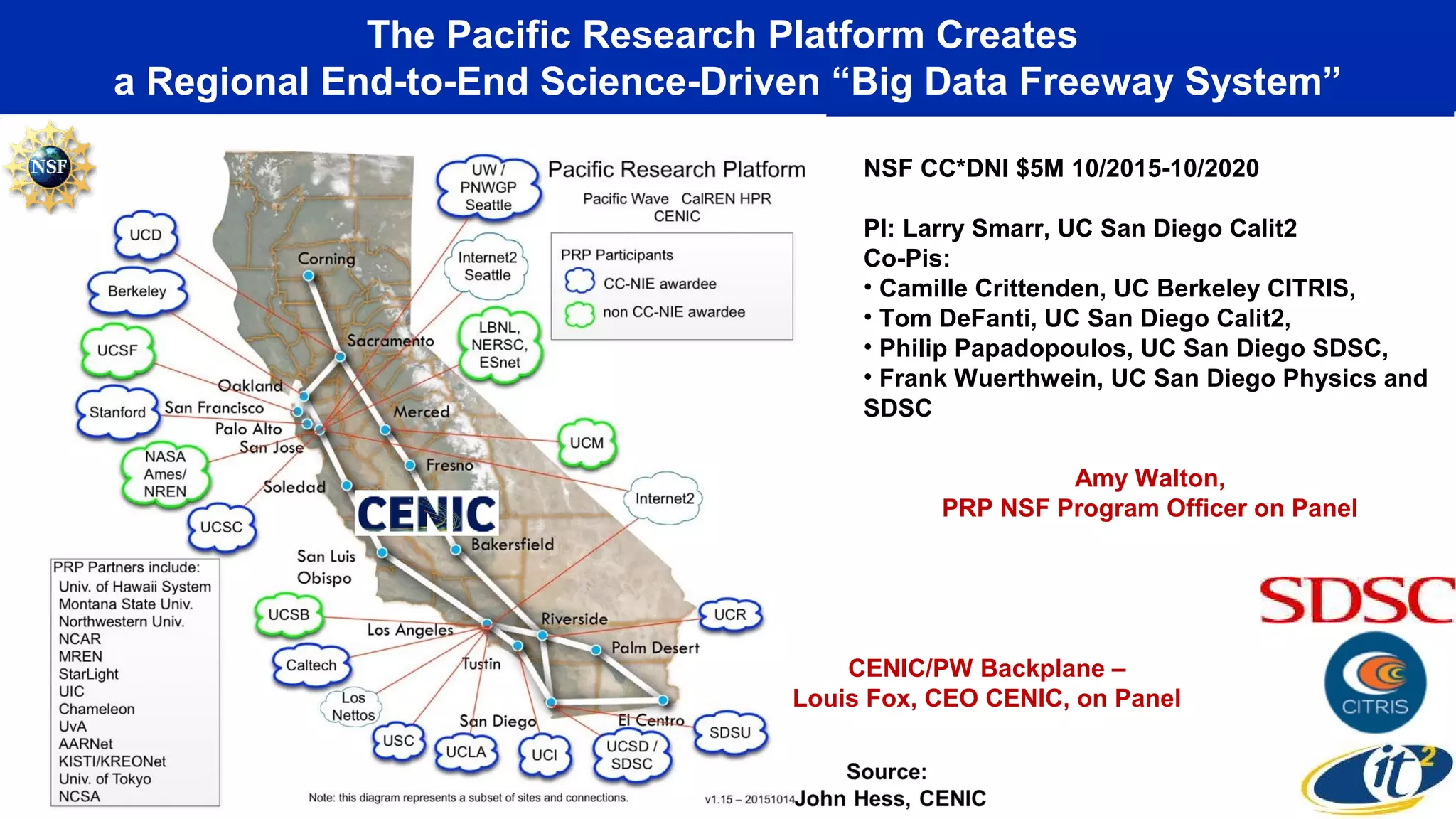

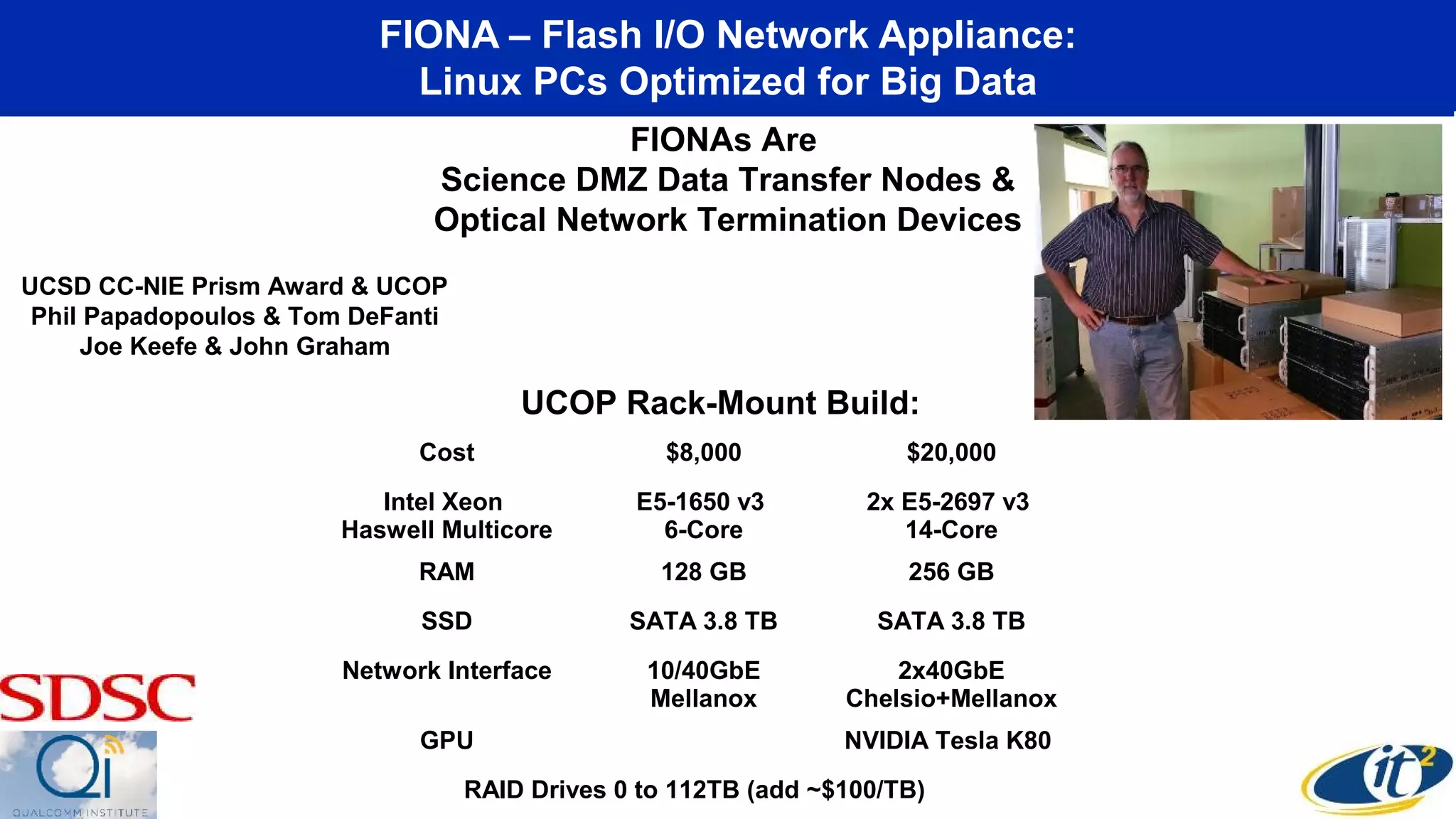

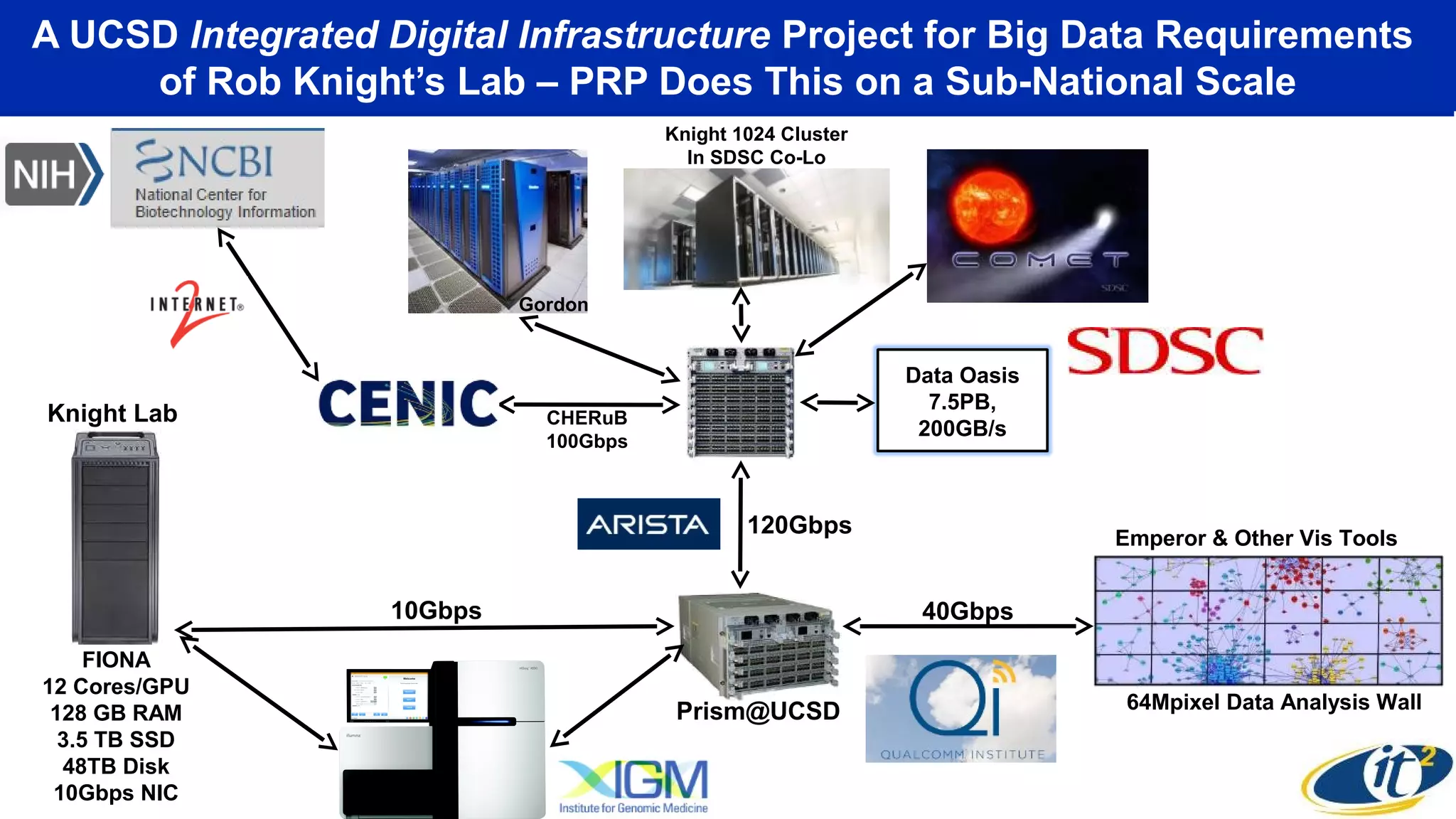

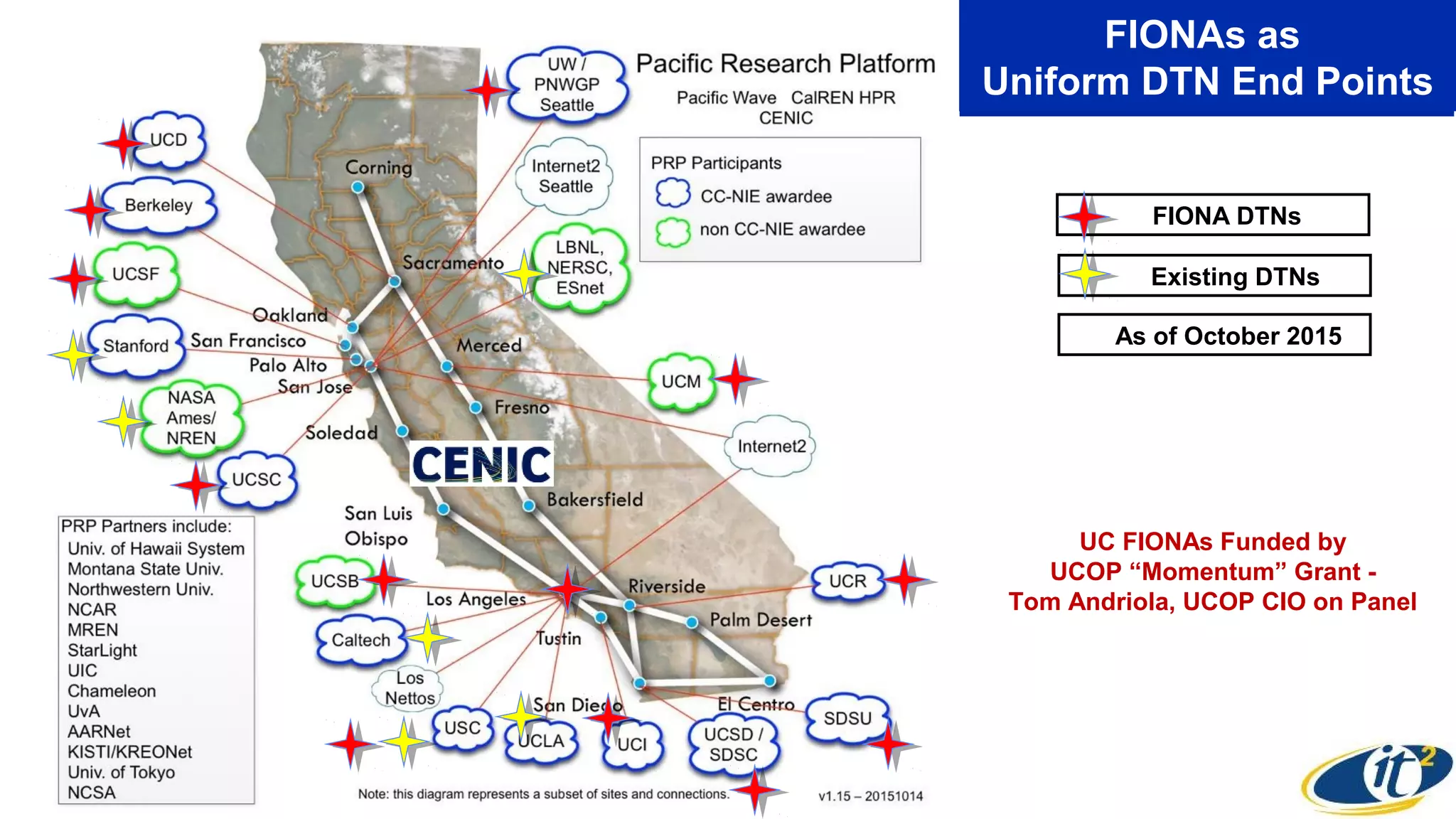

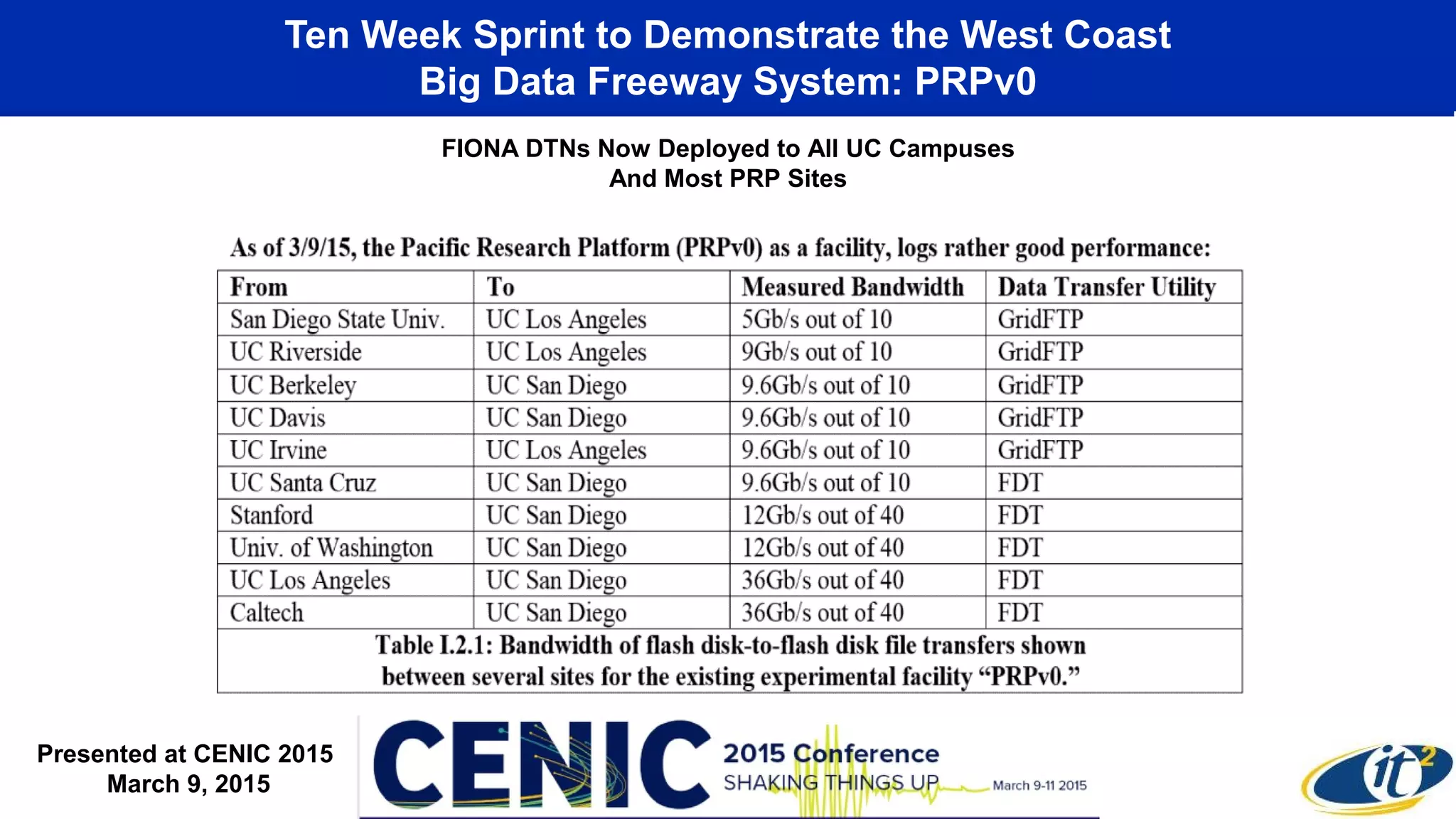

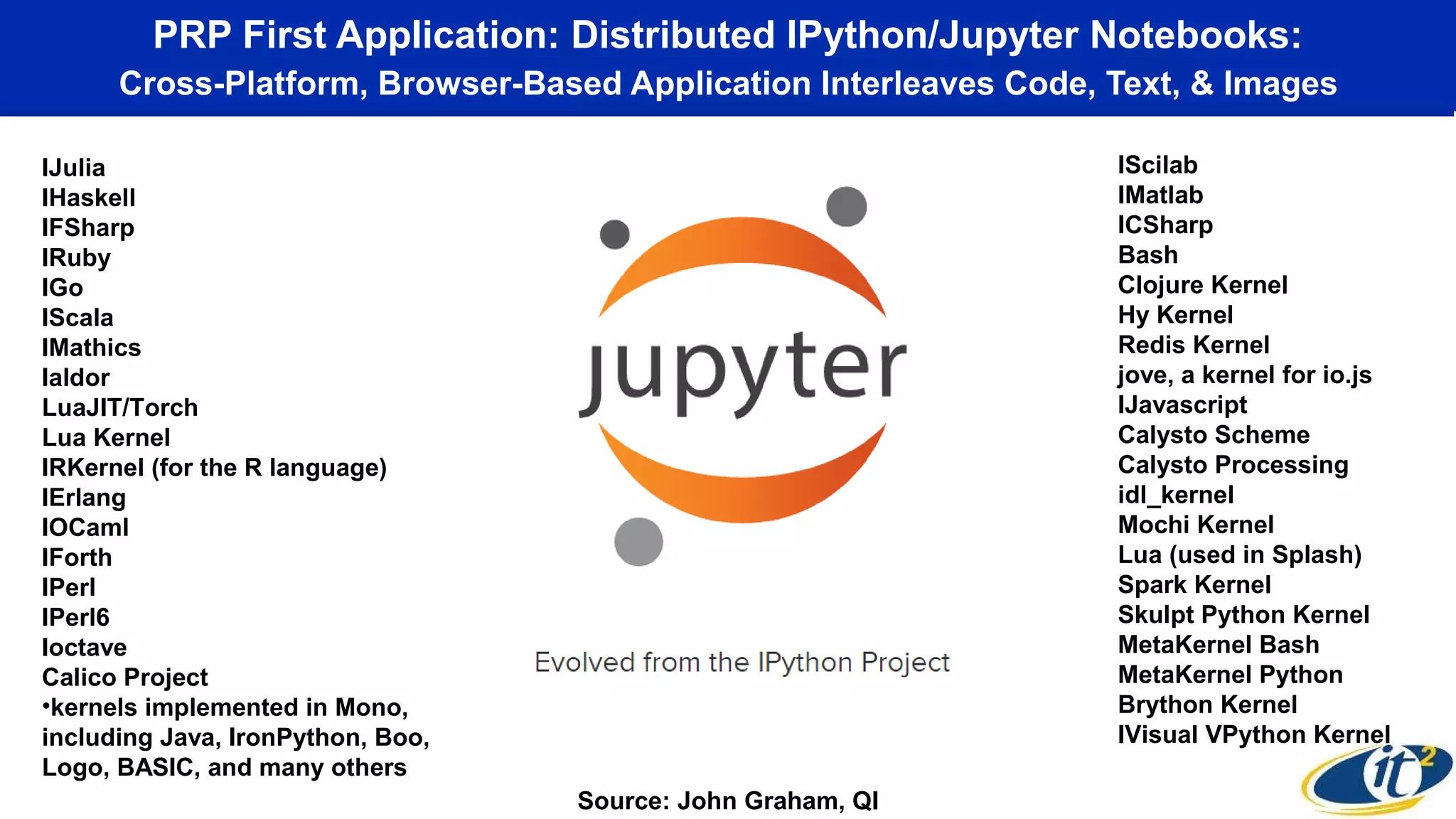

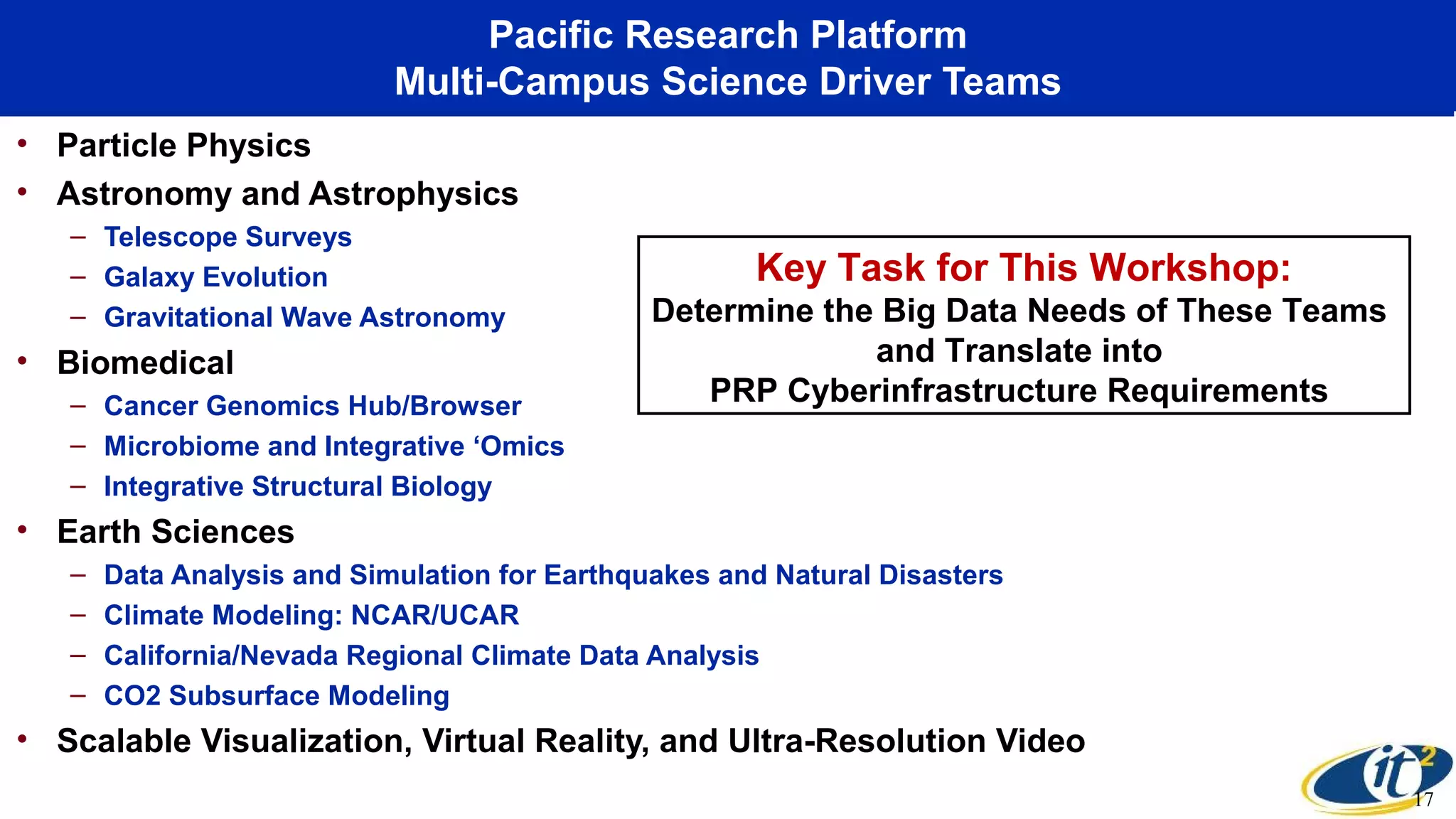

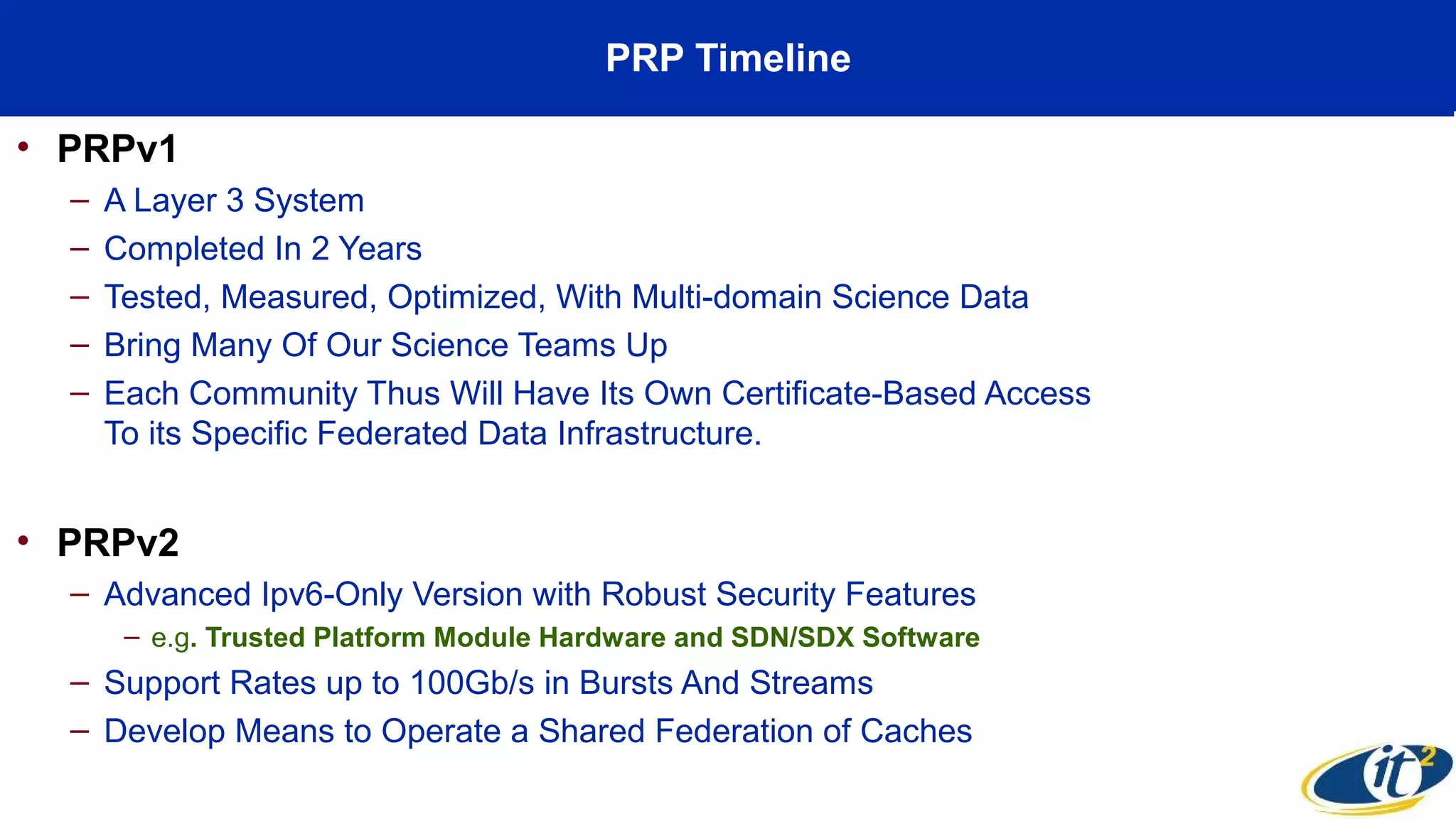

The Pacific Research Platform aims to build a "big data freeway" along the West Coast, connecting research campuses through advanced networking technologies to support data-intensive research. Funded by the NSF, the platform is being developed in collaboration with multiple universities and industry partners to strengthen cyberinfrastructure and help researchers cope with growing volumes of data. Key initiatives include deploying optimized digital infrastructure and creating scalable solutions for high-performance data transfer across scientific disciplines.