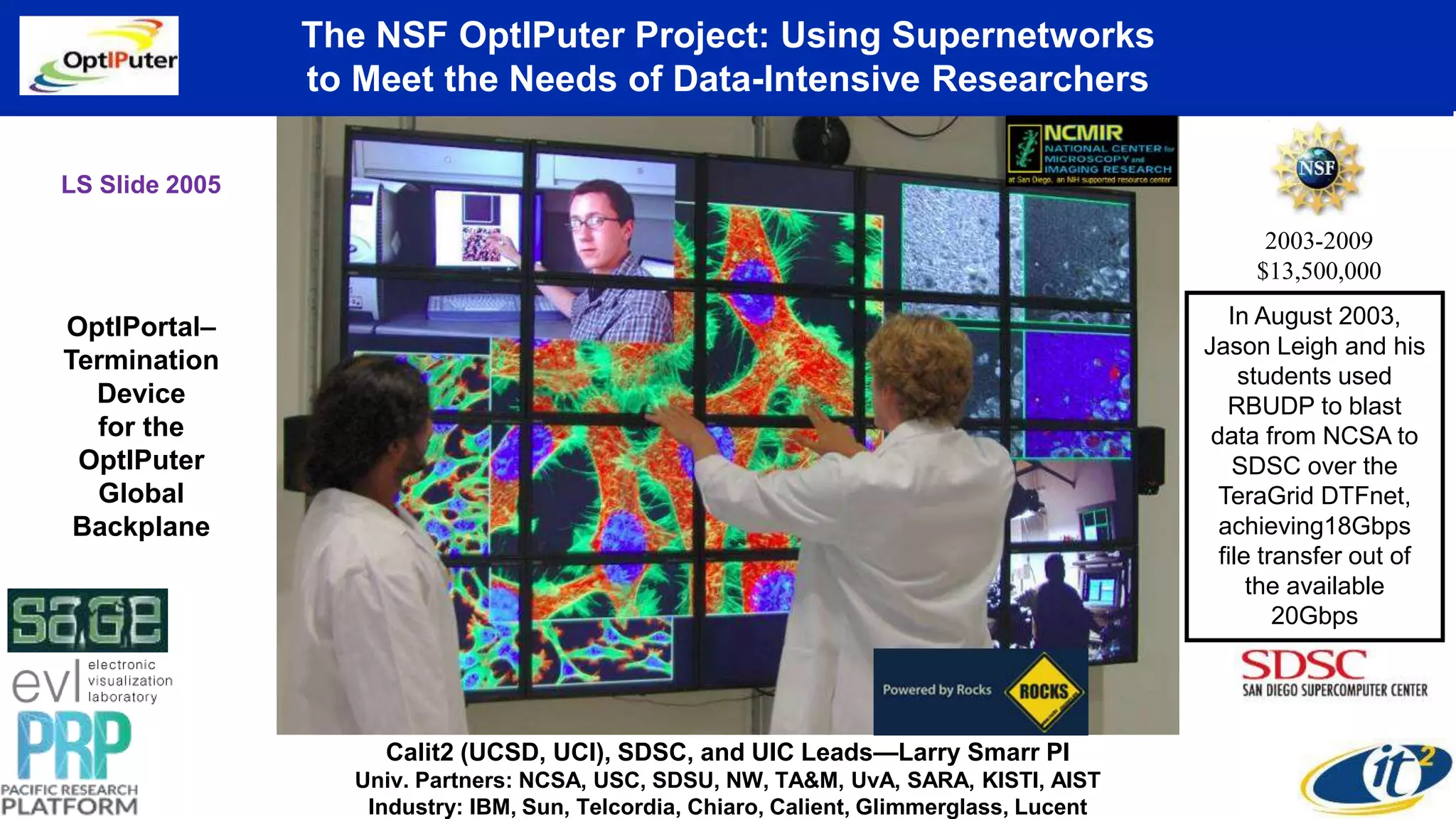

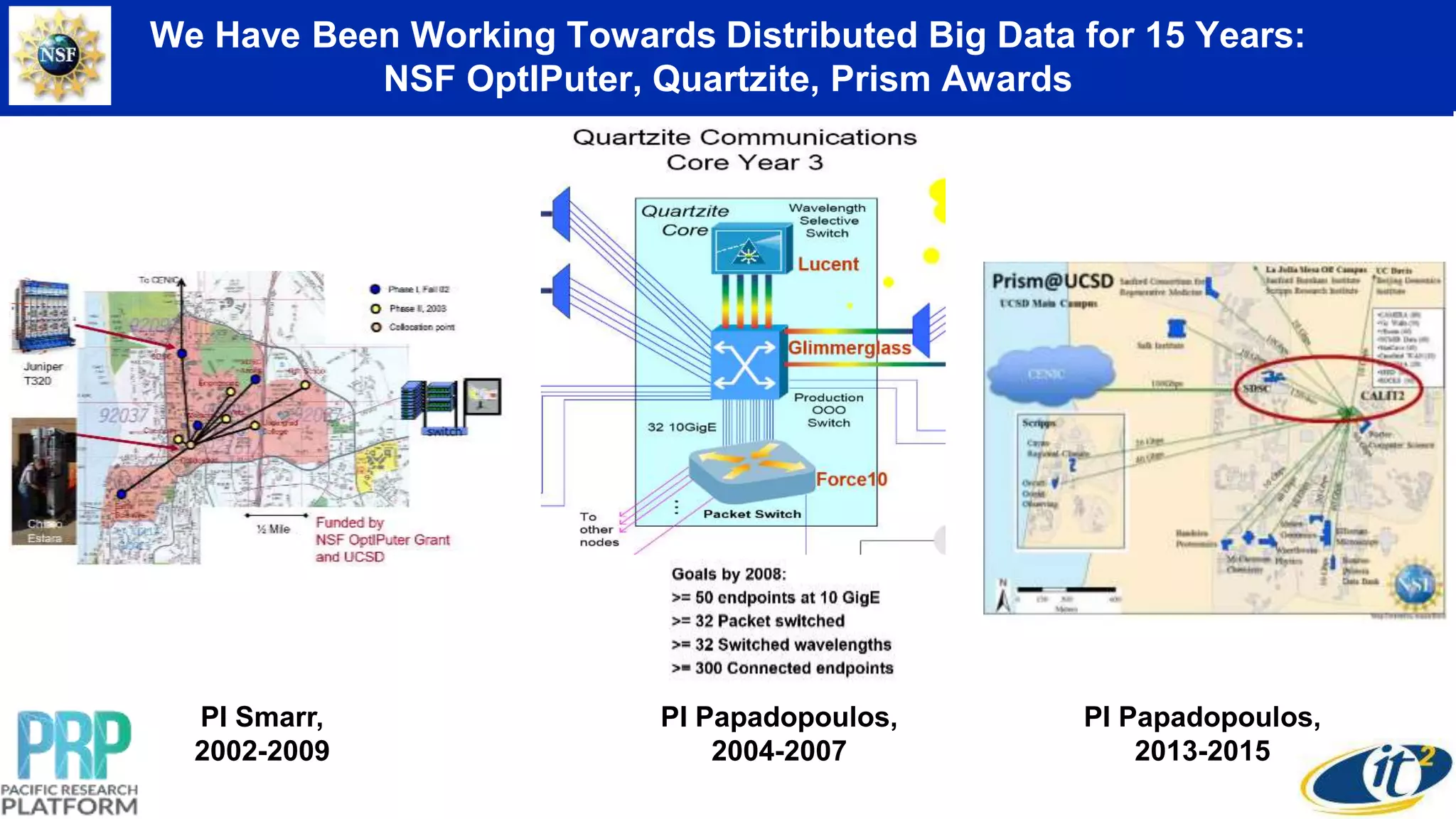

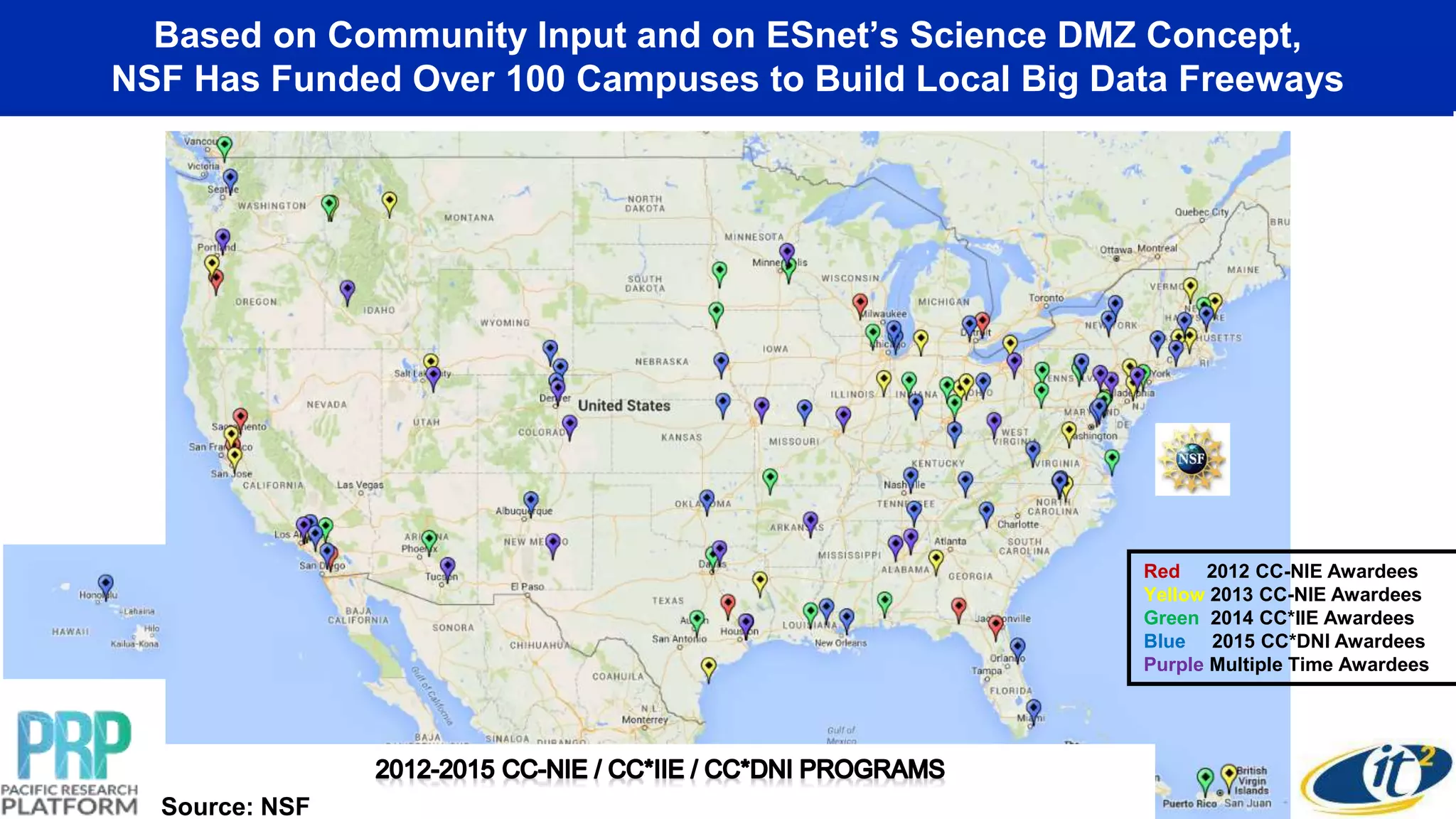

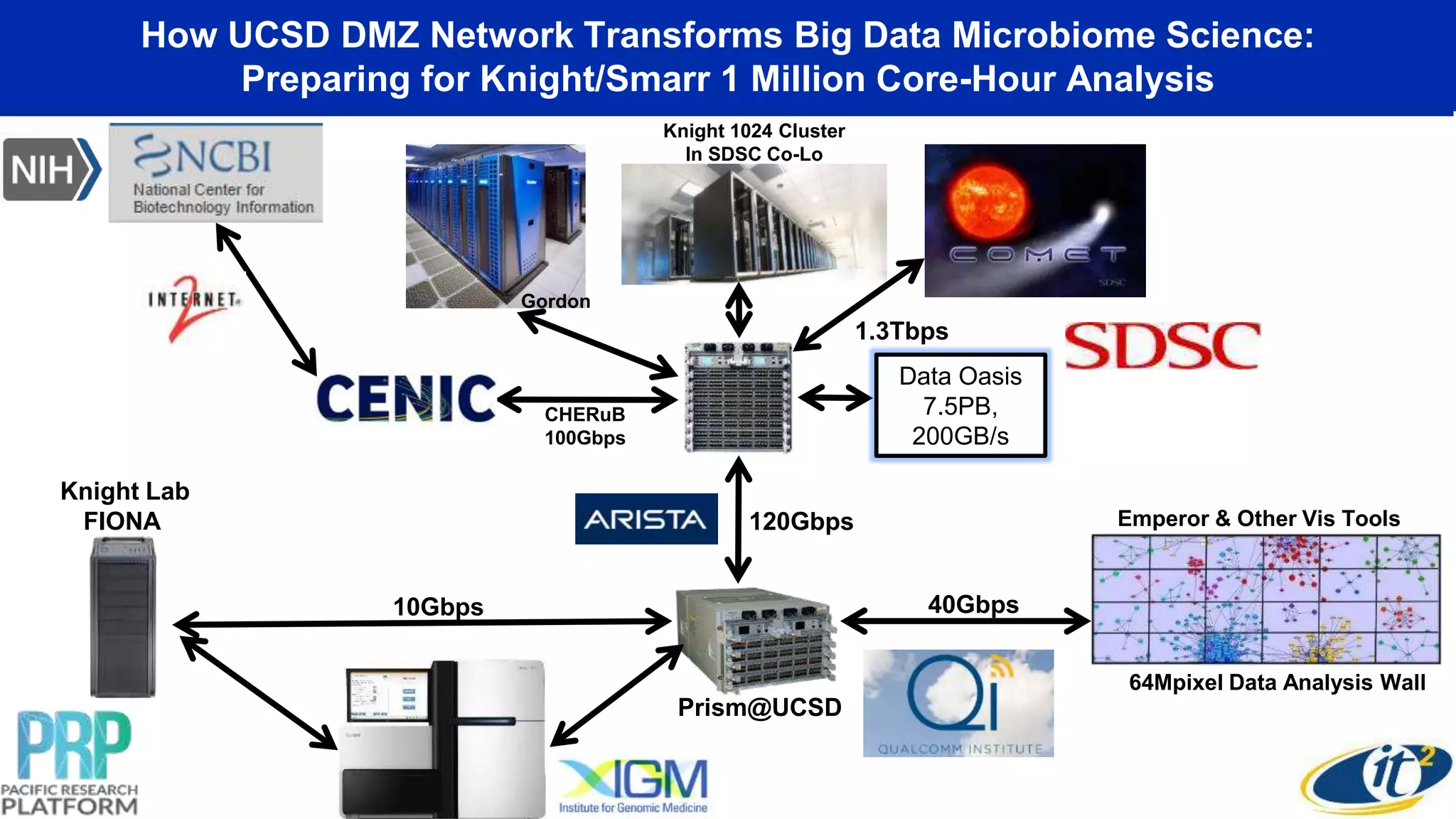

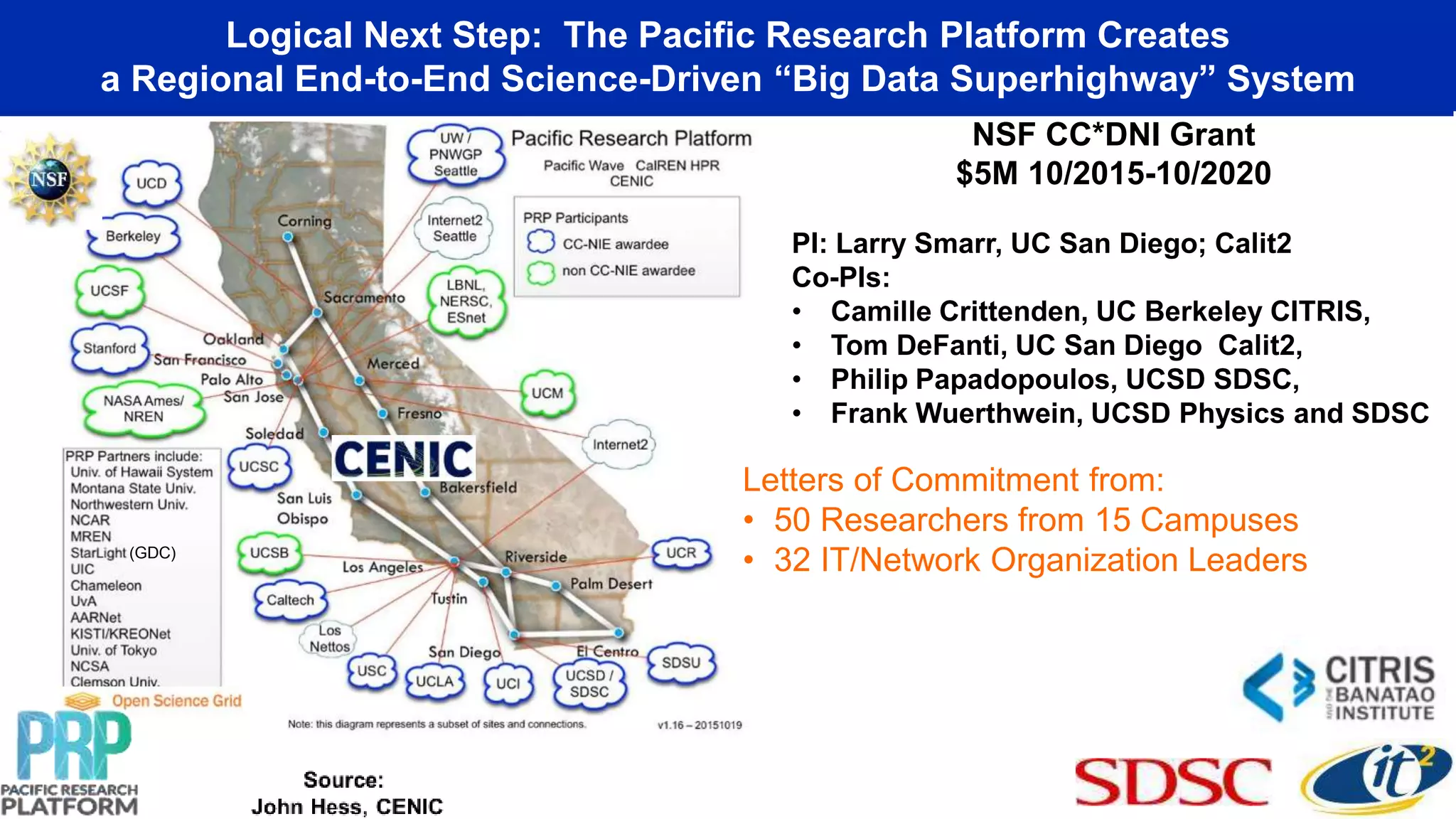

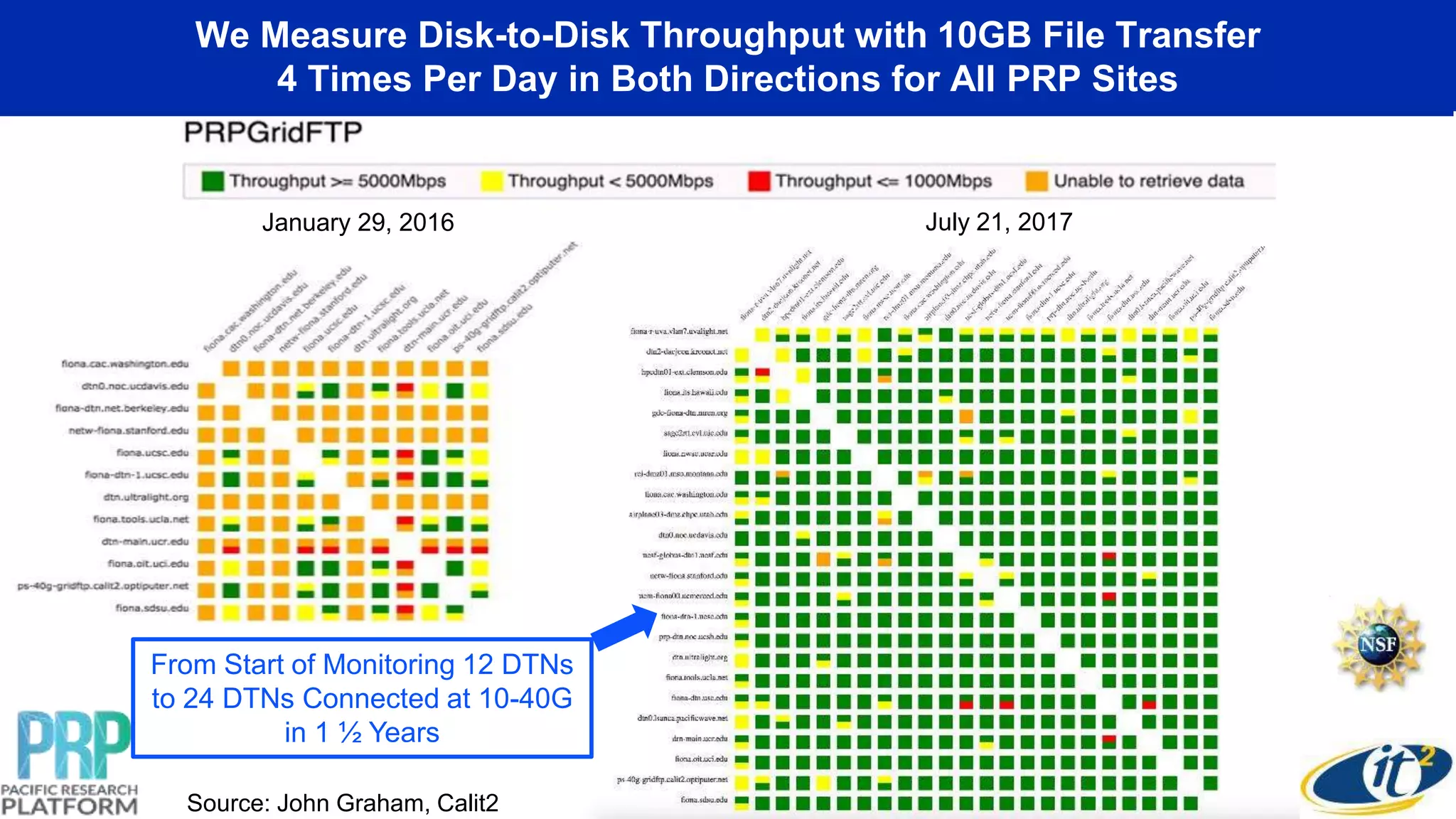

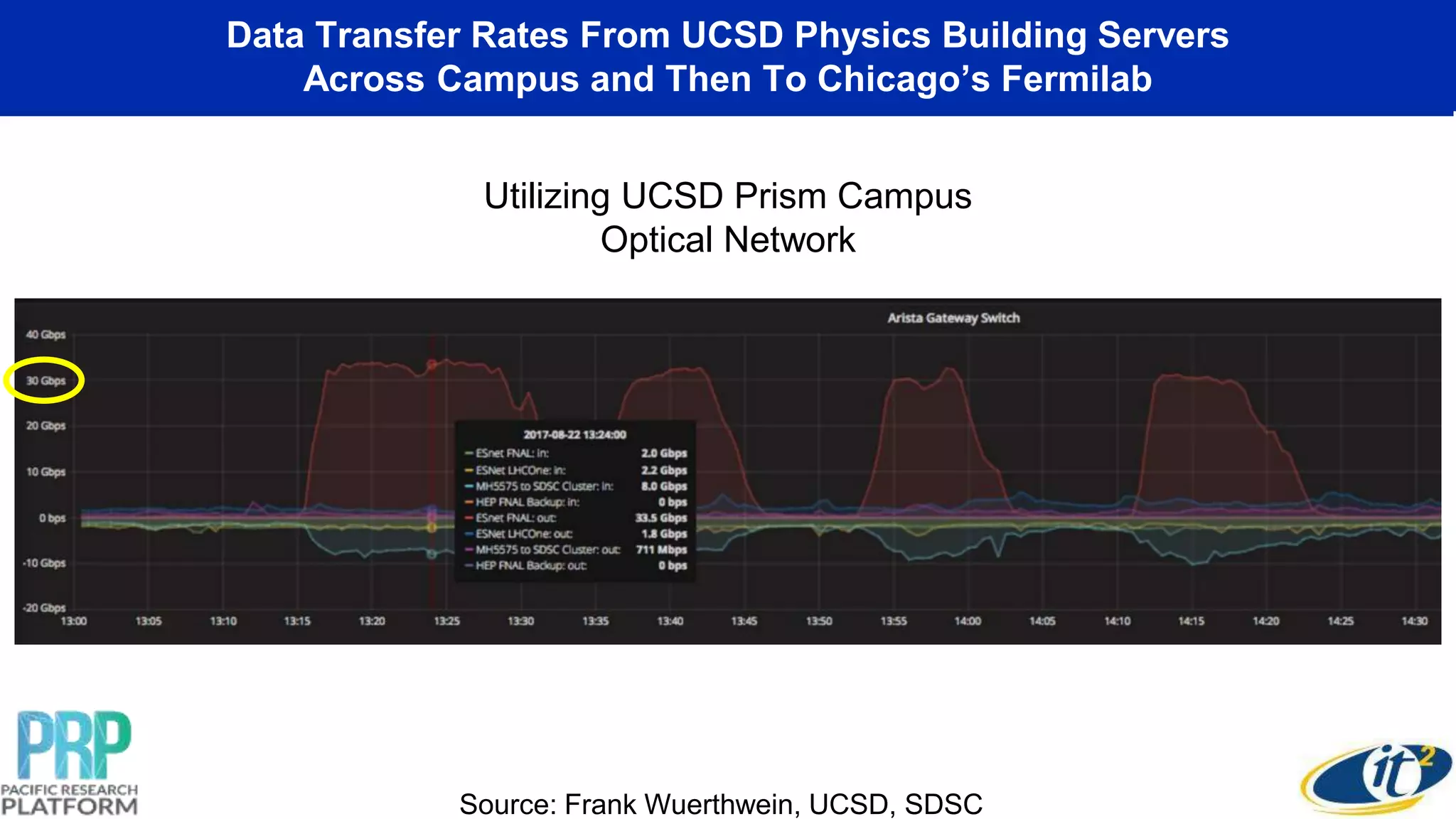

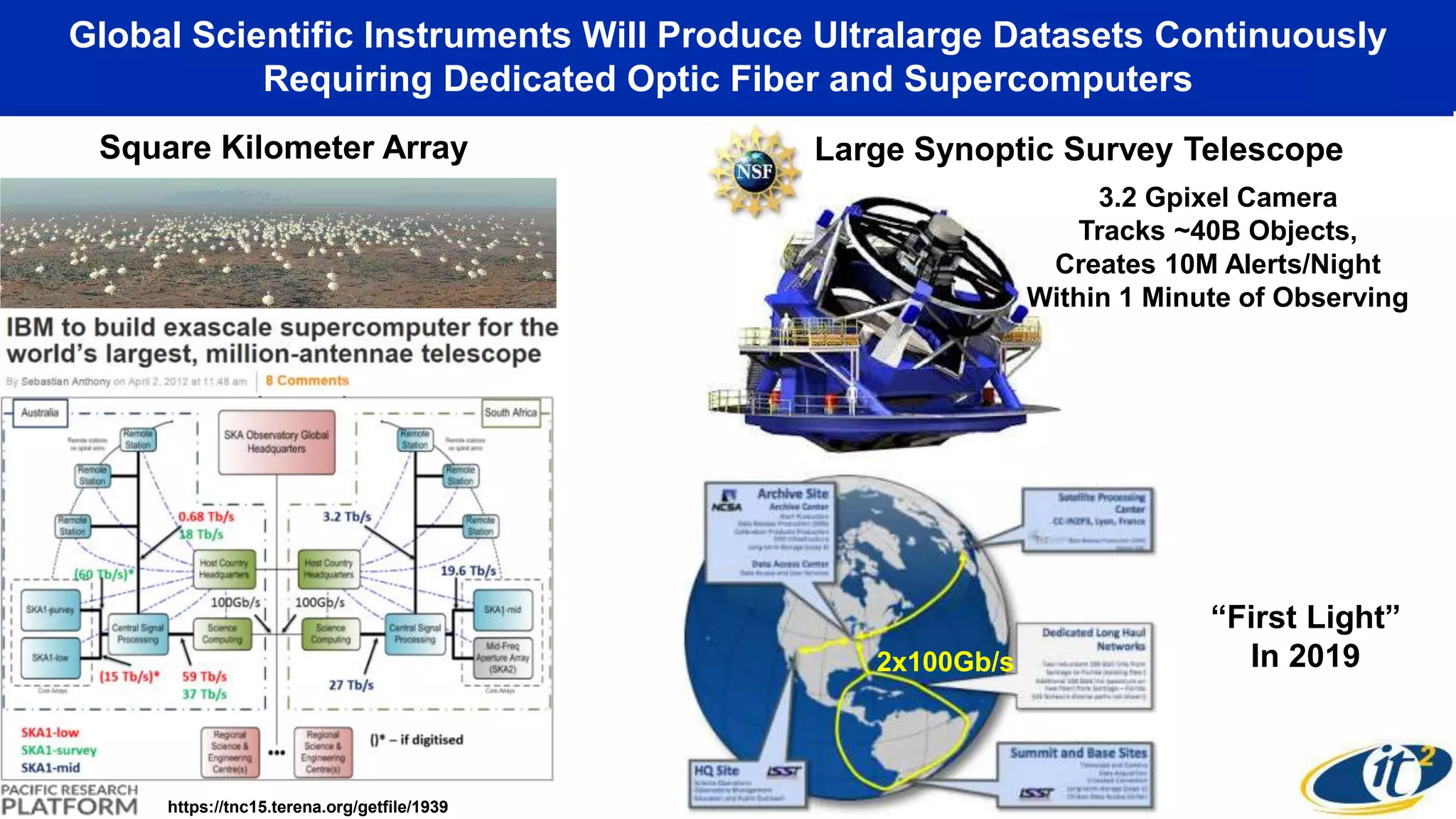

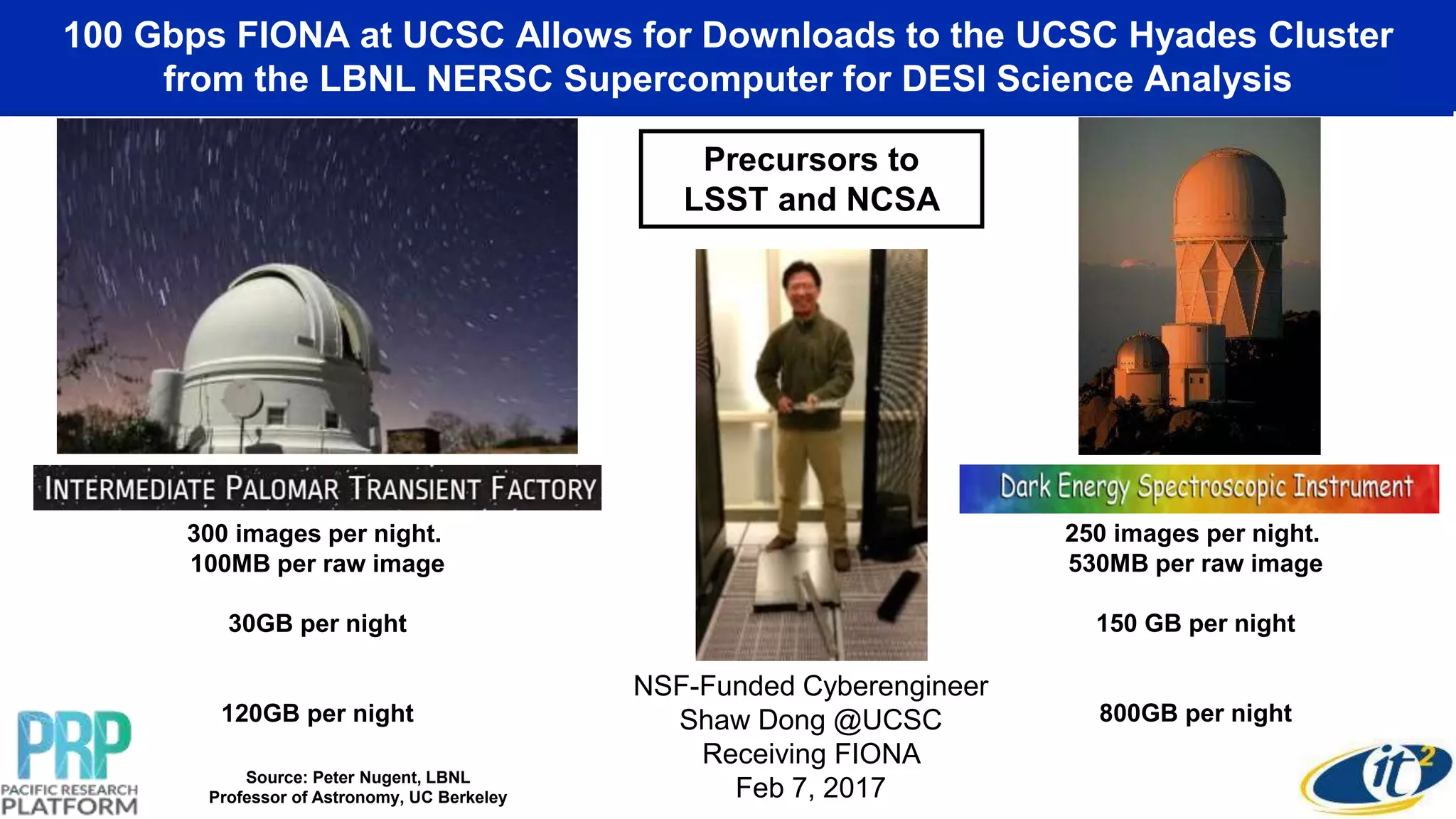

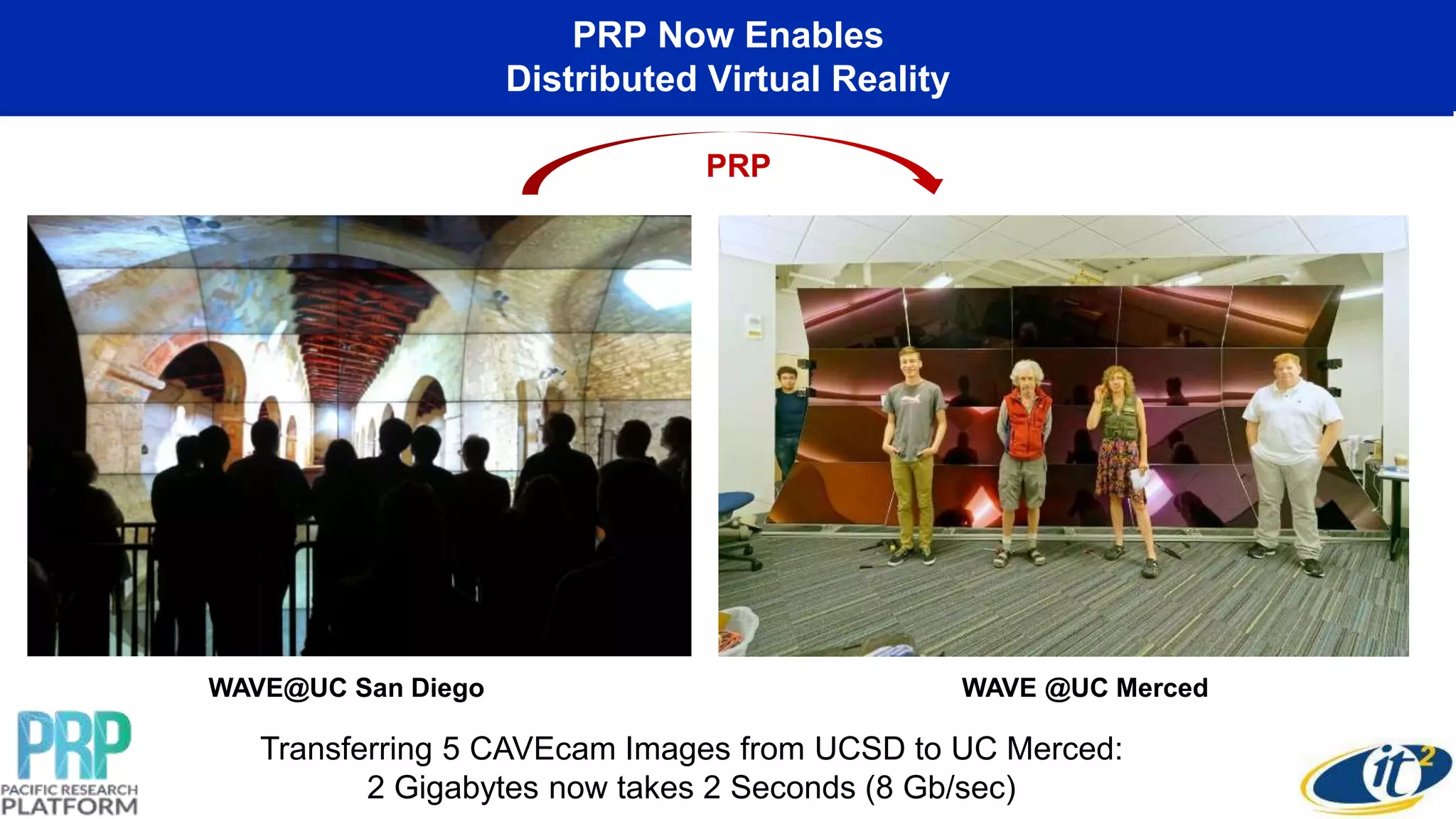

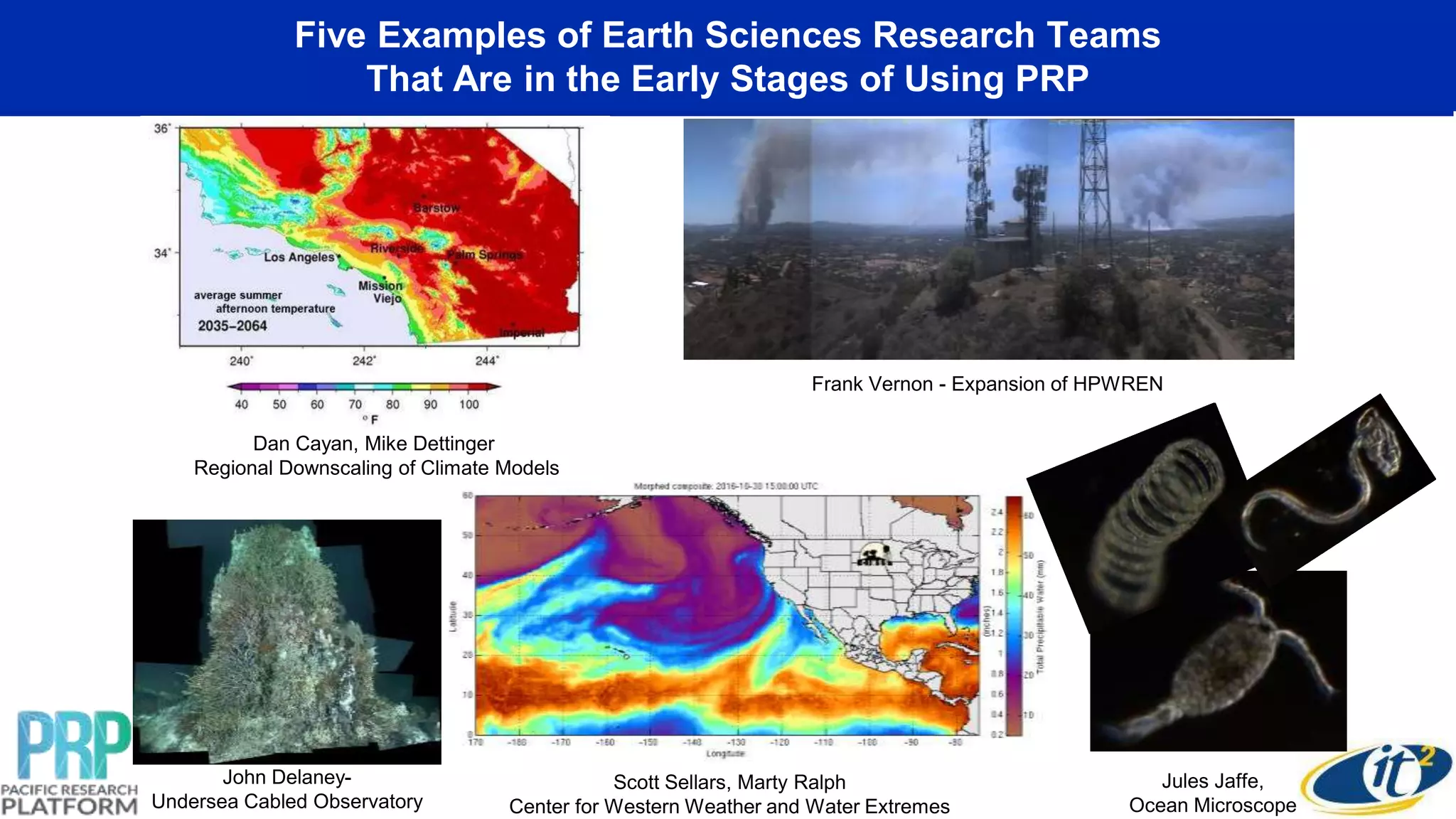

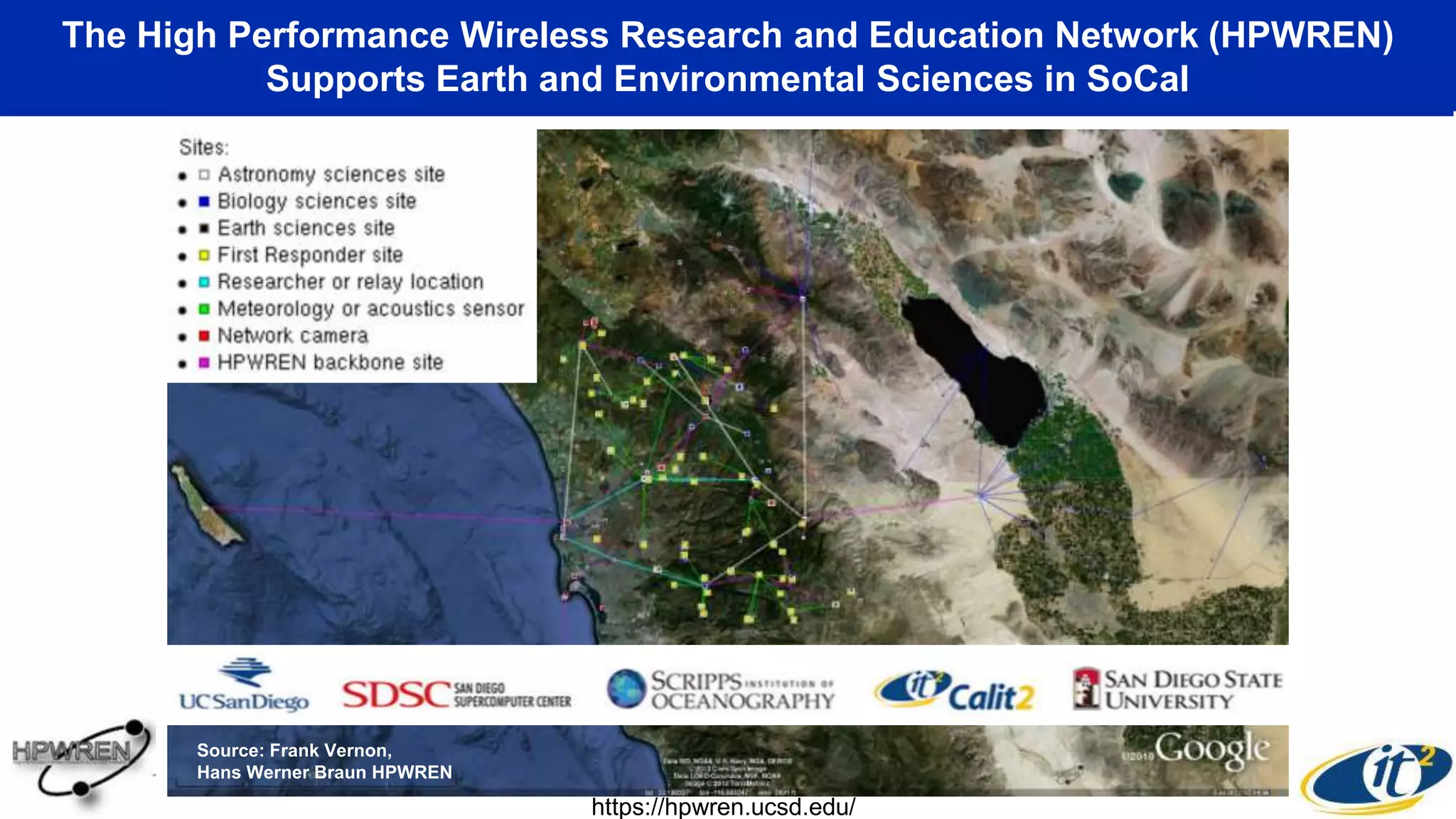

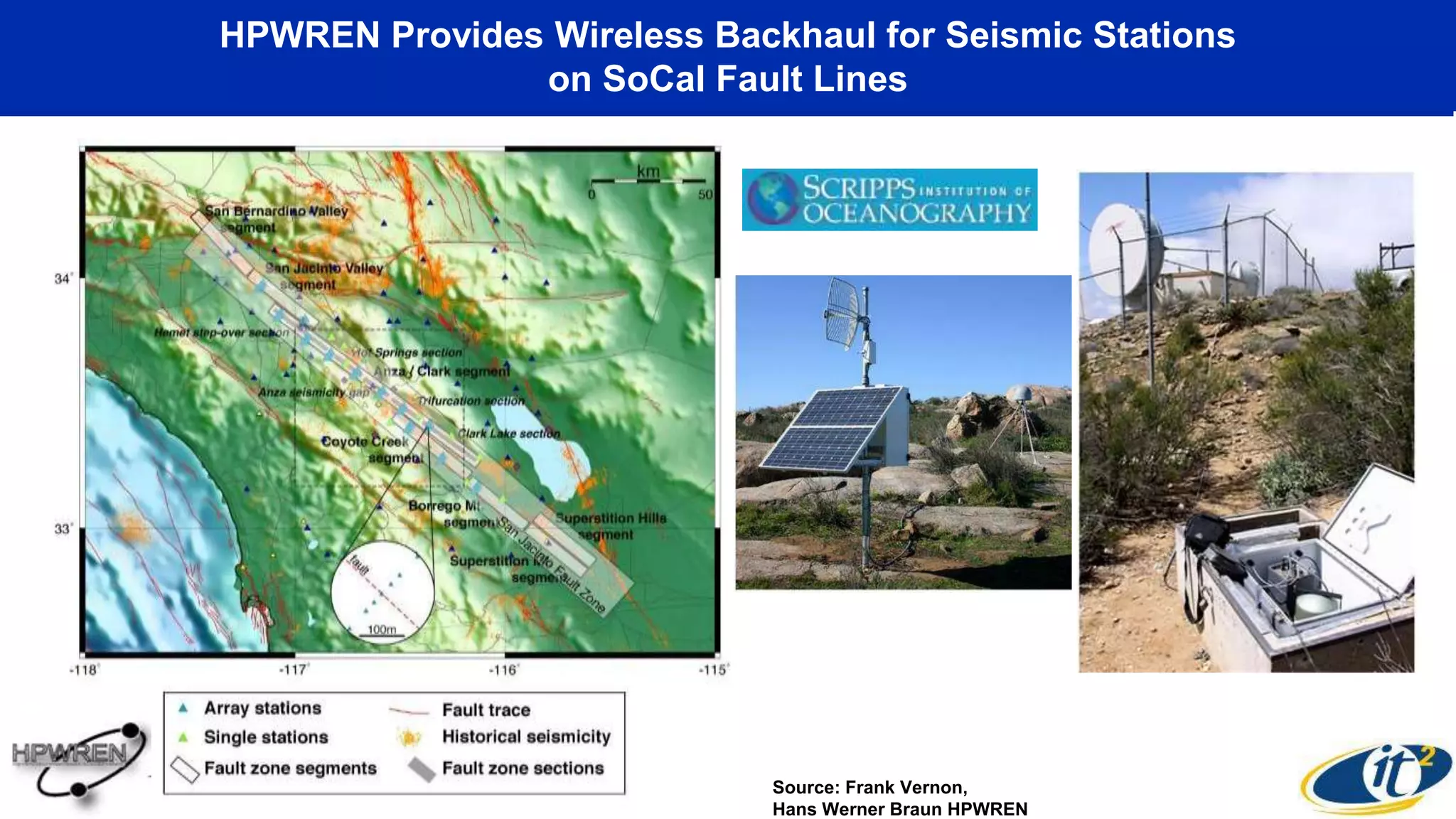

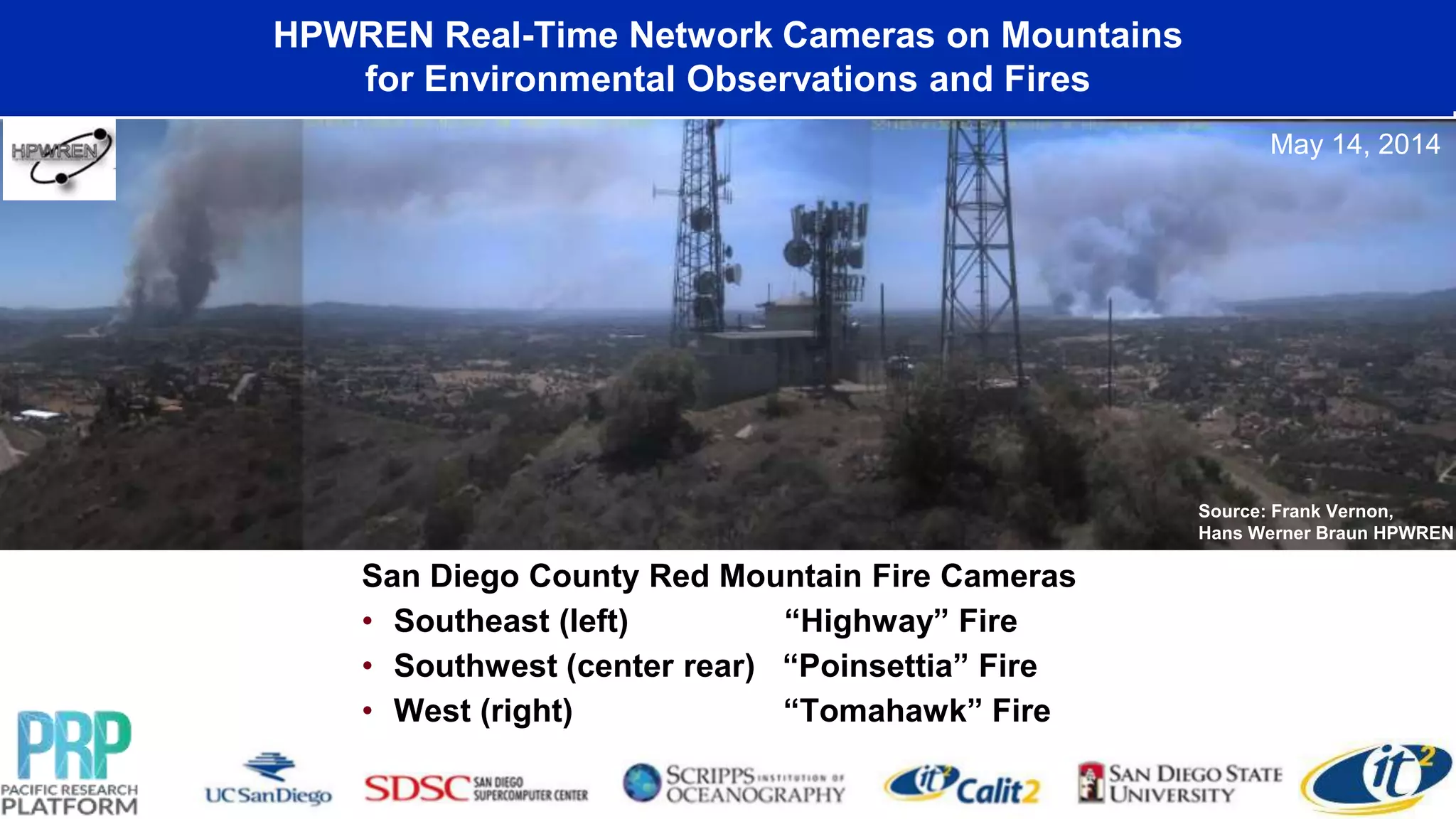

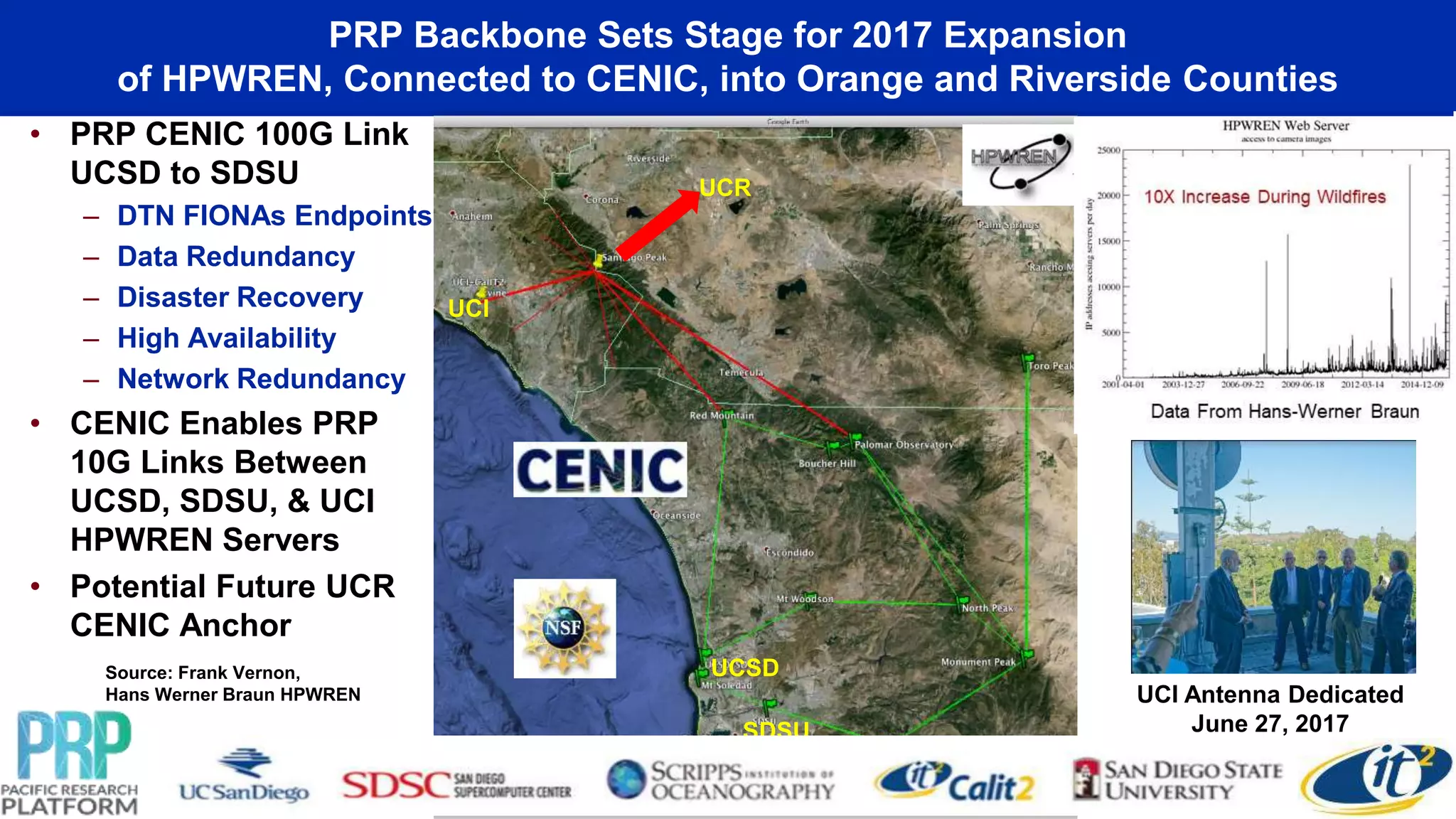

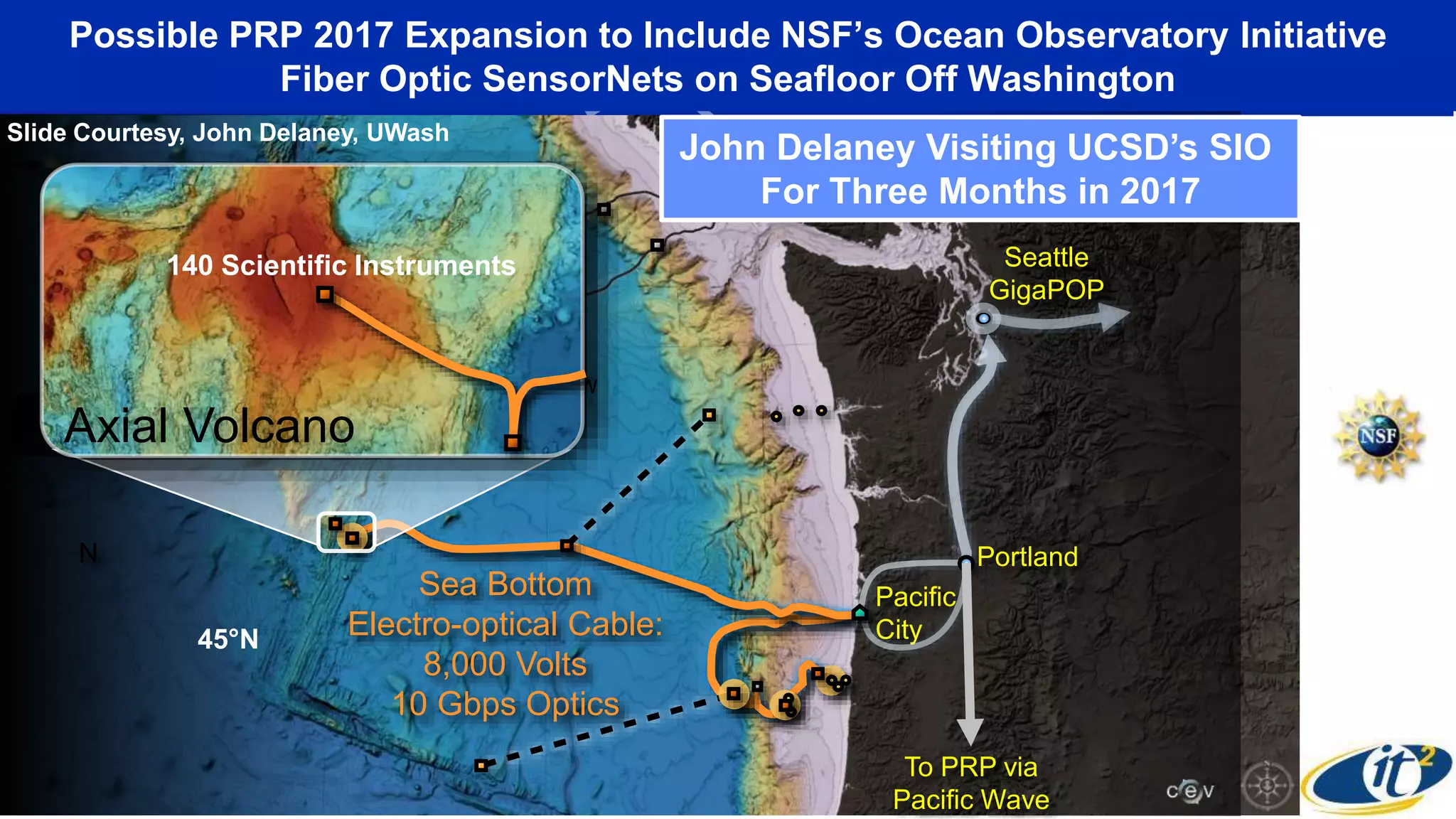

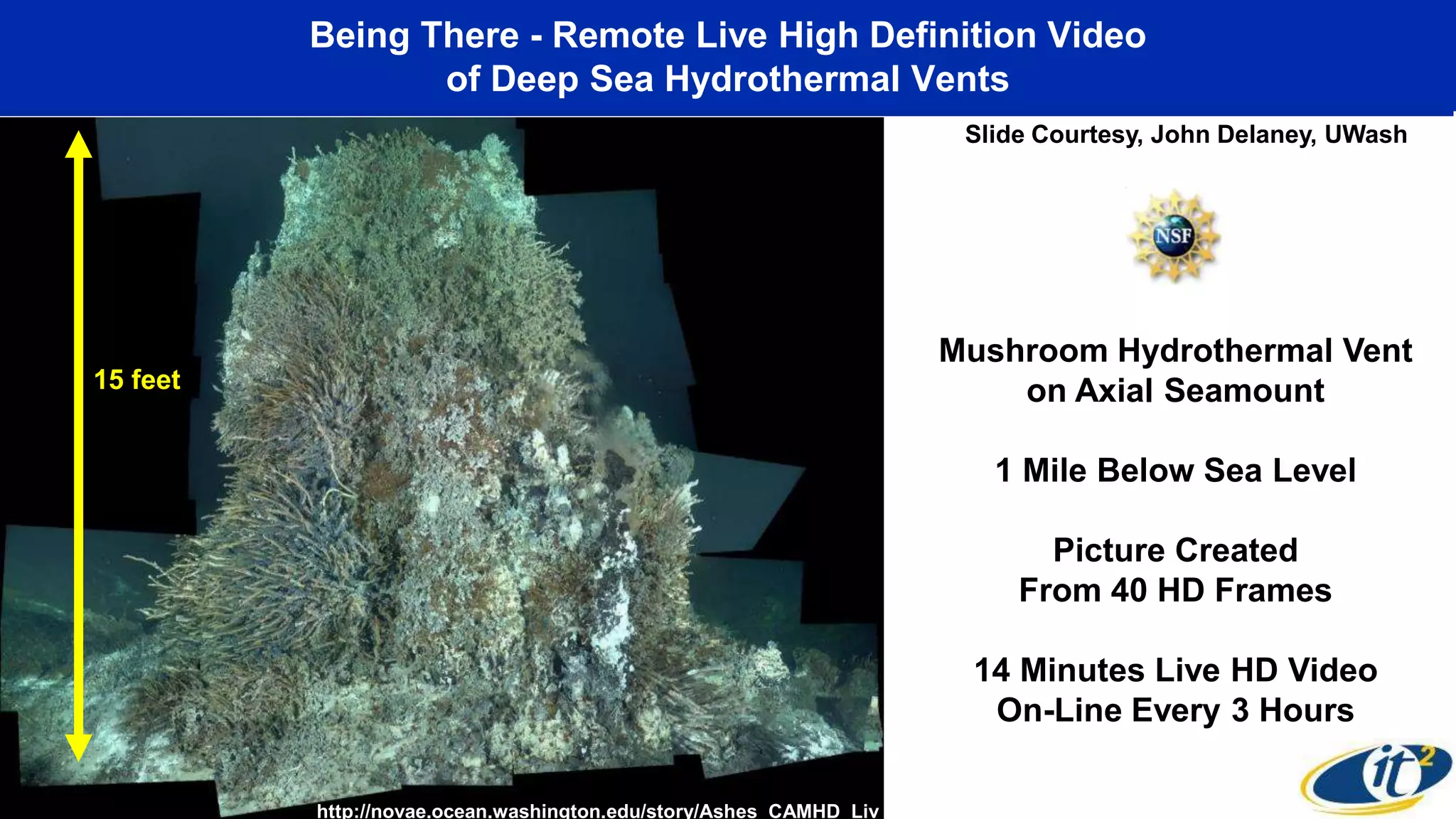

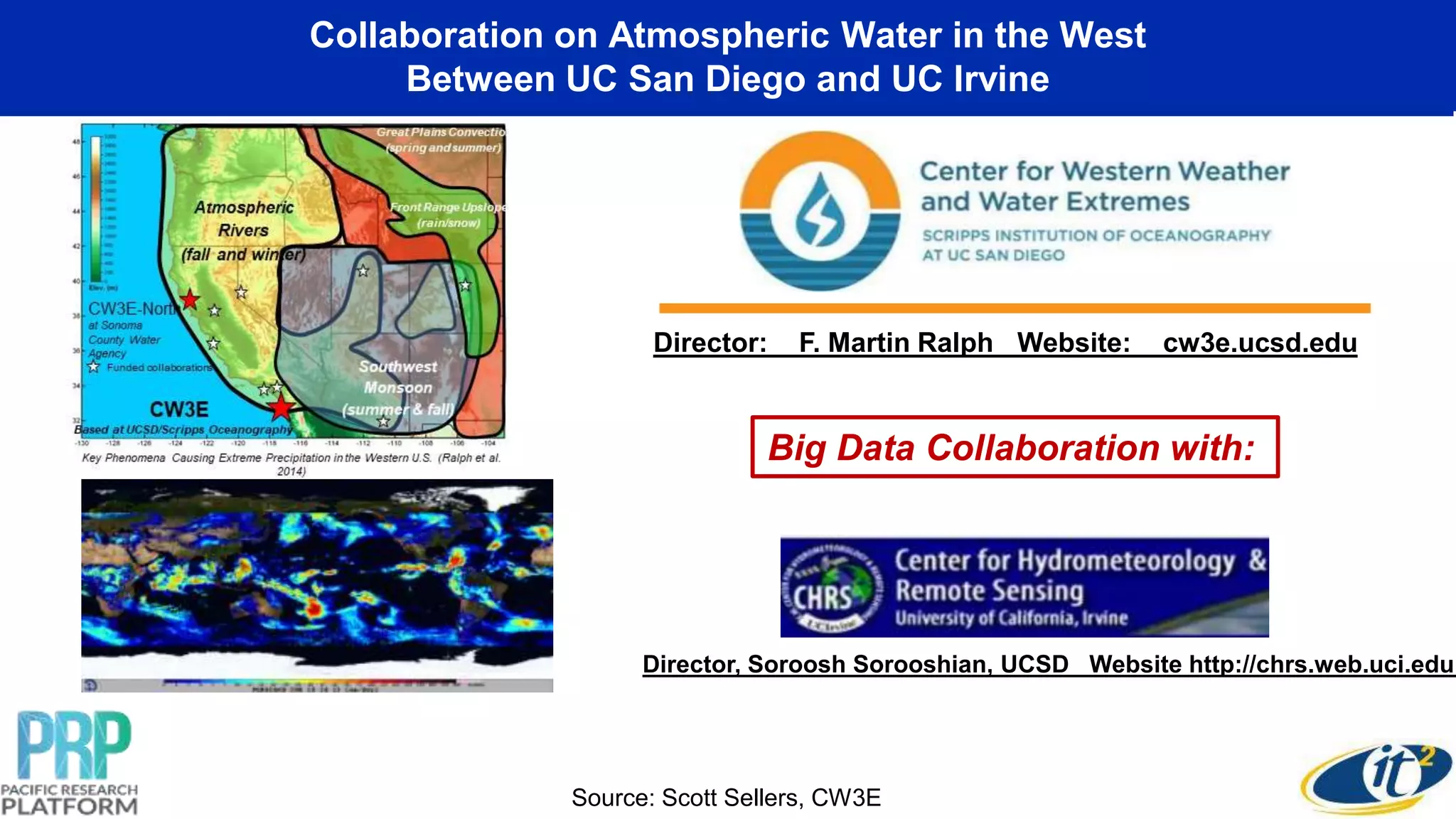

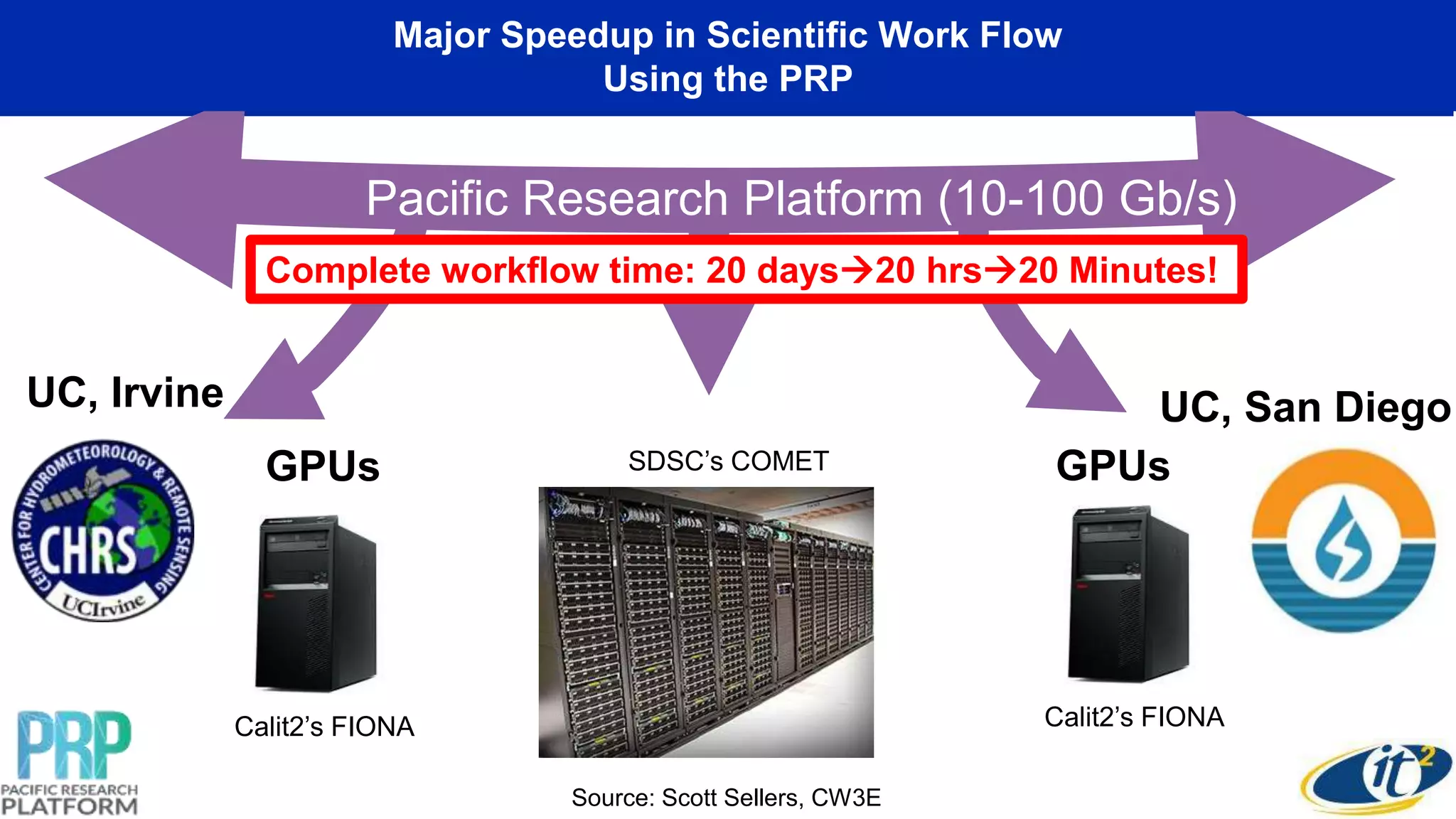

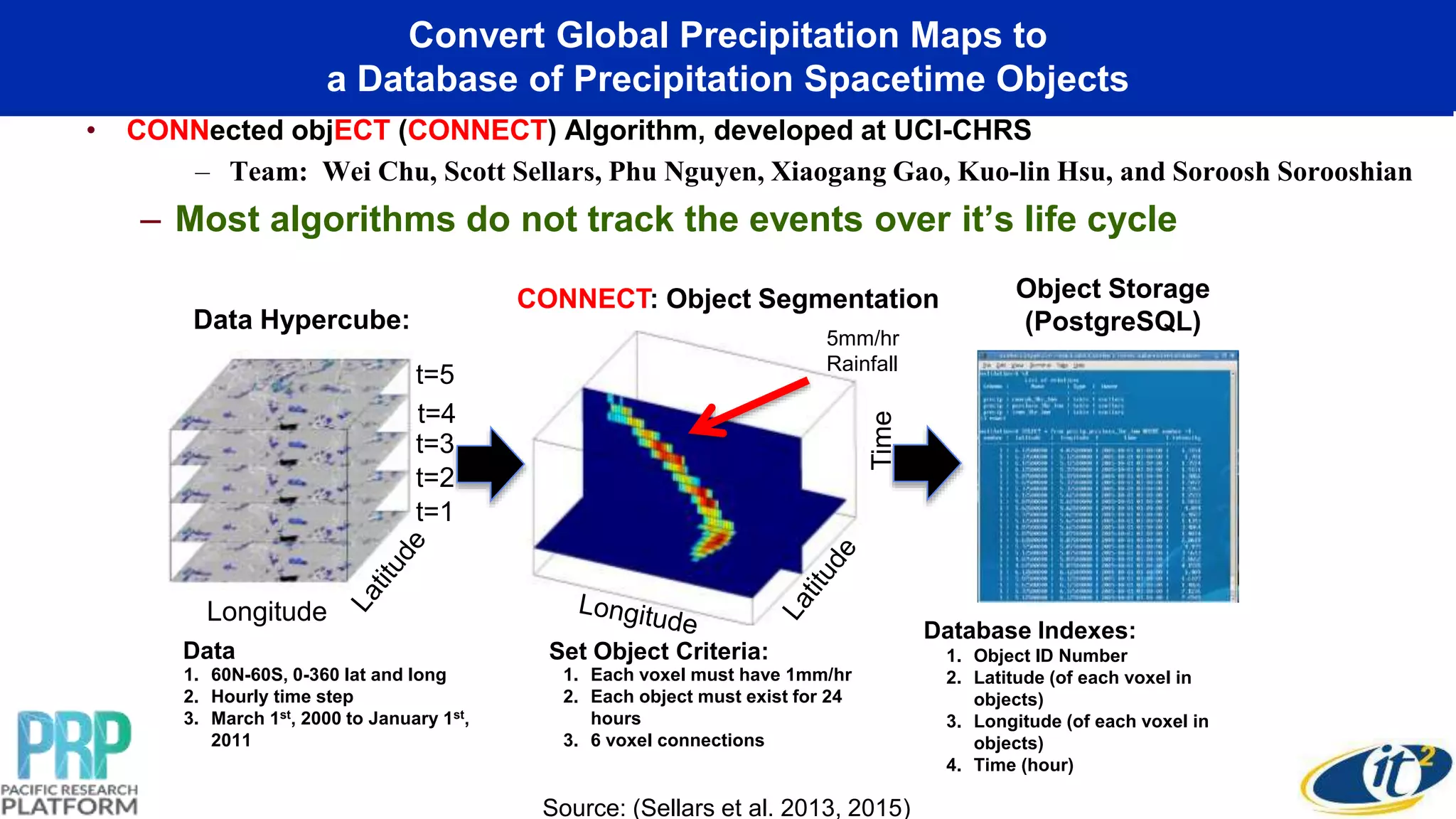

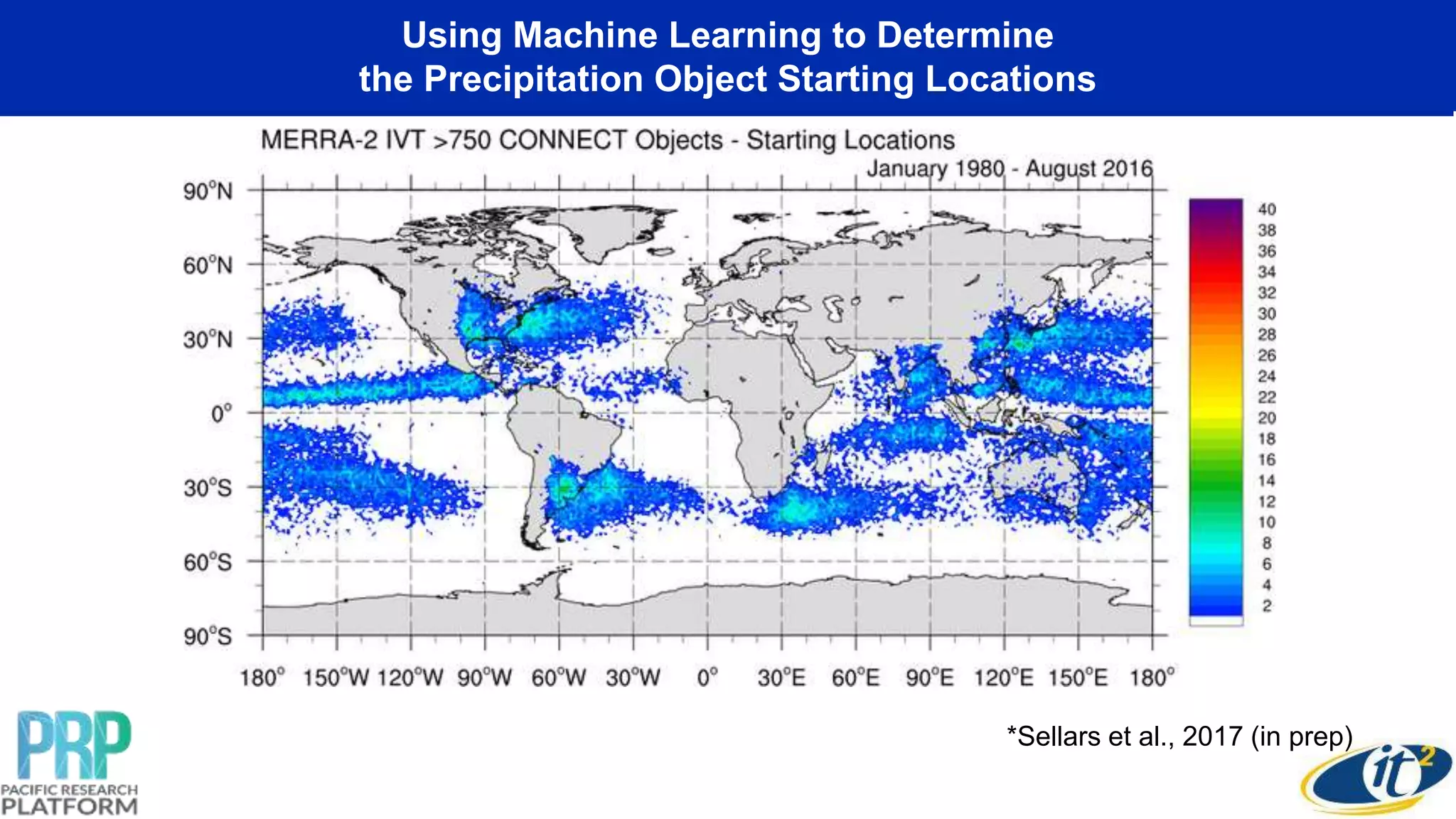

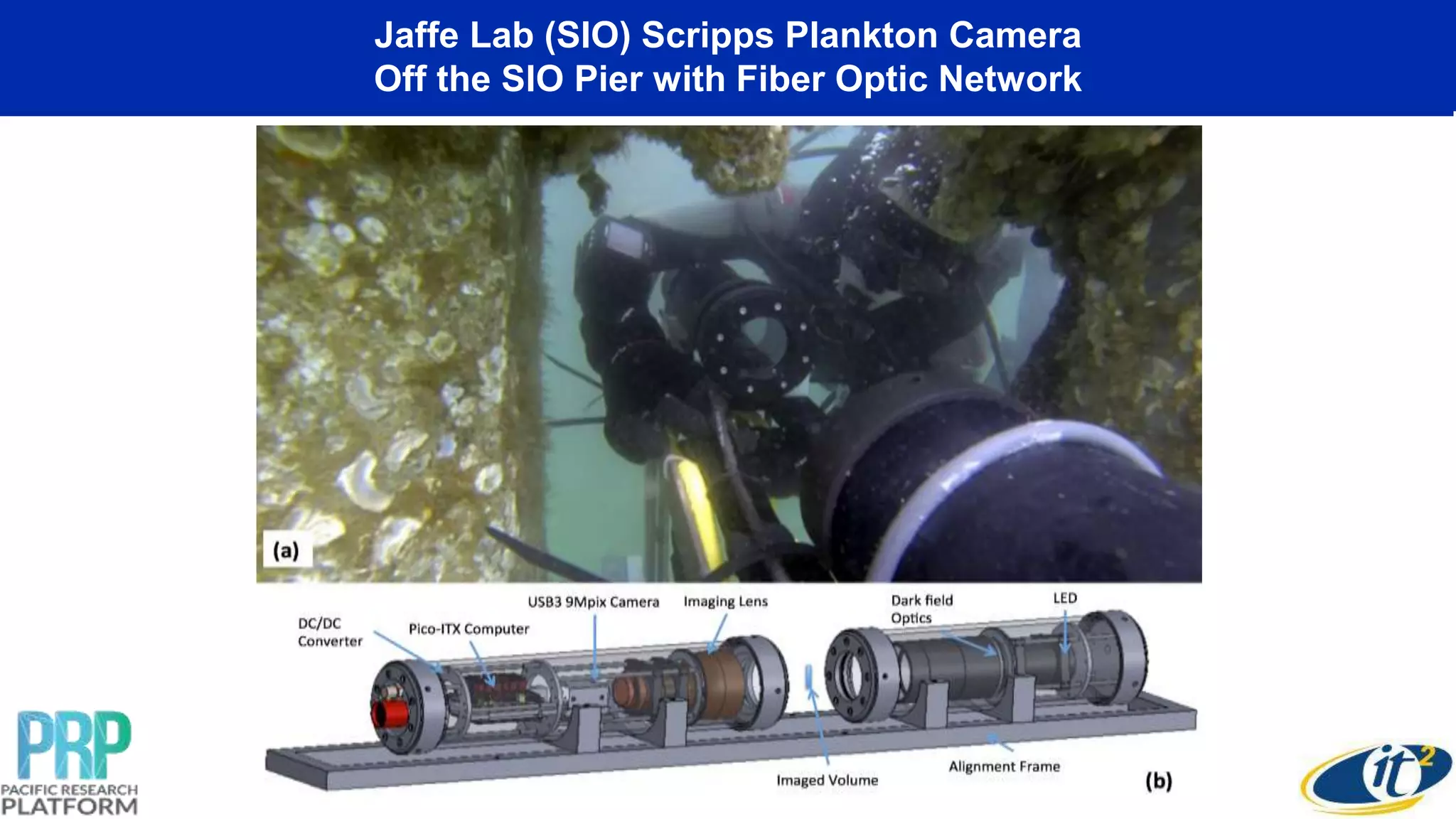

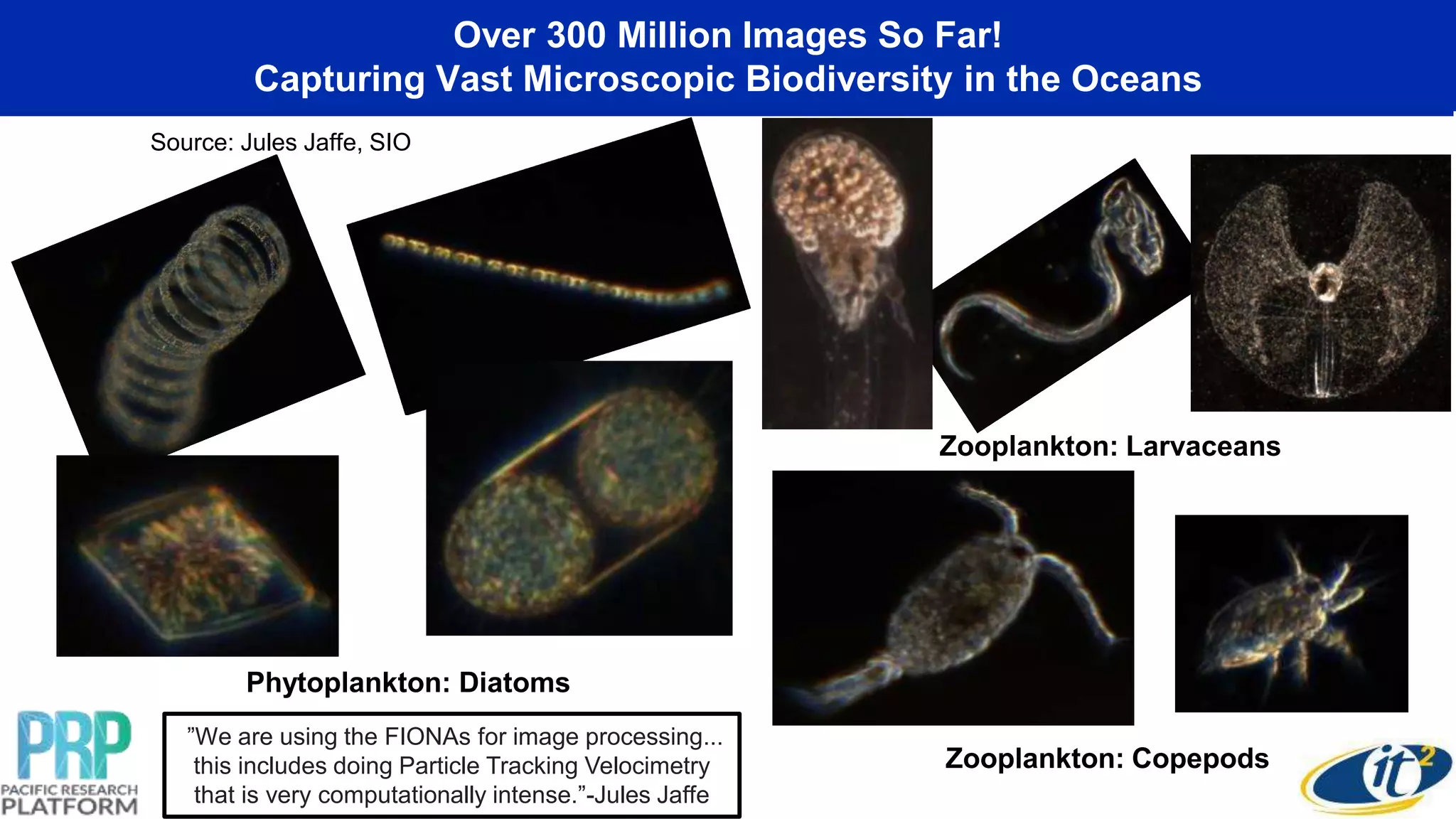

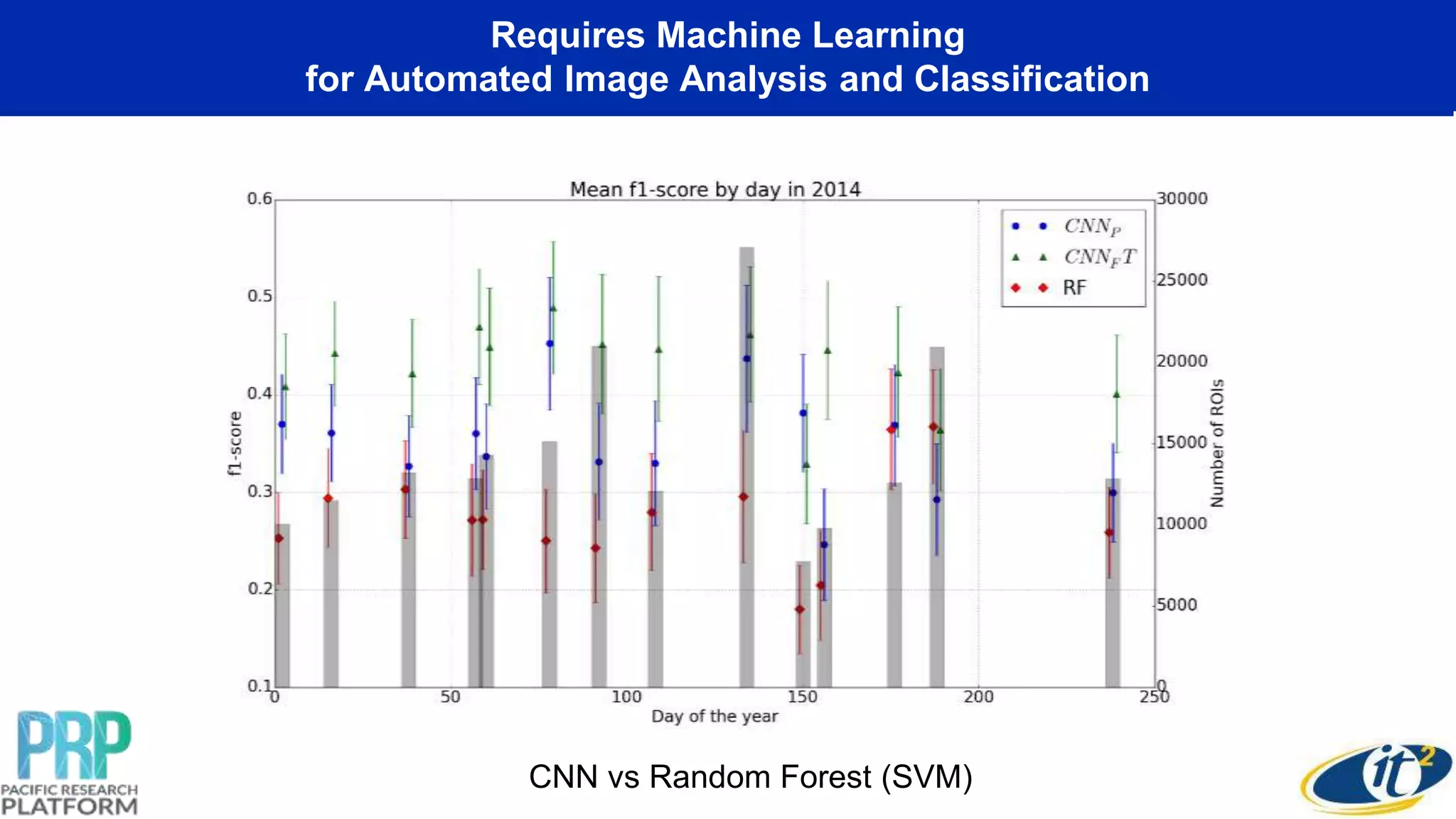

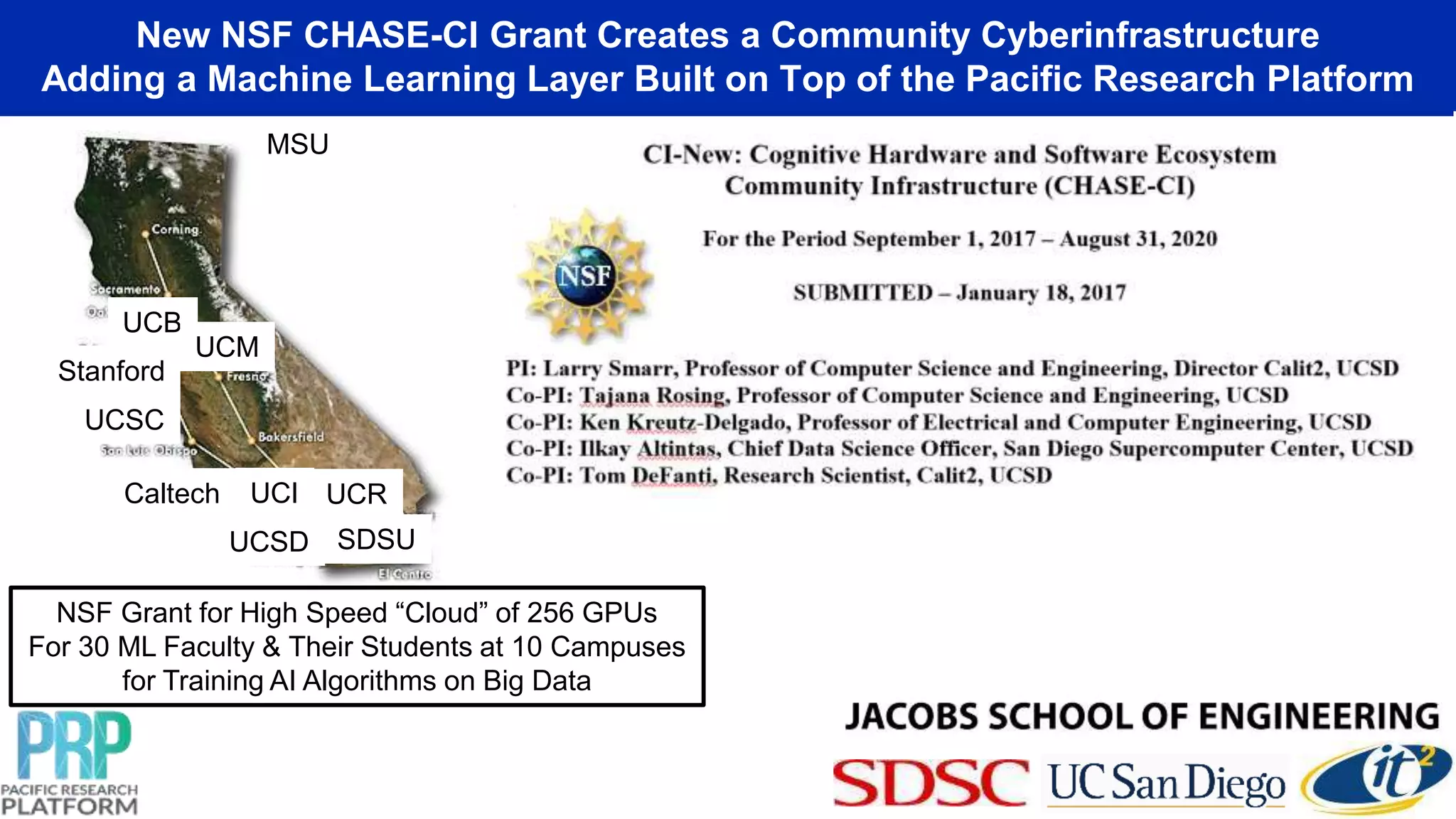

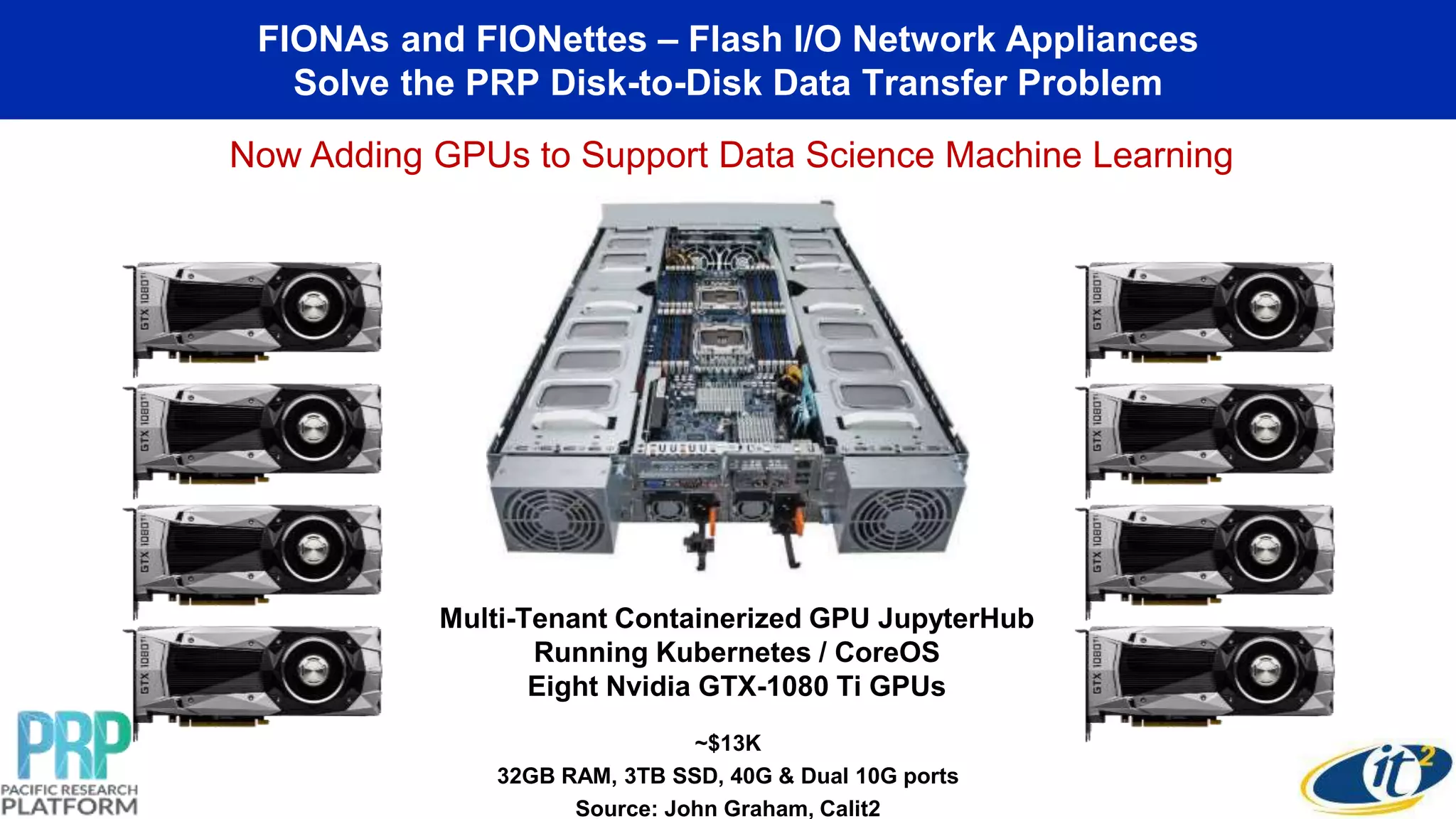

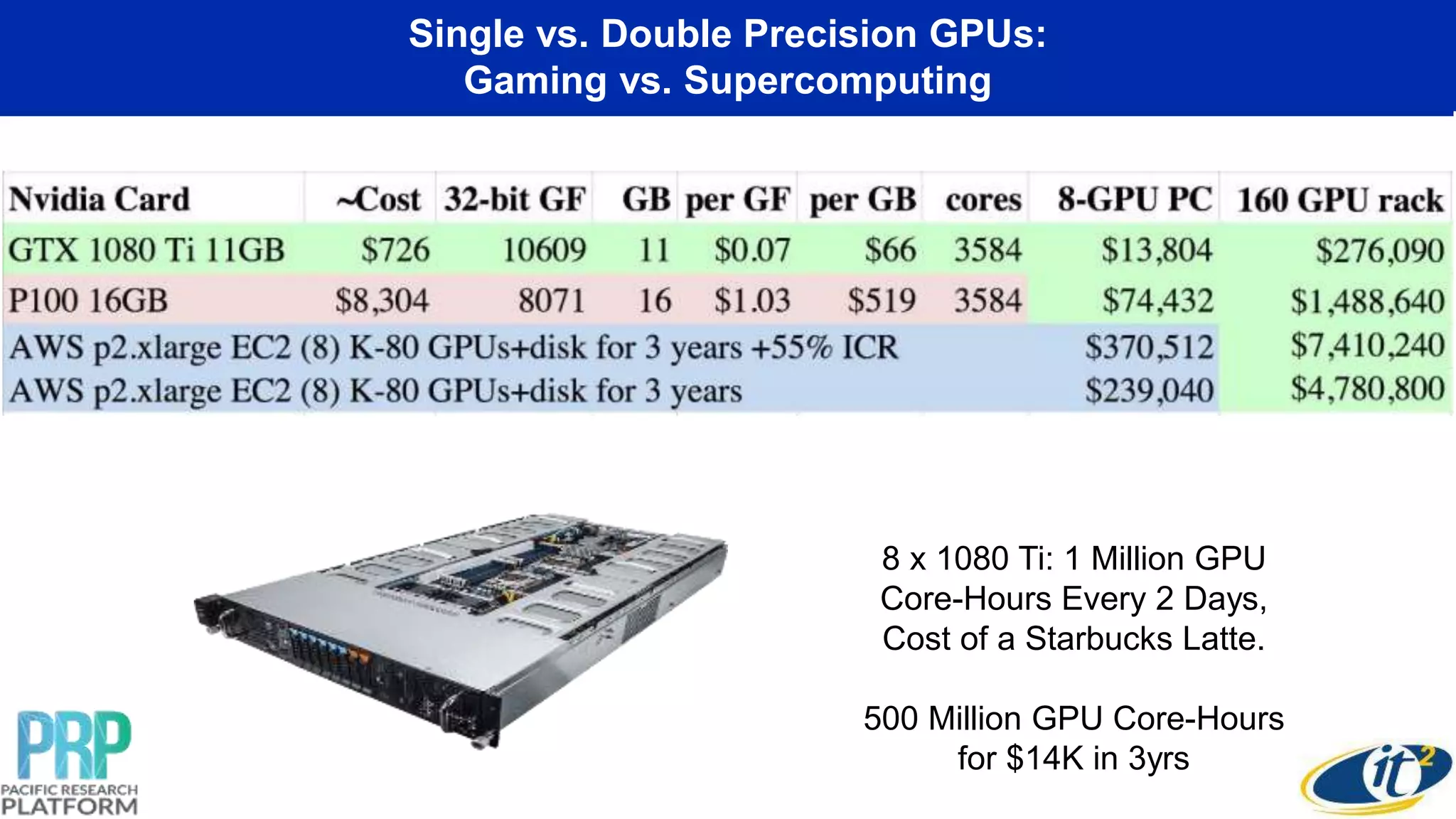

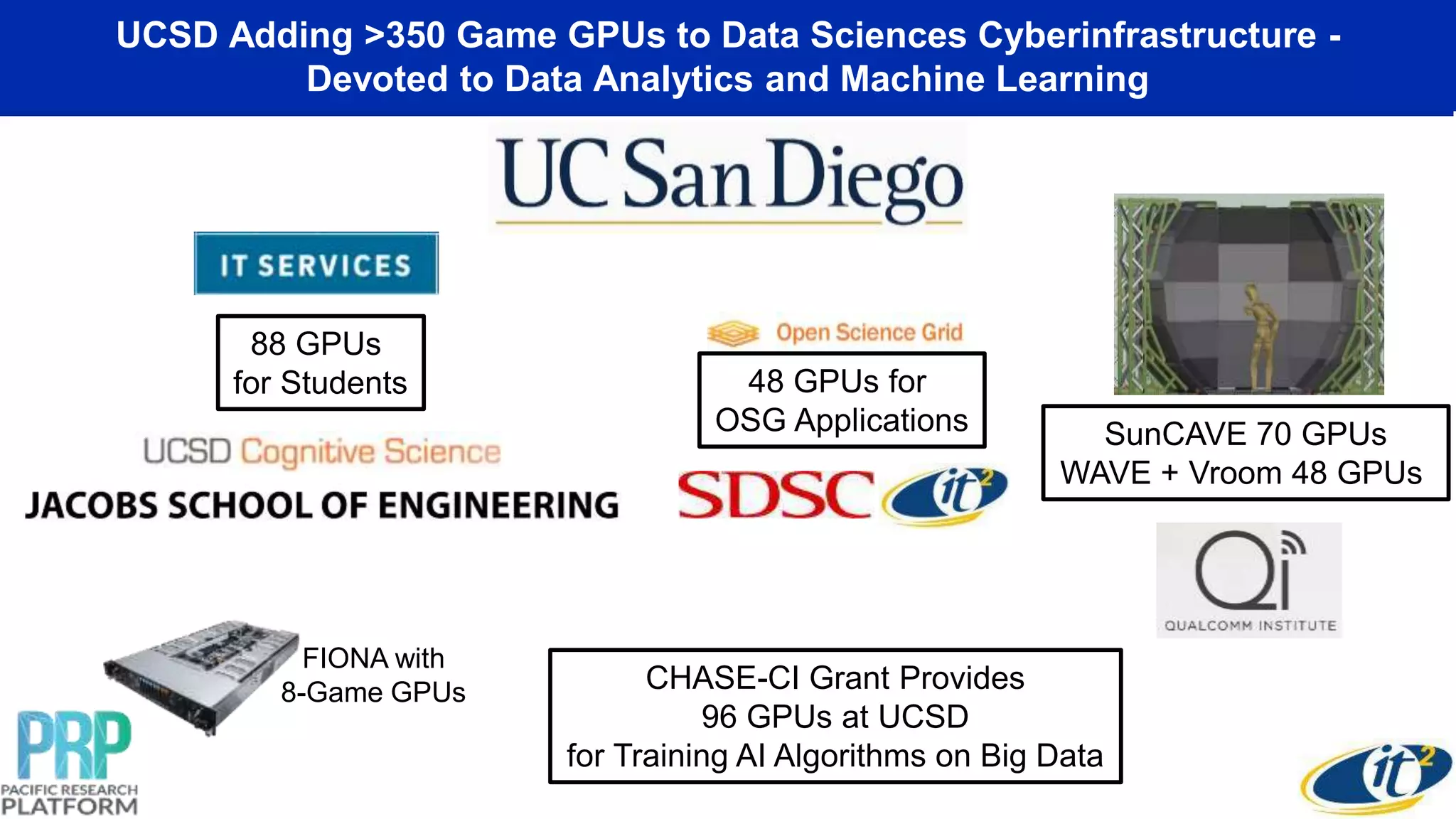

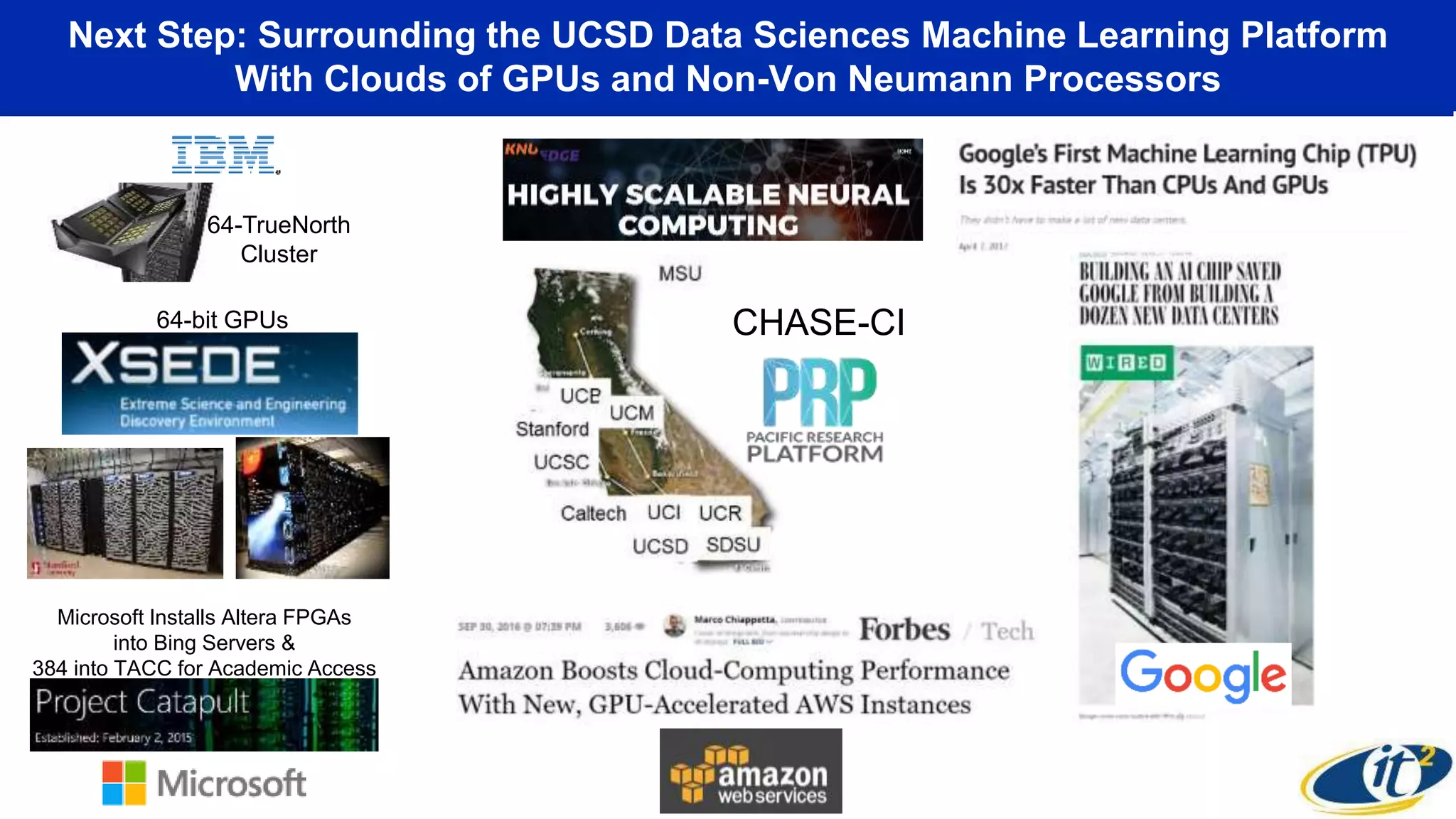

The Pacific Research Platform (PRP) aims to build a regional-scale cyberinfrastructure for big data analytics by linking data generators and data consumers over high-speed optical fiber networks. The NSF-funded initiative focuses on improving data transfer among multiple research campuses and on supporting scientific work that depends on analyzing large datasets. Key projects include faster movement of climate model data and multi-university collaborations that apply advanced machine learning techniques.