This document summarizes a research paper on timbral modeling for music artist recognition using i-vectors. The proposed method extracts i-vectors from songs to obtain a compact representation that captures artist variability. It first uses a Gaussian mixture model (GMM) to calculate statistics from frame-level features, then applies factor analysis to extract i-vectors from the resulting GMM supervectors. Experiments on the Artist20 dataset (20 artists) show that the method achieves better artist recognition performance than the baselines and works well with different backends, such as discriminant analysis and probabilistic linear discriminant analysis.
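The statistics step described above can be illustrated with a minimal sketch. It assumes a diagonal-covariance GMM whose parameters are already trained, and computes the zeroth- and first-order statistics that are commonly used as the input to i-vector factor analysis. The function names (`gmm_posteriors`, `baum_welch_stats`), the toy sizes, and the random data are illustrative assumptions and are not taken from the paper.

```python
import numpy as np


def gmm_posteriors(frames, weights, means, variances):
    """Per-frame component responsibilities of a diagonal-covariance GMM.

    frames: (T, D), weights: (C,), means and variances: (C, D).
    Returns an array of shape (T, C) whose rows sum to 1.
    """
    diff = frames[:, None, :] - means[None, :, :]            # (T, C, D)
    log_gauss = -0.5 * (
        np.sum(diff ** 2 / variances, axis=2)
        + np.sum(np.log(2.0 * np.pi * variances), axis=1)
    )                                                        # (T, C)
    log_joint = np.log(weights) + log_gauss
    # Subtract the row-wise maximum before exponentiating for stability.
    log_joint -= log_joint.max(axis=1, keepdims=True)
    post = np.exp(log_joint)
    return post / post.sum(axis=1, keepdims=True)


def baum_welch_stats(frames, weights, means, variances):
    """Zeroth-order and centered first-order statistics for one song."""
    post = gmm_posteriors(frames, weights, means, variances)
    n = post.sum(axis=0)                     # zeroth order, shape (C,)
    f = post.T @ frames                      # first order, shape (C, D)
    f_centered = f - n[:, None] * means      # centered on the GMM means
    return n, f_centered.reshape(-1)         # supervector-sized, (C * D,)


# Toy example: 4 components and 20-dimensional frames (hypothetical sizes).
rng = np.random.default_rng(0)
C, D, T = 4, 20, 500
frames = rng.normal(size=(T, D))
weights = np.full(C, 1.0 / C)
means = rng.normal(size=(C, D))
variances = np.ones((C, D))

n, f = baum_welch_stats(frames, weights, means, variances)
print(n.shape, f.shape)
```

With roughly 20-dimensional frames, a GMM with about a thousand components would give a stacked vector of about 20,000 entries, which matches the supervector size quoted in the slides; the component count itself is an assumption here.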

![Overview

• Introduction

o Artist recognition

o I-vector based systems

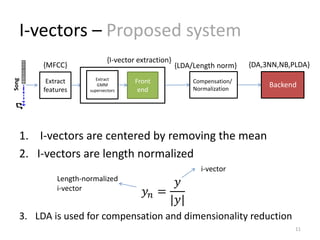

• I-vector Frontend

o Calculate statistics [GMM supervectors ]

o Factor analysis [estimate hidden factors to extract I-vectors]

• Proposed method:

o Normalization and compensation techniques

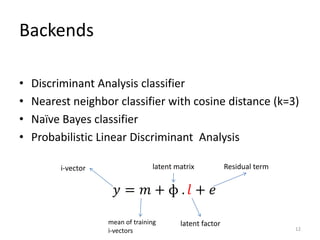

o Backends

• Experiments

o Setup

o Evaluation

o Baselines

o Results

• Conclusion

2](https://image.slidesharecdn.com/timbralmodelingformusicartistrecognitionusingi-vectors-151029165323-lva1-app6892/85/Timbral-modeling-for-music-artist-recognition-using-i-vectors-2-320.jpg)

![I-vector Factor Analysis – Terminology](https://image.slidesharecdn.com/timbralmodelingformusicartistrecognitionusingi-vectors-151029165323-lva1-app6892/85/Timbral-modeling-for-music-artist-recognition-using-i-vectors-5-320.jpg)

I-vector Factor Analysis – Terminology

• Step 1: Feature extraction. Produces frame-level features in the frame-level feature space [~20].

• Step 2: Statistics calculation. Produces the GMM supervector in the GMM space [~20,000].

• Step 3: Factor analysis. Produces the i-vector in the Total Variability Space (TVS) [~400].

Other diagram labels: Spaces, Features, Factors, Total factors, hidden.

Artist variability: the variability that appears between different artists.

Session variability: the variability that appears within the songs of an artist.

Total variability: artist + session variability.
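The slide names the spaces but does not state the model that links them. As a hedged reminder, the standard total variability formulation from the i-vector literature can be written as below; the notation is an assumption and is not taken from the slides. Here $M$ is the song-dependent GMM supervector (~20,000 entries), $m$ is the supervector of the GMM means, $T$ is the low-rank total variability matrix (~20,000 × ~400), and $w$ is the vector of total factors, whose point estimate $\hat{w}$ is the i-vector (~400 entries). $N$ is the block-diagonal matrix of zeroth-order statistics, $\tilde{F}$ is the centered first-order statistics supervector, and $\Sigma$ is the block-diagonal covariance of the GMM.

```latex
% Total variability model (standard i-vector formulation; notation assumed)
M = m + T w, \qquad w \sim \mathcal{N}(0, I)

% Point estimate of the i-vector from the statistics of one song
\hat{w} = \left( I + T^{\top} \Sigma^{-1} N T \right)^{-1} T^{\top} \Sigma^{-1} \tilde{F}
```

Because a single matrix $T$ models all variability, artist and session variability are not separated at this stage, which is consistent with the slide's definition of total variability as their sum.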

![Experiments – Baselines](https://image.slidesharecdn.com/timbralmodelingformusicartistrecognitionusingi-vectors-151029165323-lva1-app6892/85/Timbral-modeling-for-music-artist-recognition-using-i-vectors-15-320.jpg)

Experiments – Baselines

Best artist recognition performance reported on the Artist20 database:

1. Single GMM: [D. PW Ellis, 2007]

– Provided with the dataset

2. Signature-based approach: [S. Shirali, 2009]

– Generates compact signatures and compares them using graph matching

3. Sparse modelling: [L. Su, 2013]

– Sparse feature learning method with a ‘bag of features’ using the magnitude and phase parts of the spectrum

4. Multivariate kernels: [P. Kuksa, 2014]

– Uses multivariate kernels with direct uniform quantization

5. Alternative:

– Uses the same structure as the proposed method; only the i-vector extraction block is replaced with PCA

Pipeline of the alternative baseline (diagram): Song → Extract features {MFCC} → GMM supervectors → Frontend {PCA} → Compensation/Normalization {LDA/Length norm} → Backend {DA}
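The frontend and normalization stages of the PCA-based alternative can be sketched as follows. This is a minimal illustration under stated assumptions: PCA is computed with a plain SVD, only length normalization is shown (the LDA compensation and the DA backend are omitted), and the function names, toy sizes, and random supervectors are hypothetical rather than taken from the paper.

```python
import numpy as np


def fit_pca(supervectors, n_components):
    """Fit PCA on one supervector per row; return the mean and components."""
    mean = supervectors.mean(axis=0)
    # Rows of vt are orthonormal principal directions, ordered by variance.
    _, _, vt = np.linalg.svd(supervectors - mean, full_matrices=False)
    return mean, vt[:n_components]


def project(supervectors, mean, components):
    """Map supervectors to the low-dimensional PCA space."""
    return (supervectors - mean) @ components.T


def length_normalize(vectors, eps=1e-12):
    """Scale each row to unit Euclidean length."""
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    return vectors / np.maximum(norms, eps)


# Toy example: 30 songs with 200-dimensional supervectors (hypothetical sizes).
rng = np.random.default_rng(0)
songs = rng.normal(size=(30, 200))

mean, components = fit_pca(songs, n_components=10)
embeddings = length_normalize(project(songs, mean, components))
print(embeddings.shape)
```

In the proposed method the `fit_pca` and `project` steps would be replaced by i-vector extraction, while the later stages of the pipeline stay the same, which is what makes this baseline a direct comparison of the two frontends.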