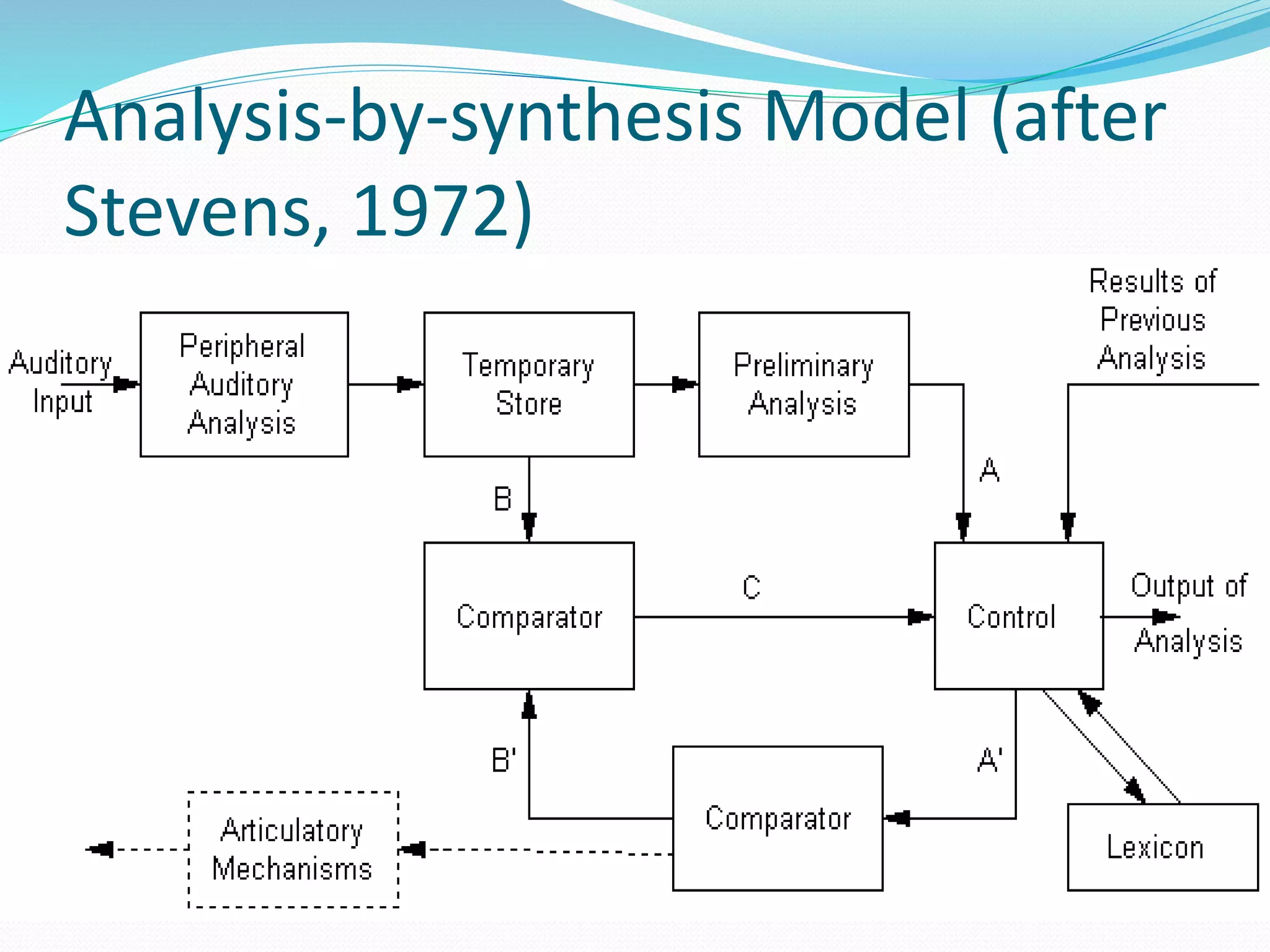

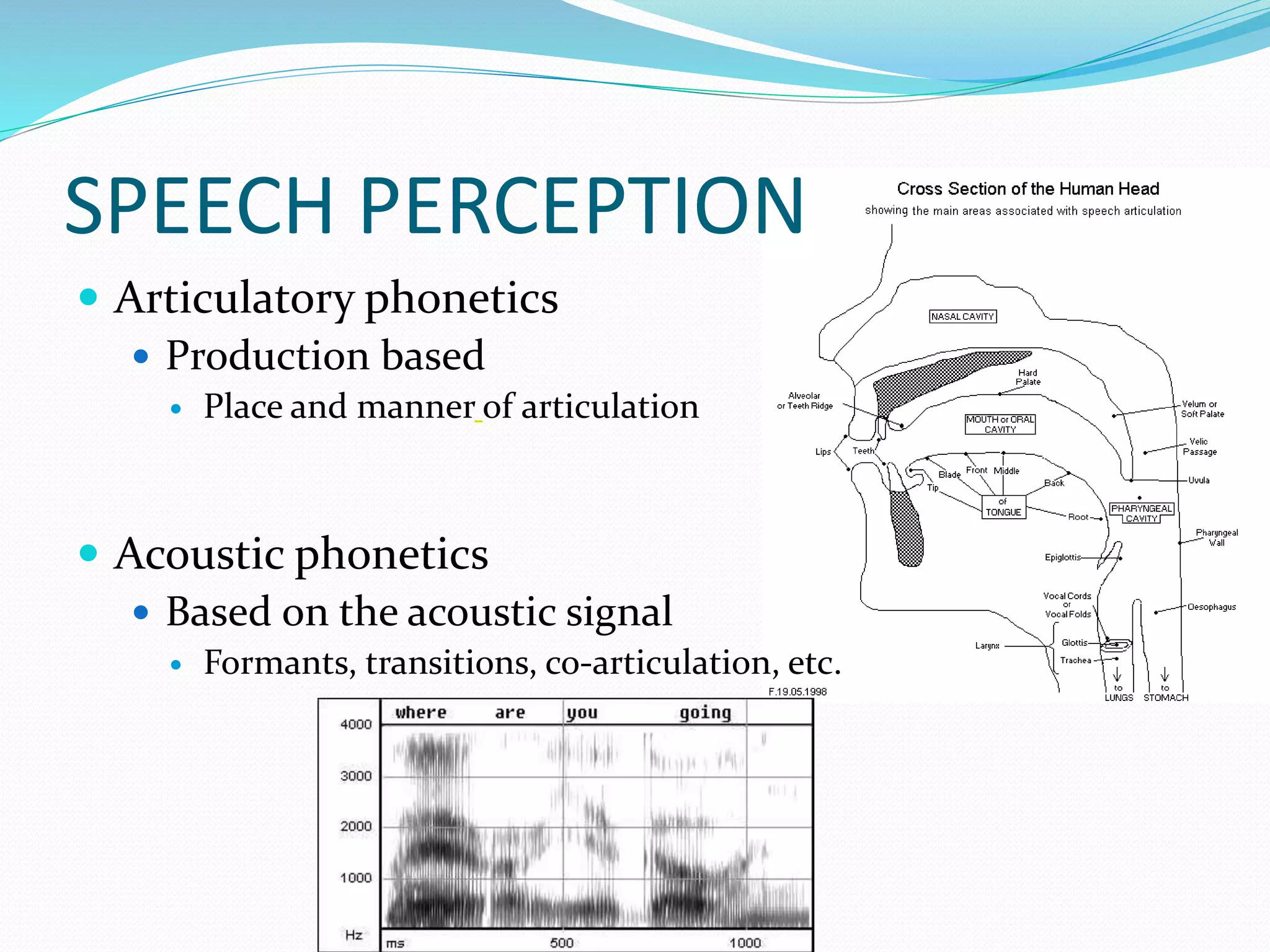

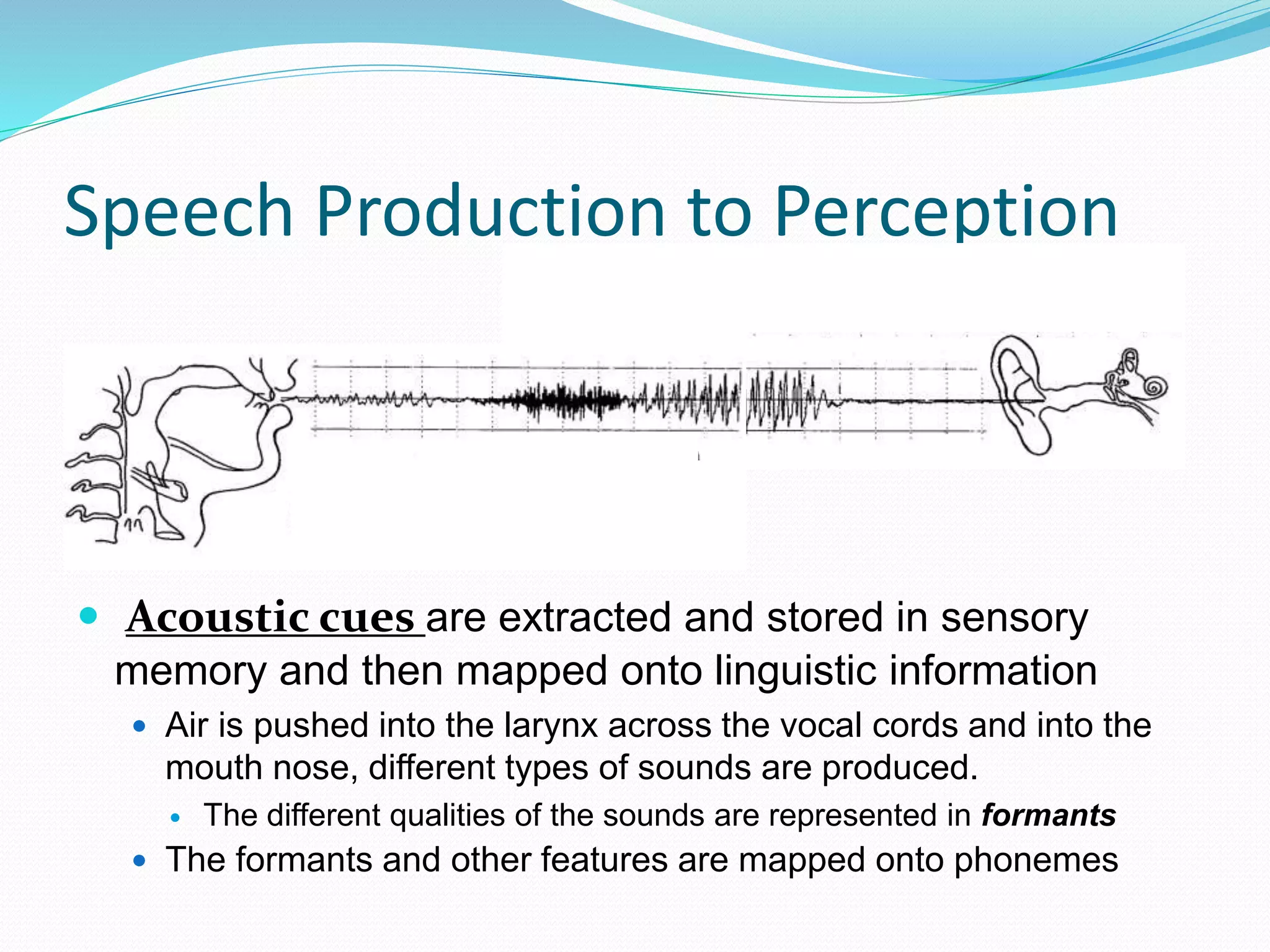

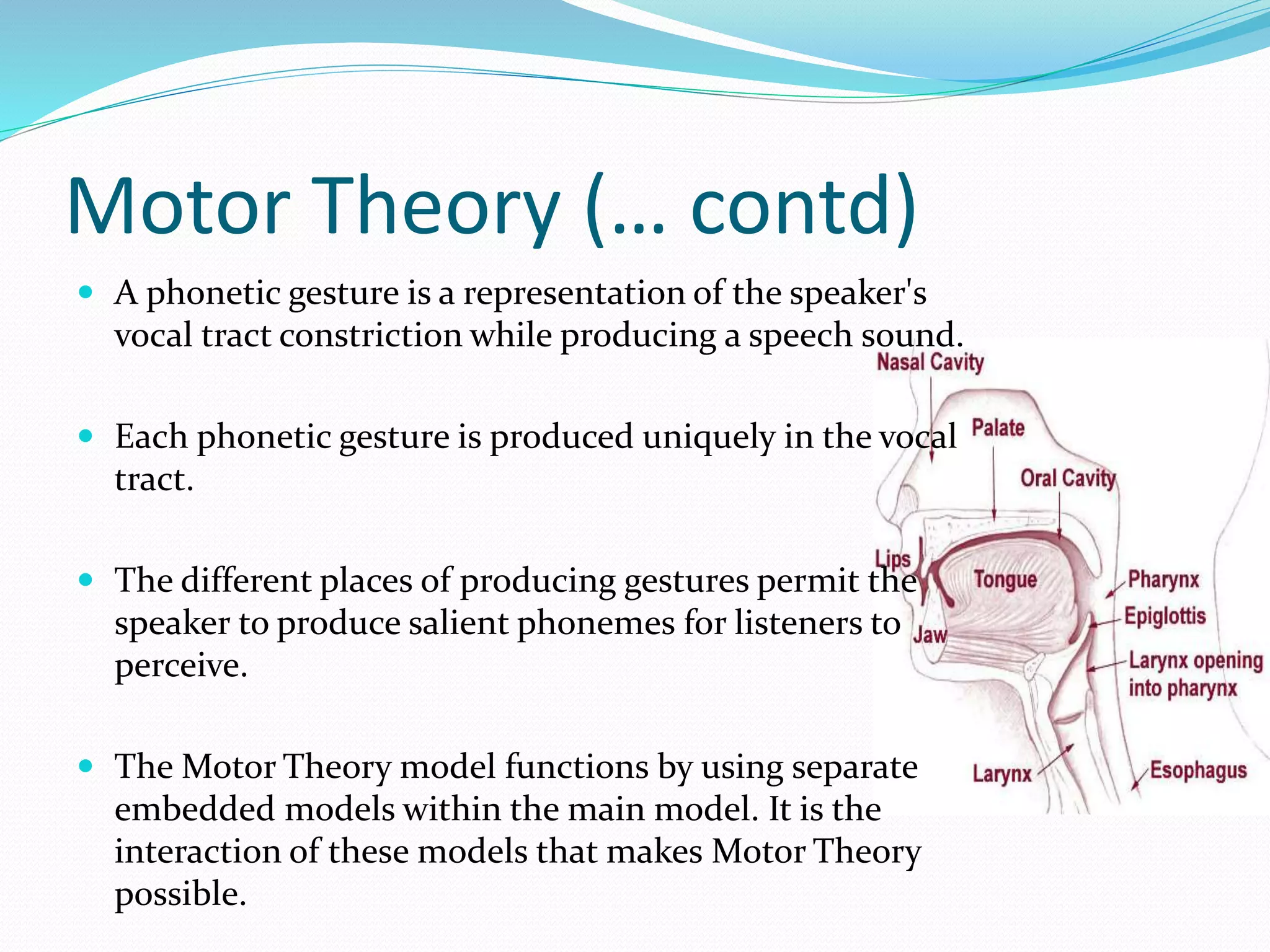

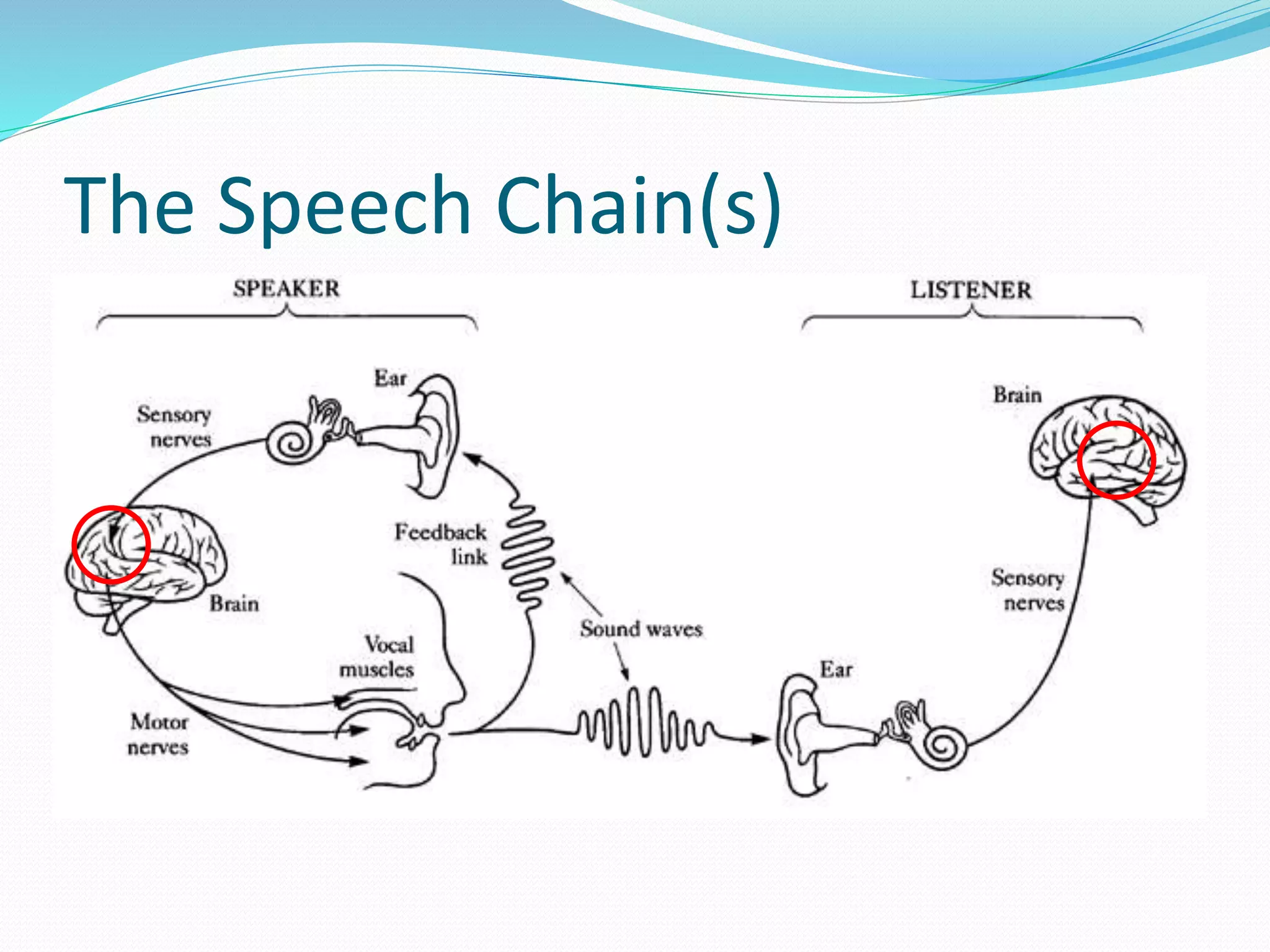

The document discusses various theories of speech perception including motor theory, analysis-by-synthesis, direct realism, and stage theories. It emphasizes how speech perception involves mapping acoustic signals to linguistic information and highlights the complexity of this process. Key points include the relationship between speech production and perception, the uniqueness of phonetic gestures, and the role of auditory processing in understanding speech signals.

![Analysis-by-Synthesis Theory of Speech Perception (Stevens and Halle 1967)](https://image.slidesharecdn.com/theoriesofspeechperception-141130095002-conversion-gate01/75/Theories-of-Speech-Perception-18-2048.jpg)

Stevens and Halle (1967) have postulated that:

> "... the perception of speech involves the internal synthesis of patterns according to certain rules, and a matching of these internally generated patterns against the pattern under analysis. ... moreover, ... the generative rules in the perception of speech [are] in large measure identical to those utilized in speech production, and that fundamental to both processes [is] an abstract representation of the speech event."
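Read computationally, the quoted proposal describes a generate-and-compare loop: produce candidate patterns from abstract representations using generative rules, then keep the candidate that best matches the input. The following is a minimal Python sketch of that loop under toy assumptions. It is not Stevens and Halle's model. Each abstract representation is a vowel label with hypothetical target formants (F1, F2) in Hz; these are rough illustrative numbers, not measurements. The hypothetical `synthesize` rule stands in for the shared production rules by scaling the targets with a speaker factor. The hypothetical `analyze_by_synthesis` function returns the candidate whose synthesized pattern has the smallest log-frequency distance to the observed pattern.

```python
import math

# Hypothetical abstract representations: vowel label -> target (F1, F2) in Hz.
# Rough illustrative values only (an assumption of this sketch).
VOWEL_TARGETS = {
    "i": (270.0, 2290.0),
    "a": (730.0, 1090.0),
    "u": (300.0, 870.0),
}


def synthesize(vowel, scale):
    """Hypothetical generative rule shared with 'production': scale the
    vowel's target formants by a speaker factor (a shorter vocal tract
    gives higher formants)."""
    f1, f2 = VOWEL_TARGETS[vowel]
    return (f1 * scale, f2 * scale)


def distance(a, b):
    """Mismatch between two patterns: Euclidean distance on log frequency."""
    return math.sqrt(sum((math.log(x) - math.log(y)) ** 2 for x, y in zip(a, b)))


def analyze_by_synthesis(observed, scales=(0.85, 1.0, 1.15, 1.3)):
    """Internally synthesize a pattern for every candidate (vowel, scale),
    match each against the observed pattern, and return the best match
    as (vowel, scale, mismatch)."""
    best = None
    for vowel in VOWEL_TARGETS:
        for scale in scales:
            candidate = synthesize(vowel, scale)
            d = distance(candidate, observed)
            if best is None or d < best[2]:
                best = (vowel, scale, d)
    return best


vowel, scale, err = analyze_by_synthesis((350.0, 2700.0))
print(vowel, scale)  # prints: i 1.3
```

In this toy setting, the input (350 Hz, 2700 Hz) matches no stored target directly. It is recognized as /i/ only because the same rule that would "produce" /i/ for a speaker with a shorter vocal tract generates a close match. This is the sense in which perception reuses the generative rules of production.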