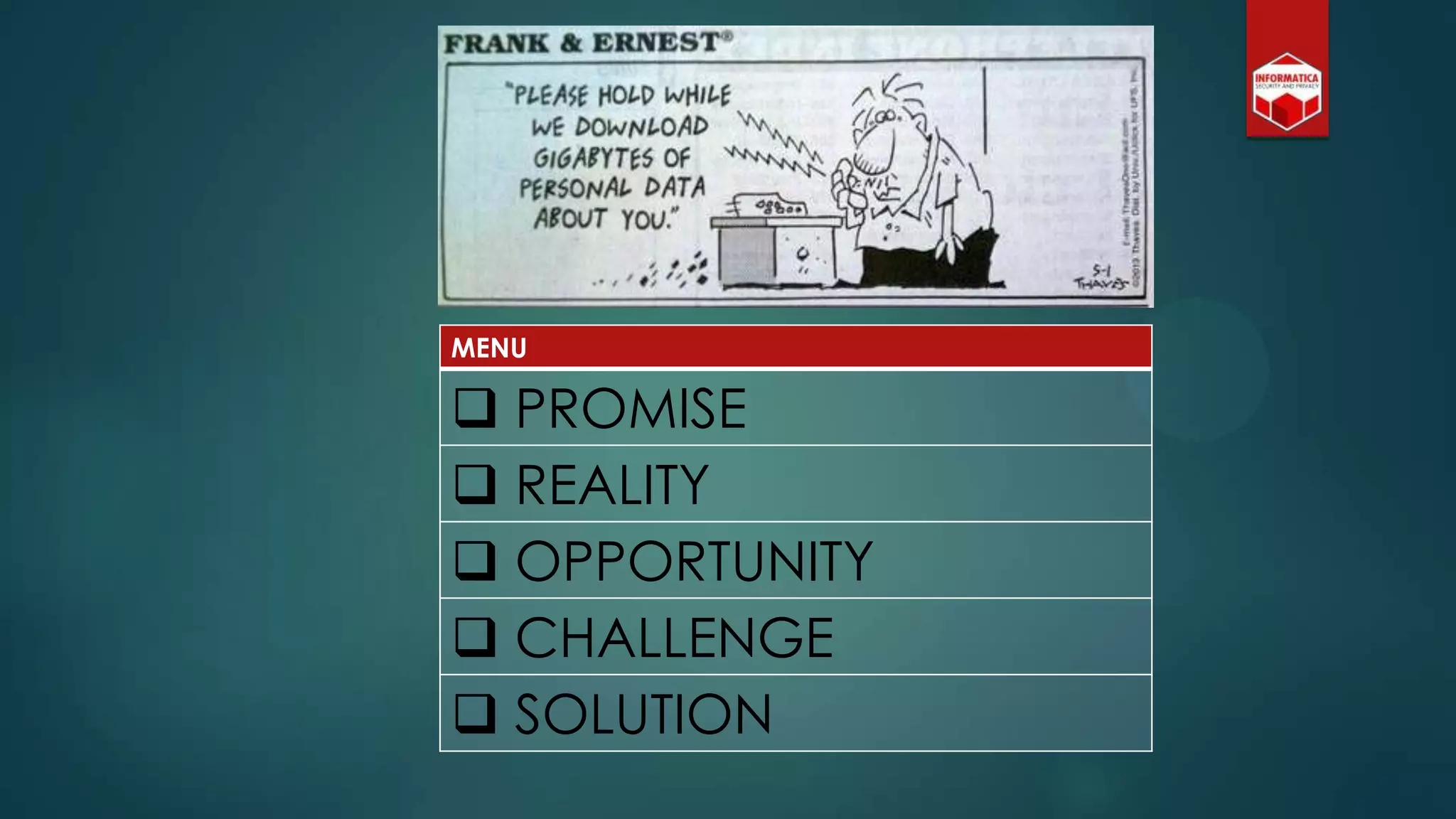

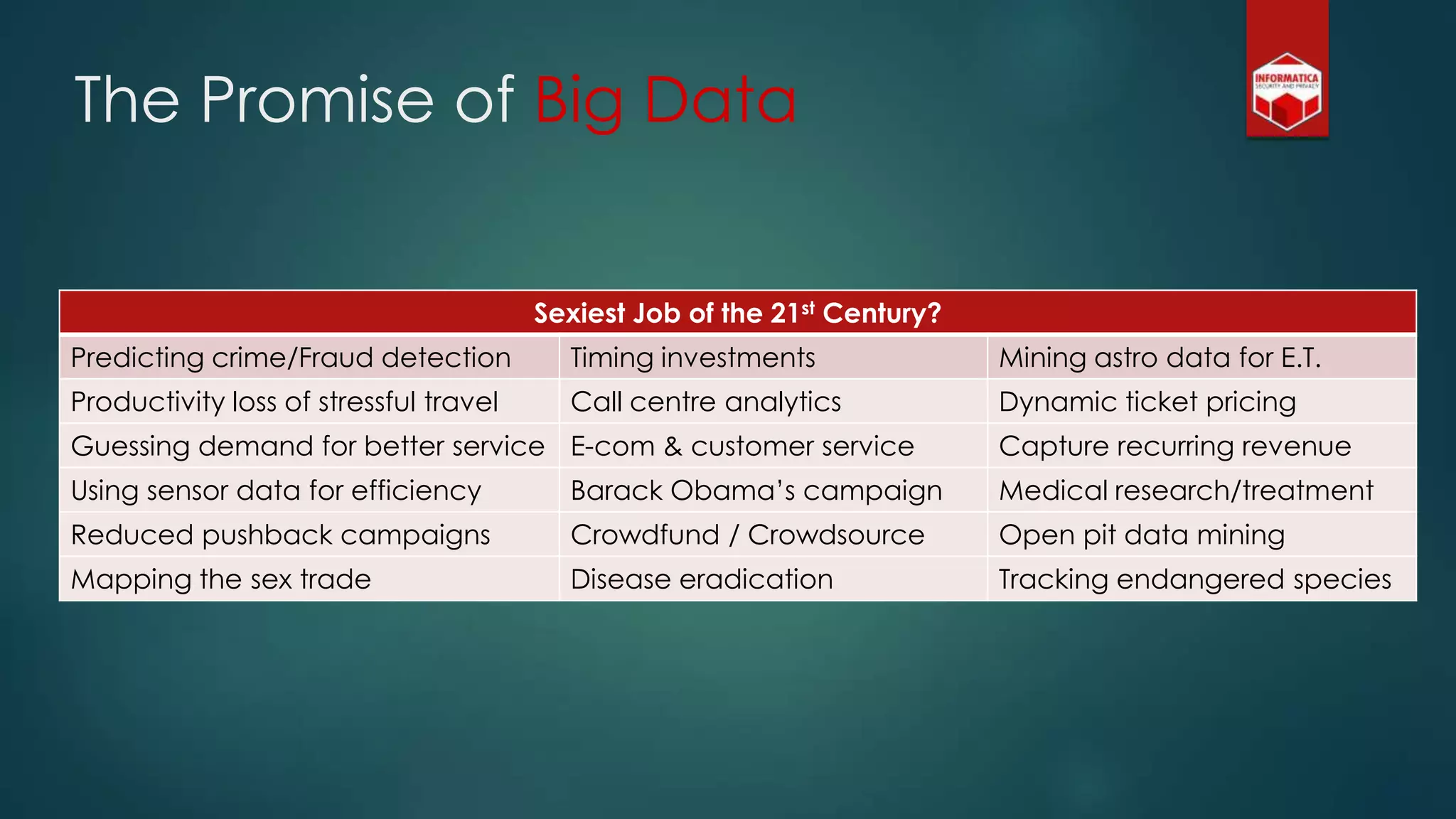

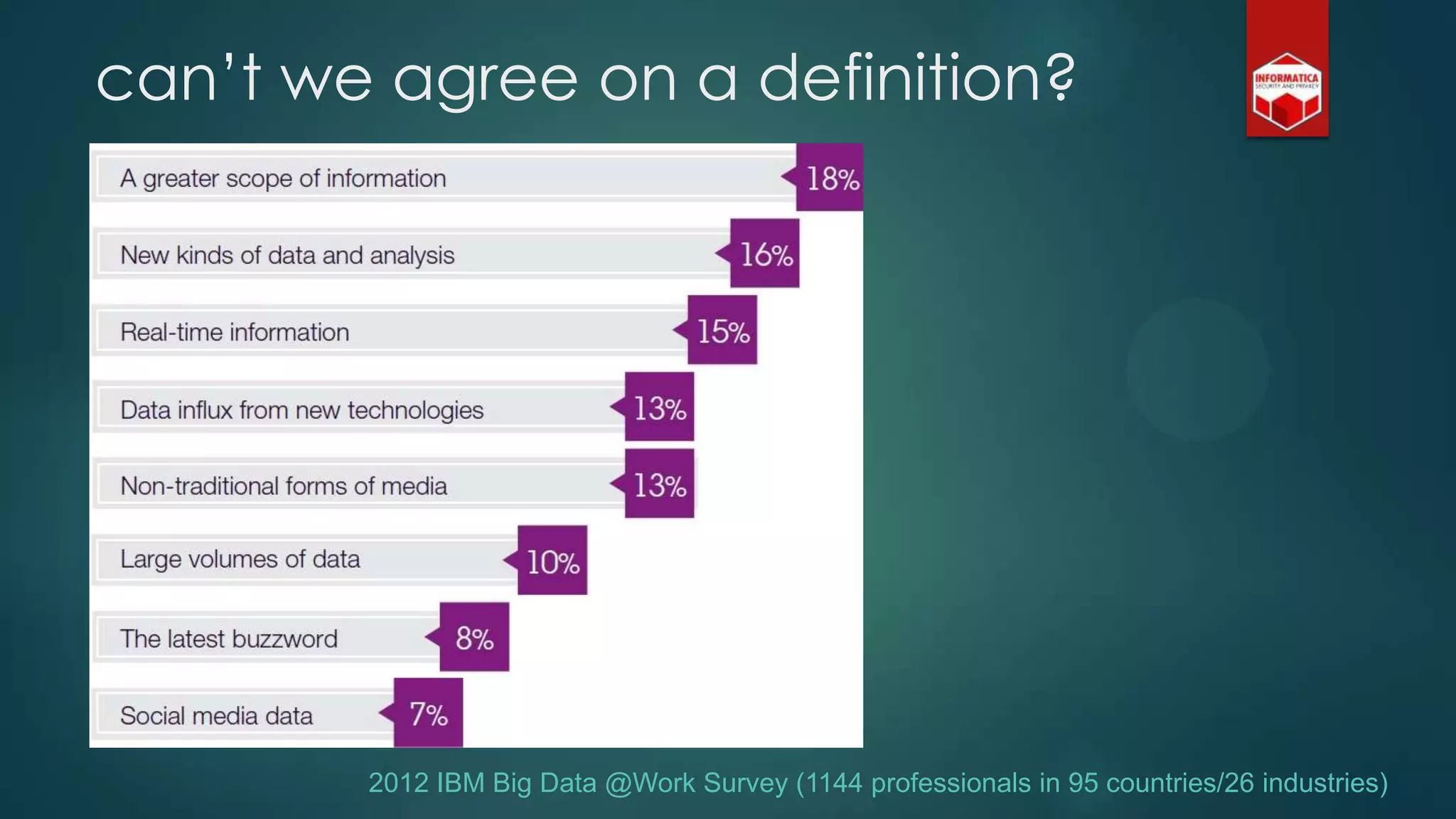

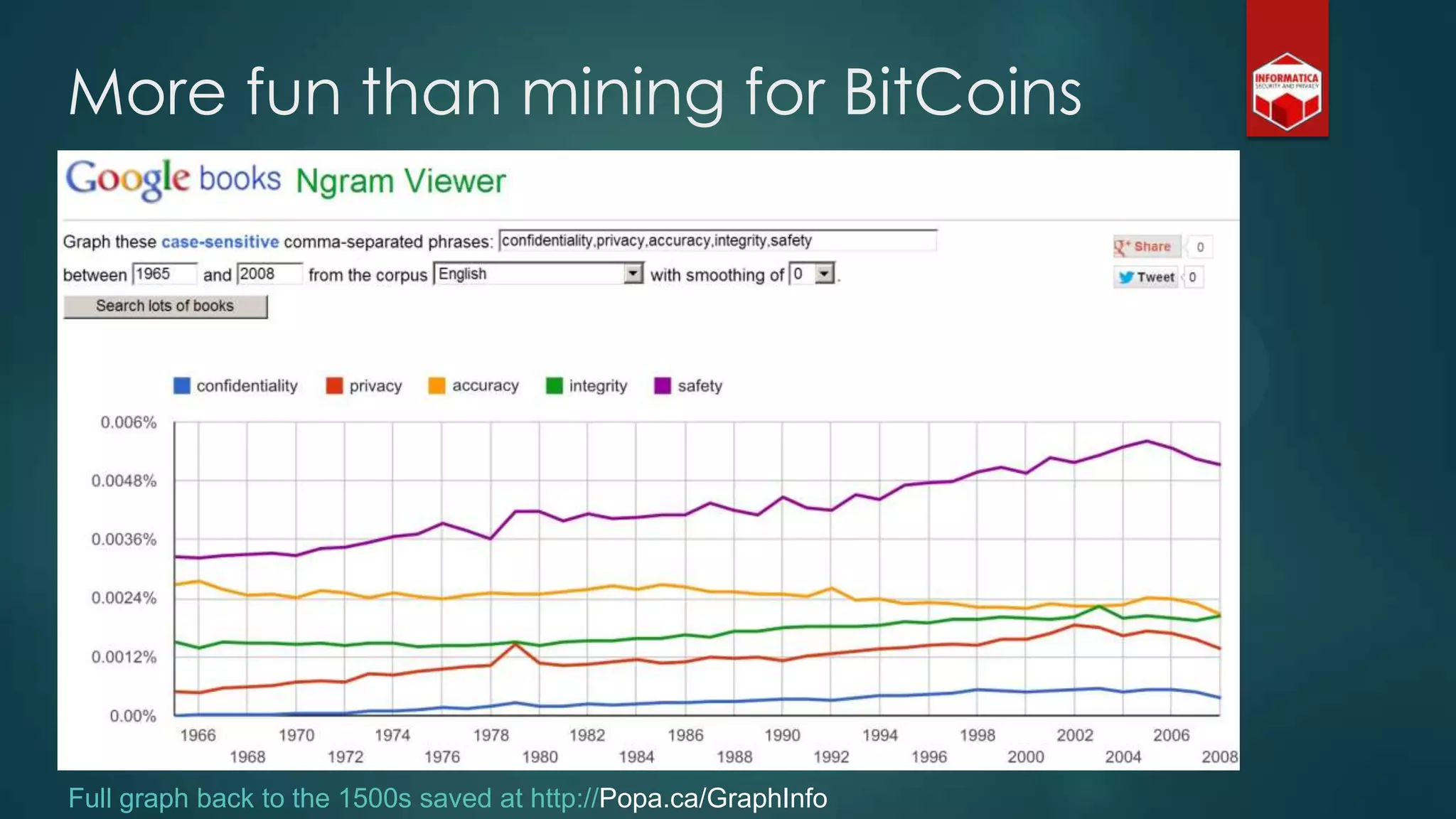

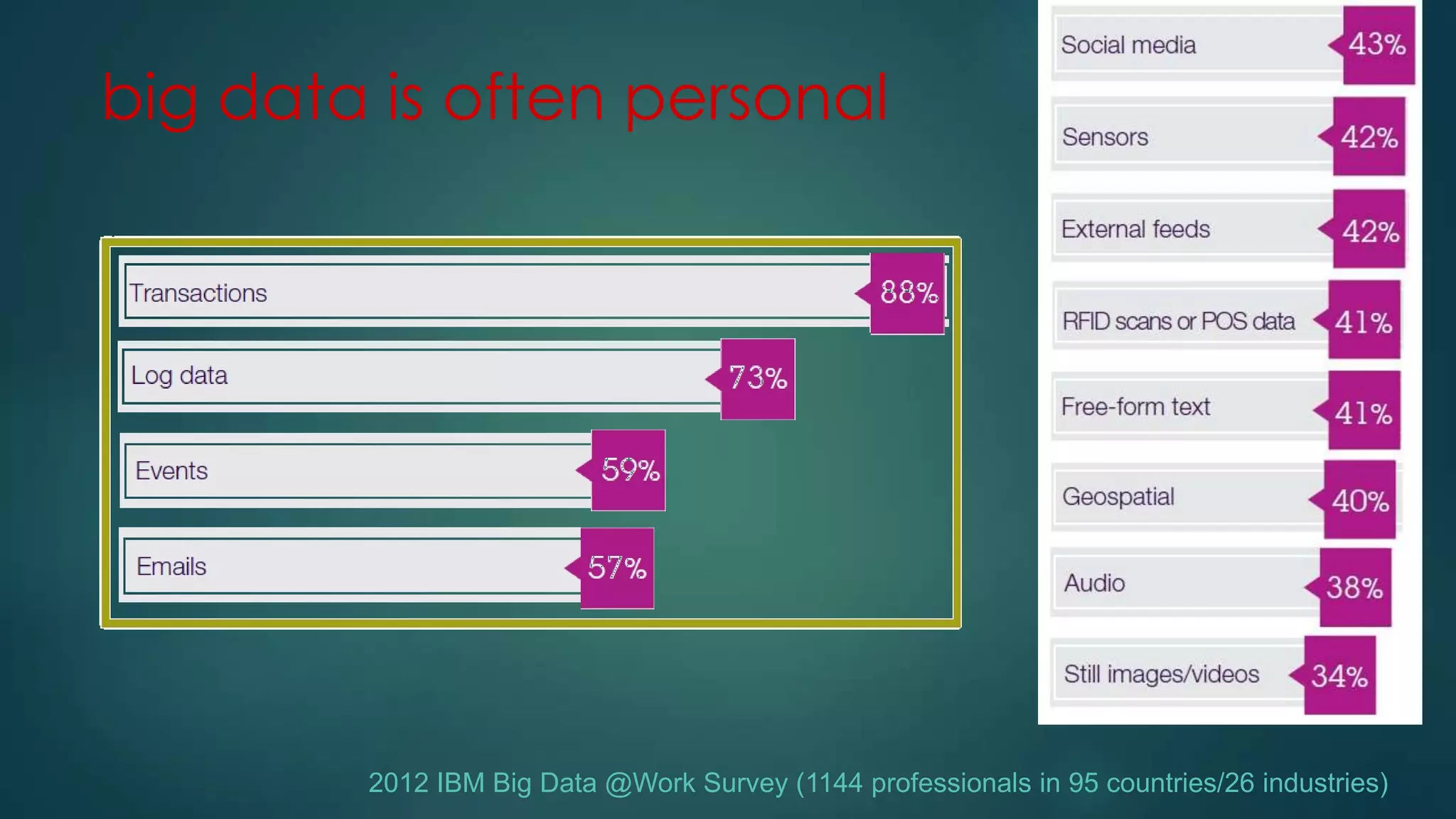

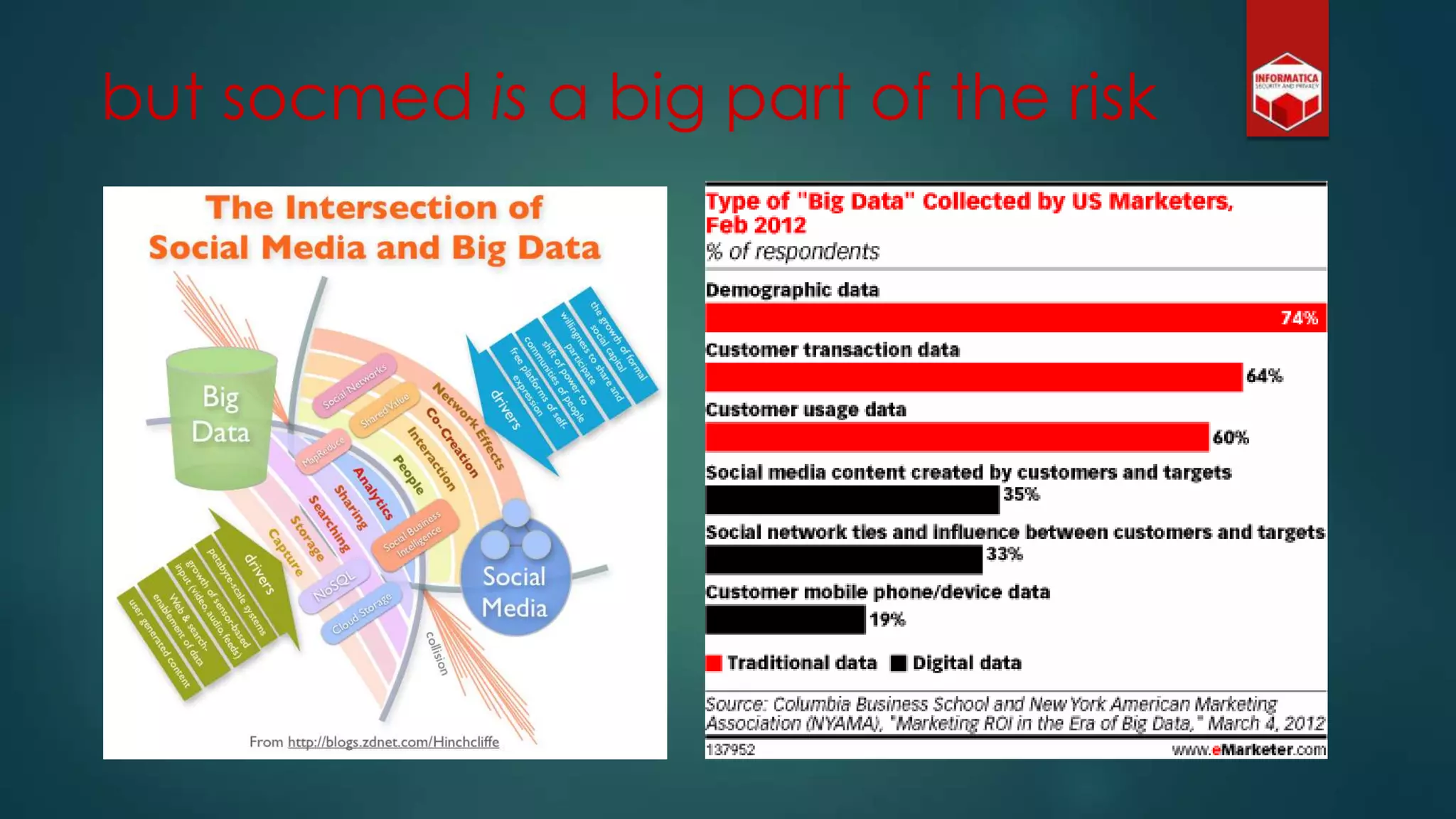

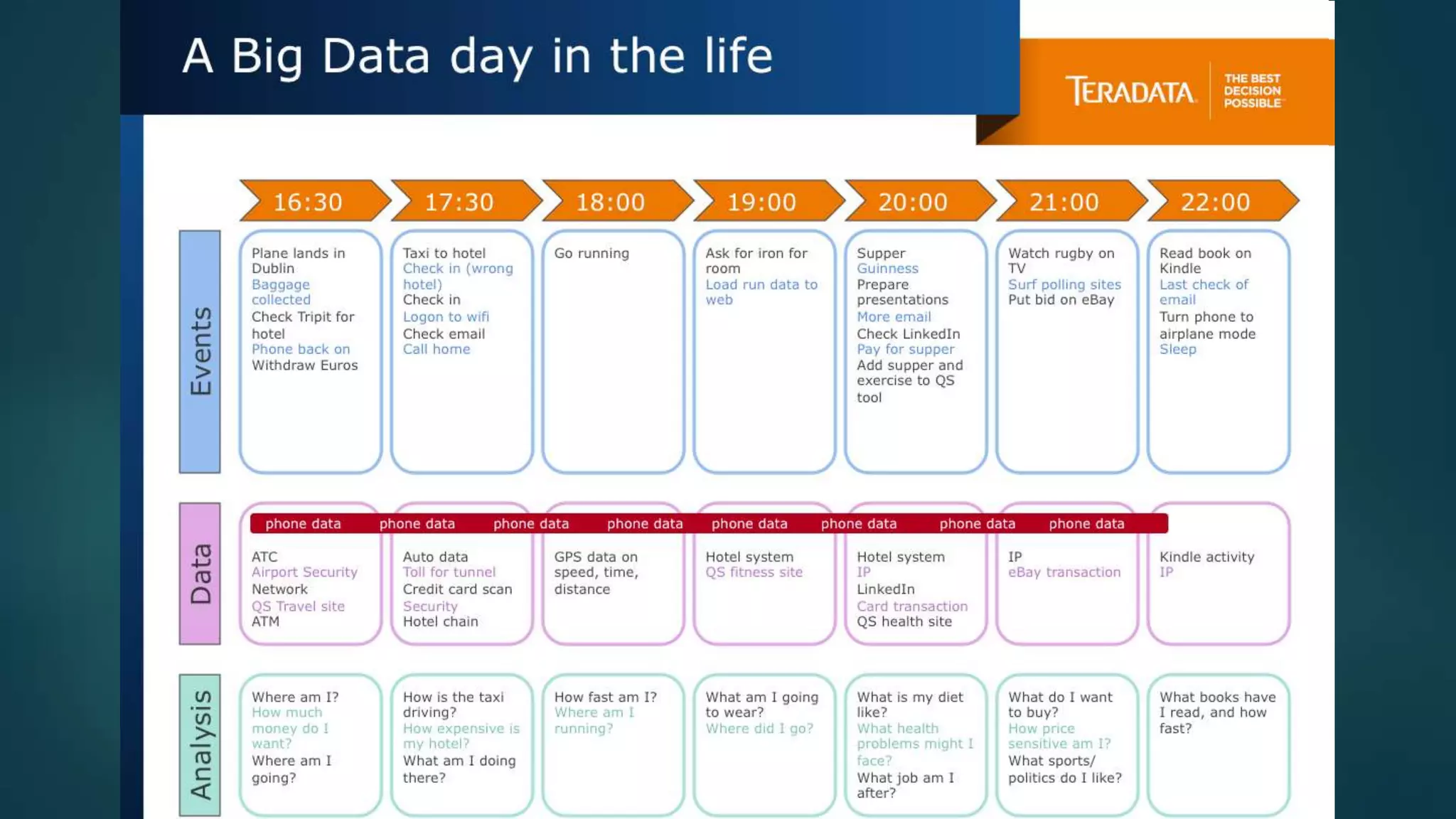

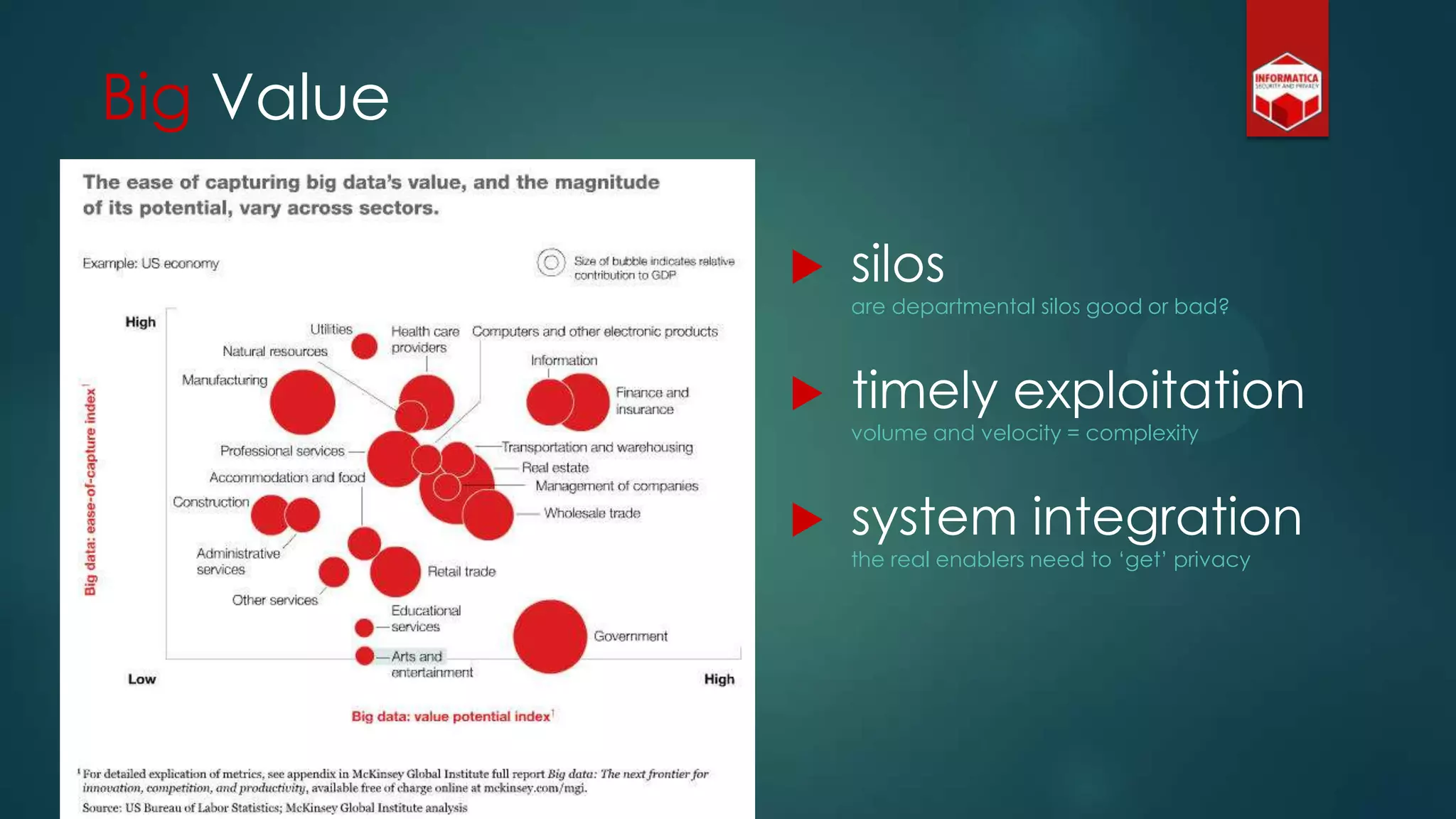

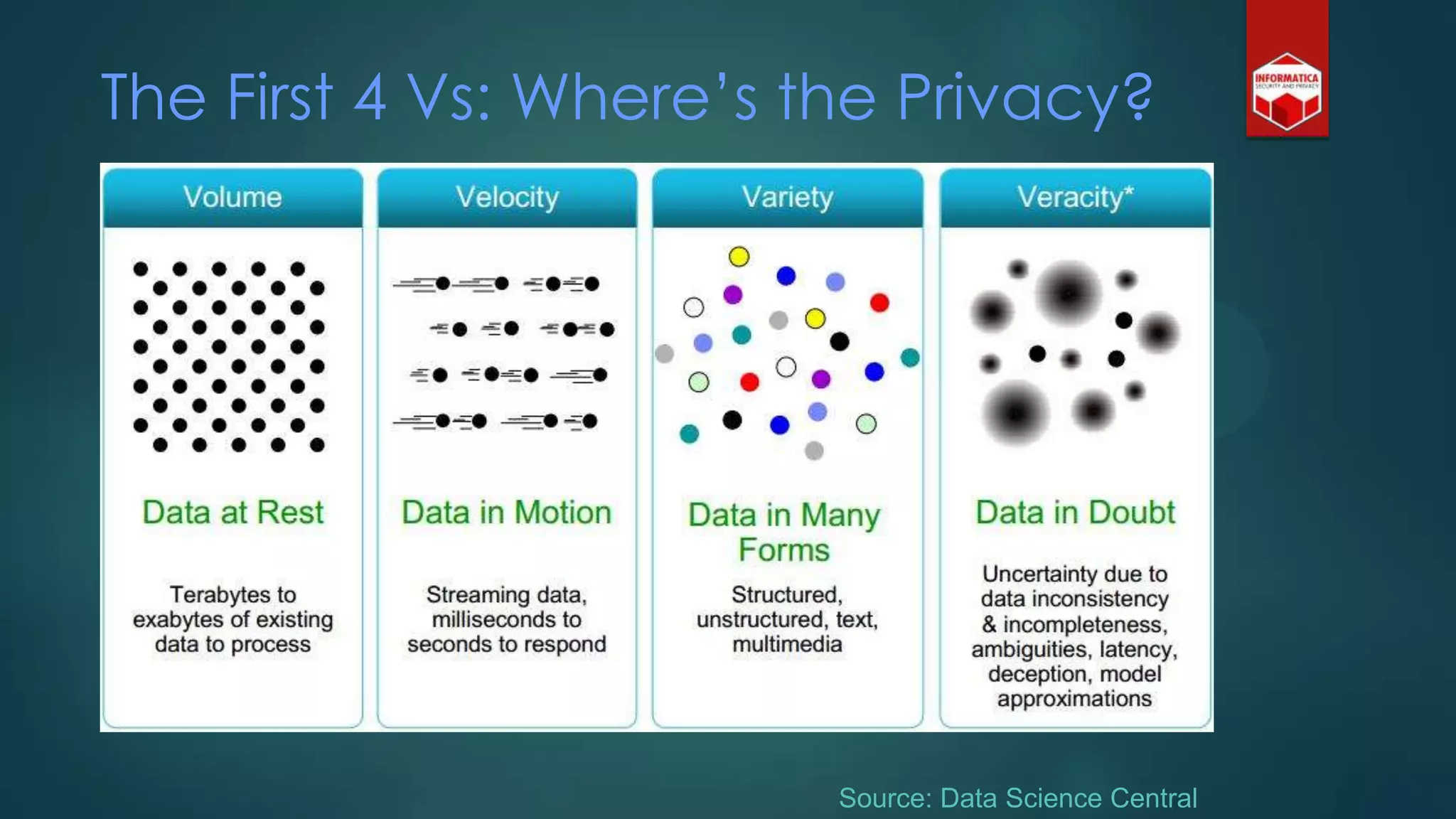

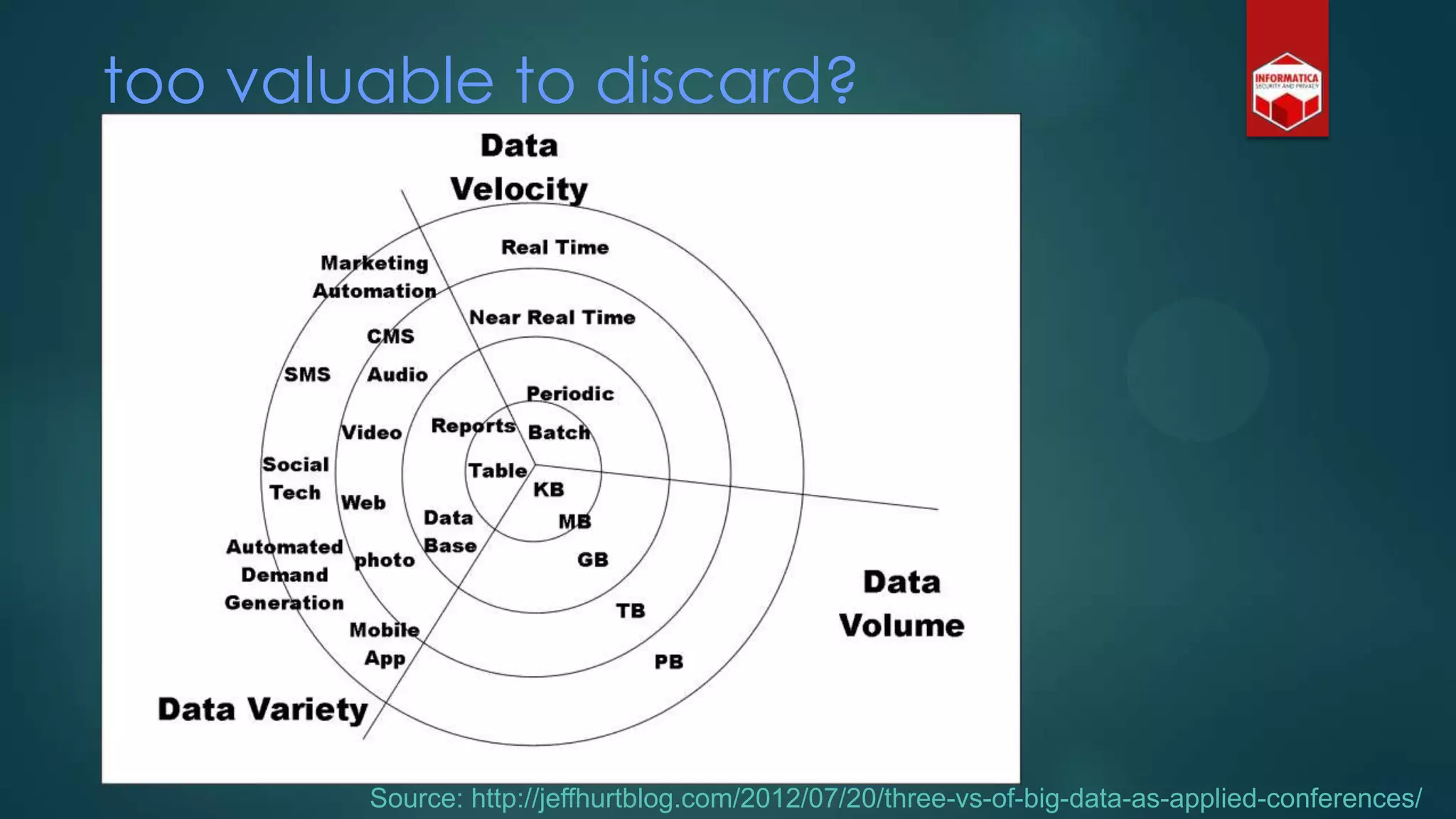

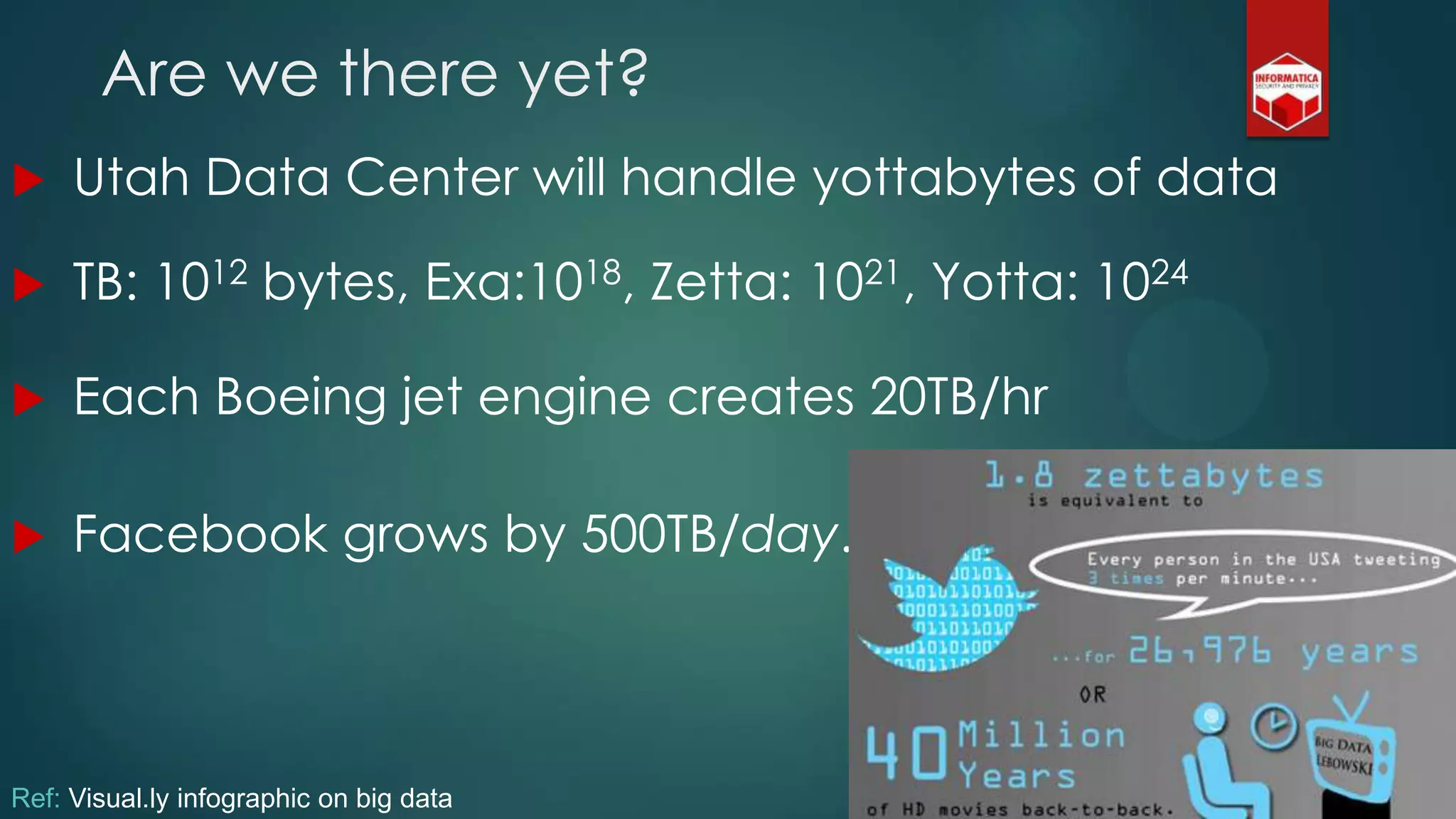

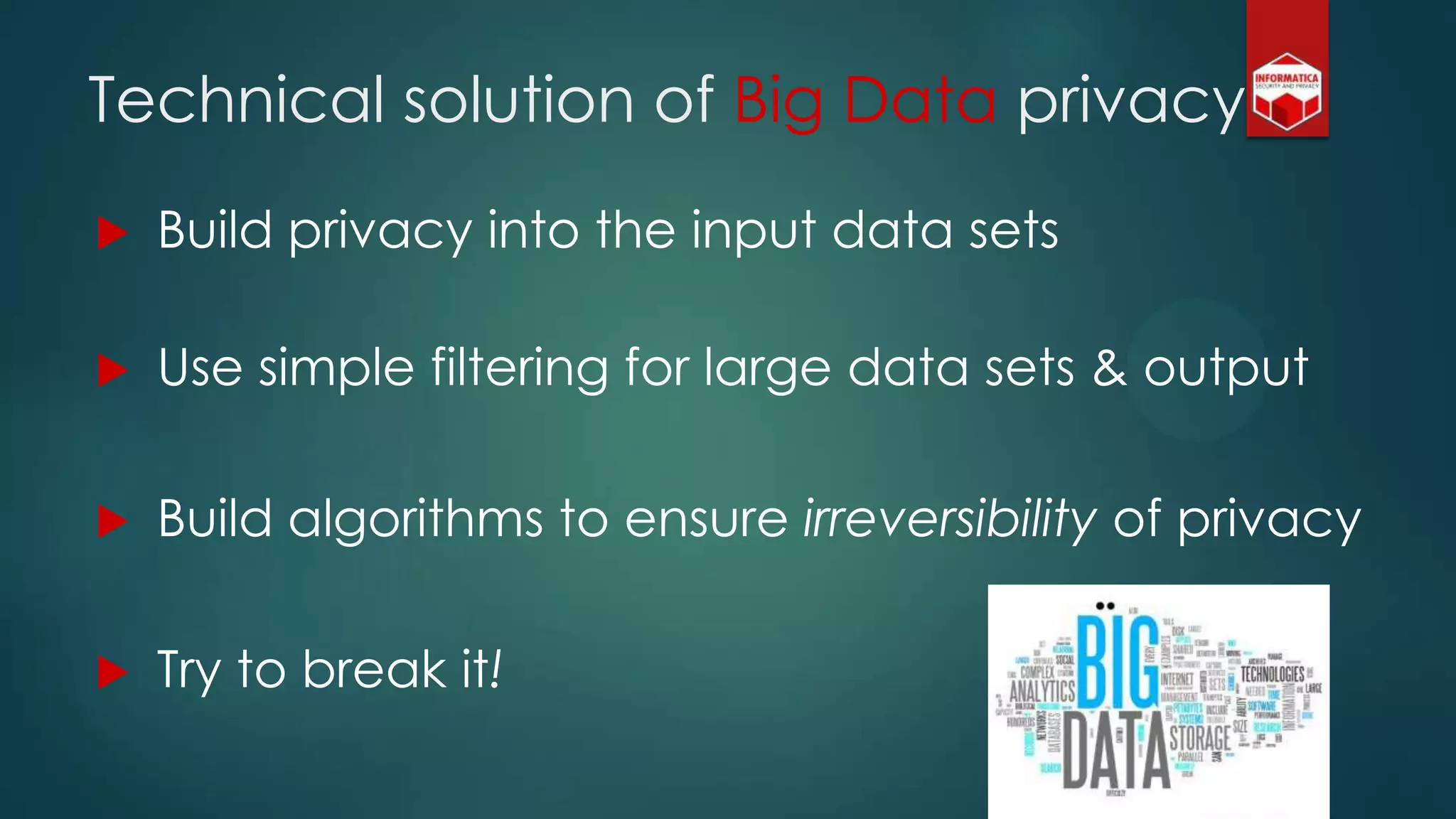

The document explores big data: its promises, its challenges, and its implications for privacy and business intelligence. It emphasizes the importance of data analytics in fields ranging from crime prediction to customer service, while also addressing the risks of personal data usage and the need for responsible data handling. Overall, it advocates a balanced approach to harnessing big data's potential while safeguarding individuals' privacy.

![Privacy must be tackled head-on](https://image.slidesharecdn.com/iapp-bigdata-130920084113-phpapp01/75/The-REAL-Impact-of-Big-Data-on-Privacy-54-2048.jpg)