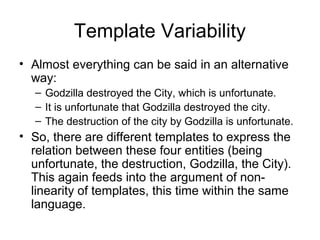

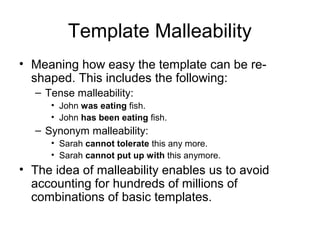

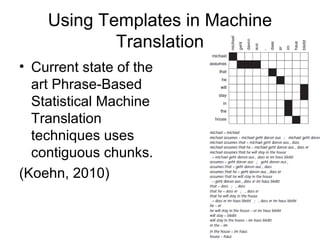

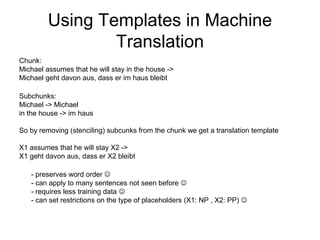

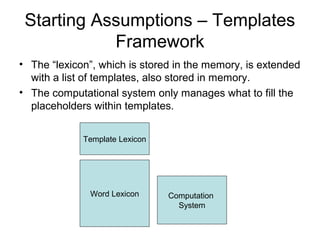

The document discusses templates in linguistics and illustrates how children build language from simple sentence structures and recursion. It emphasizes the role of templates in language acquisition and linguistic variability, as well as their applications in information extraction and machine translation. The main claim is that language complexity arises from combining basic templates with the malleability of those templates across languages.

![Semantic-Pragmatic Prompt

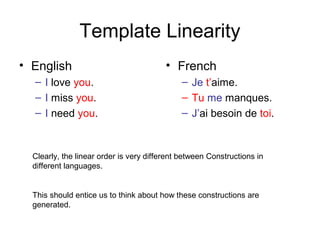

• An area of overlap between the reason, context,

and information content of some sentence.

• Start with list of arguments (X1: I, X2: You)

• I Want to express [+feeling] [+positive]

[+distance], therefore:

– in English, we invoke the template I miss X2.

– In French, we invoke the template X2 me manques

(with some adjustments depending on pronouns, etc)

• So I can utter the sentence after filling the

template:

– I miss Randa.

– Randa me manque.](https://image.slidesharecdn.com/squid-templatesinlinguistics-160814180500/85/Templates-in-linguistics-Why-Garbage-Garbage-12-320.jpg)
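
The lookup-then-fill process on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Template` class, the `TEMPLATES` table, and the `realize` function are hypothetical names that do not come from the slides, and the French pattern hard-codes a first-person speaker and a third-person singular X2, so it ignores the pronoun and agreement adjustments the slide mentions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Template:
    """A sentence template selected by a bundle of semantic features (hypothetical)."""
    language: str
    features: frozenset
    pattern: str  # slots are written as {x1} and {x2}


# The feature bundle from the slide: [+feeling] [+positive] [+distance].
MISS = frozenset({"+feeling", "+positive", "+distance"})

# Assumption: one template per language, with no pronoun or agreement handling.
TEMPLATES = [
    Template("en", MISS, "{x1} miss {x2}."),
    Template("fr", MISS, "{x2} me manque."),
]


def realize(features, language, x1, x2):
    """Pick the template matching the features and language, then fill its slots."""
    wanted = frozenset(features)
    for template in TEMPLATES:
        if template.language == language and template.features == wanted:
            # str.format ignores keyword arguments the pattern does not use,
            # so the French pattern can simply omit {x1}.
            return template.pattern.format(x1=x1, x2=x2)
    raise LookupError(f"no {language!r} template for features {sorted(wanted)}")


print(realize(MISS, "en", "I", "Randa"))
print(realize(MISS, "fr", "I", "Randa"))
```

The same feature bundle selects a different surface pattern in each language, and the argument that is the object in English (X2) becomes the subject in French, which is the point the slide makes about templates varying across languages.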