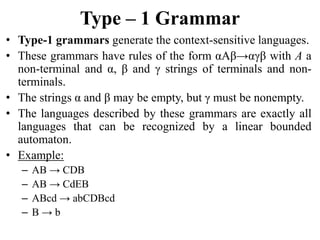

This document provides an overview of natural language processing (NLP). It discusses how NLP analyzes human language input to build computational models of language. The key components of NLP are natural language understanding and natural language generation. Challenges in NLP include ambiguity, context dependence, and the creative nature of language. The document also outlines common NLP techniques like keyword analysis and syntactic parsing, as well as formal grammars and parsing approaches.
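To make the link between ambiguity, formal grammars, and syntactic parsing concrete, here is a minimal sketch of a chart parser that counts how many parse trees a sentence has. The grammar, lexicon, example sentence, and the function name `count_parses` are all assumptions introduced for illustration and are not taken from the document. The counting method is the standard CYK algorithm, which may differ from the parsing approaches the document itself covers.

```python
from collections import defaultdict

# Toy context-free grammar in Chomsky normal form.
# Hypothetical rules chosen only to illustrate structural ambiguity.
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # the PP modifies the verb phrase
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),   # the PP modifies the noun phrase
    ("PP", "P", "NP"),
]

# Hypothetical lexicon mapping each word to its possible categories.
LEXICON = {
    "i": ["NP"],
    "saw": ["V"],
    "the": ["Det"],
    "man": ["N"],
    "telescope": ["N"],
    "with": ["P"],
}


def count_parses(sentence, start="S"):
    """Count the distinct parse trees for `sentence` using CYK."""
    words = sentence.lower().split()
    n = len(words)
    # chart[(i, j)][A] = number of trees deriving words[i:j] from symbol A
    chart = defaultdict(lambda: defaultdict(int))

    # Fill in single-word spans from the lexicon.
    for i, word in enumerate(words):
        for tag in LEXICON.get(word, []):
            chart[(i, i + 1)][tag] += 1

    # Combine shorter spans into longer ones, shortest first.
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):  # split point between the two children
                for parent, left, right in BINARY_RULES:
                    left_count = chart[(i, k)].get(left, 0)
                    right_count = chart[(k, j)].get(right, 0)
                    if left_count and right_count:
                        chart[(i, j)][parent] += left_count * right_count

    return chart[(0, n)].get(start, 0)


if __name__ == "__main__":
    for text in ("I saw the man", "I saw the man with the telescope"):
        print(text, "->", count_parses(text), "parse(s)")
```

Under this toy grammar, "I saw the man with the telescope" has two parses: in one the prepositional phrase attaches to the verb (the seeing was done with the telescope), and in the other it attaches to the noun (the man has the telescope). A parser alone cannot choose between them, which is where context dependence comes in.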