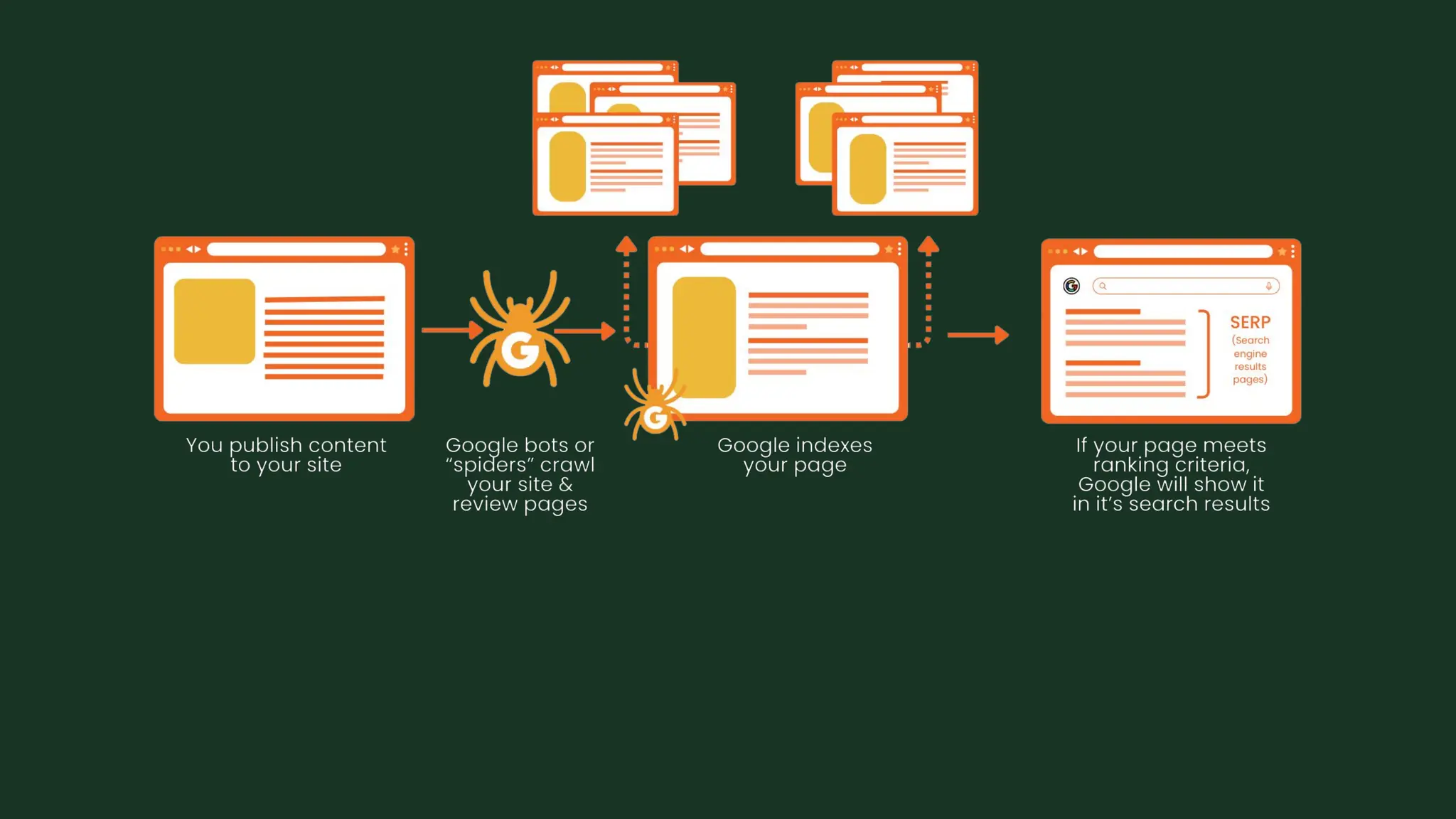

The talk "Technical SEO for Local Websites and Why It Matters", given at Local SEO for Good 2025

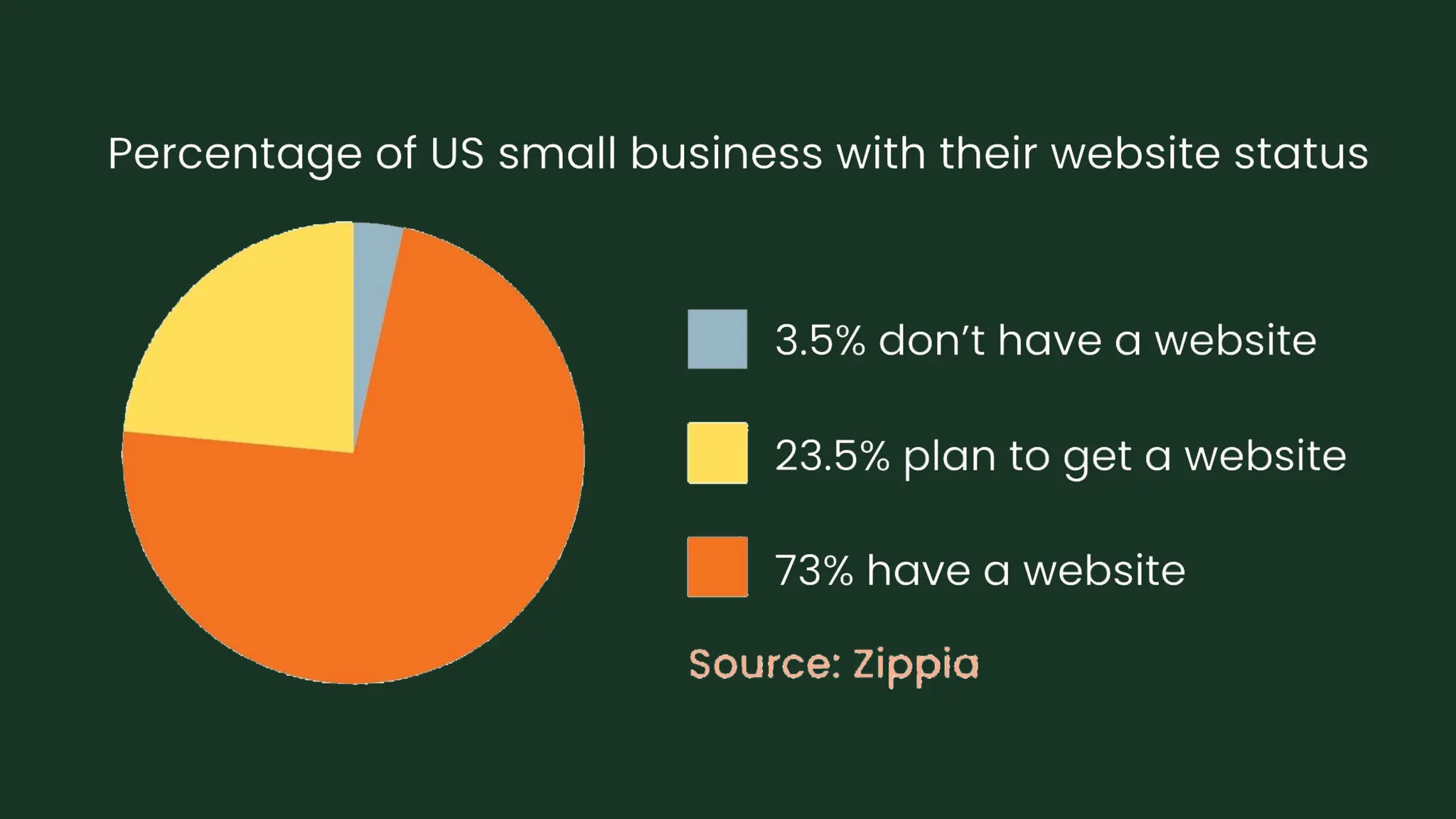

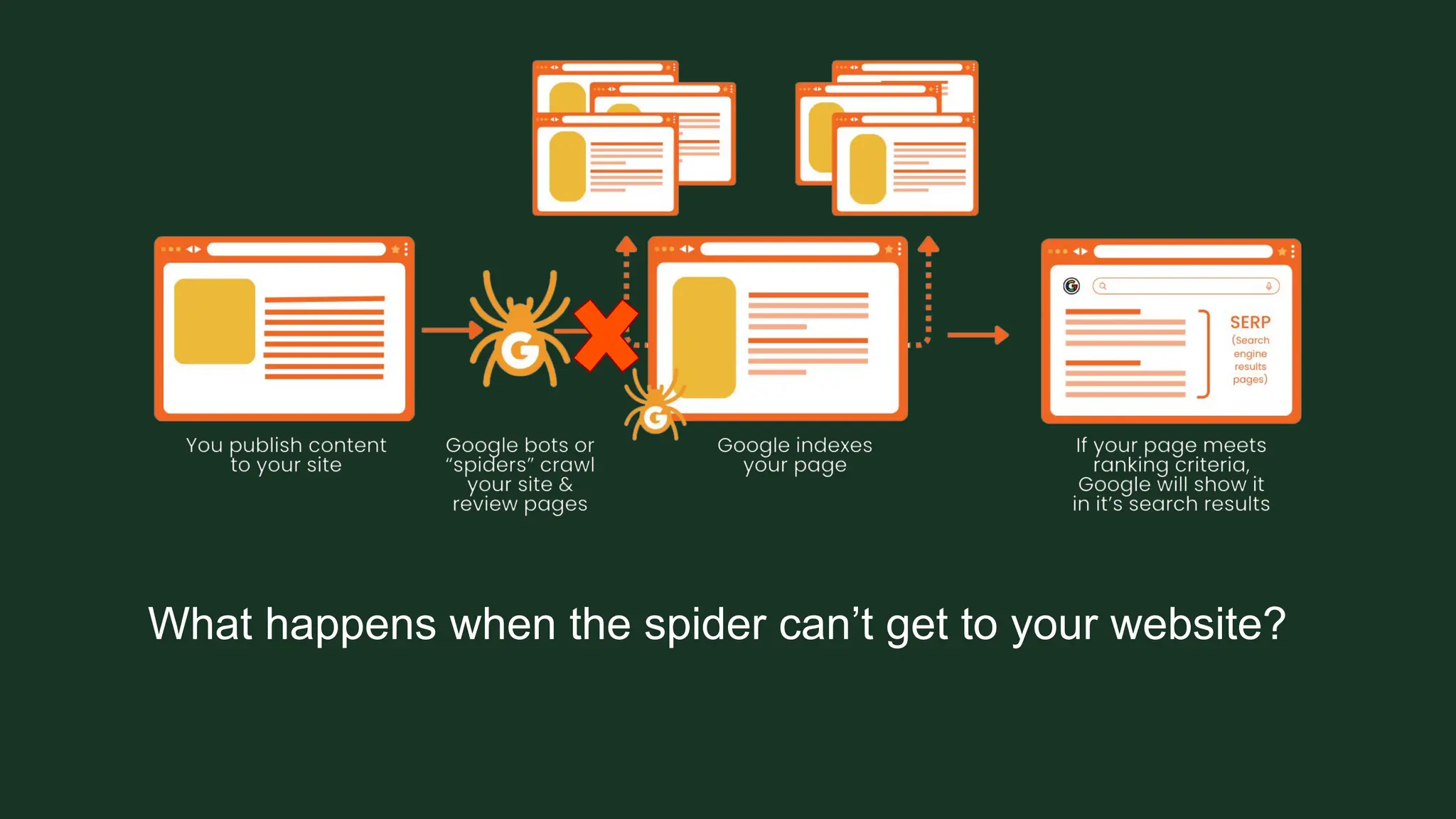

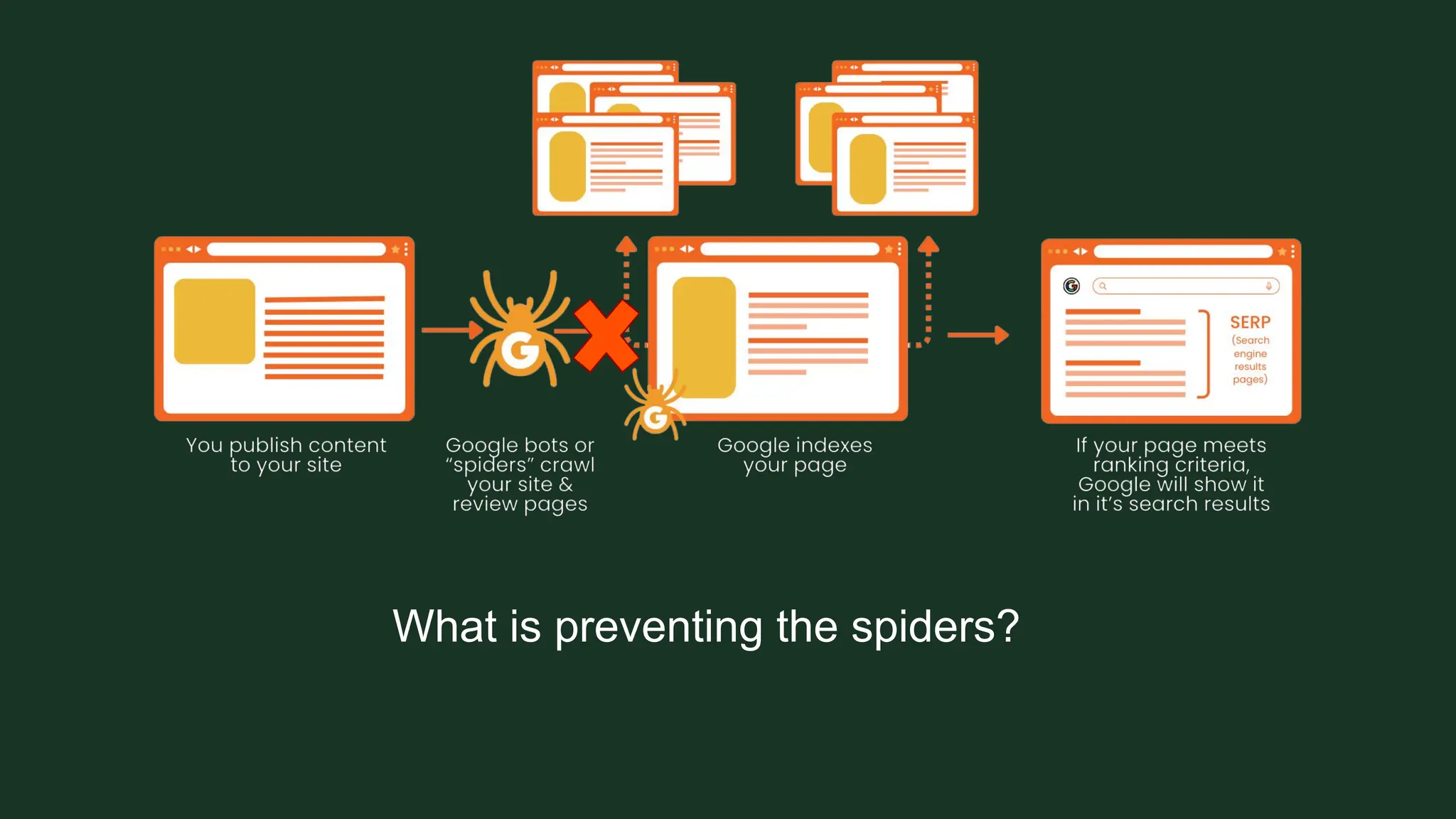

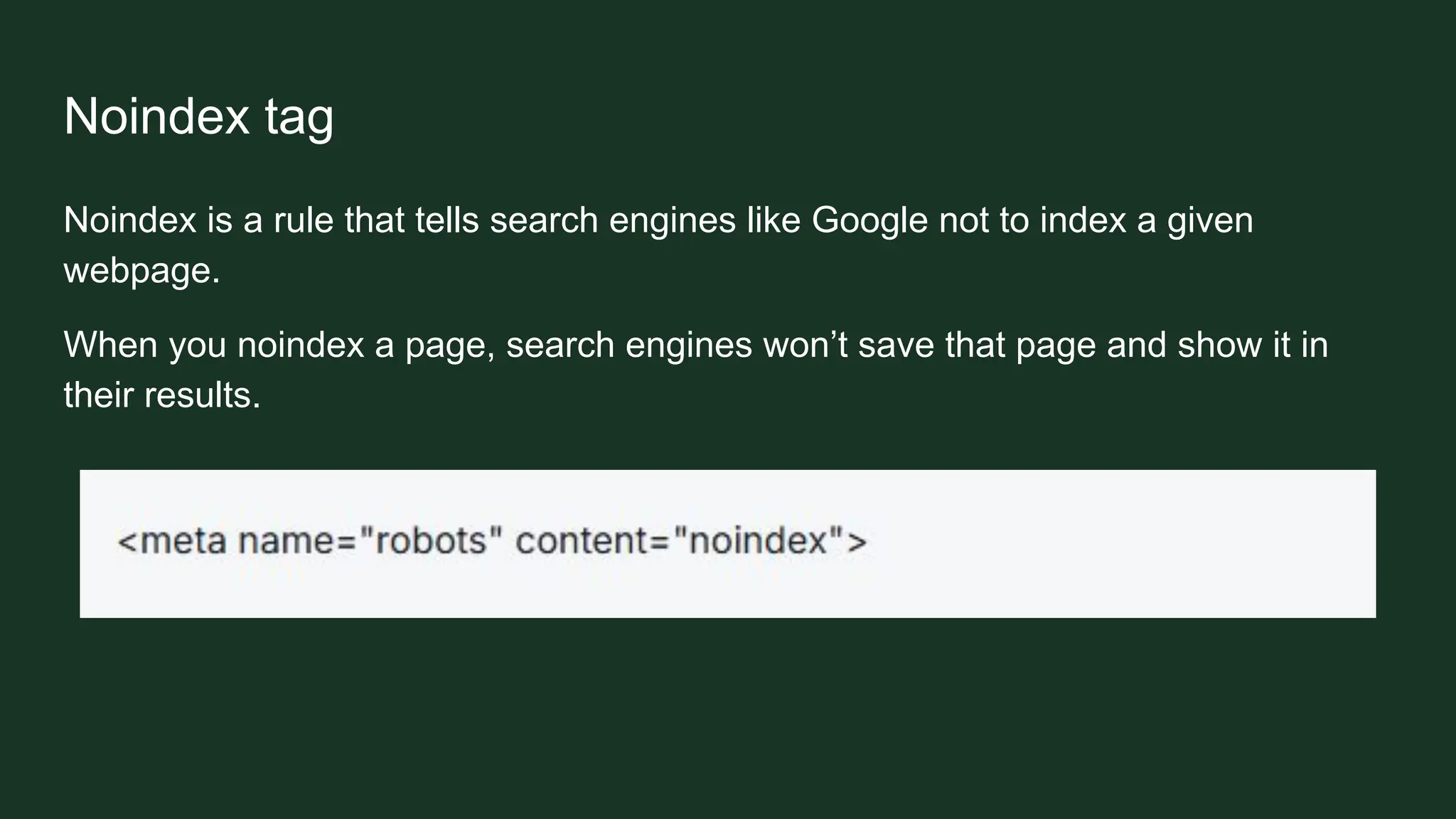

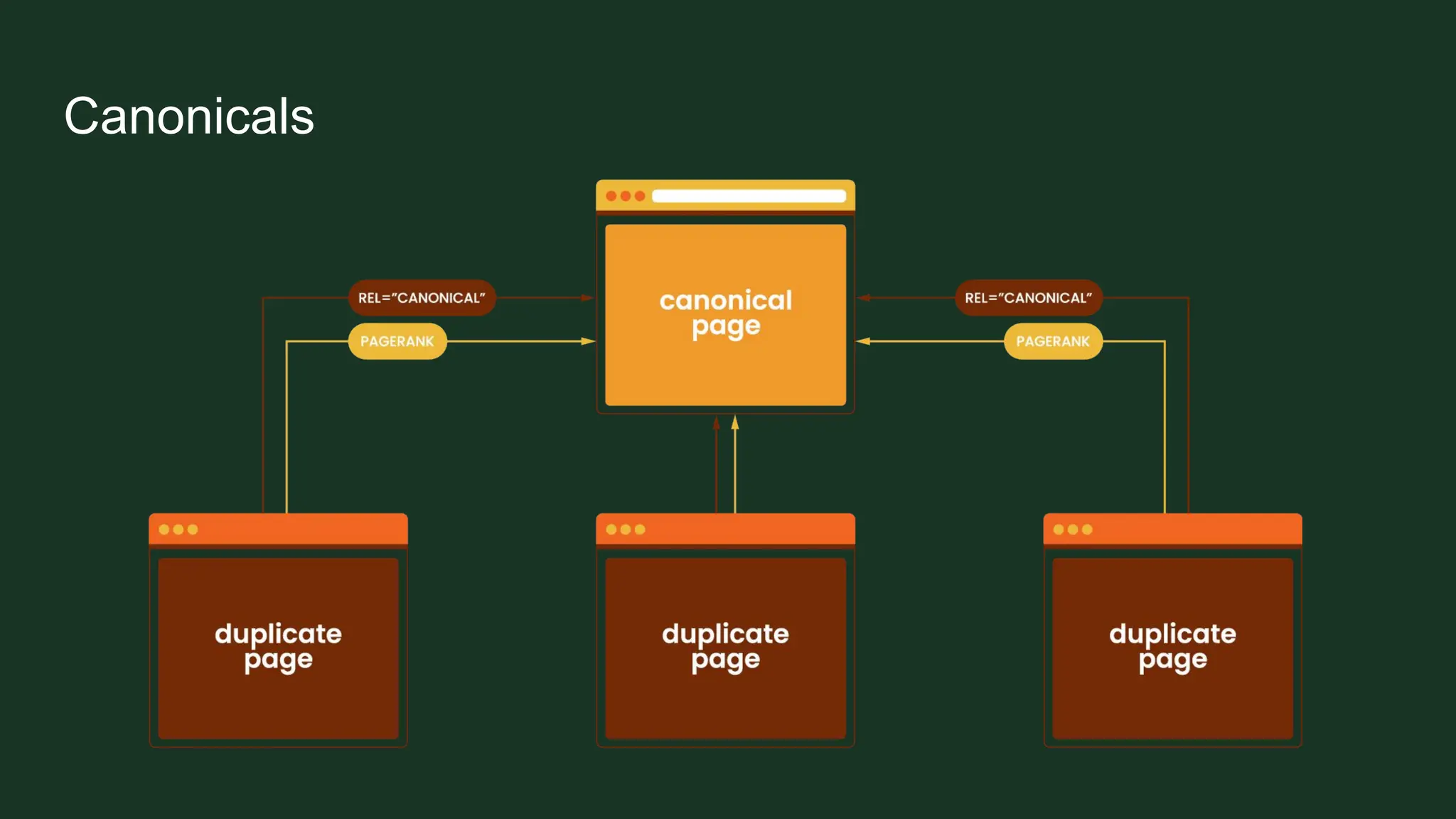

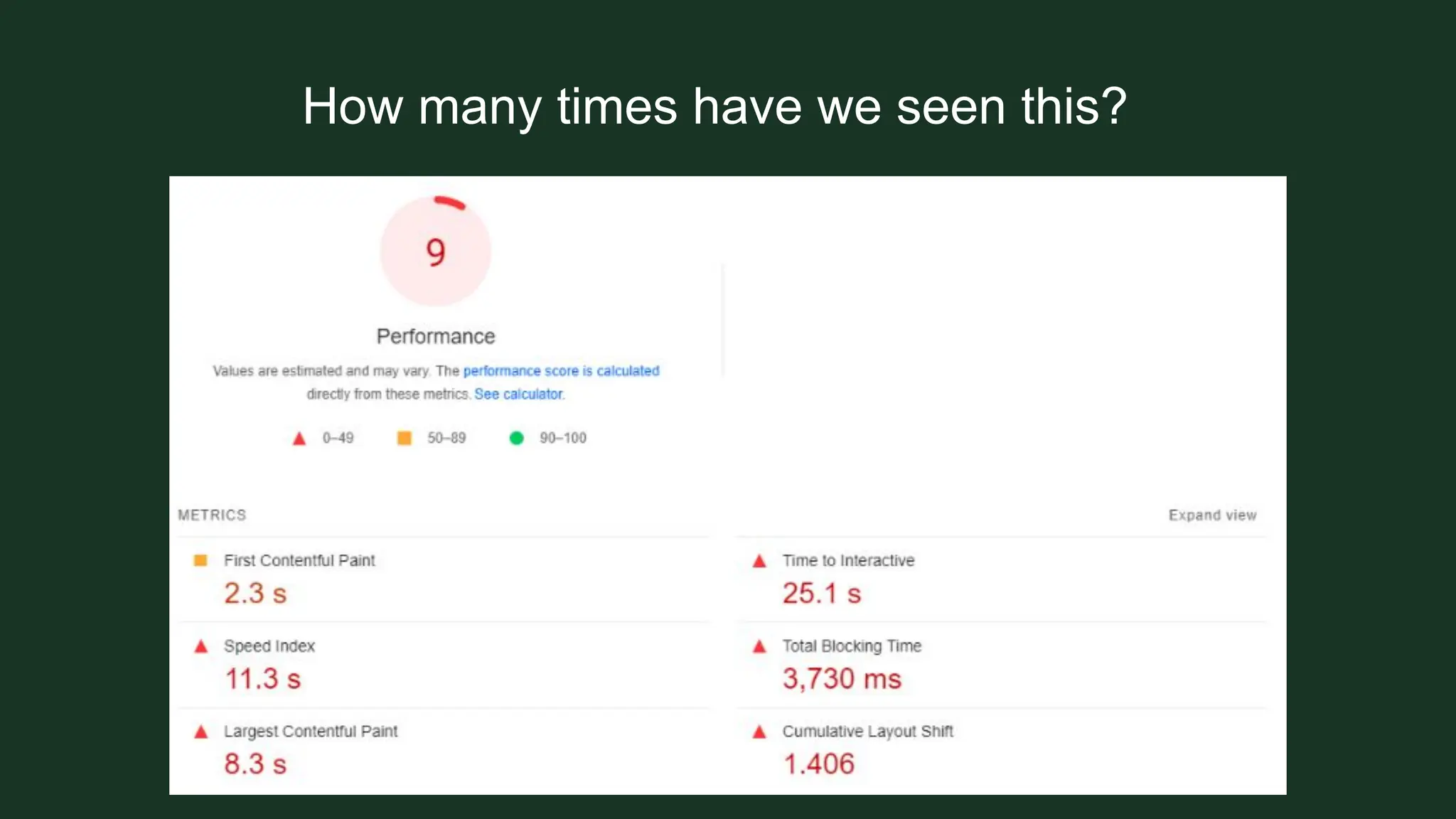

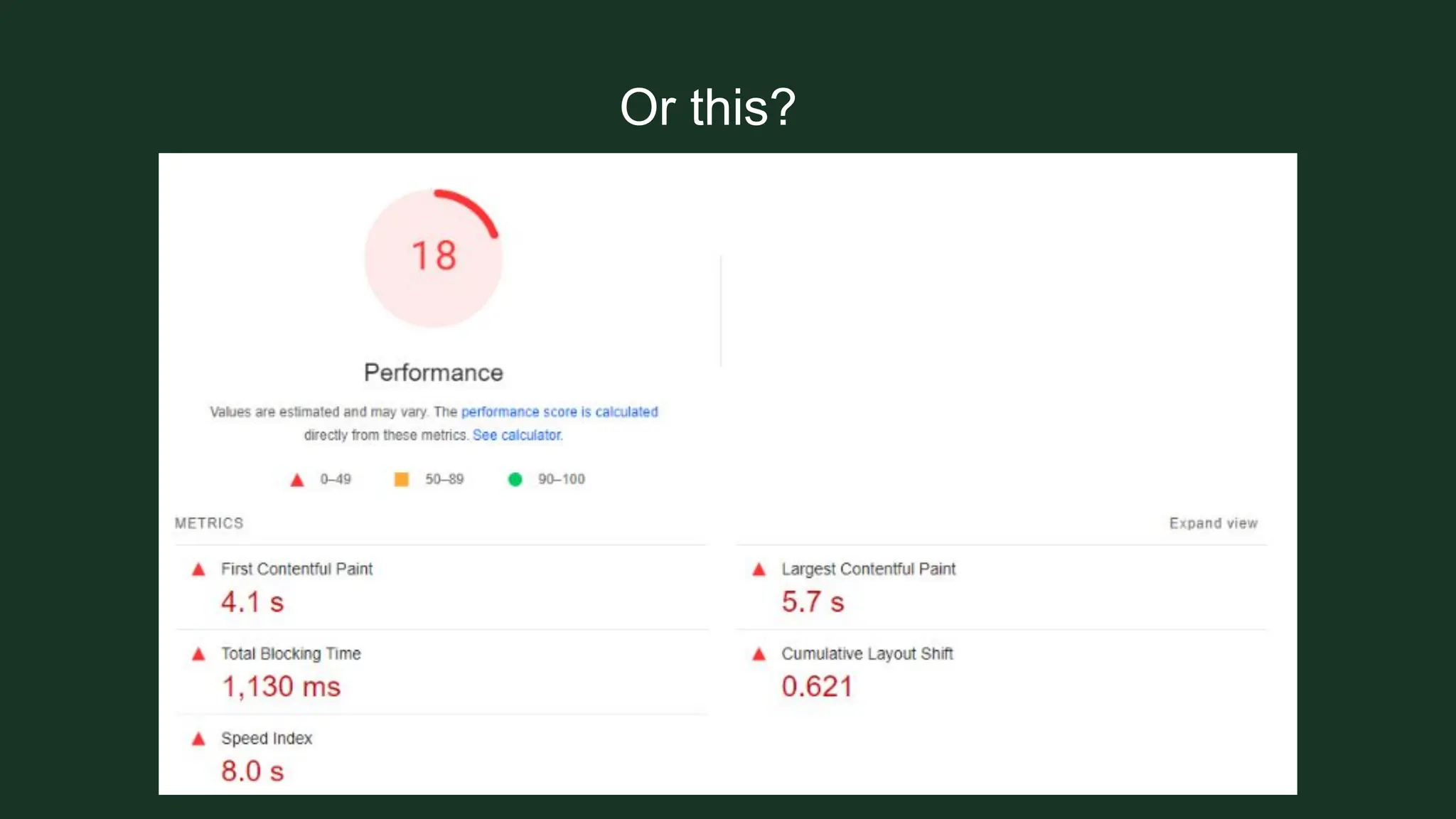

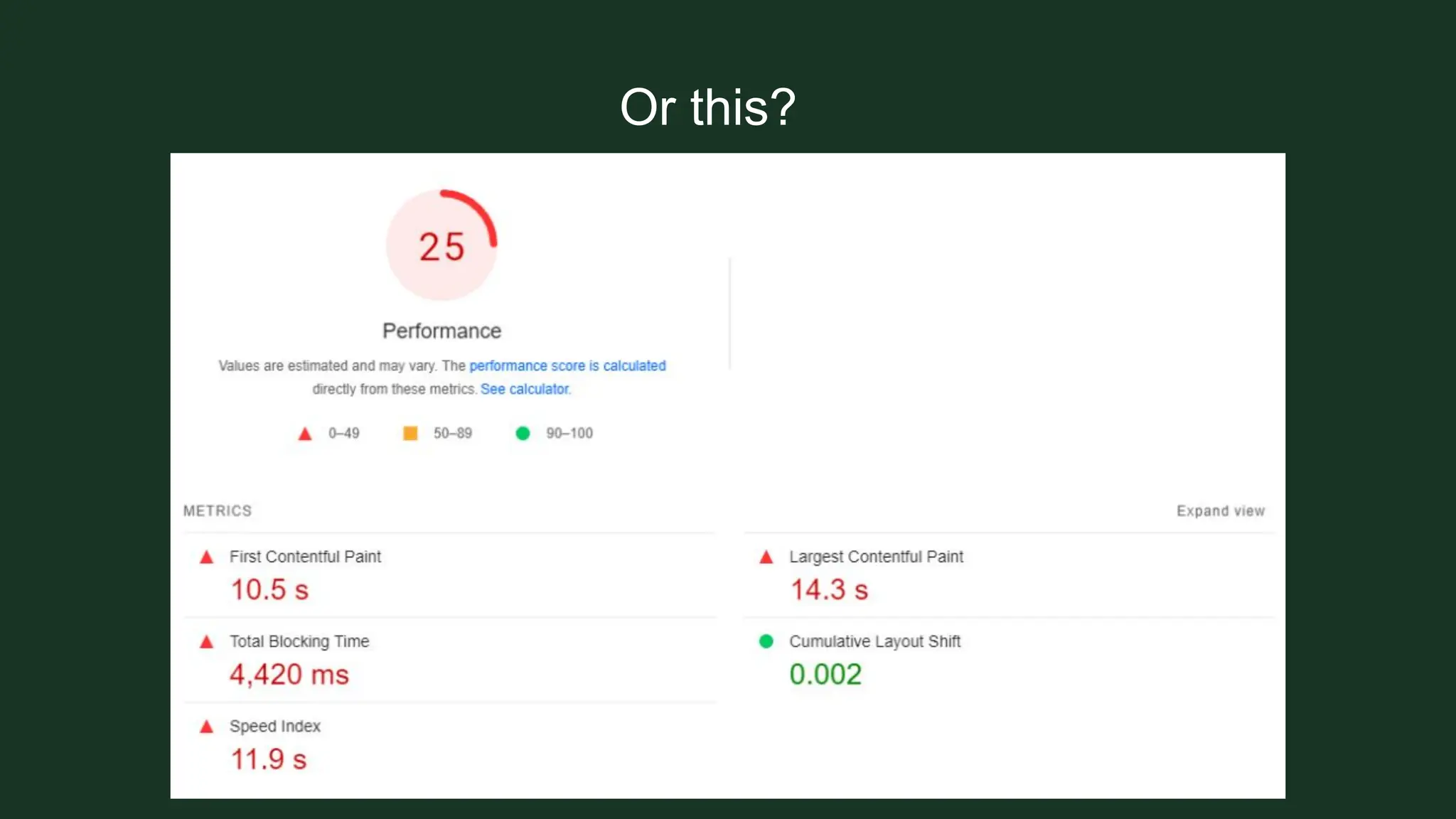

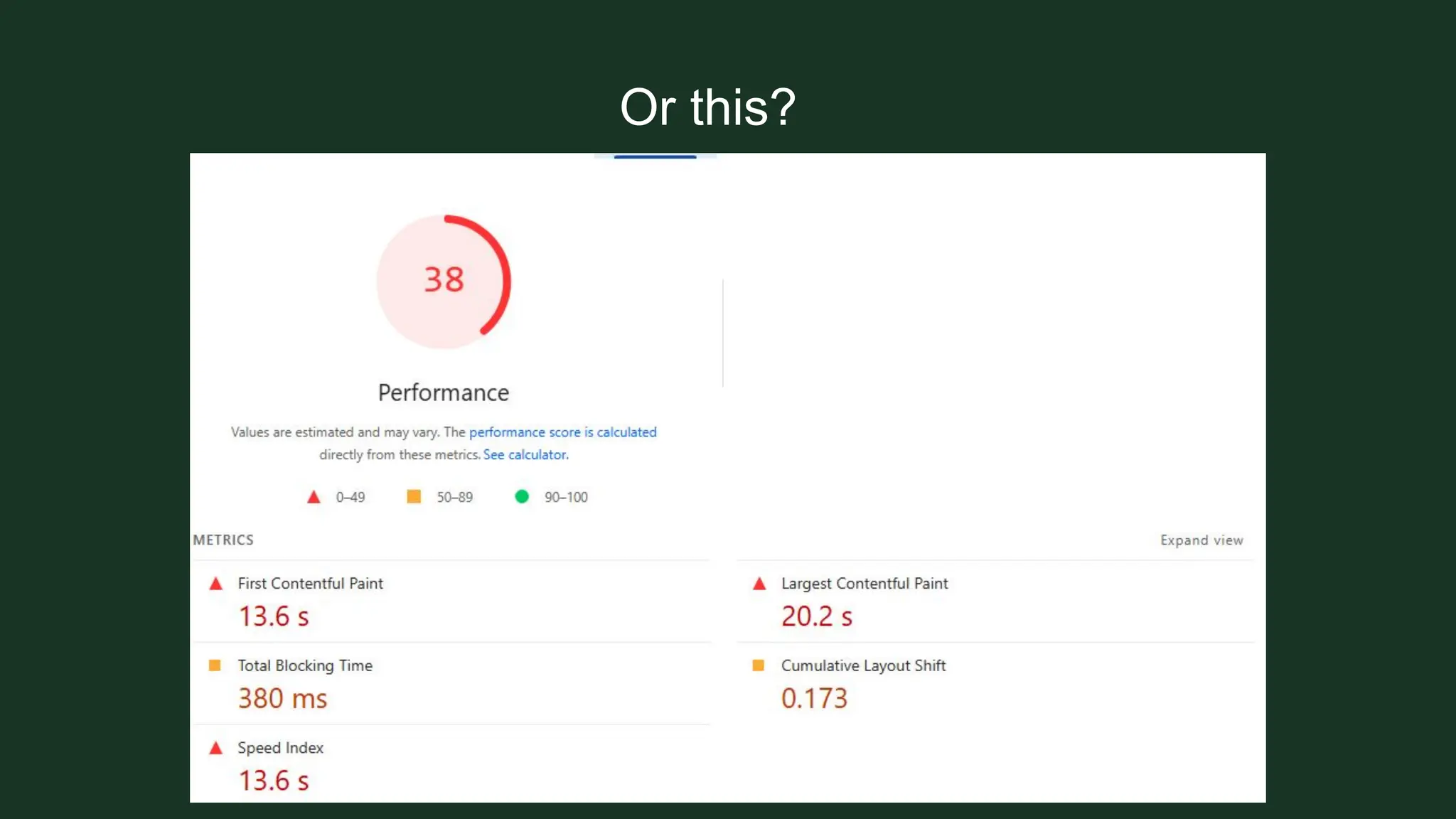

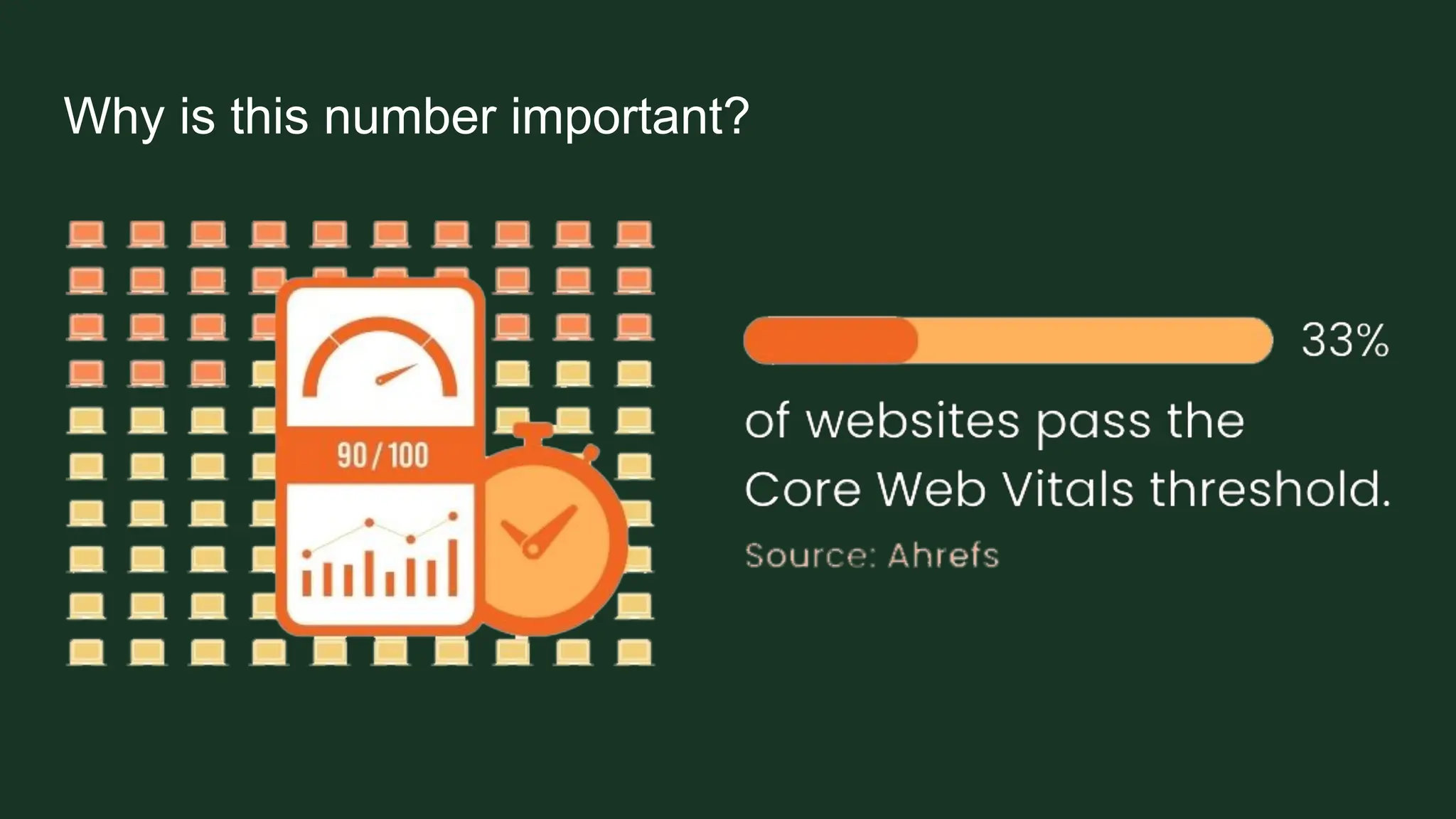

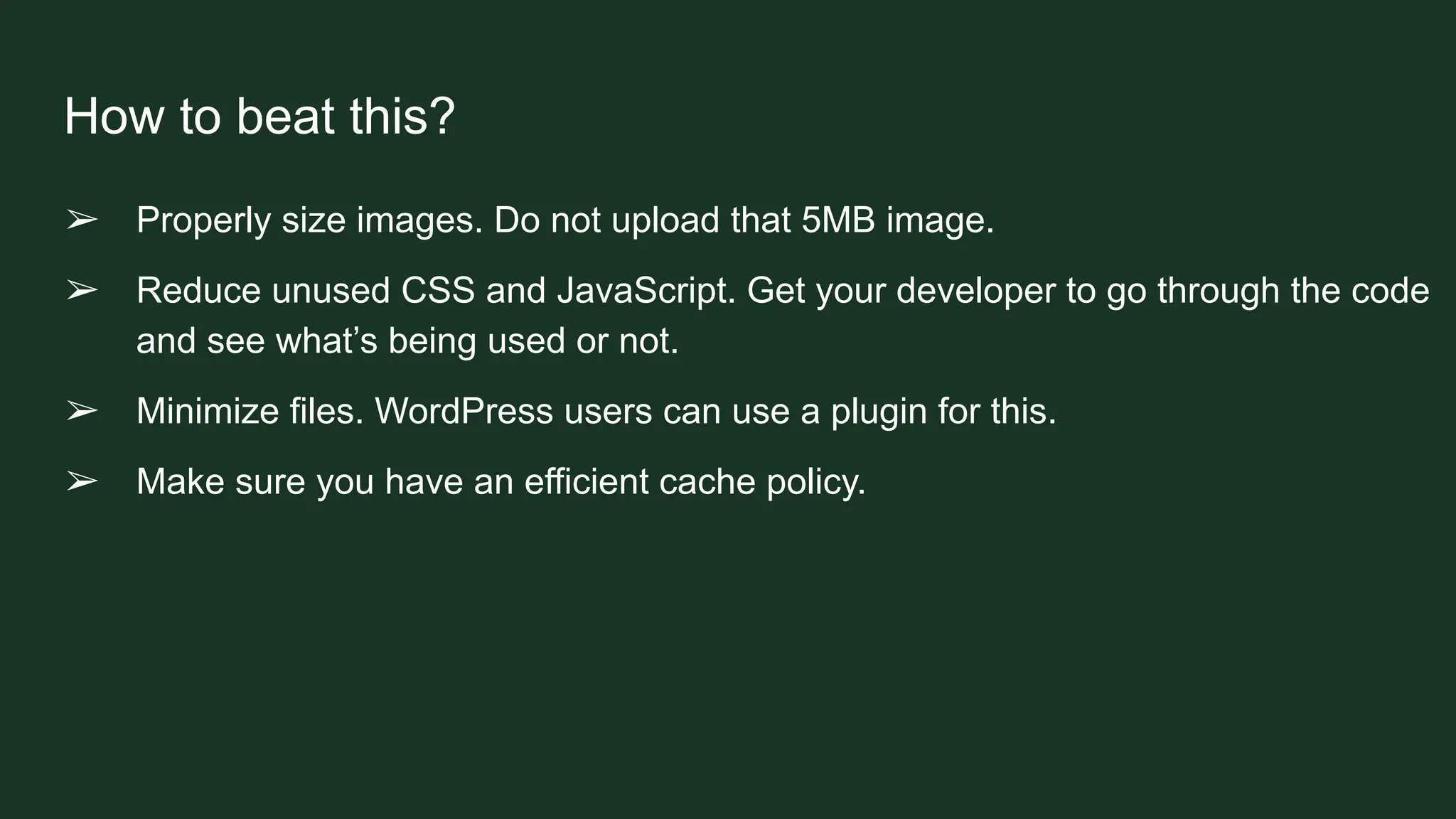

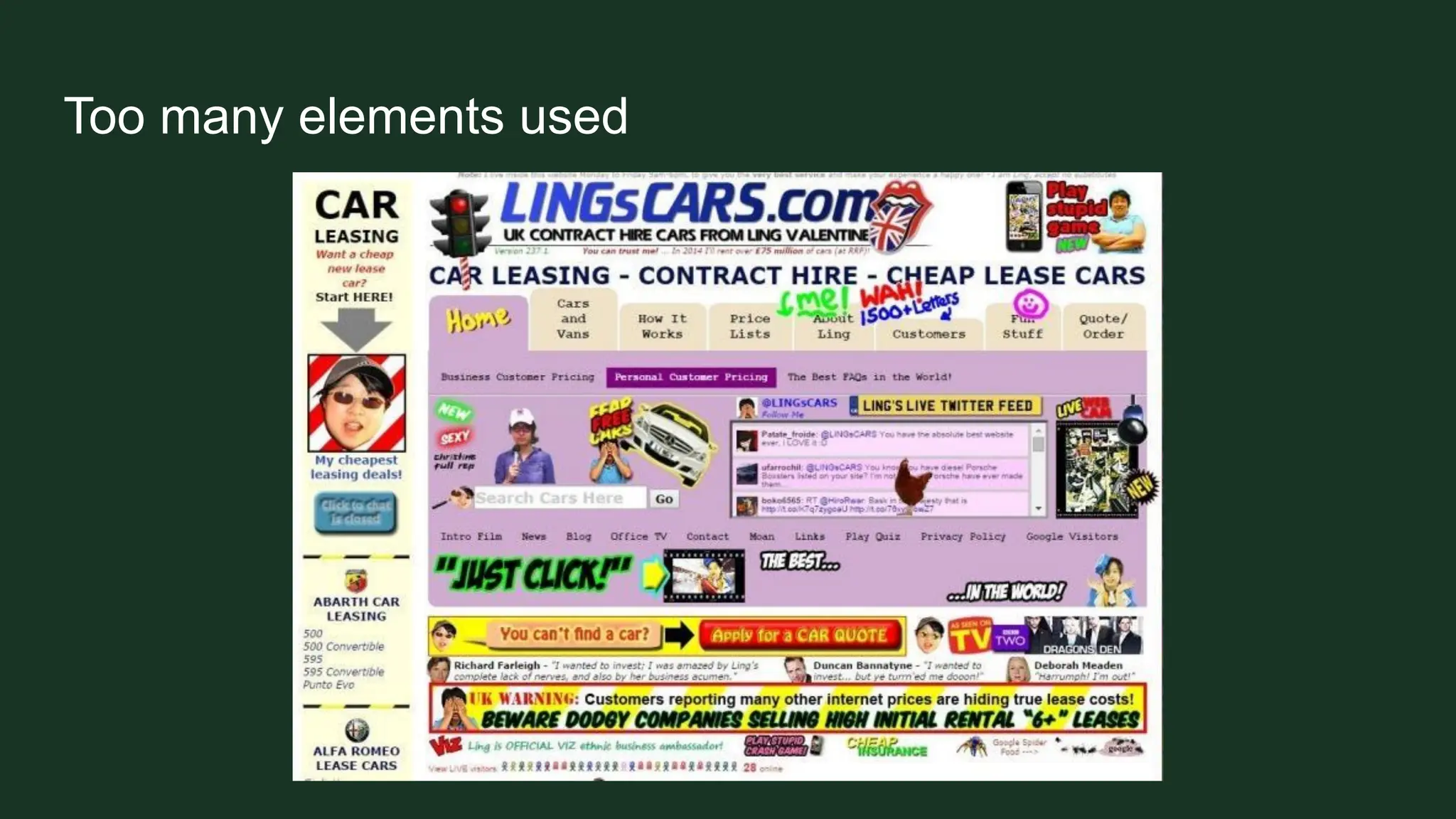

Description: Local businesses rely heavily on organic visibility to attract nearby customers, but they also need a strong technical SEO foundation. Most businesses either struggle with a technically challenging website or have no budget to fix it. But what happens when a page takes forever to load, or when Core Web Vitals fail? What does all this mean for local businesses? With examples, practical tips, and common mistakes, this talk breaks down the essential technical elements that directly impact local search performance and shows how to prioritize and address these issues.