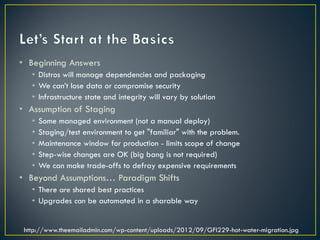

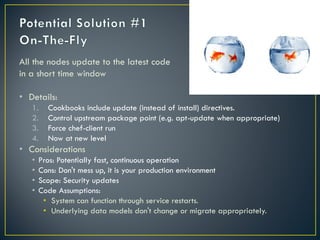

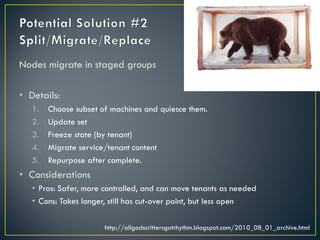

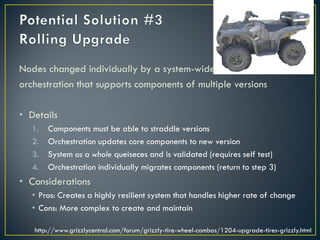

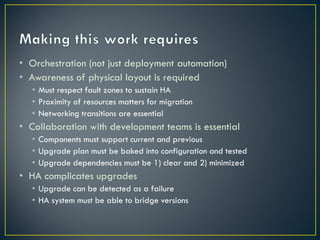

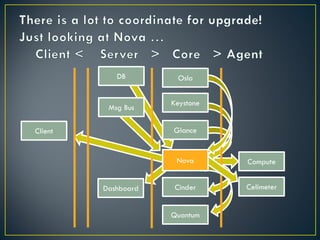

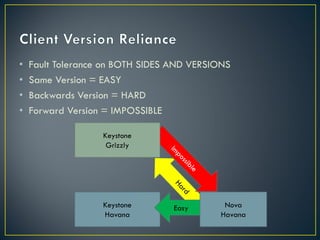

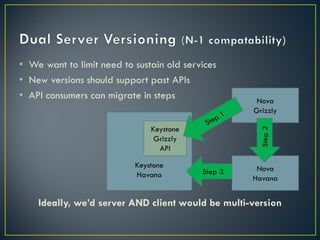

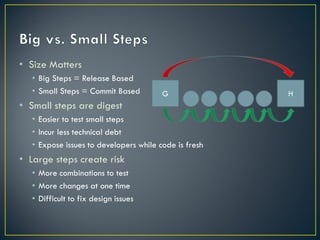

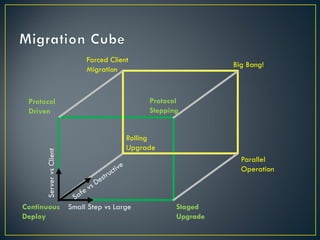

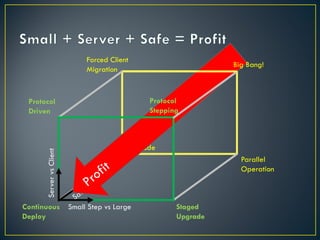

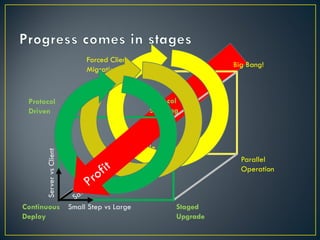

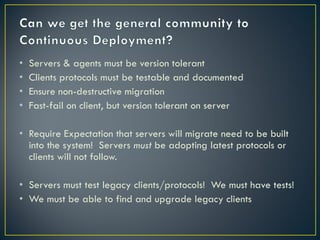

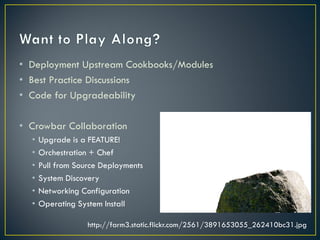

The document discusses the challenges of upgrading OpenStack deployments from one release to the next, and strategies for handling them. Because OpenStack ships new releases rapidly, upgrades are a recurring task, so the document stresses designing systems whose components can be upgraded in a rolling, continuous fashion across multiple versions. Effective upgrade strategies require operations and development teams to coordinate, so that new versions remain backward compatible and each upgrade can be tested in a controlled manner.
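To make "rolling upgrades through multiple versions" concrete, here is a minimal sketch under stated assumptions. It is not OpenStack tooling. The release names (`R1`, `R2`, `R3`), the `Node` type, and the one-release compatibility window (`max_skew=1`) are all hypothetical. The idea is that nodes are upgraded one at a time while the rest keep running, and at every intermediate step the mix of running versions must stay inside the window that backward compatibility is assumed to cover. Jumping more than one release at once is refused, so a long upgrade is performed as a sequence of single-release rolling steps.

```python
from dataclasses import dataclass

# Hypothetical release order; names are placeholders, not real OpenStack releases.
RELEASES = ["R1", "R2", "R3"]


def release_index(name: str) -> int:
    return RELEASES.index(name)


@dataclass
class Node:
    name: str
    version: str


def compatible(versions: list[str], max_skew: int = 1) -> bool:
    """True if all running versions lie within max_skew releases of each other.

    Assumption: releases within the window are backward compatible with each other.
    """
    idx = [release_index(v) for v in versions]
    return max(idx) - min(idx) <= max_skew


def rolling_upgrade(nodes: list[Node], target: str, max_skew: int = 1) -> list[list[str]]:
    """Upgrade nodes one at a time to `target`, checking version skew after each step.

    Returns the sequence of mixed-version states observed, for inspection.
    """
    if not compatible([n.version for n in nodes] + [target], max_skew):
        raise ValueError(f"{target} is too far ahead; upgrade through intermediate releases")
    states = []
    for node in nodes:
        node.version = target  # upgrade a single node; the others keep running
        current = [n.version for n in nodes]
        if not compatible(current, max_skew):
            raise RuntimeError(f"incompatible mix of versions: {current}")
        states.append(current)
    return states


def upgrade_path(nodes: list[Node], final: str, max_skew: int = 1) -> None:
    """Reach `final` by rolling through every intermediate release in order."""
    start = min(release_index(n.version) for n in nodes)
    for i in range(start + 1, release_index(final) + 1):
        rolling_upgrade(nodes, RELEASES[i], max_skew)


if __name__ == "__main__":
    cluster = [Node("api", "R1"), Node("compute-1", "R1"), Node("compute-2", "R1")]
    upgrade_path(cluster, "R3")
    print([n.version for n in cluster])
```

Running the script upgrades every node from `R1` to `R2`, then from `R2` to `R3`, and never leaves two running nodes more than one release apart. Because each intermediate state is recorded, it can also be exercised in a test environment before touching production, which is one way to get the controlled testing the document calls for.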