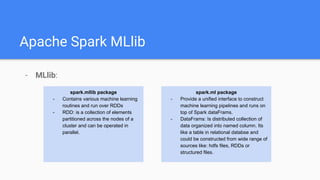

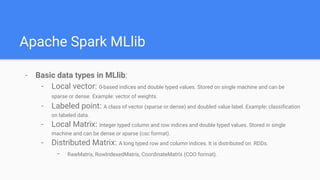

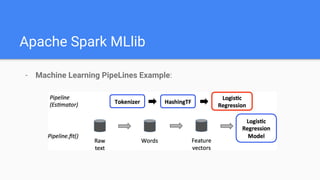

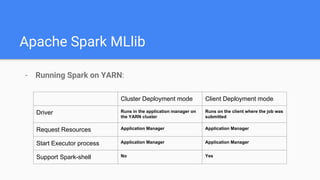

This document discusses Spark MLlib, which provides machine learning routines that can run on massive datasets distributed across a cluster. It describes MLlib's basic data types like vectors and matrices, common machine learning algorithms it supports like classification and clustering, and how to build machine learning pipelines. It also covers how to run Spark applications that use MLlib on a YARN cluster for distributed processing of large datasets.