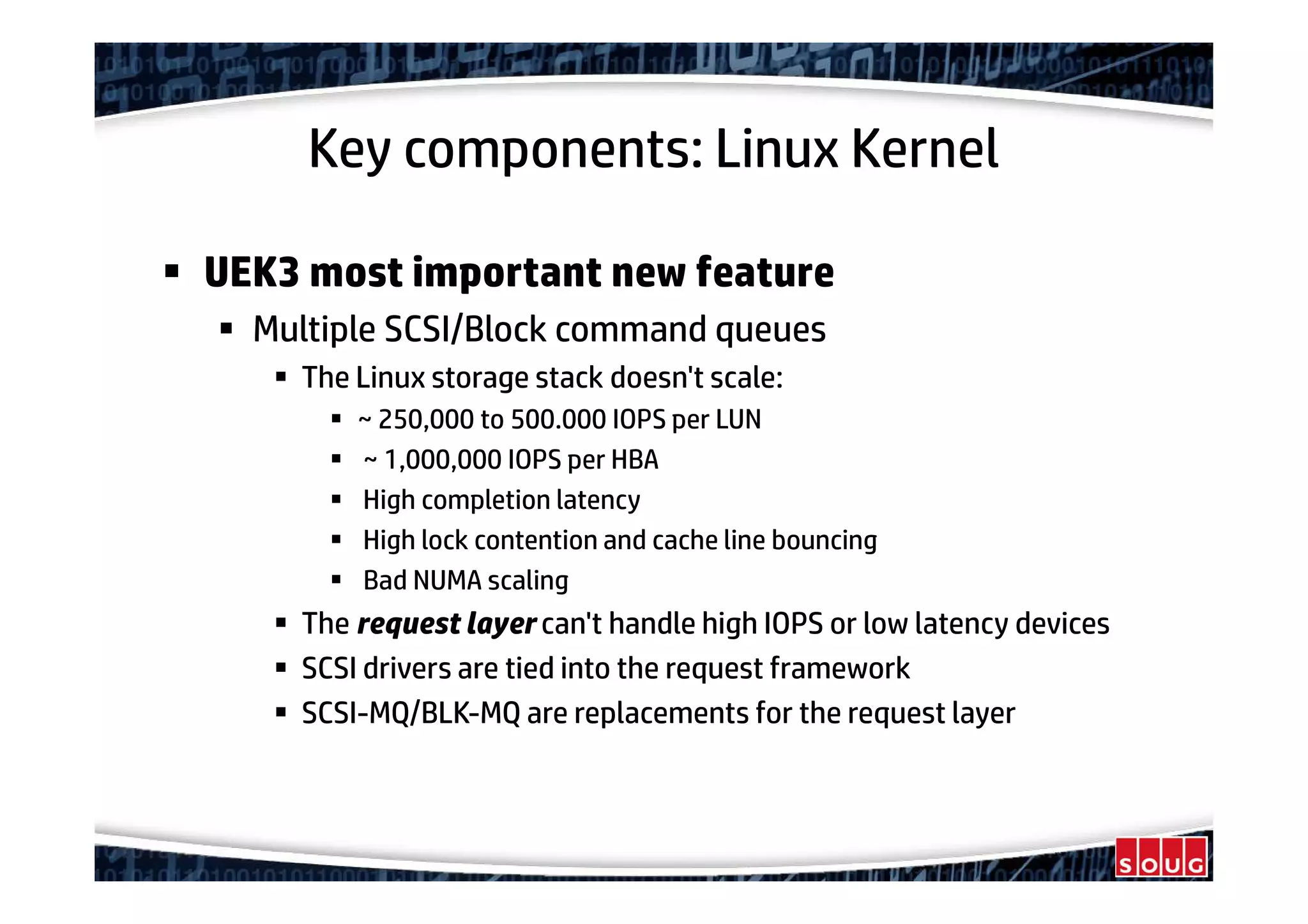

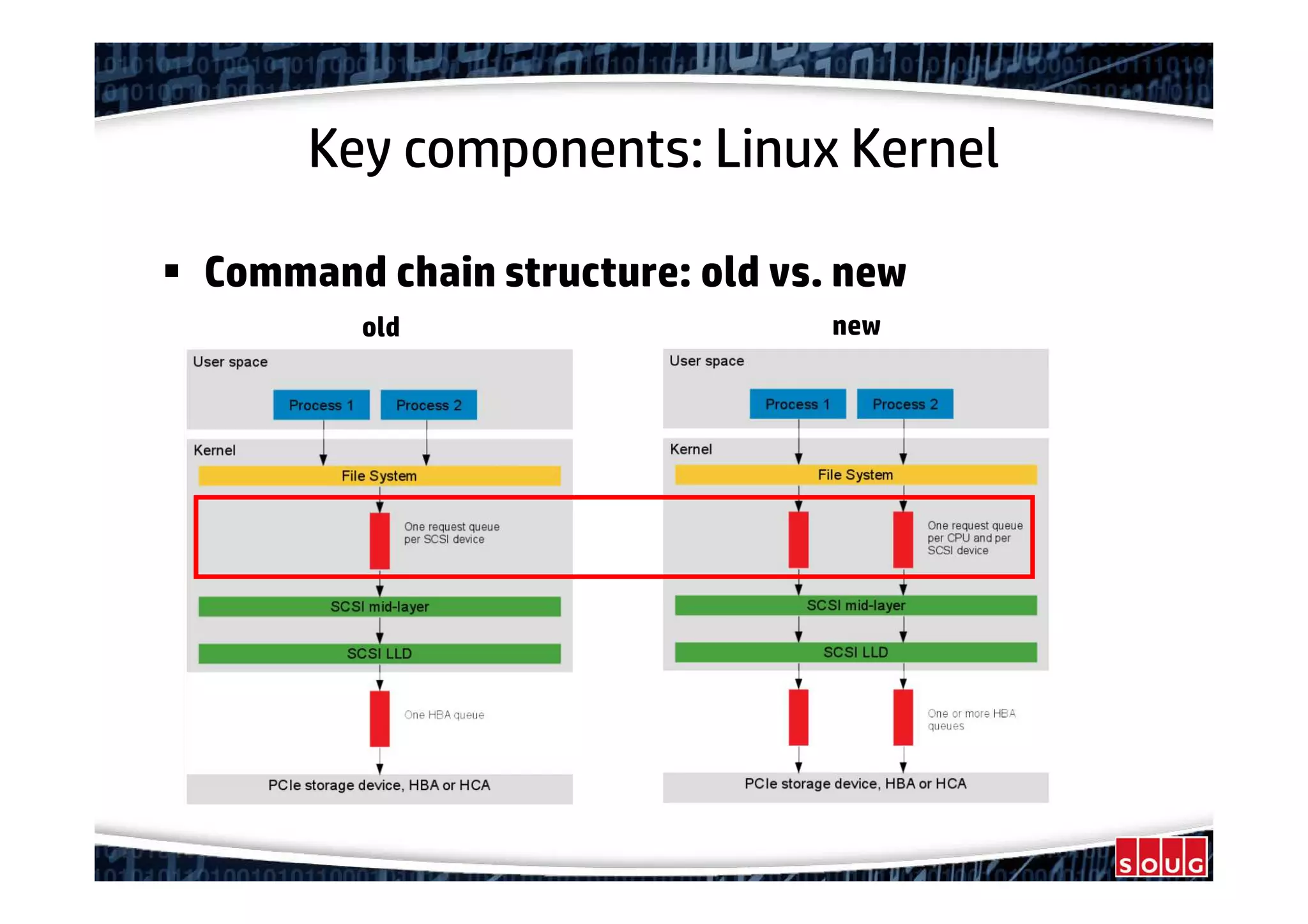

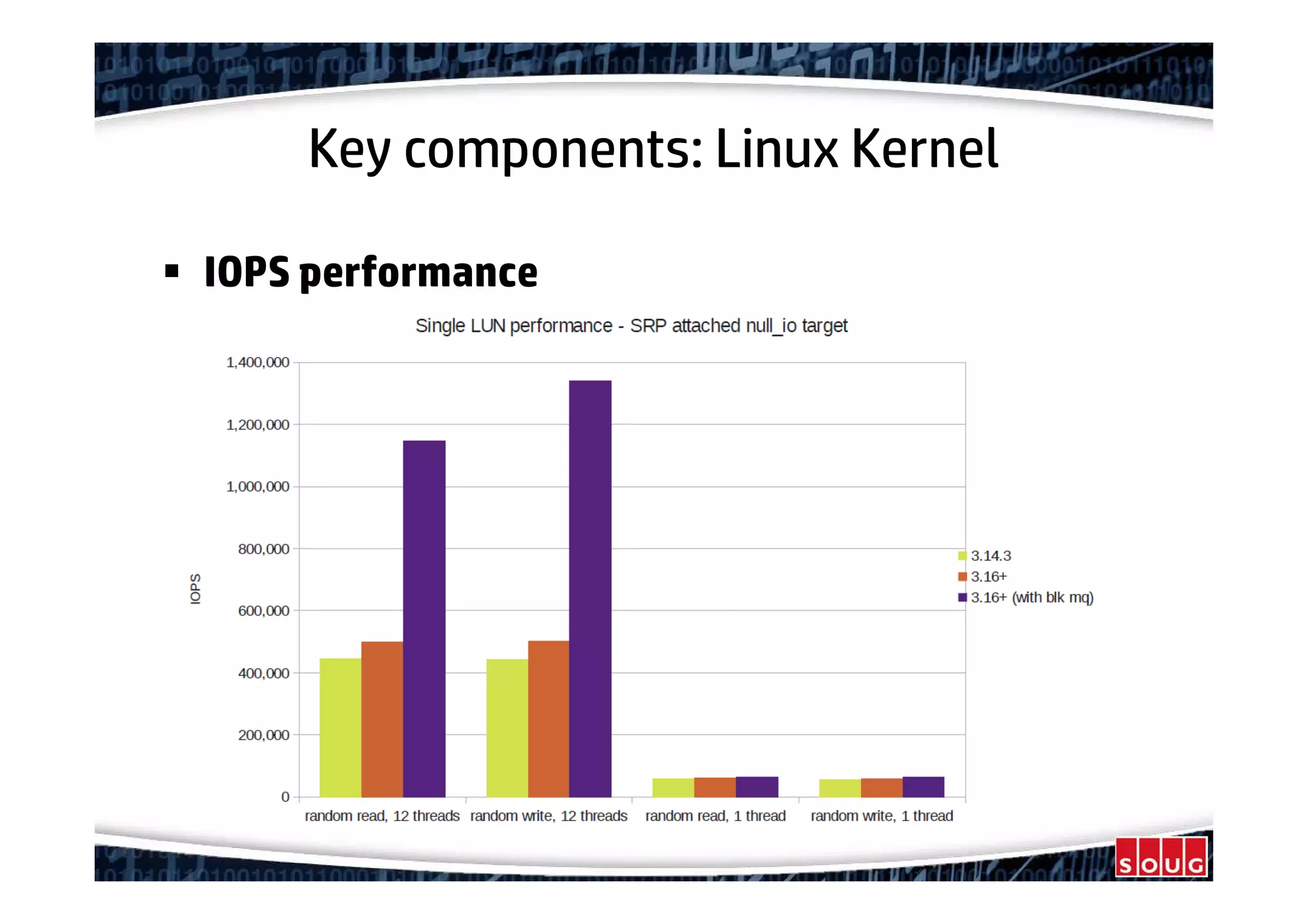

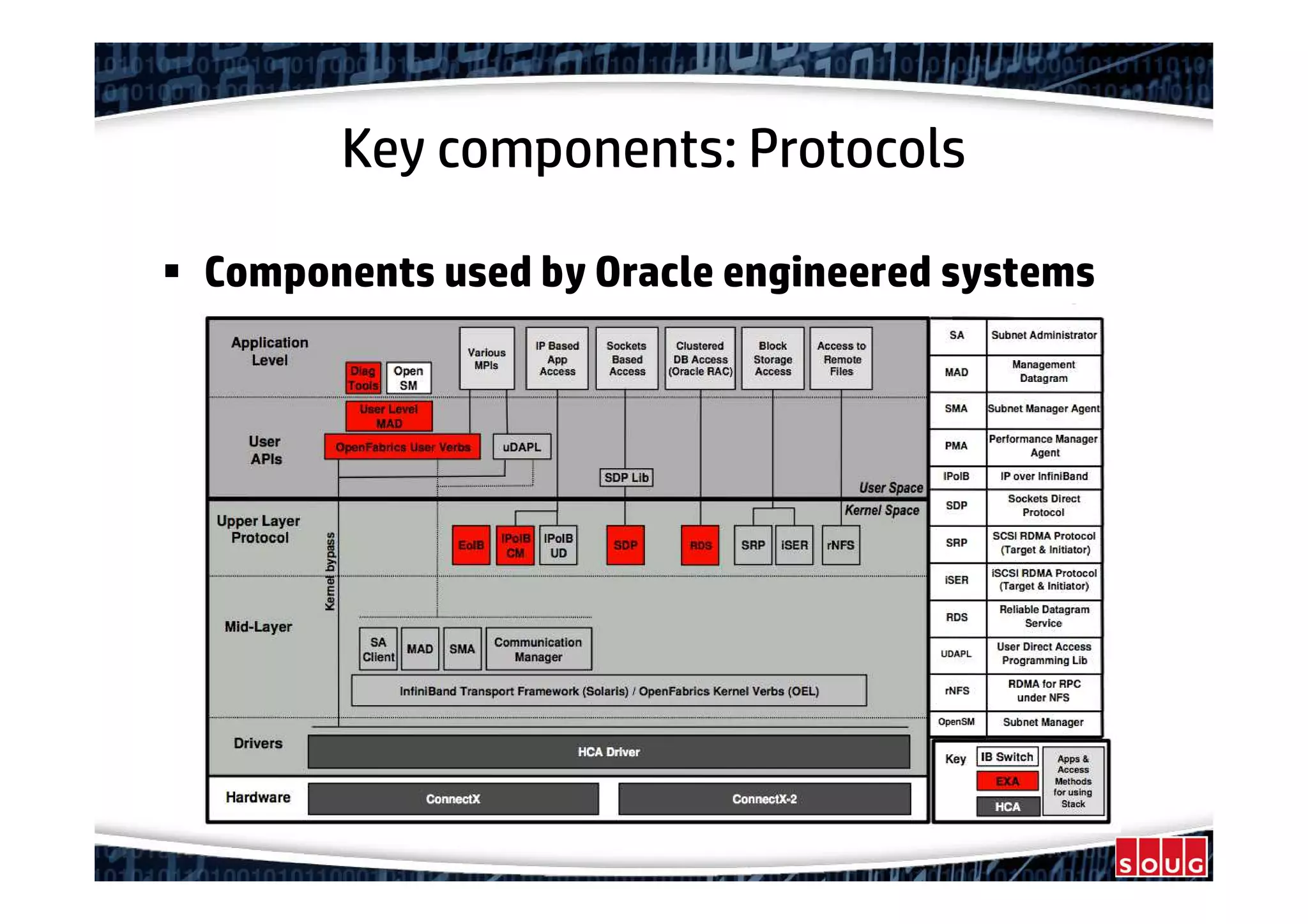

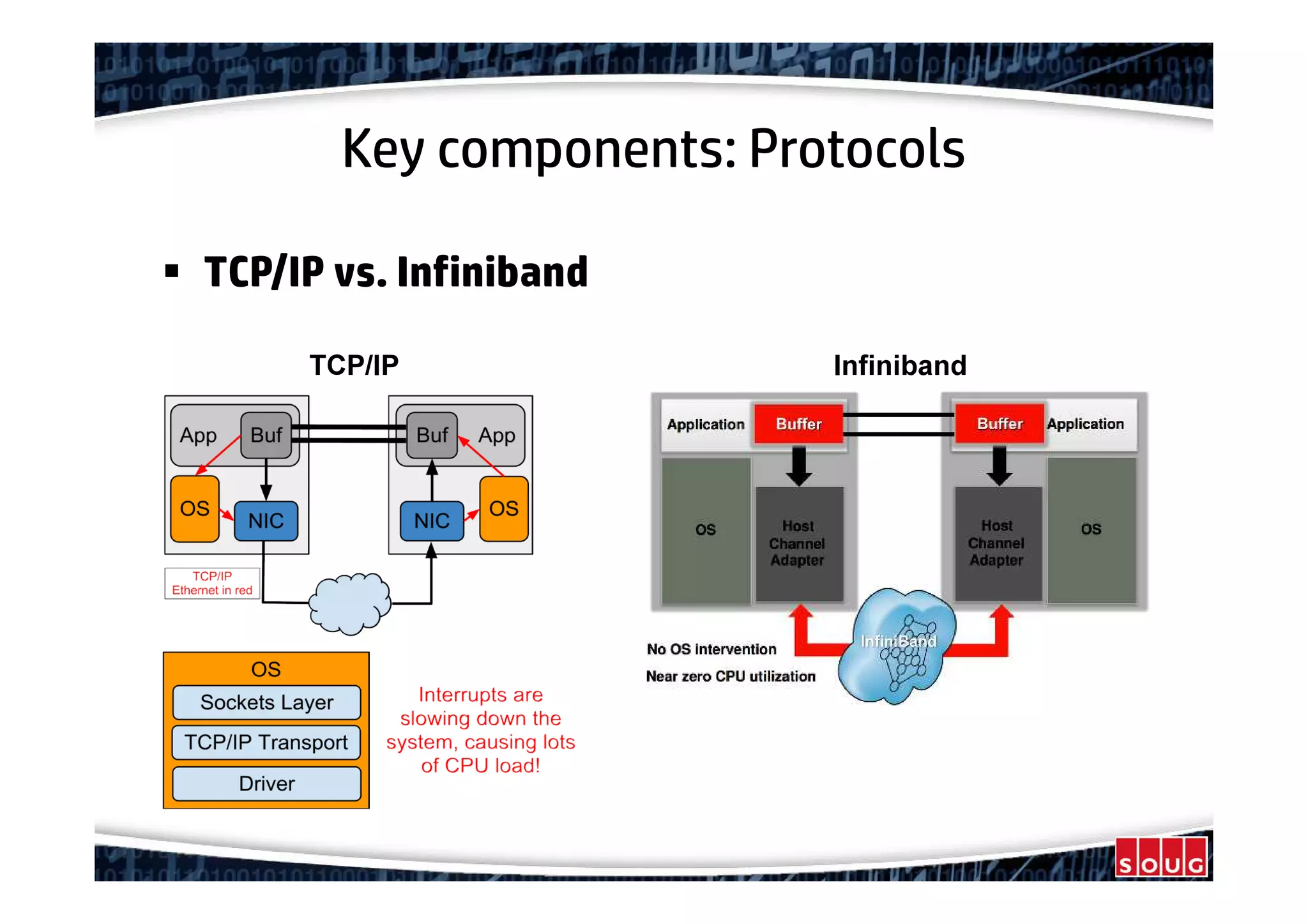

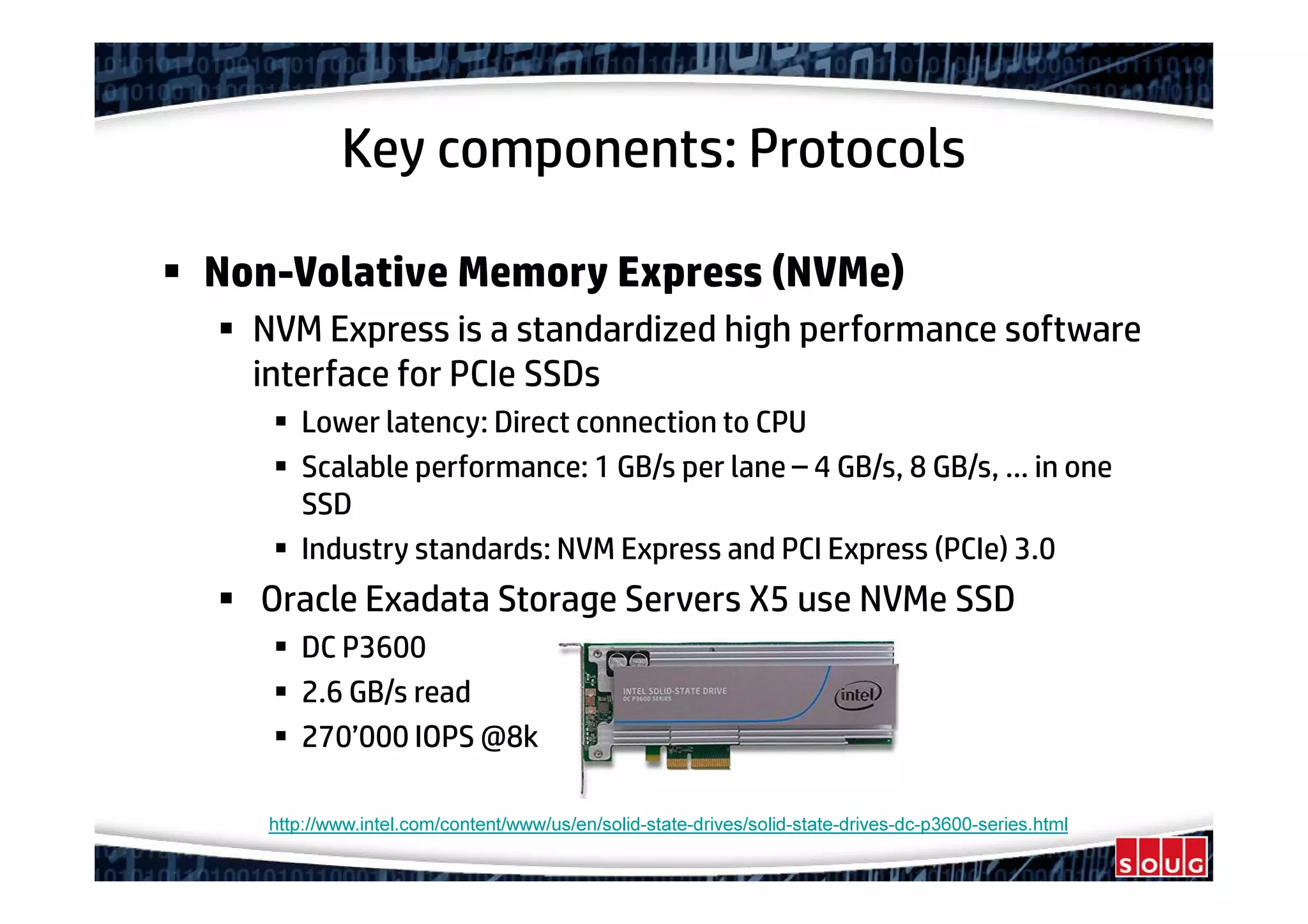

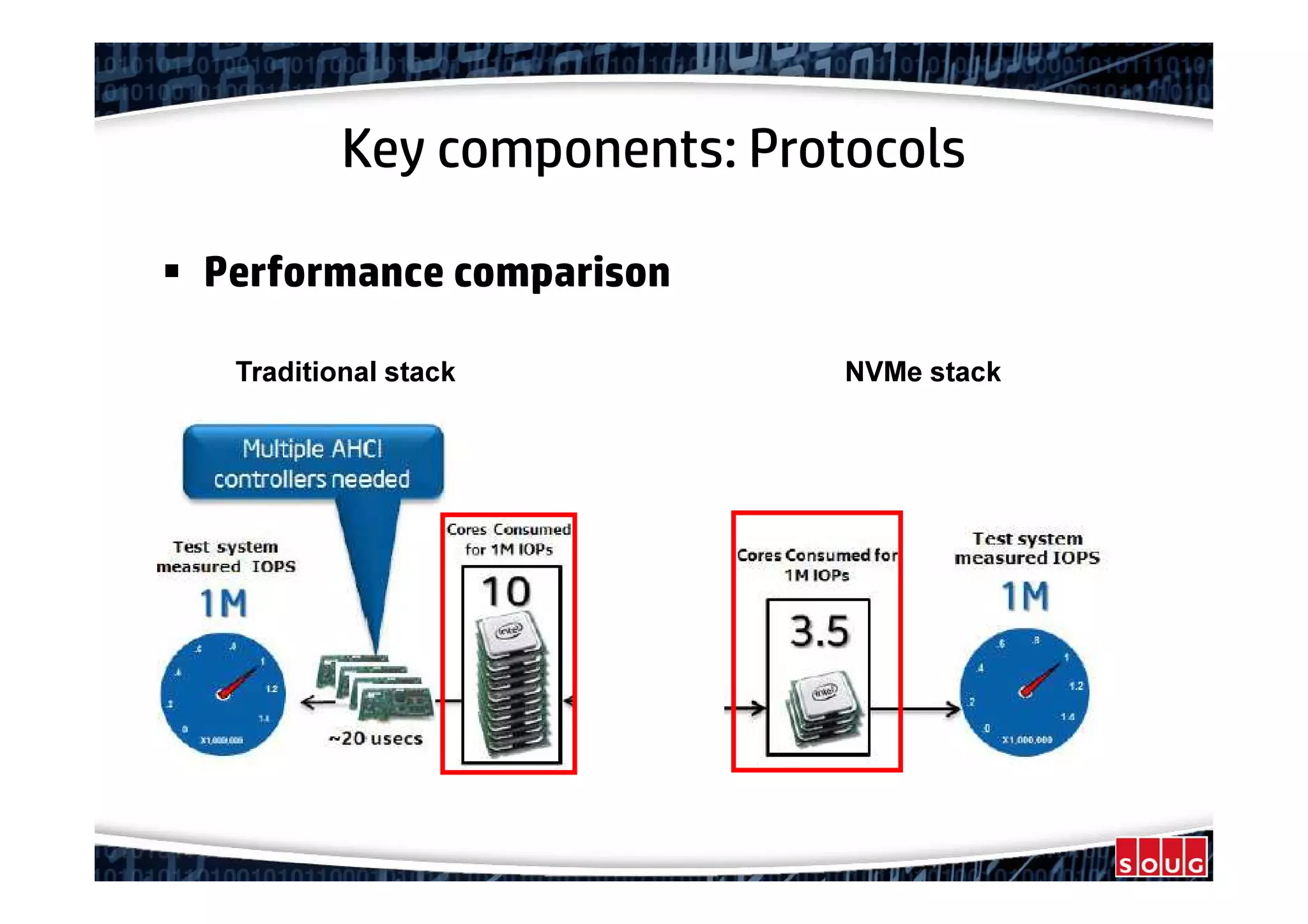

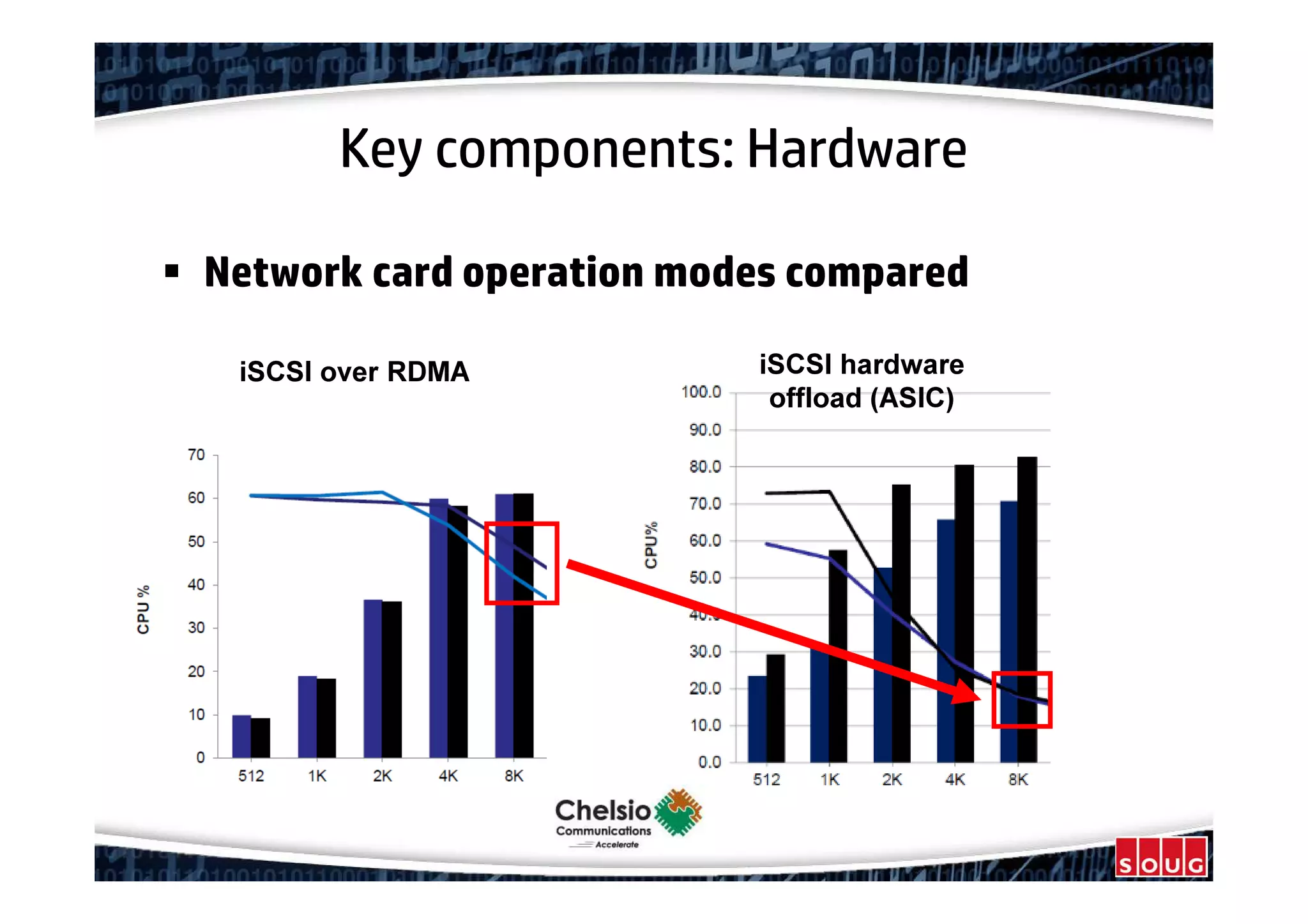

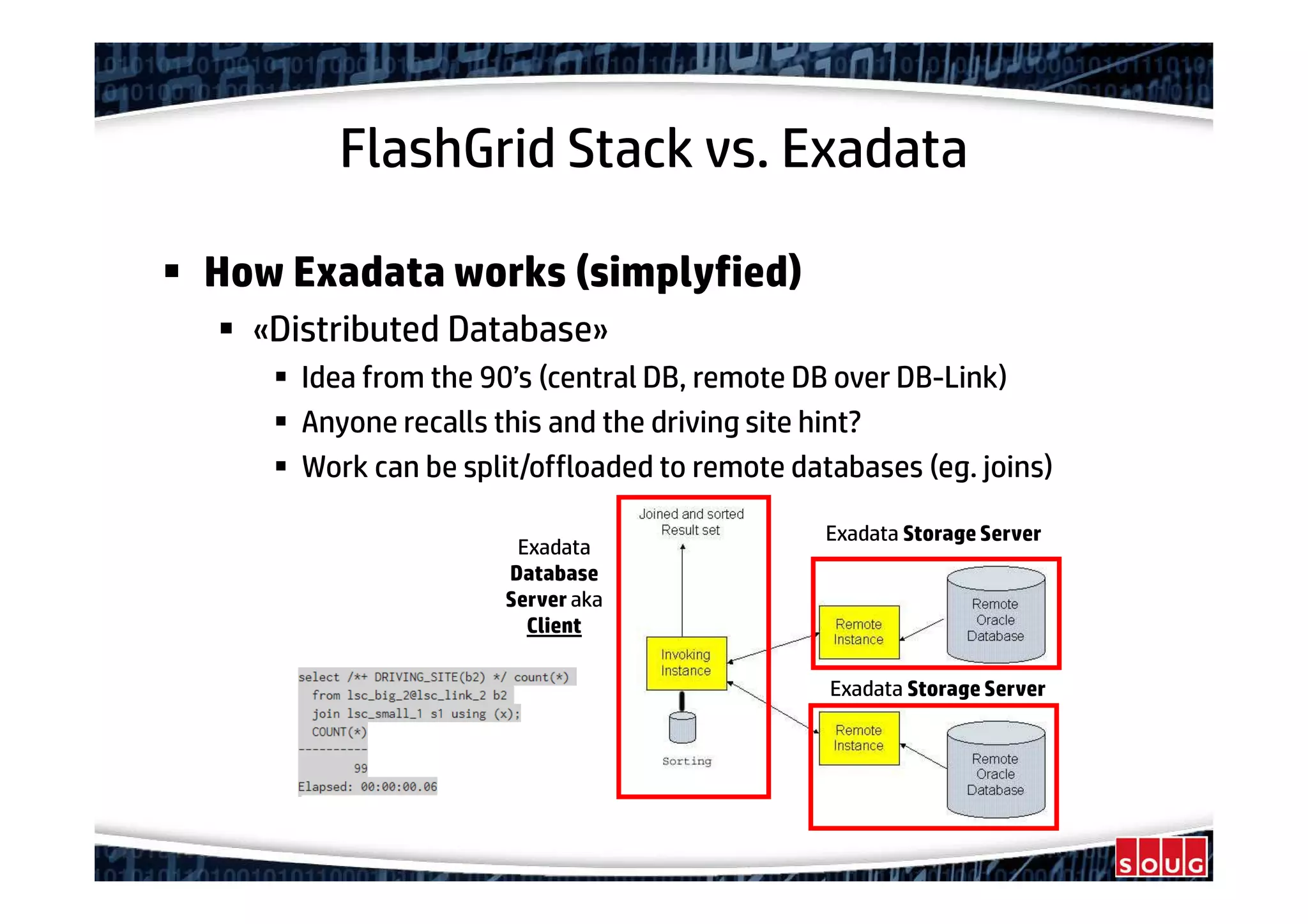

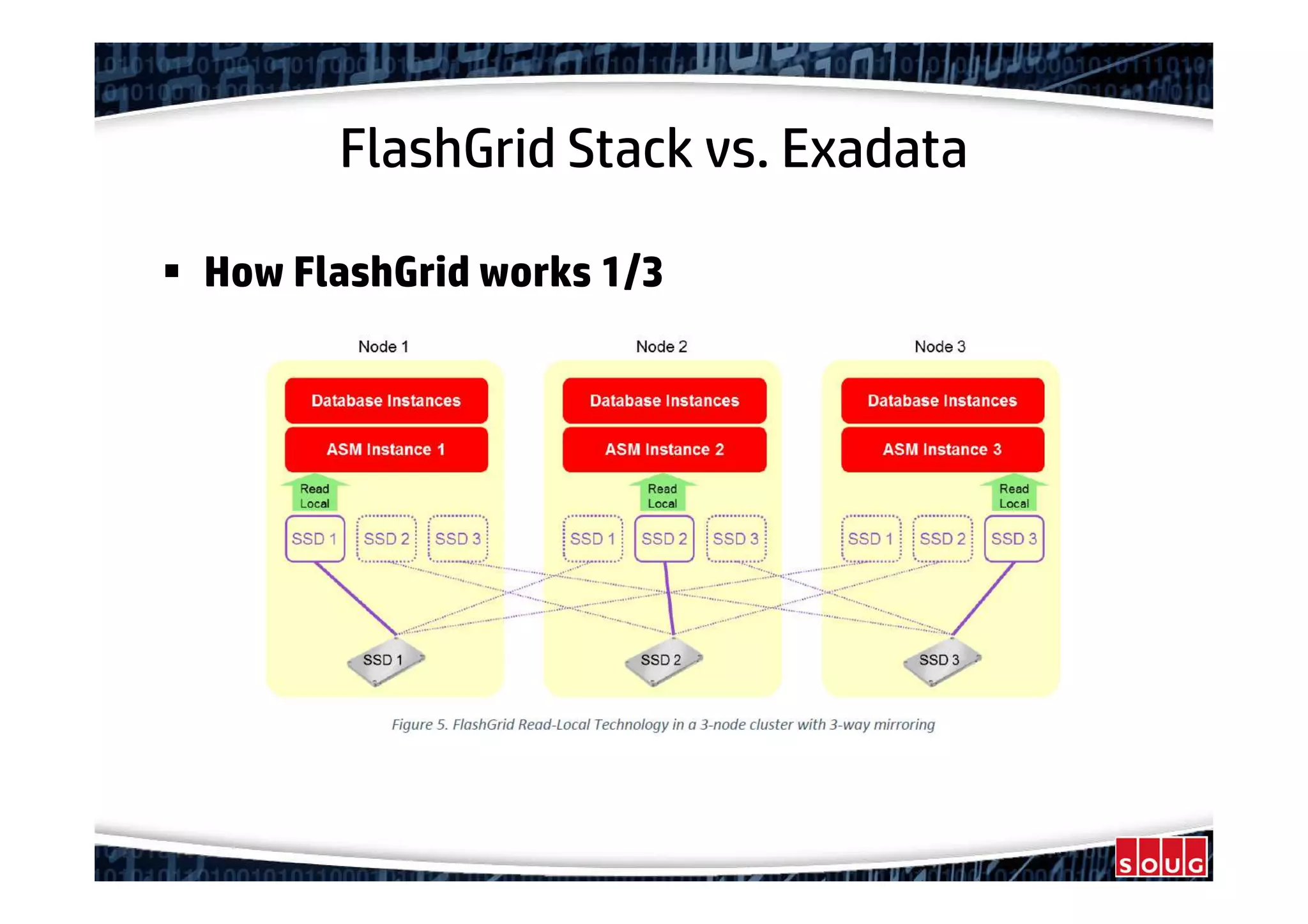

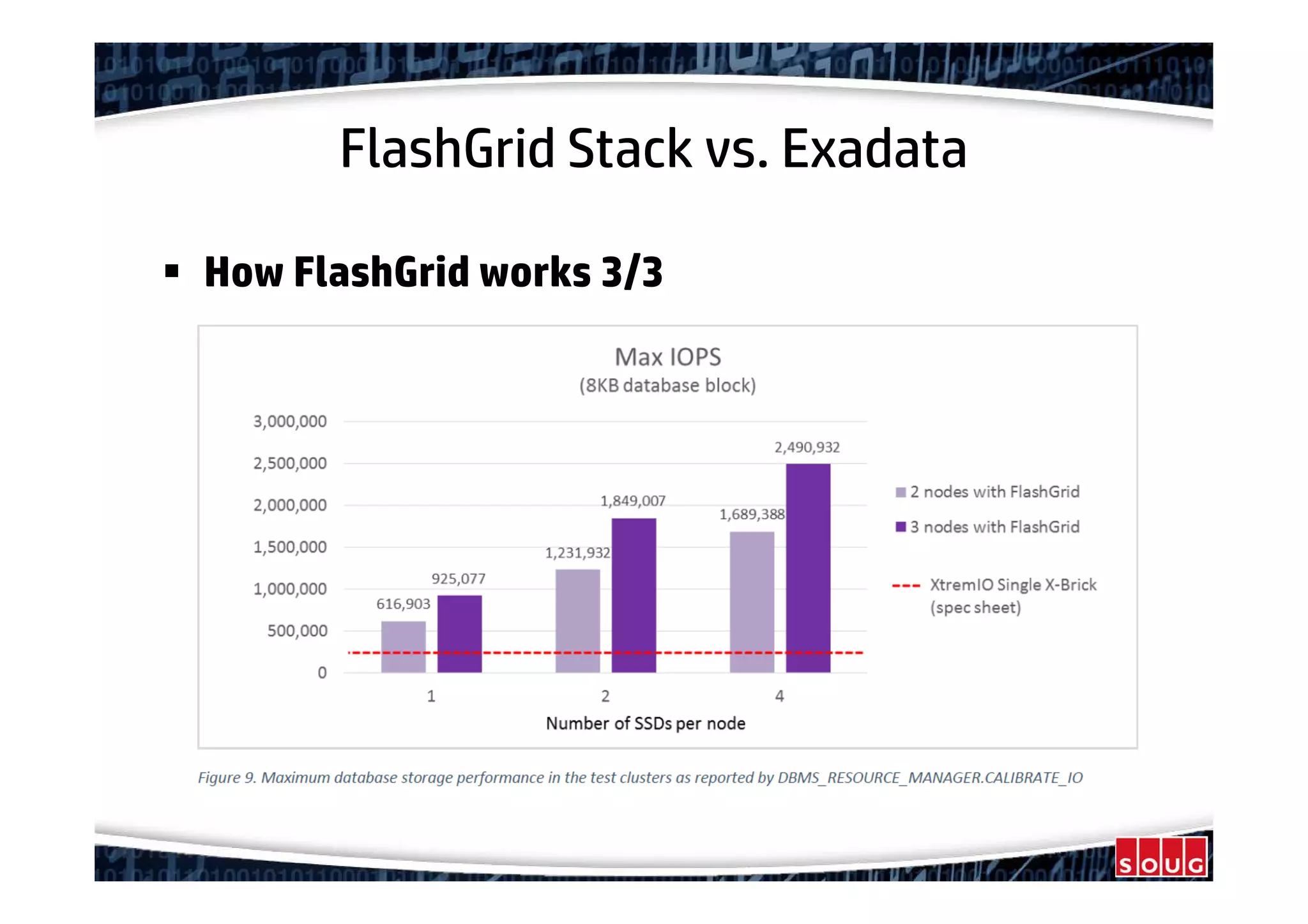

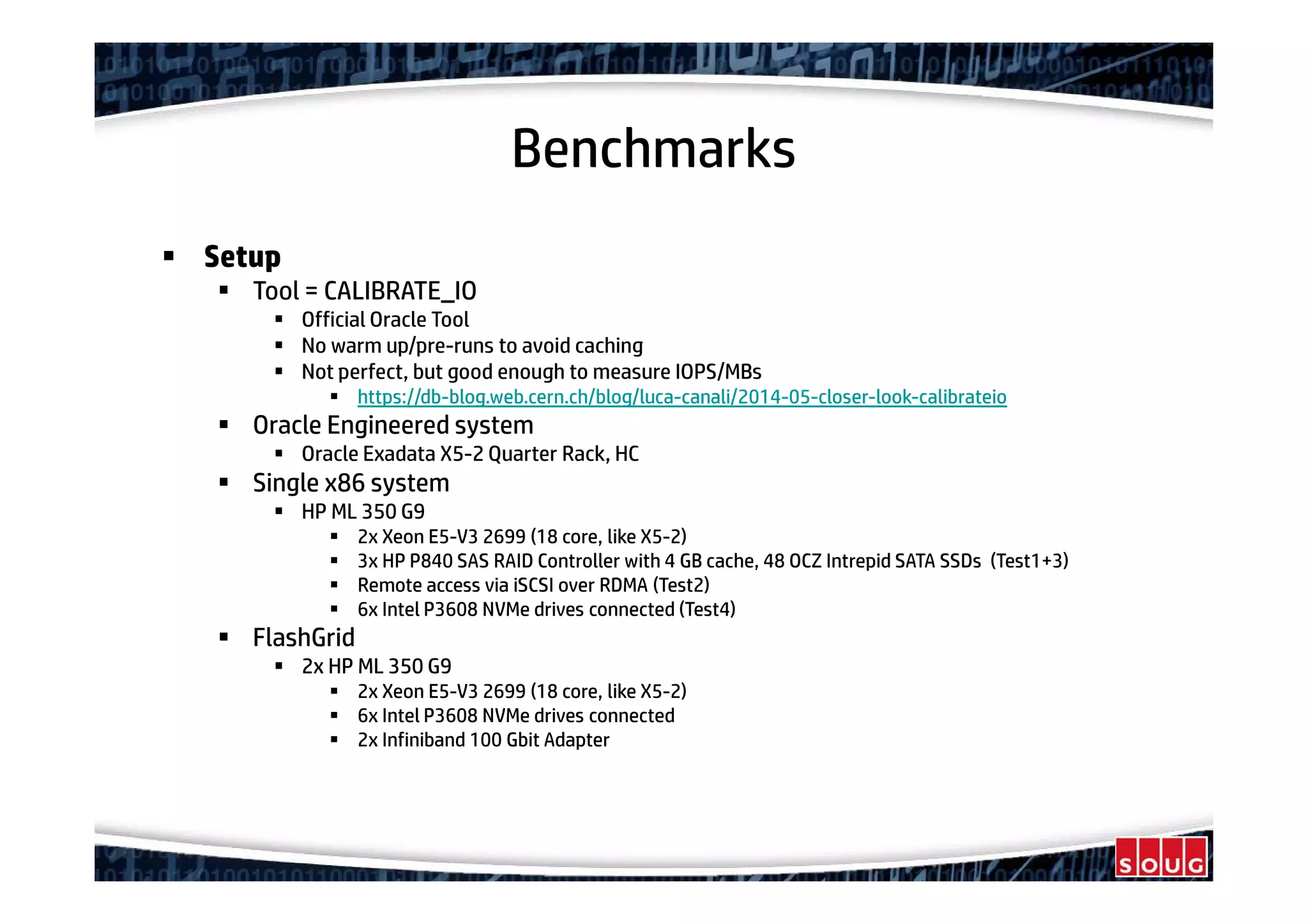

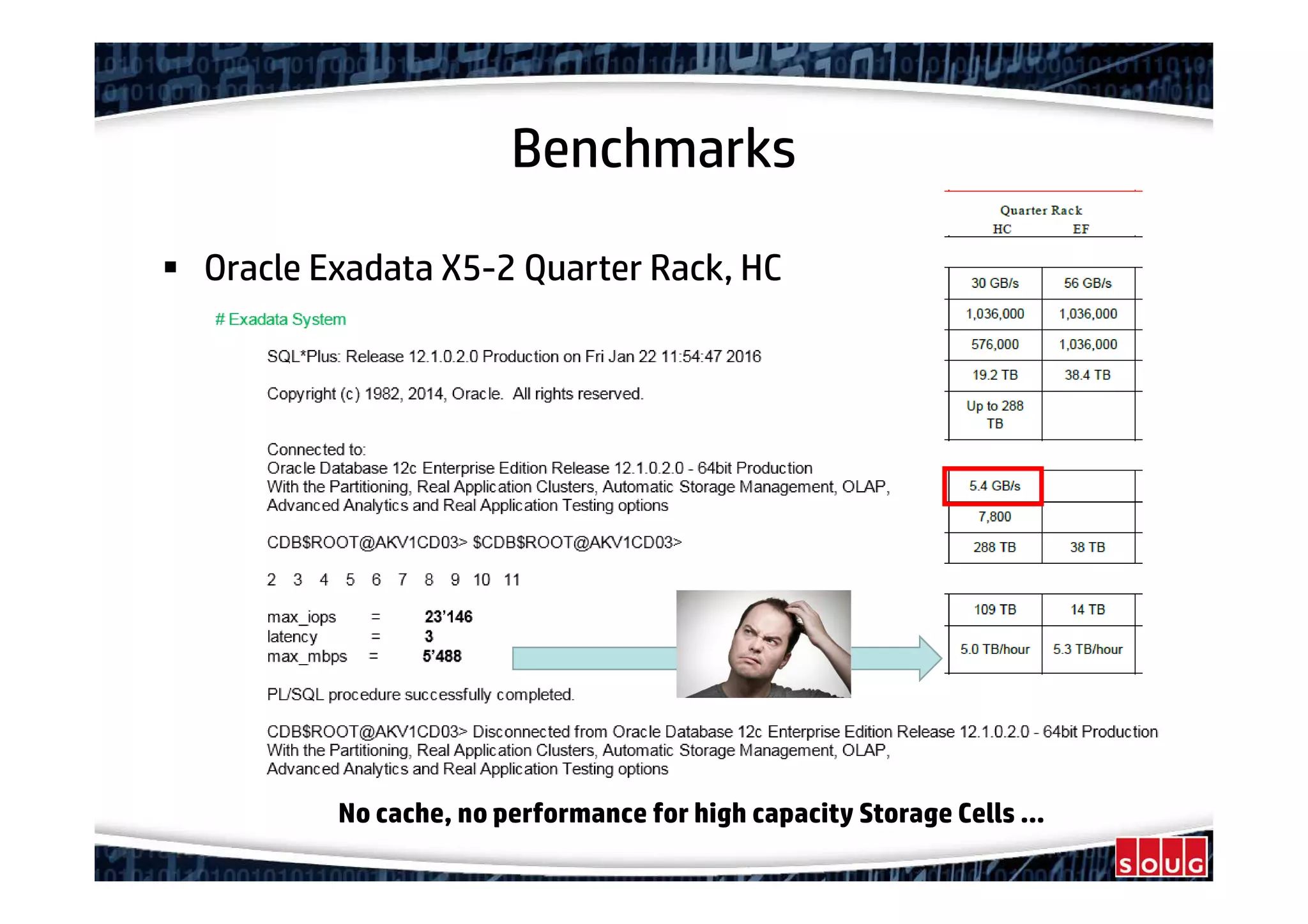

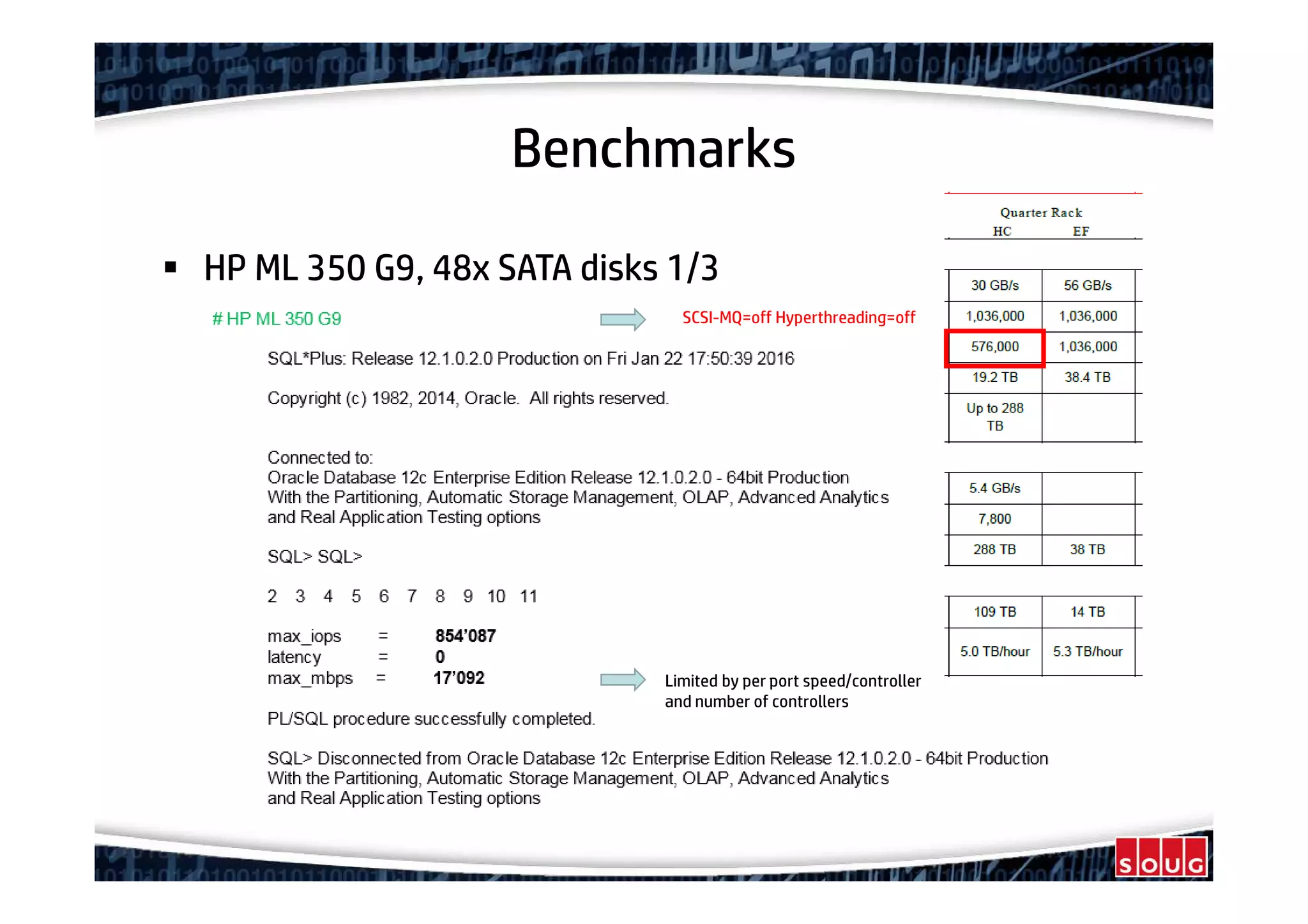

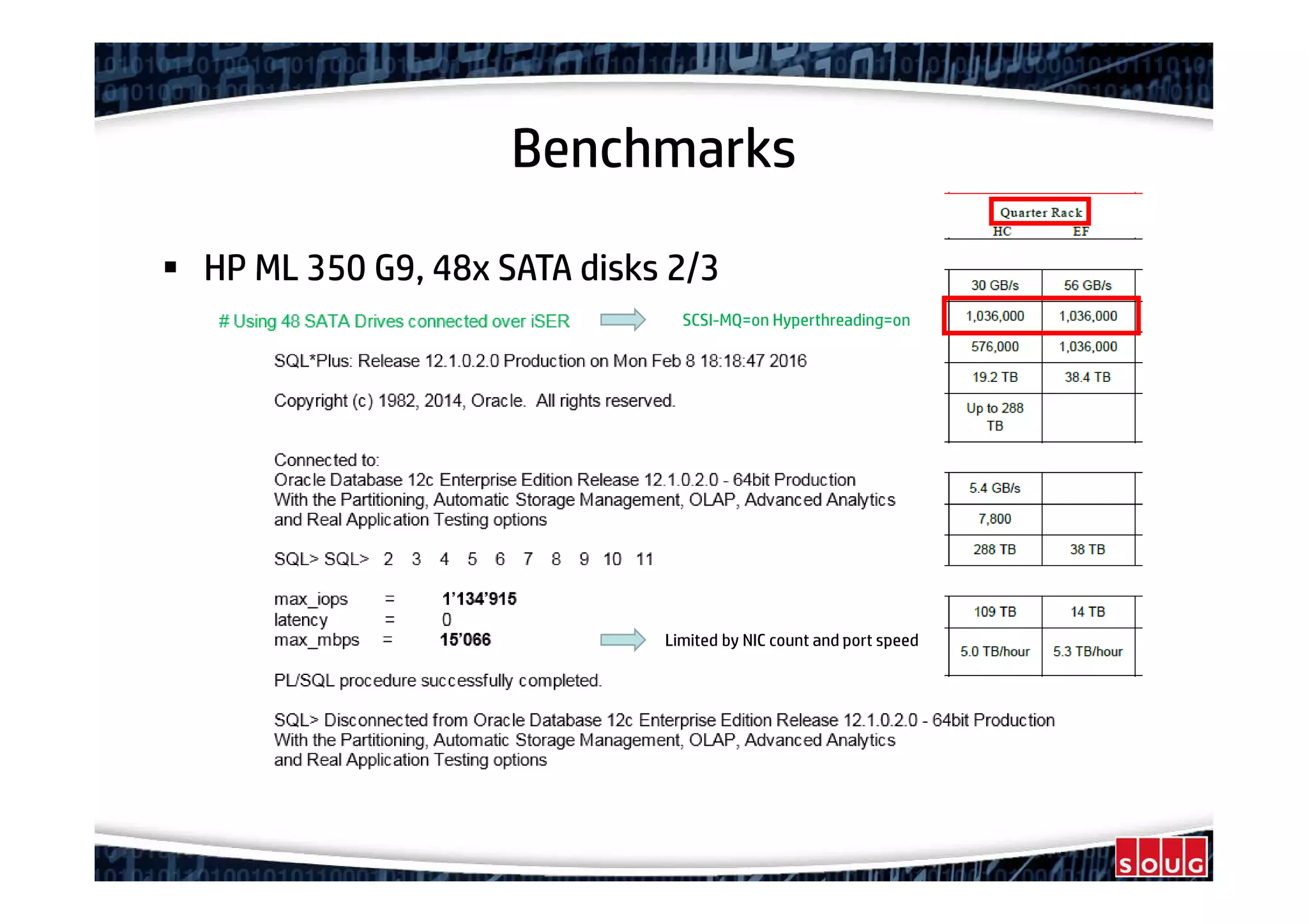

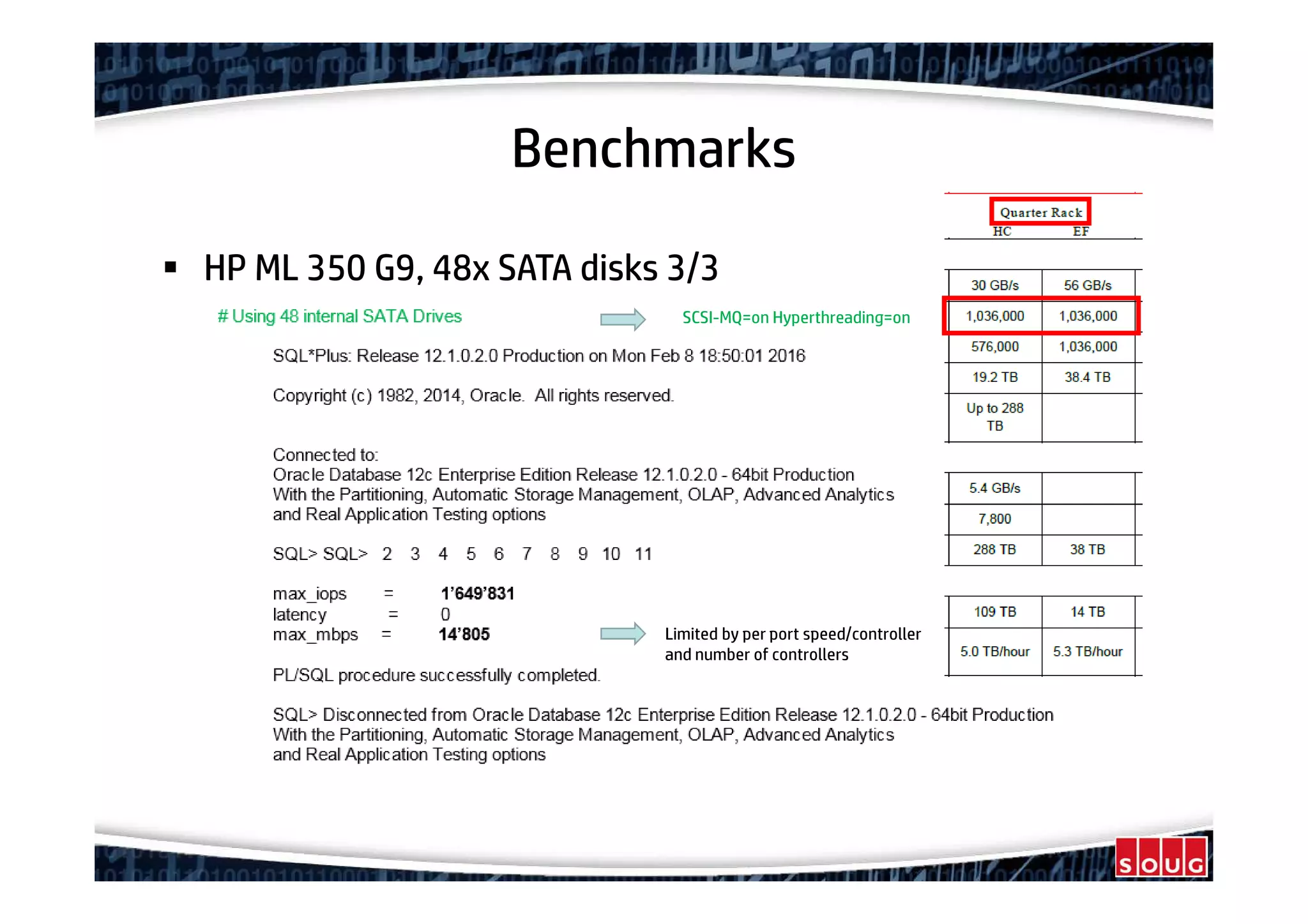

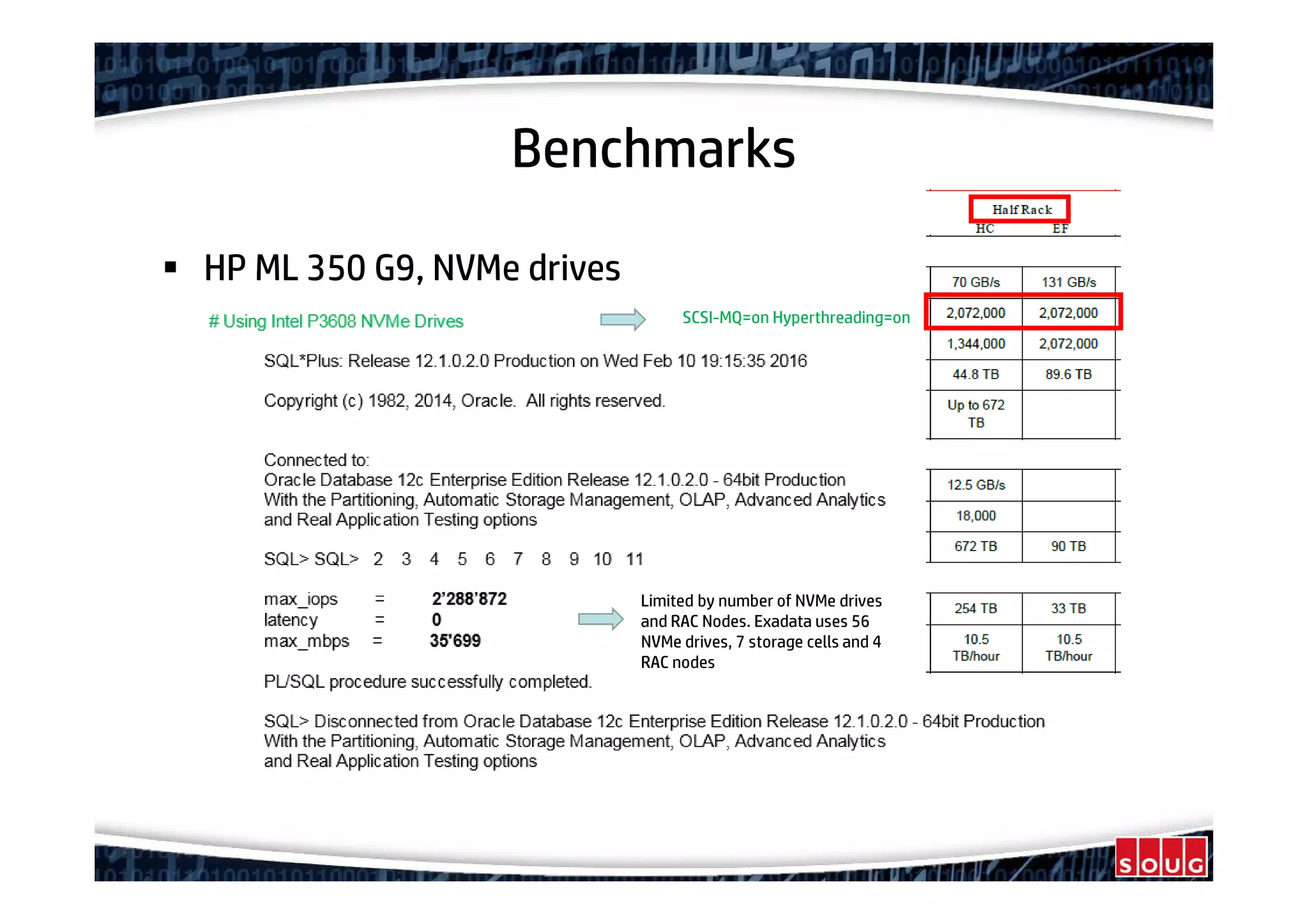

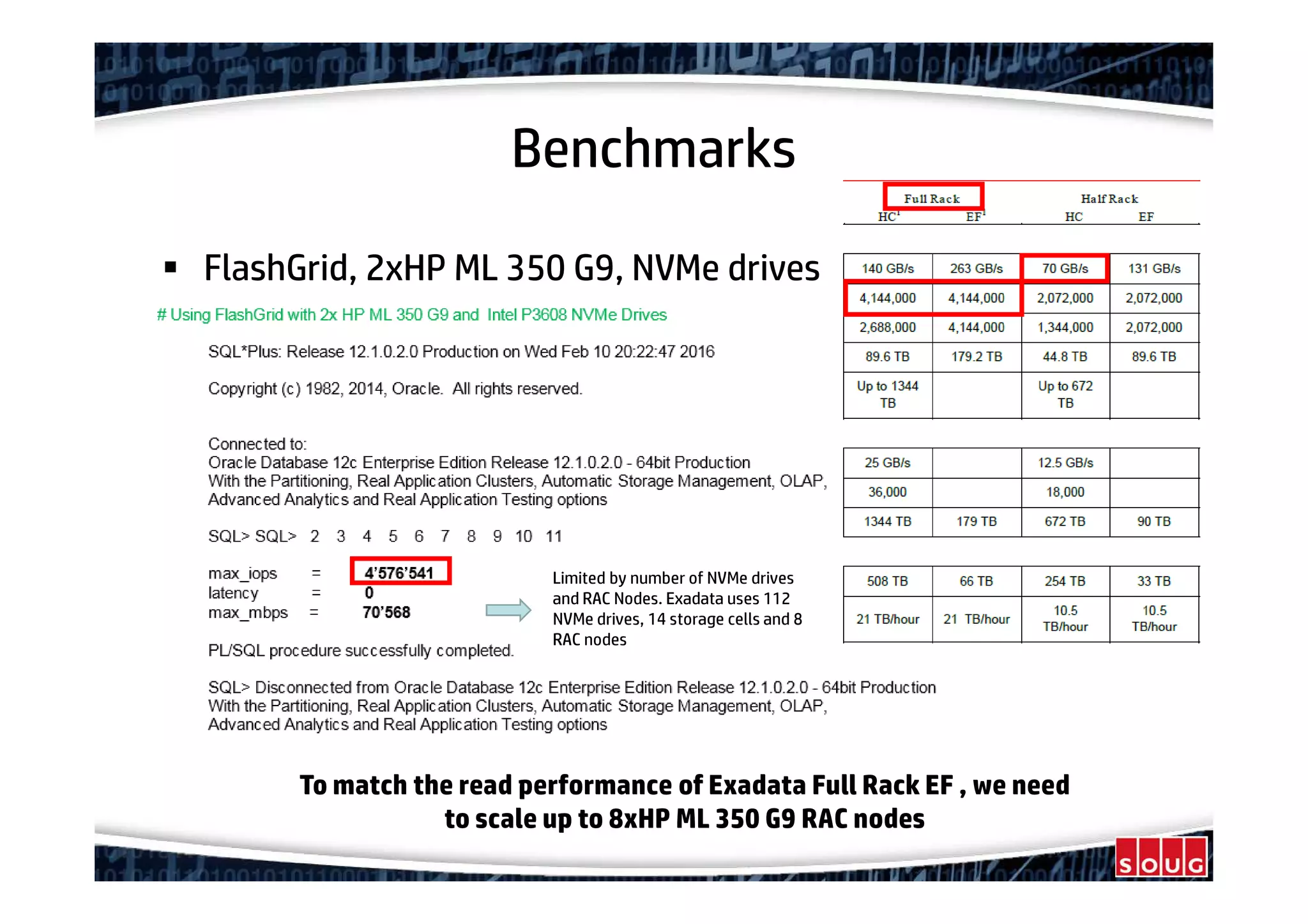

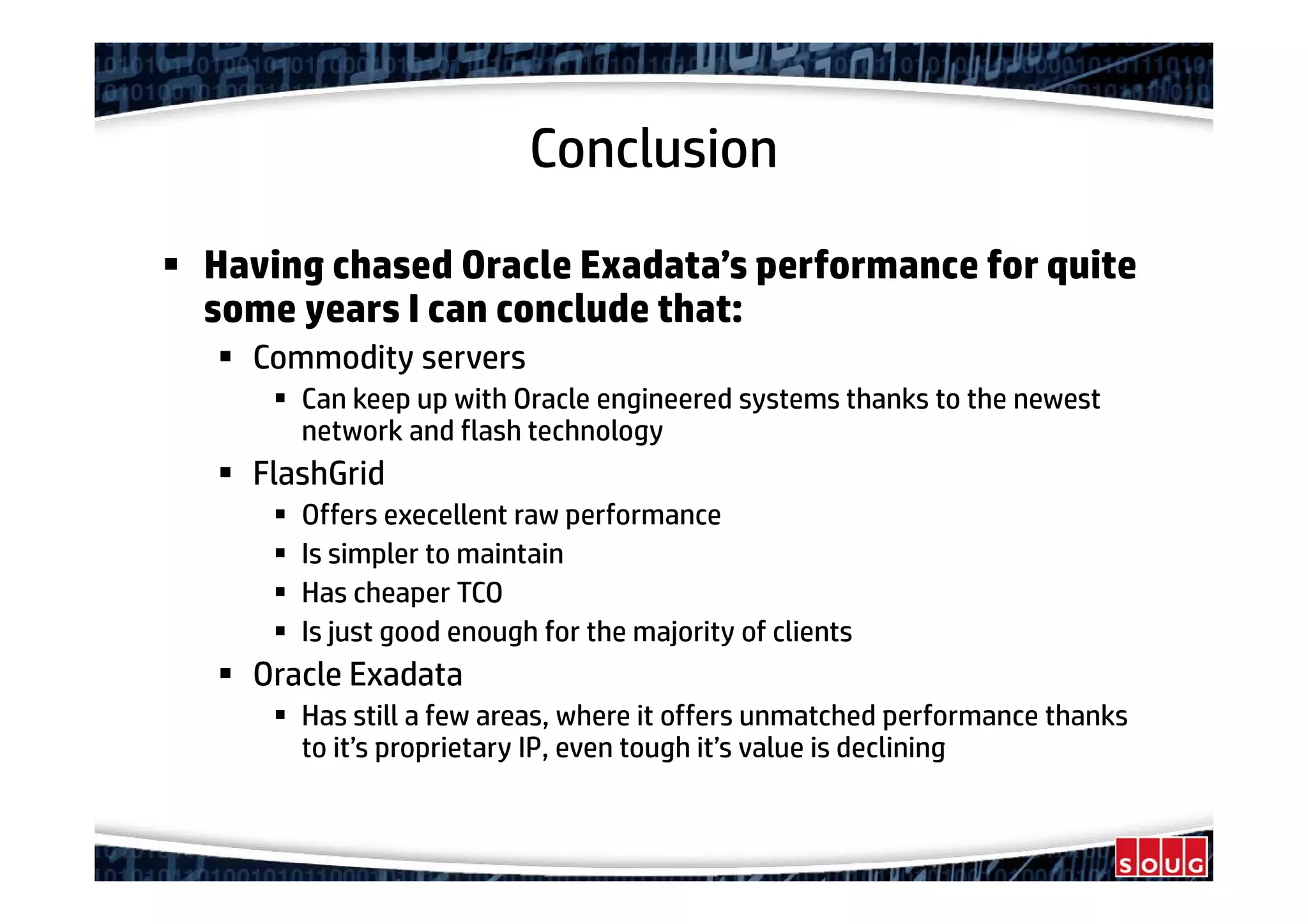

This document summarizes a presentation about FlashGrid, an alternative to Oracle Exadata that aims to reach similar performance levels on commodity hardware. It covers FlashGrid's key components: the Linux kernel, interconnect and storage technologies such as InfiniBand and NVMe, and the hardware itself. Benchmarks show FlashGrid achieving IOPS and throughput comparable to Exadata on a single server. While Exadata retains proprietary advantages, FlashGrid offers strong raw performance at lower cost, with simpler maintenance because it relies on standard technologies.