The document discusses how Mellanox storage solutions can maximize data center return on investment through faster database performance, increased virtual machine density per server, and lower total cost of ownership. Mellanox's high-speed interconnect technologies like InfiniBand and RDMA can provide over 10x higher storage performance compared to traditional Ethernet and Fibre Channel solutions.

![© 2012 Mellanox Technologies 6- Mellanox Confidential -- Mellanox Confidential -- Mellanox Confidential -

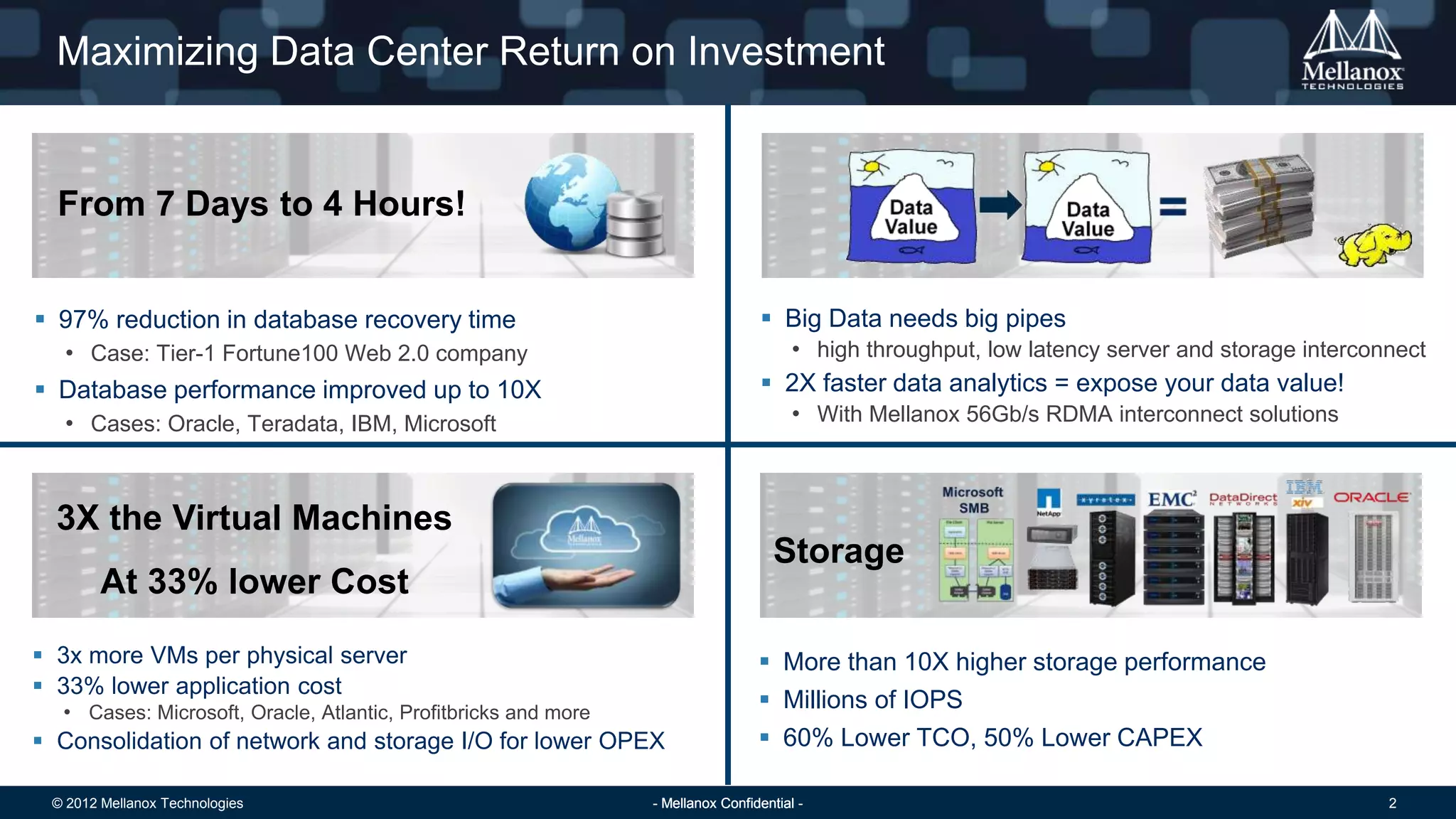

FC 10GbE/TCP 10GbE/RoCE 40GbE/RoCE InfiniBand

Block

NAS and Object

Big Data (Hadoop)

Storage Backplane/Clustering

Messaging

Compare Interconnect Technologies

FC 10GbE/TCP 10GbE/RoCE 40GbE/RoCE InfiniBand

Bandwidth [GB/s] 0.8/1.6 1.25 1.25 5 7

$/GBps [NIC/Switch]* 500 / 500 200 / 150 200 / 150 120 / 90 80 / 50

Credit Based (Lossless) ** **

Built in L2 Multi-path

Latency

Technology Features:

Storage Application/Protocol Support:

* Based on Google Product Search

** Mellanox end to end can be configured as true lossless Mellanox](https://image.slidesharecdn.com/storagesolutionsvmworldyaron-131002122017-phpapp01/75/Mellanox-Storage-Solutions-6-2048.jpg)
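The bandwidth and $/GBps rows of the comparison can be turned into line rates and rough per-port prices. The following is a minimal sketch, not part of the original slides: it assumes the FC entry "0.8/1.6" denotes two port speeds priced at the same $/GBps, and it treats "bandwidth times $/GBps" as an implied port price, which is an interpretation rather than a figure stated in the deck.

```python
# Figures copied from the slide's comparison table.
# Each entry: (bandwidth in GB/s, NIC $ per GB/s, switch $ per GB/s).
# Assumption: FC's "0.8/1.6" is read as two port speeds at the same $/GBps.
INTERCONNECTS = {
    "FC (0.8 GB/s)": (0.8, 500, 500),
    "FC (1.6 GB/s)": (1.6, 500, 500),
    "10GbE/TCP": (1.25, 200, 150),
    "10GbE/RoCE": (1.25, 200, 150),
    "40GbE/RoCE": (5.0, 120, 90),
    "InfiniBand": (7.0, 80, 50),
}


def line_rate_gbits(bandwidth_gbytes: float) -> float:
    """Convert GB/s to Gb/s (8 bits per byte, ignoring encoding overhead)."""
    return bandwidth_gbytes * 8


def implied_port_cost(bandwidth_gbytes: float, dollars_per_gbyte: float) -> float:
    """Per-port price implied by a $/GBps figure (an interpretation, not slide data)."""
    return bandwidth_gbytes * dollars_per_gbyte


for name, (bw, nic, switch) in INTERCONNECTS.items():
    print(
        f"{name:14s} {line_rate_gbits(bw):5.1f} Gb/s  "
        f"NIC ~${implied_port_cost(bw, nic):.0f}  "
        f"switch port ~${implied_port_cost(bw, switch):.0f}"
    )
```

Under these assumptions, the InfiniBand column corresponds to a 56 Gb/s link, and its lower $/GBps comes mostly from the higher bandwidth per port rather than from a much cheaper port.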

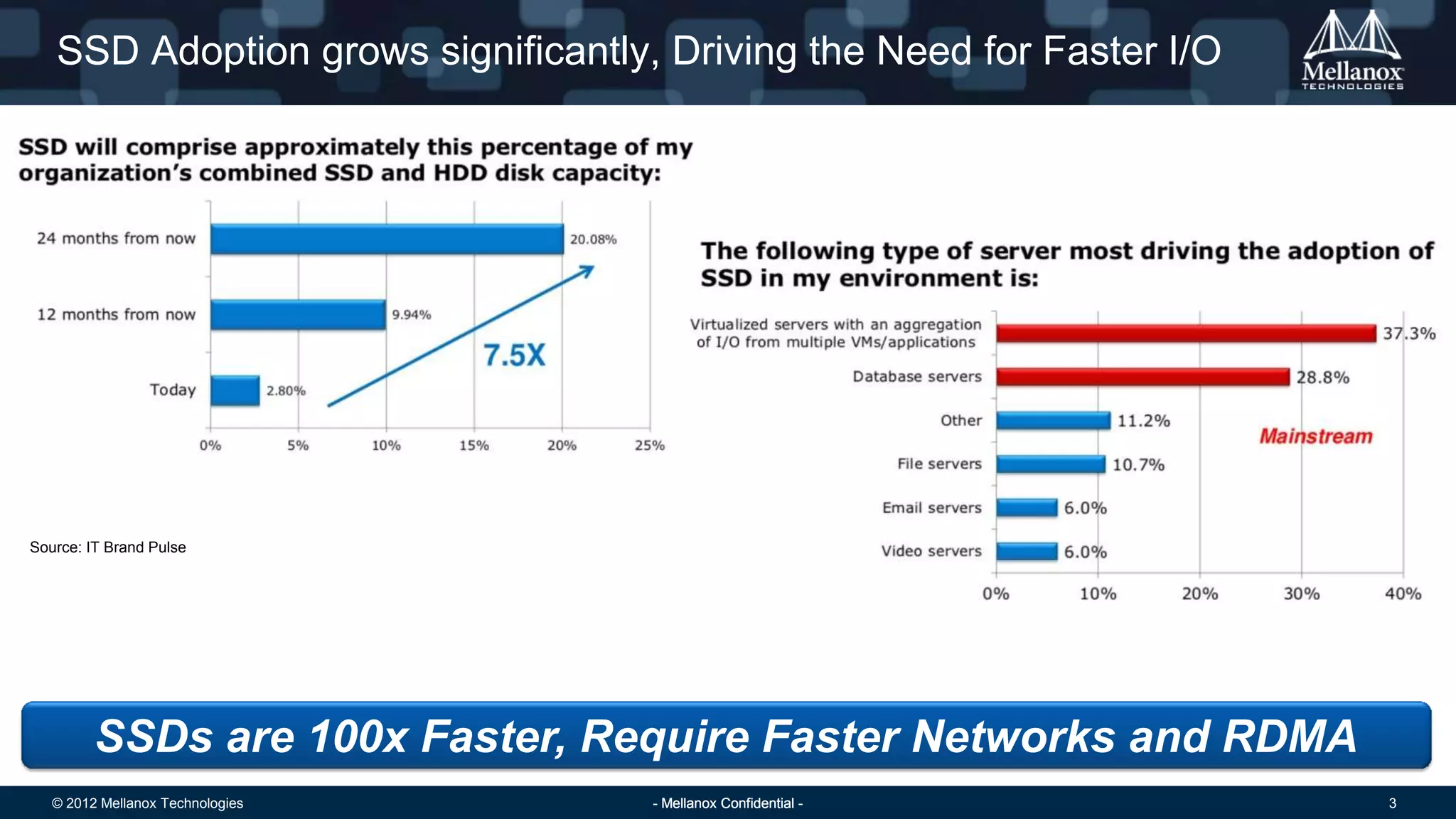

![Slide 10: Mellanox Unbeatable Storage Performance](https://image.slidesharecdn.com/storagesolutionsvmworldyaron-131002122017-phpapp01/75/Mellanox-Storage-Solutions-10-2048.jpg)

Mellanox Unbeatable Storage Performance

We Deliver Significantly Faster IO Rates and Lower Access Times!

Chart: IO Latency @ 4K IO [microseconds], iSCSI/TCP vs. iSCSI/RDMA (axis 0 to 10000). Annotations: "@ only 131K IOPs", "@ 2300K IOPs", "5-10% the latency under 20x the workload".

Chart: KIOPs @ 4K IO Size (axis 0 to 2500).

| Configuration | KIOPs |
| --- | --- |
| iSCSI (TCP/IP) | 130 |
| 1 x FC 8 Gb port | 200 |
| 4 x FC 8 Gb port | 800 |
| iSER 1 x 40GbE/IB Port | 1100 |
| iSER 2 x 40GbE/IB Port (+Acceleration) | 2300 |
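The KIOPs figures from this slide can be converted into speedup ratios and into the data rate each configuration implies. This is a rough sketch rather than anything taken from the deck; it assumes "4K" means 4096 bytes and uses the plain iSCSI (TCP/IP) result as the baseline.

```python
# KIOPs at 4K IO size, copied from the slide.
KIOPS_AT_4K = {
    "iSCSI (TCP/IP)": 130,
    "1 x FC 8 Gb port": 200,
    "4 x FC 8 Gb port": 800,
    "iSER 1 x 40GbE/IB Port": 1100,
    "iSER 2 x 40GbE/IB Port (+Acceleration)": 2300,
}

IO_SIZE_BYTES = 4 * 1024  # assumption: "4K" means 4096 bytes


def speedup(name: str, baseline: str = "iSCSI (TCP/IP)") -> float:
    """IOPs ratio of one configuration to the baseline."""
    return KIOPS_AT_4K[name] / KIOPS_AT_4K[baseline]


def throughput_gb_s(kiops: float, io_size_bytes: int = IO_SIZE_BYTES) -> float:
    """Data rate in GB/s (10^9 bytes) implied by an IOPs figure."""
    return kiops * 1000 * io_size_bytes / 1e9


for name, kiops in KIOPS_AT_4K.items():
    print(f"{name:40s} {speedup(name):5.1f}x  {throughput_gb_s(kiops):5.2f} GB/s")
```

With these inputs, the two-port iSER result is roughly 18x the iSCSI (TCP/IP) figure, in the same range as the slide's "20x the workload", and it implies about 9.4 GB/s of 4K traffic.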

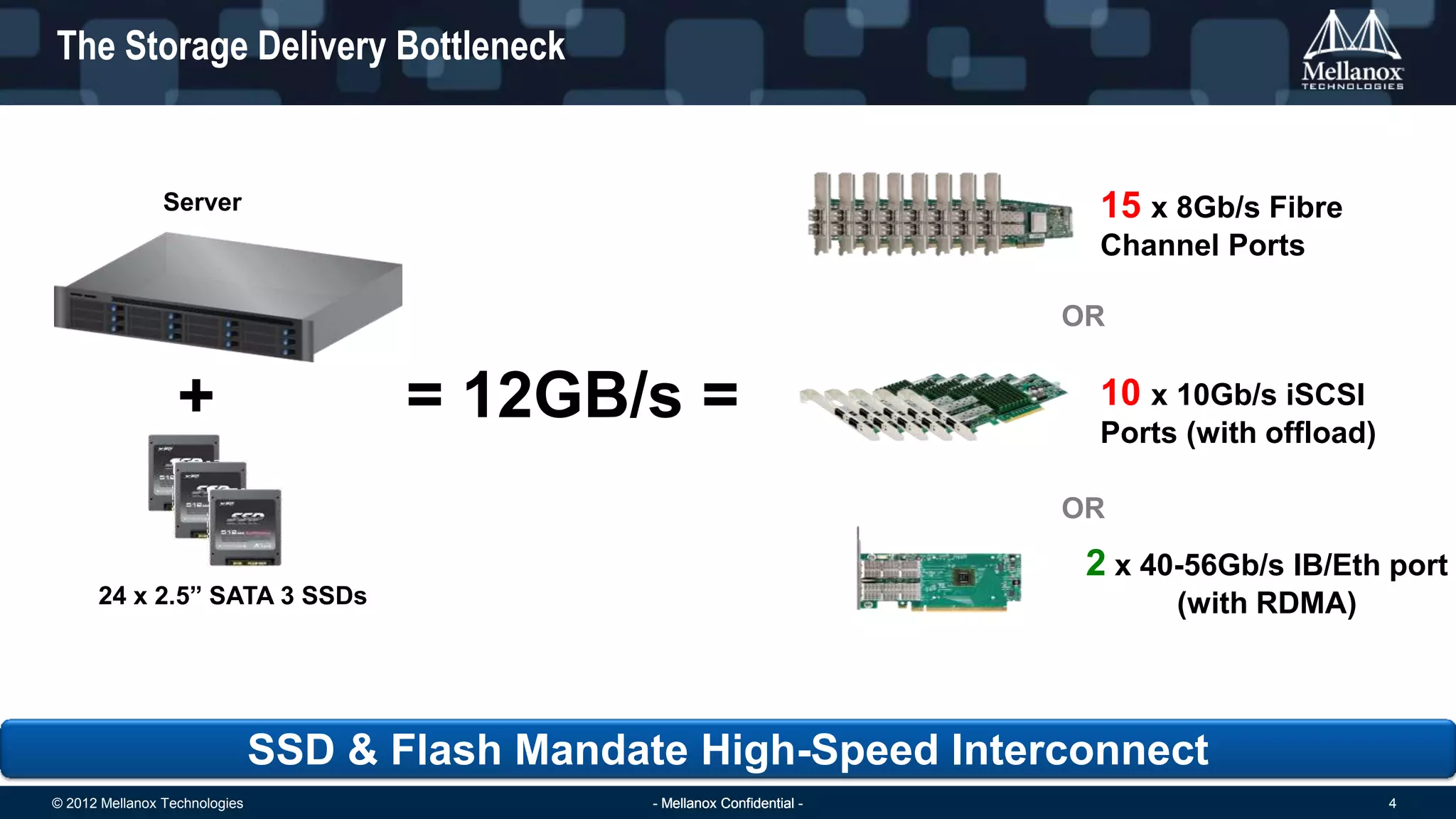

![Slide 11: Accelerating IO Performance (Accessing a Single LUN)](https://image.slidesharecdn.com/storagesolutionsvmworldyaron-131002122017-phpapp01/75/Mellanox-Storage-Solutions-11-2048.jpg)

Accelerating IO Performance (Accessing a Single LUN)

Bandwidth & IOPs, Single LUN, 3 Threads

Chart: Bandwidth [MB/s] vs. IO Size [KB] (1 to 256) for iSCSI/TCP, iSER std, and iSER BD (bypass), with the PCIe limit marked.

Chart: IOPs [K/s] vs. IO Size [KB] (1 to 256) for iSCSI/TCP, iSER std, and iSER BD (bypass), with the PCIe limit marked.

IO Latency and % of CPU in I/O Wait @ 4KB IO size and max IOPs

Chart: IO Latency [microseconds] for Read, Write, and R/W on iSCSI/TCP, iSER std, and iSER BD (bypass).

Chart: CPU IO Wait in % for Read, Write, and R/W on iSCSI/TCP, iSER std, and iSER BD (bypass).

Annotations on both of these charts: "@ only 80K IOPs", "@ 235K IOPs", "@ 624K IOPs".
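The "PCIe Limit" markers on the bandwidth and IOPs charts reflect a simple relationship: once a fixed bandwidth cap is reached, the achievable IOPs must fall as the IO size grows. The sketch below illustrates that relationship only. The 6000 MB/s cap is a placeholder chosen to match the top of the bandwidth axis, not a value stated on the slide, and the unit conventions (1 KB = 1024 bytes, 1 MB = 10^6 bytes) are assumptions.

```python
IO_SIZES_KB = [1, 2, 4, 8, 16, 32, 64, 128, 256]  # x-axis of the slide's charts

# Hypothetical cap: matches the top of the bandwidth axis, not a measured limit.
PLACEHOLDER_LIMIT_MB_S = 6000

BYTES_PER_KB = 1024       # assumption
BYTES_PER_MB = 1_000_000  # assumption


def bandwidth_mb_s(kiops: float, io_size_kb: int) -> float:
    """Bandwidth in MB/s implied by an IOPs figure (in thousands) at a given IO size."""
    return kiops * 1000 * io_size_kb * BYTES_PER_KB / BYTES_PER_MB


def iops_ceiling_k(limit_mb_s: float, io_size_kb: int) -> float:
    """Highest IOPs (in thousands) that a bandwidth cap allows at a given IO size."""
    return limit_mb_s * BYTES_PER_MB / (io_size_kb * BYTES_PER_KB) / 1000


for size in IO_SIZES_KB:
    ceiling = iops_ceiling_k(PLACEHOLDER_LIMIT_MB_S, size)
    print(f"{size:4d} KB: at most {ceiling:8.1f} K IOPs under the cap")

# The slide's 624K IOPs annotation applies at 4 KB IO size.
print(f"624K IOPs at 4 KB is about {bandwidth_mb_s(624, 4):.0f} MB/s")
```

Under the placeholder cap, 624K IOPs at 4 KB sits well below the bandwidth ceiling, which would suggest that small-IO results are bounded by per-IO overhead and only the larger IO sizes run into the PCIe limit.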

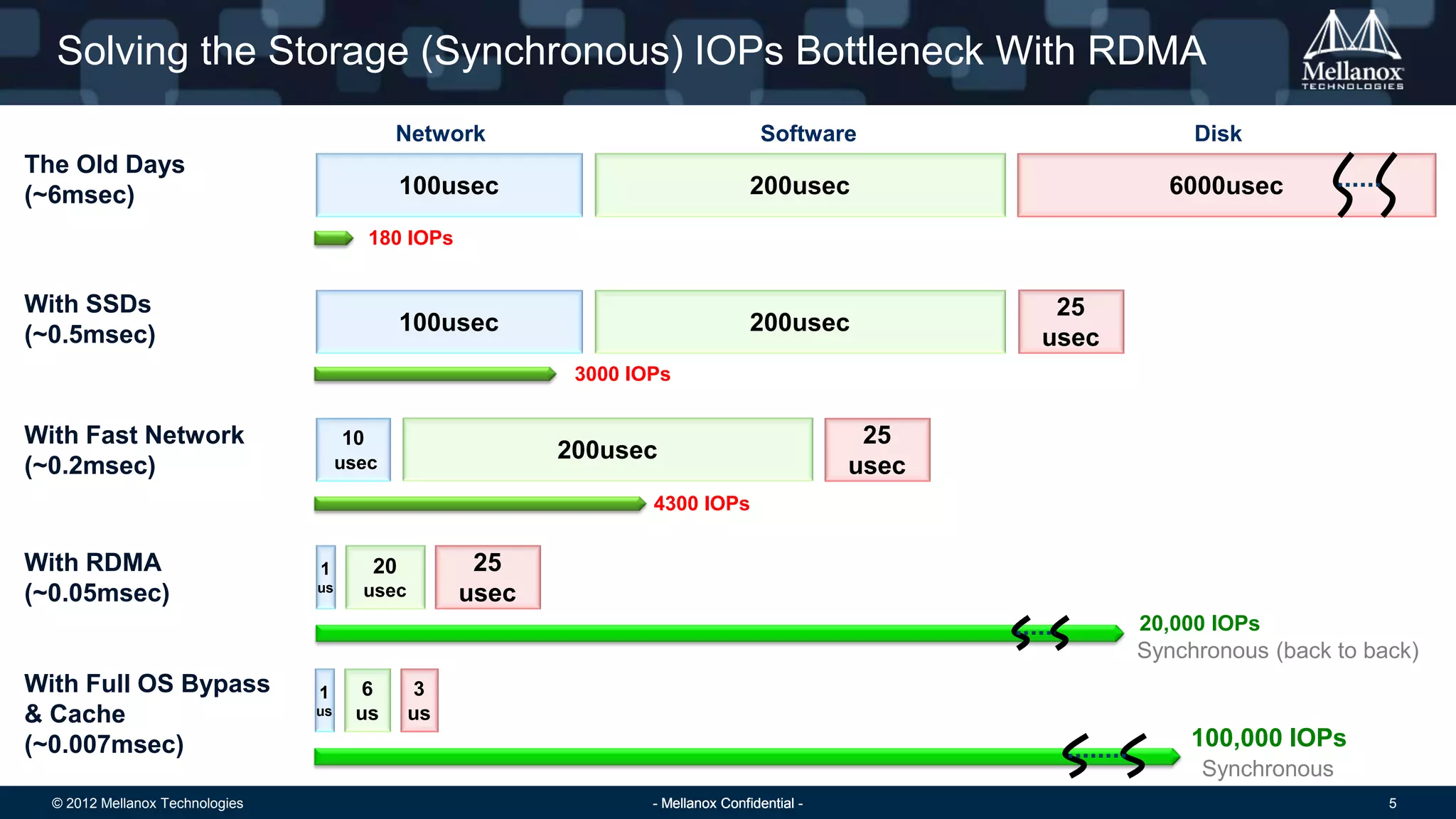

![Slide 13: Native Integration Into OpenStack Cinder](https://image.slidesharecdn.com/storagesolutionsvmworldyaron-131002122017-phpapp01/75/Mellanox-Storage-Solutions-13-2048.jpg)

Native Integration Into OpenStack Cinder

Using OpenStack built-in components and management (Open-iSCSI, tgt target, Cinder), no additional software is required. RDMA is already inbox and used by our OpenStack customers!

Mellanox enables faster performance, with much lower CPU%.

Next step is to bypass hypervisor layers, and add NAS & Object storage.

Diagram (Using RDMA to accelerate iSCSI storage): Compute Servers run VMs, each with its own OS, on a Hypervisor (KVM) with Open-iSCSI w/ iSER and an Adapter. A Switching Fabric connects them to Storage Servers running an iSCSI/iSER Target (tgt) with an Adapter, Local Disks, and an RDMA Cache. OpenStack (Cinder) is the management layer.

Chart: Bandwidth [MB/s] vs. I/O Size [KB] (1 to 256) for iSER 4 VMs Write, iSER 8 VMs Write, iSER 16 VMs Write, iSCSI Write 8 VMs, and iSCSI Write 16 VMs, with the PCIe limit marked and a "6X" annotation.
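On the compute side, the slide's "Open-iSCSI w iSER" box amounts to an ordinary Open-iSCSI session whose transport is switched from TCP to iSER. In a Cinder deployment, OpenStack drives these steps itself; the sketch below only shows what a manual equivalent might look like with `iscsiadm`. It builds the command lines without running them, and the target IQN and portal address are hypothetical placeholders.

```python
from typing import List


def iser_login_commands(target_iqn: str, portal: str) -> List[List[str]]:
    """Build (but do not run) iscsiadm commands to log in to a target over iSER."""
    node = ["iscsiadm", "-m", "node", "-T", target_iqn, "-p", portal]
    return [
        # Discover the targets exported by the storage server.
        ["iscsiadm", "-m", "discovery", "-t", "sendtargets", "-p", portal],
        # Switch the node record's transport from the default (tcp) to iser.
        node + ["--op", "update", "-n", "iface.transport_name", "-v", "iser"],
        # Log in; the session then runs over RDMA instead of TCP.
        node + ["--login"],
    ]


# Hypothetical IQN and portal, for illustration only.
for argv in iser_login_commands("iqn.2013-10.example:volume-0001", "192.0.2.10:3260"):
    print(" ".join(argv))
```

Returning argument lists rather than shell strings keeps the sketch safe to run anywhere and easy to hand to `subprocess.run` on a host that actually has an RDMA-capable adapter and an iSER target.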