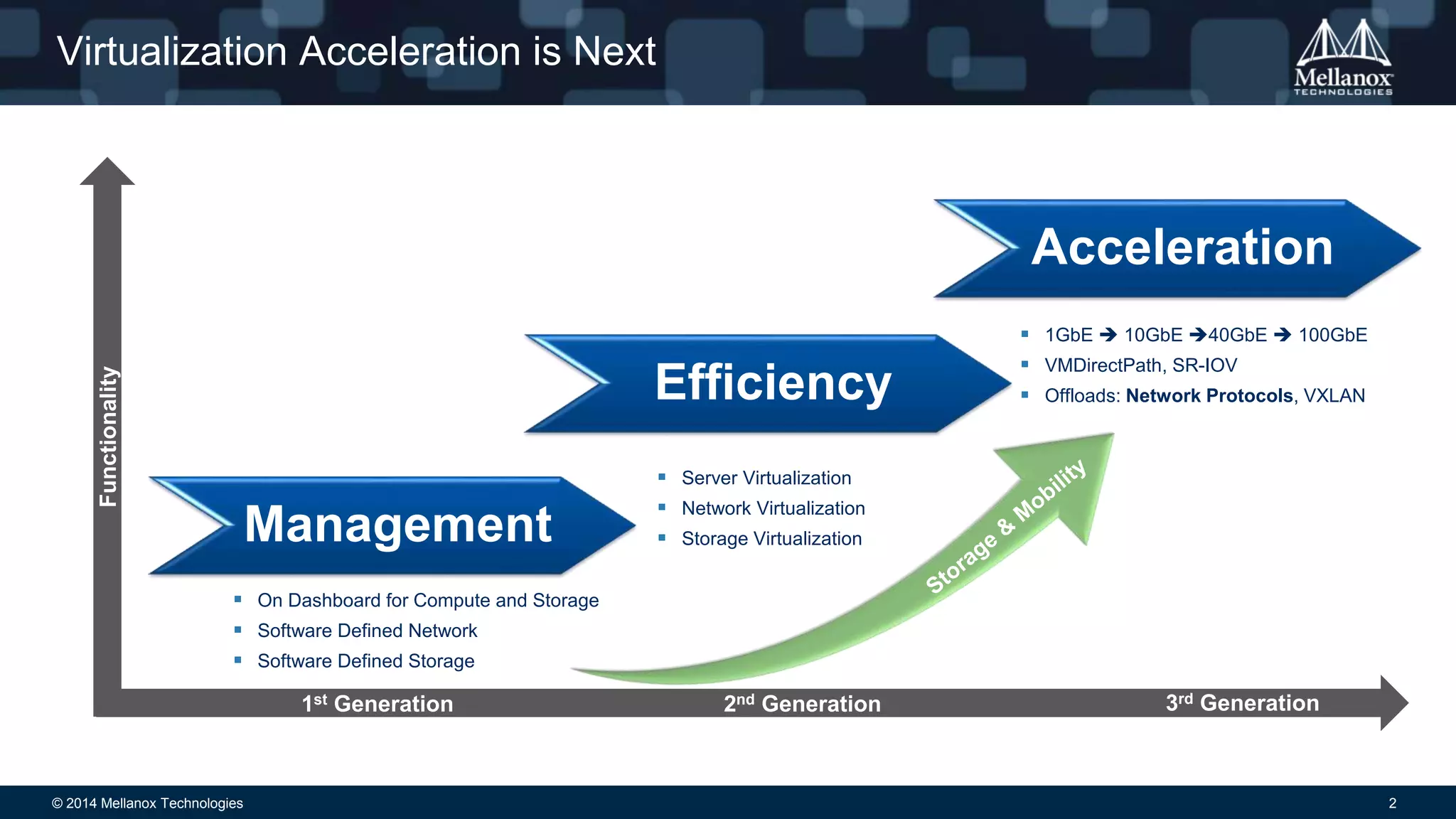

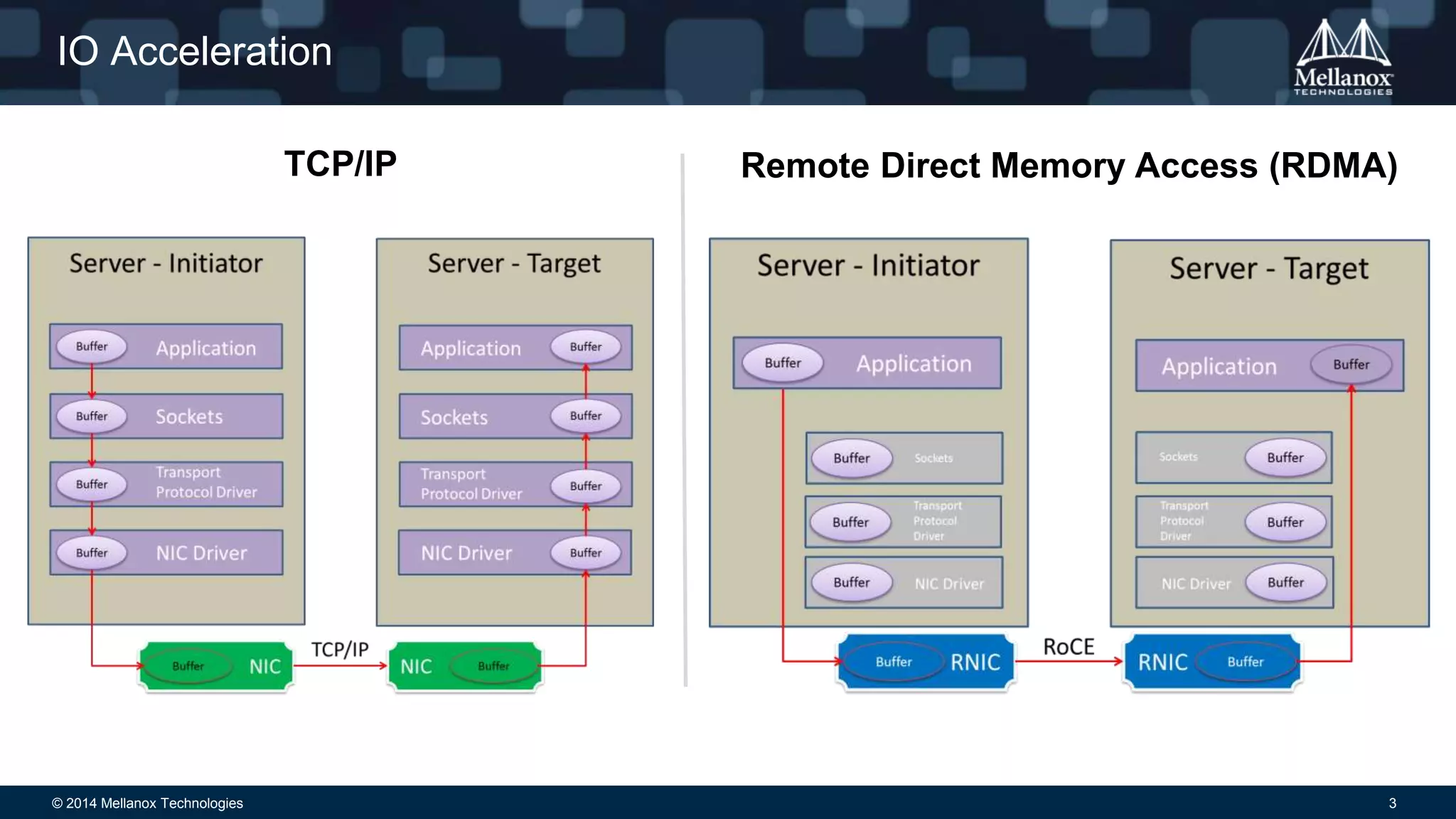

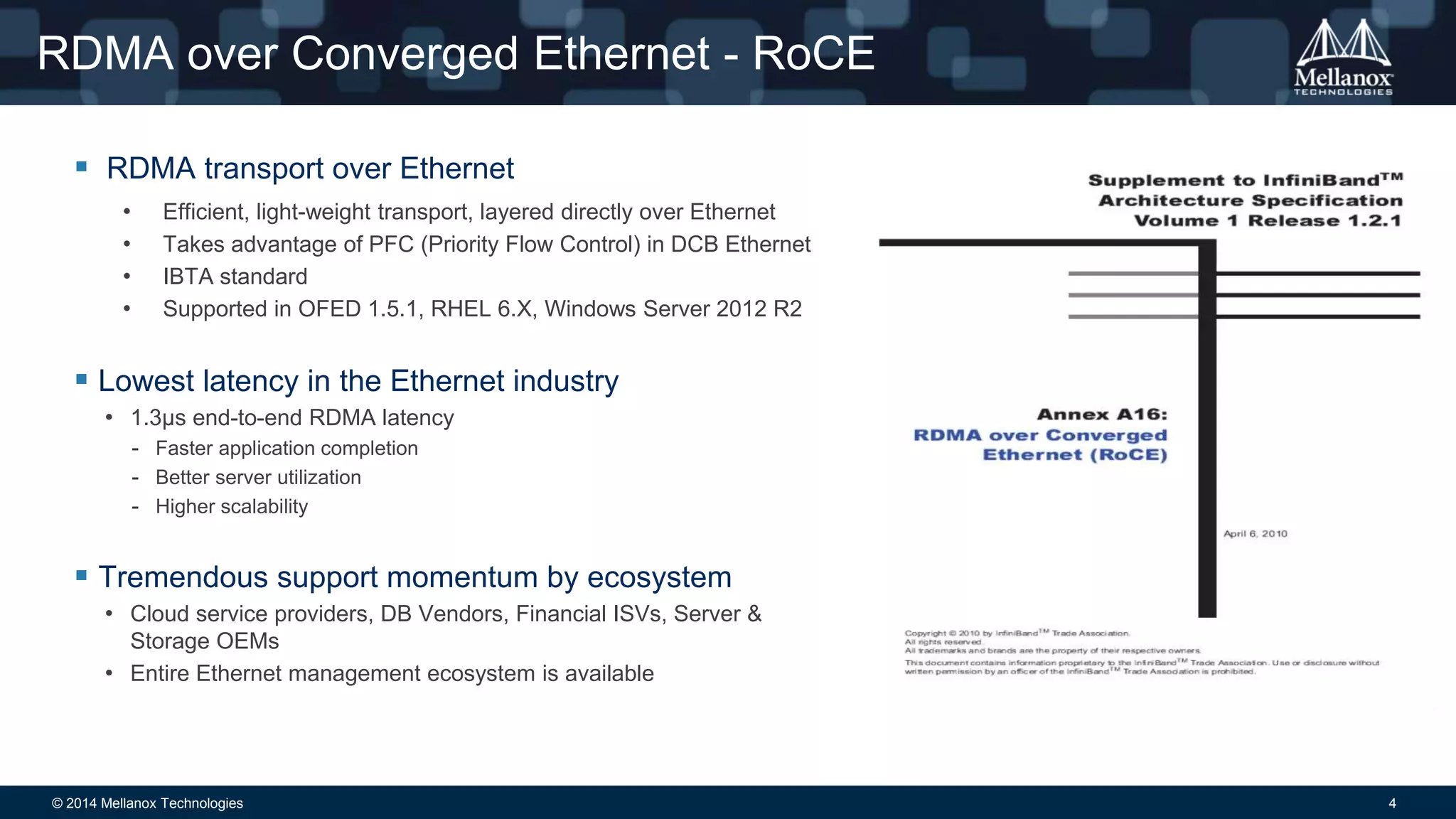

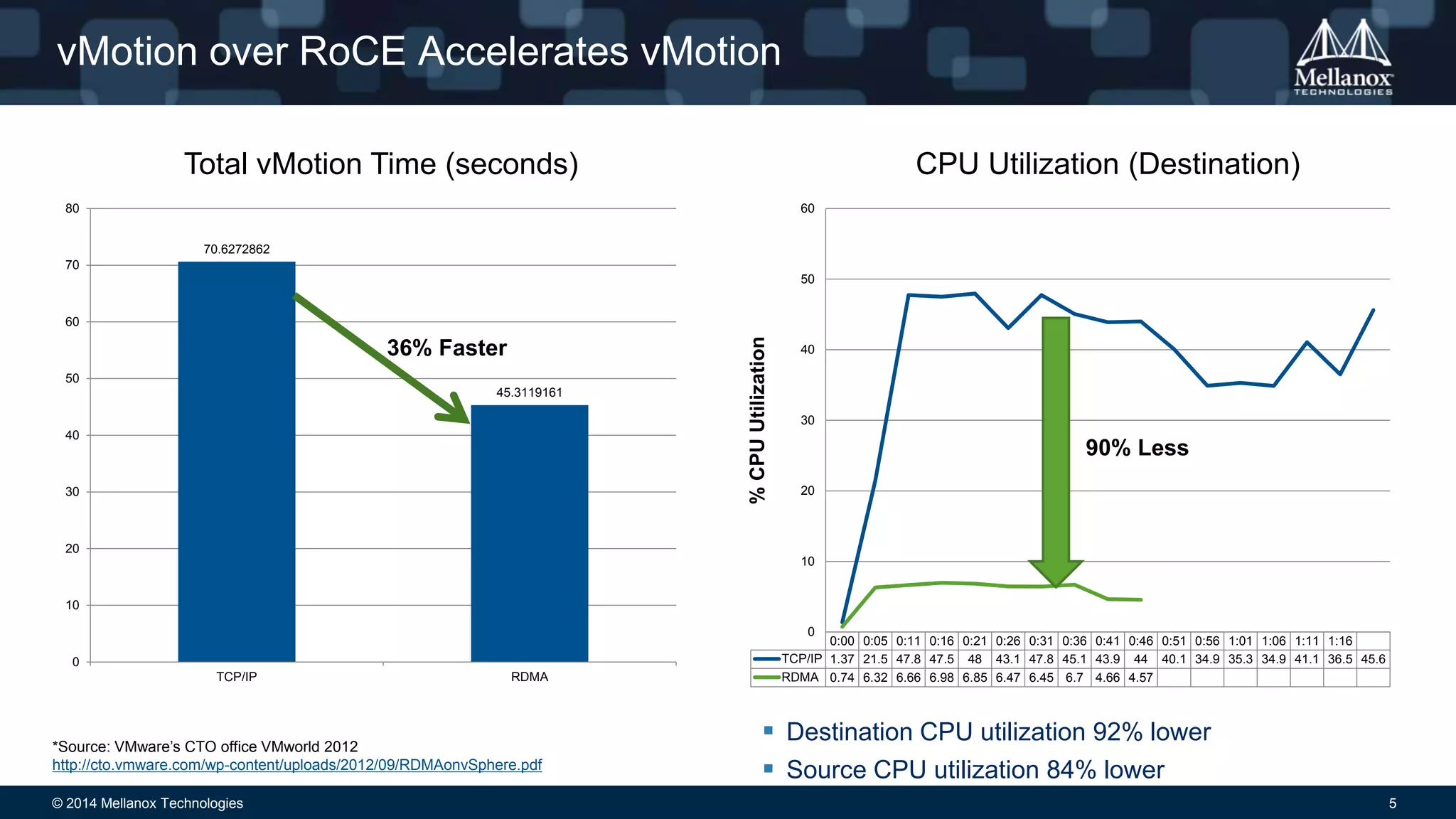

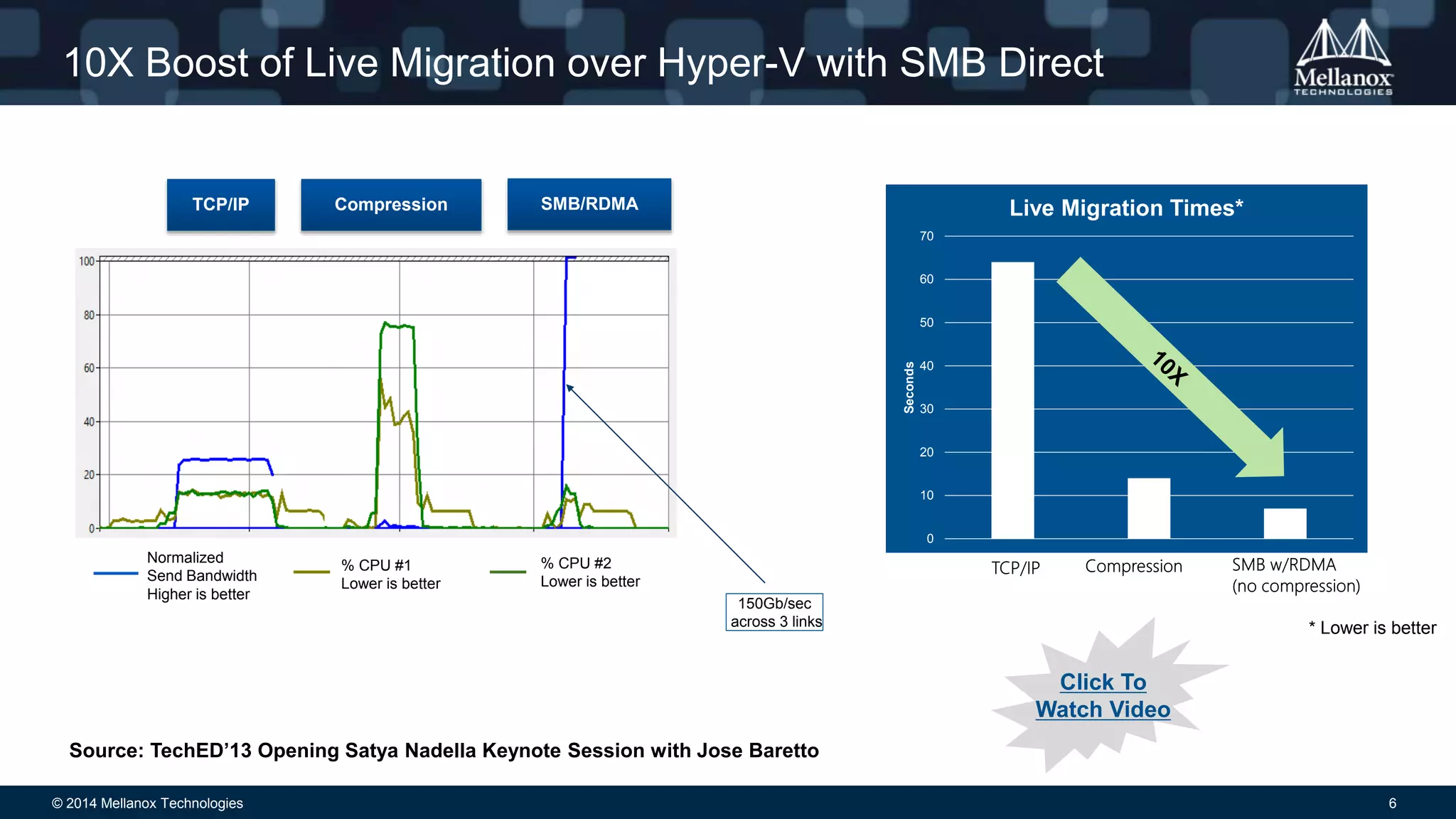

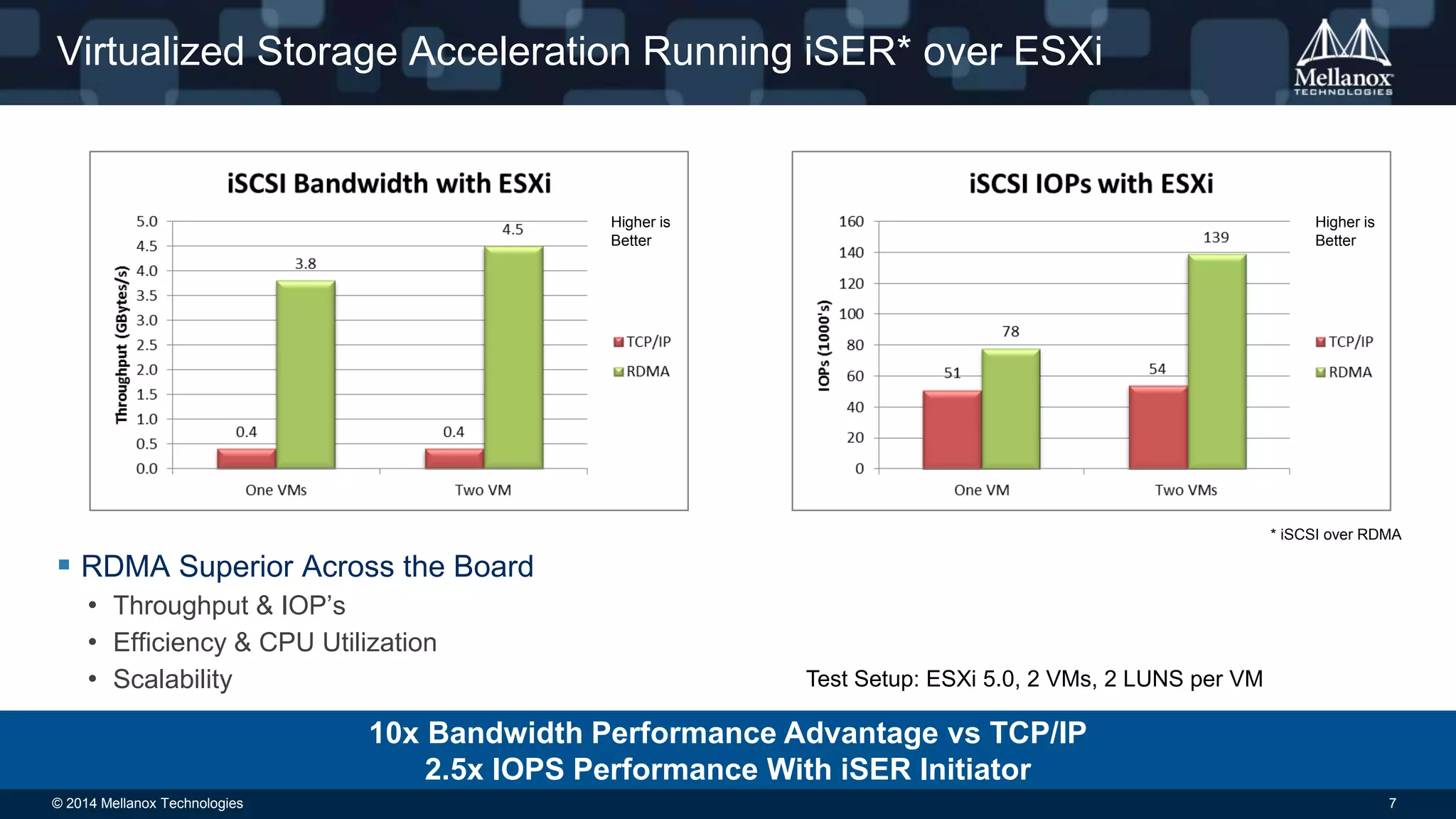

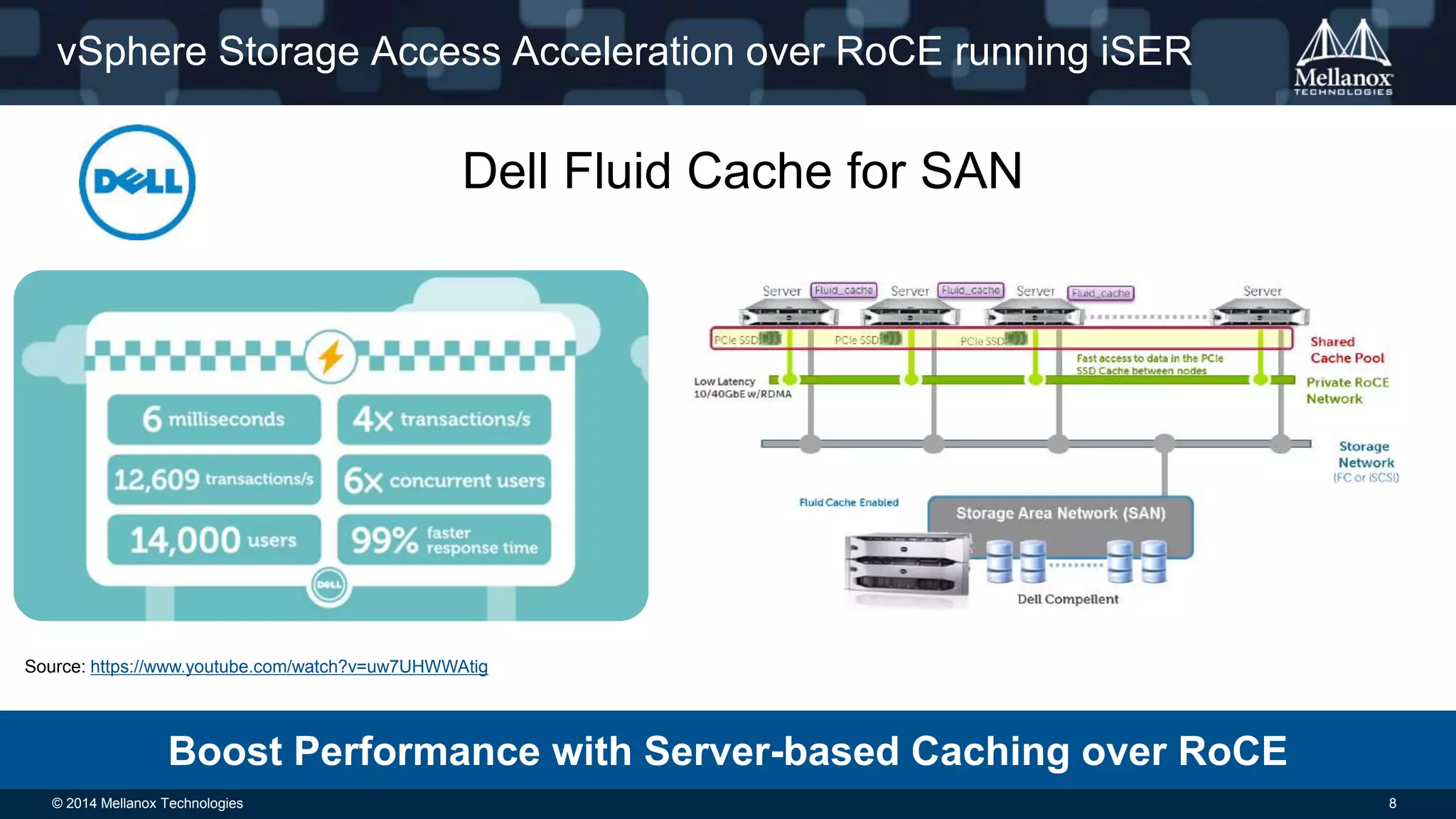

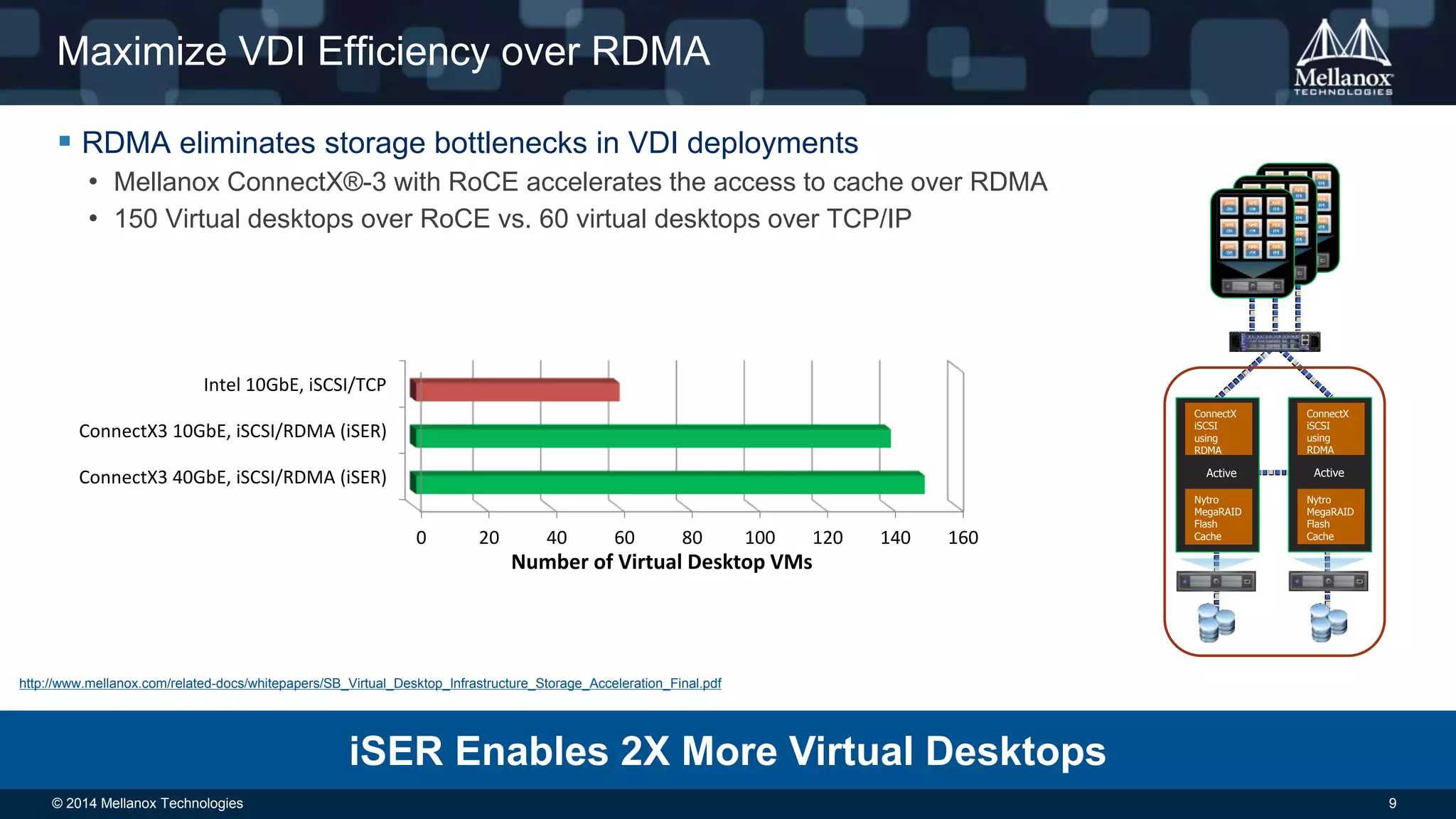

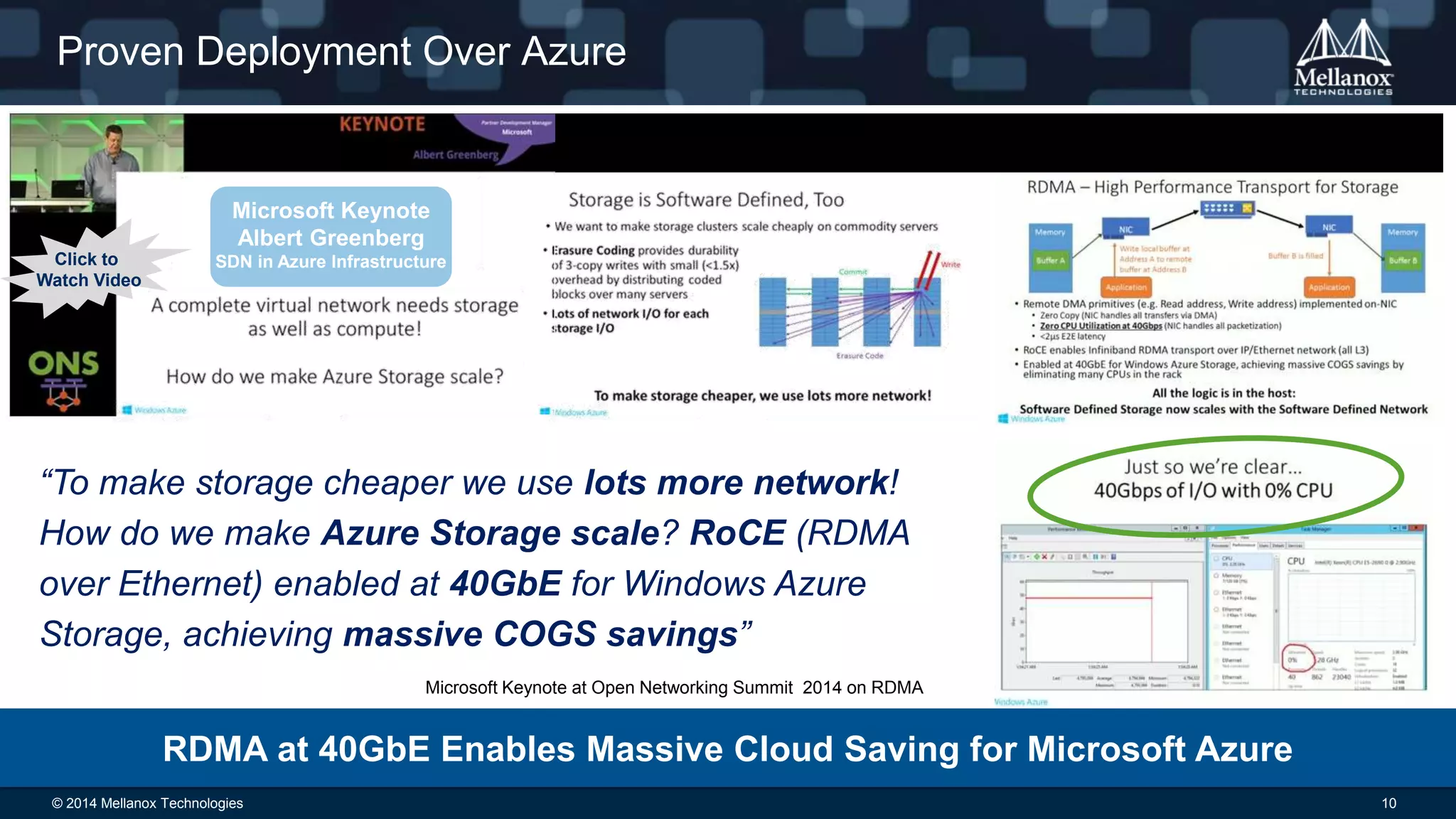

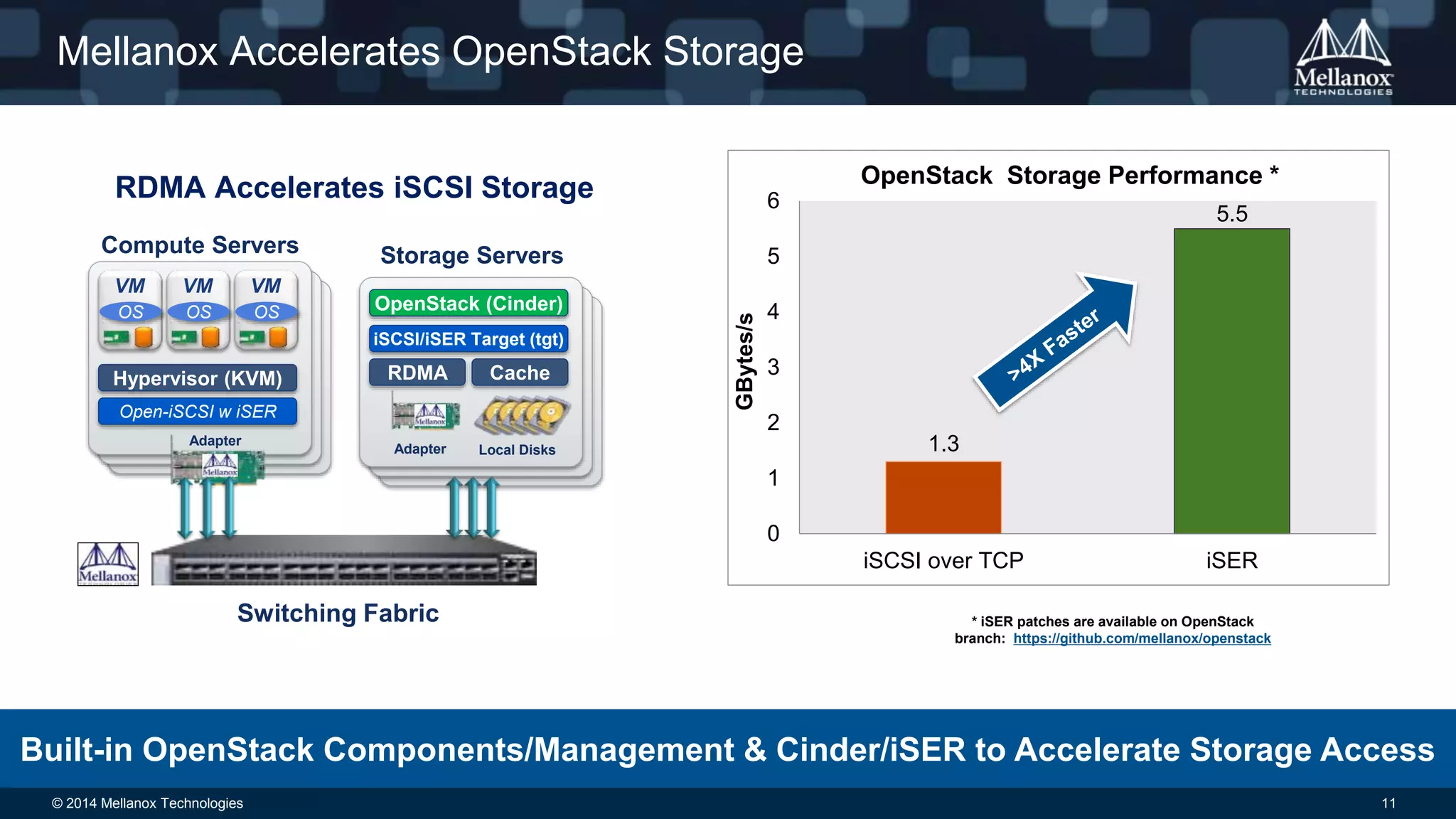

The document discusses virtualization acceleration technologies presented by Motti Beck at VMworld 2014, covering server, network, and storage virtualization. It highlights how Remote Direct Memory Access (RDMA), specifically RDMA over Converged Ethernet (RoCE), improves application performance. It reports significant reductions in latency and CPU utilization during operations such as live migration and storage access. It also notes the improved efficiency of virtual desktop infrastructure (VDI) deployments and the use of RDMA in cloud environments such as Microsoft Azure.