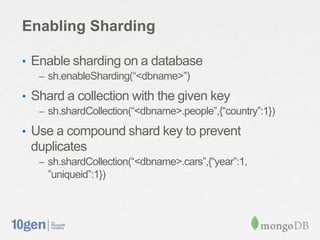

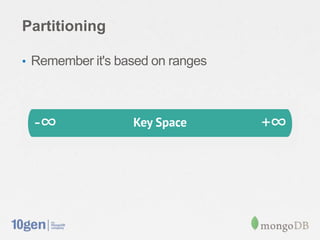

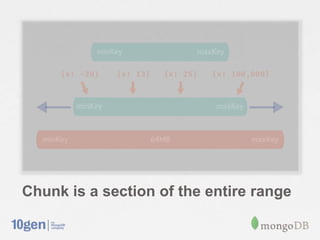

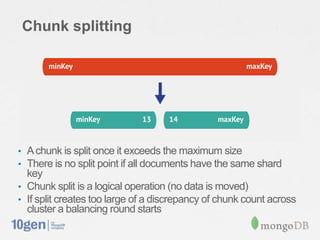

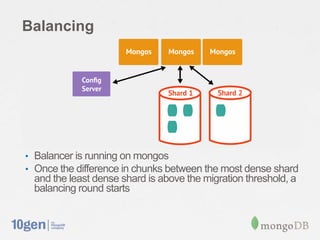

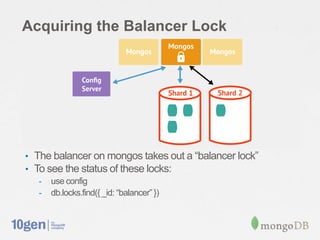

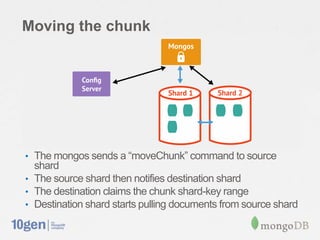

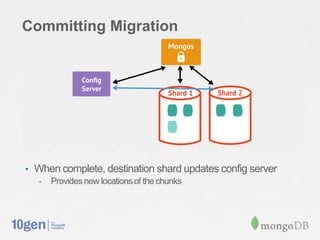

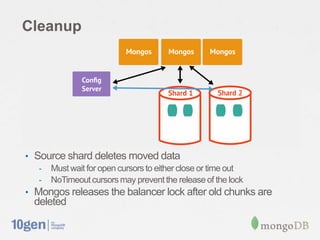

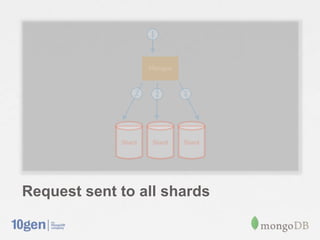

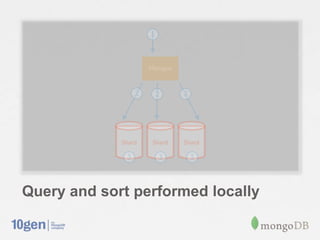

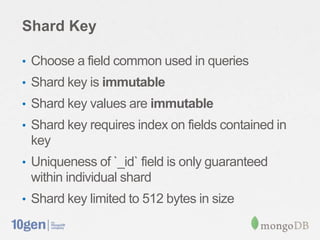

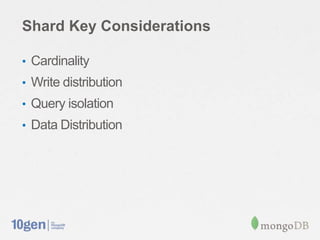

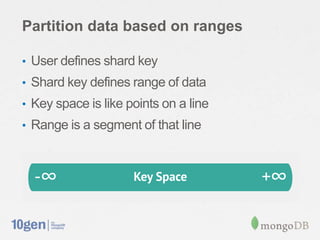

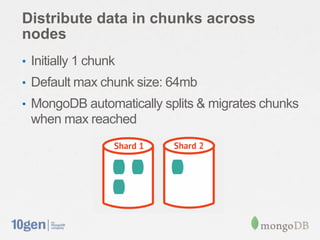

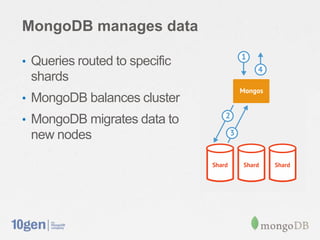

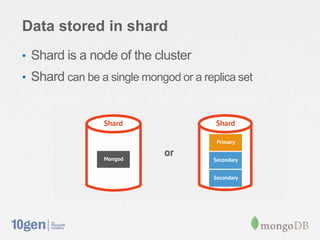

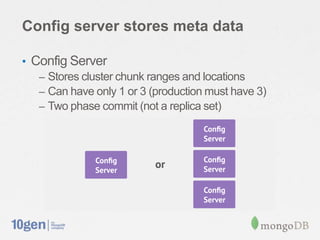

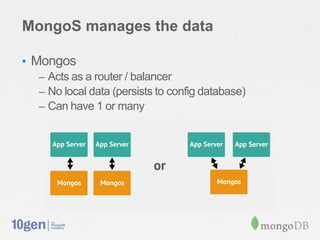

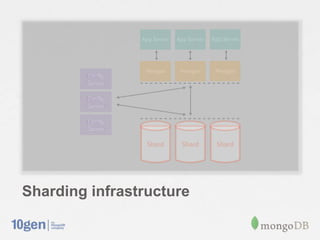

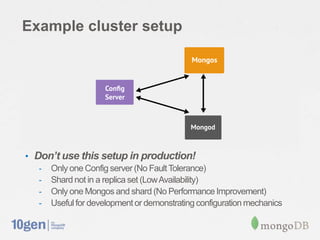

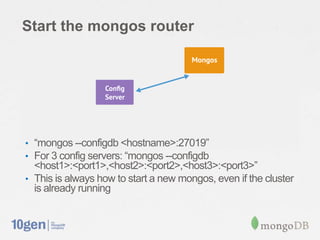

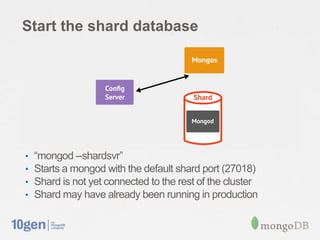

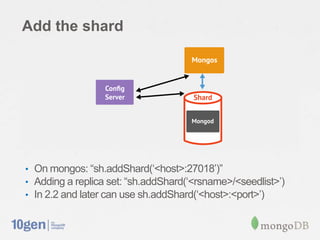

This presentation covers MongoDB's approach to sharding. MongoDB shards horizontally: it partitions a collection and distributes the pieces across multiple machines (shards). A shard key, chosen when the collection is sharded, determines how documents are grouped into chunks and how those chunks are spread across the shards. Config servers store the metadata that maps chunks to shards, and mongos uses that metadata to route queries to the appropriate shards. When a chunk grows too large, MongoDB automatically splits it, and the balancer migrates chunks between shards to keep the data evenly distributed.
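The interplay between shard key, chunks, routing, and splitting can be illustrated with a toy model. The sketch below is not MongoDB's implementation: the names `createCluster`, `findChunk`, `insertKey`, and `splitChunk` are hypothetical, the split threshold counts documents rather than bytes, and the "migration" step simply assigns the new chunk to the shard that currently owns the fewest chunks. It only aims to show how range-based chunk metadata lets a router find the owning shard for a given shard-key value.

```javascript
// Toy model of range-based sharding. All names here are hypothetical and
// exist only for illustration; this is not how MongoDB is implemented.

// Assumed toy threshold. Real MongoDB splits chunks by size in bytes.
const MAX_DOCS_PER_CHUNK = 4;

// Plays the role of the config server metadata: one chunk covering the
// whole key space [-Infinity, Infinity), owned by the first shard.
function createCluster(shardIds) {
  return {
    shardIds,
    chunks: [{ min: -Infinity, max: Infinity, shard: shardIds[0], keys: [] }],
  };
}

// Plays the role of mongos: find the chunk whose range [min, max)
// contains the given shard-key value.
function findChunk(cluster, key) {
  return cluster.chunks.find((c) => key >= c.min && key < c.max);
}

// Pick the shard that currently owns the fewest chunks (ties go to the
// shard listed first).
function leastLoadedShard(cluster) {
  const count = (id) => cluster.chunks.filter((c) => c.shard === id).length;
  return cluster.shardIds.reduce((best, id) =>
    count(id) < count(best) ? id : best
  );
}

// Split a chunk at its median key and place the upper half on the
// least-loaded shard, standing in for the split-then-migrate behavior.
function splitChunk(cluster, chunk) {
  const sorted = [...chunk.keys].sort((a, b) => a - b);
  const mid = sorted[Math.floor(sorted.length / 2)];
  if (mid === sorted[0]) return; // no usable split point for these keys
  const target = leastLoadedShard(cluster);
  const upper = {
    min: mid,
    max: chunk.max,
    shard: target,
    keys: sorted.filter((k) => k >= mid),
  };
  chunk.max = mid;
  chunk.keys = sorted.filter((k) => k < mid);
  cluster.chunks.splice(cluster.chunks.indexOf(chunk) + 1, 0, upper);
}

// Insert a document identified only by its shard-key value and return
// the shard that owns it afterwards.
function insertKey(cluster, key) {
  const chunk = findChunk(cluster, key);
  chunk.keys.push(key);
  if (chunk.keys.length > MAX_DOCS_PER_CHUNK) splitChunk(cluster, chunk);
  return findChunk(cluster, key).shard;
}

// Usage: insert ten keys into a two-shard cluster and print the chunk map.
const demo = createCluster(["shard0000", "shard0001"]);
for (let k = 1; k <= 10; k++) insertKey(demo, k);
for (const c of demo.chunks) {
  console.log(`[${c.min}, ${c.max}) -> ${c.shard}`);
}
```

The model keeps each key in exactly one chunk, so routing is a single range lookup, which is the property that the chunk metadata on the config servers provides to mongos.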

![Verify that the shard was added](https://image.slidesharecdn.com/sharding-seoul2012-121210133543-phpapp02/85/Sharding-Seoul-2012-27-320.jpg)

Verify that the shard was added

• `db.runCommand({ listshards: 1 })`

• `{ "shards" : [ { "_id": "shard0000", "host": "<hostname>:27018" } ], "ok" : 1 }`
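The verification step on the slide above can be wrapped in a small helper that inspects the command's response document. This is a minimal sketch: `shardWasAdded` is a hypothetical function, not part of the MongoDB shell, and `example-host:27018` is a made-up stand-in for the `<hostname>` placeholder on the slide. It assumes an exact string match on `host`, which would not hold for replica-set shards, whose host strings also carry the set name and member list. Note that the command has to be run against the `admin` database through mongos, and current MongoDB documentation spells it `listShards`.

```javascript
// Hypothetical helper: returns true when the listShards response reports
// success and contains a shard whose "host" equals the expected host.
function shardWasAdded(response, host) {
  if (!response || response.ok !== 1 || !Array.isArray(response.shards)) {
    return false;
  }
  return response.shards.some((shard) => shard.host === host);
}

// Sample response shaped like the one on the slide, with an assumed
// hostname filled in for illustration only.
const sampleResponse = {
  shards: [{ _id: "shard0000", host: "example-host:27018" }],
  ok: 1,
};

console.log(shardWasAdded(sampleResponse, "example-host:27018"));
```

In the mongo shell, the same helper could be fed the live result, for example `shardWasAdded(db.adminCommand({ listShards: 1 }), "example-host:27018")`.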